Whenever I try to picture the internet at work, I see little pixels of information moving through the air and above our heads in space, getting where they need to go thanks to 5G towers and satellites in the sky. But it’s a lot deeper than that — literally. Google Cloud’s Vijay Vusirikala recently talked with me about why the coolest part of the internet is really underwater. So today, we’re diving into one of the best-kept secrets in submarine life: There wouldn’t be an internet without the ocean.

First question: How does the internet get underwater?

We use something called a subsea cable that runs along the ocean floor and transmits bits of information.

What’s a subsea cable made of?

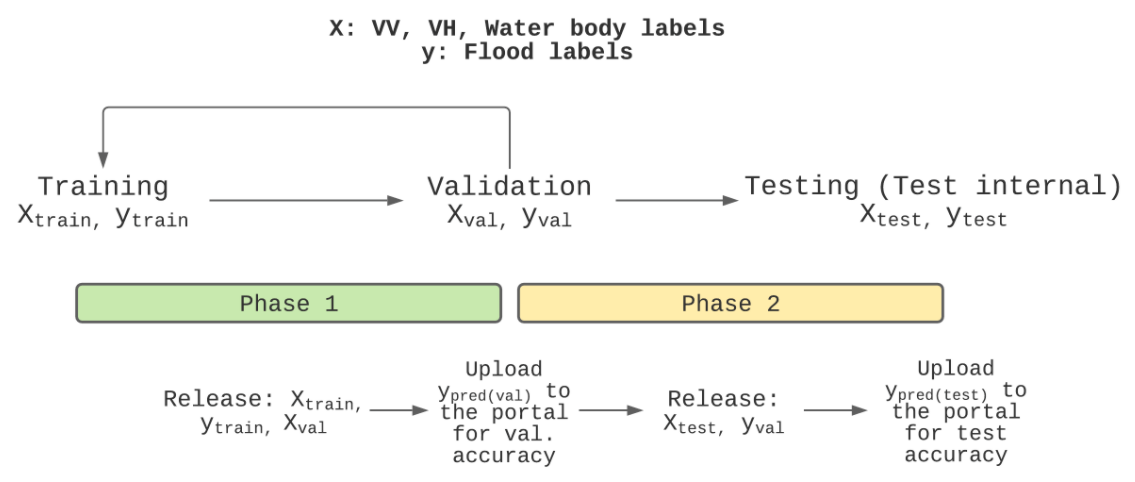

These cables are about the same diameter as the average garden hose, but on the inside they contain thin optical fibers. Those fibers are surrounded by several layers of protection, including two layers of ultra-high strength steel wires, water-blocking structures and a copper sheath. Why so much protection? Imagine the pressure they are under. These cables are laid directly on the sea bed and have tons of ocean water on top of them! They need to be super durable.

A true inside look at subsea cables: On the left, a piece of the Curie subsea cable showing the additional steel armoring for protection close to the beach landing. On the right, a cross-sectional view of a typical deep water subsea cable showing the optical fibers, copper sheath, and steel wires for protection.

Why are subsea cables important?

Subsea cables are faster, can carry higher traffic loads and are more cost-effective than satellite networks. They are like a highway that has the right number of lanes to handle rush-hour traffic without getting bogged down in standstill jams. Subsea cables combine high bandwidths (upwards of 300 to 400 terabits of data per second) with low lag time. To put that into context, 300 to 400 terabits per second is roughly the same as 17.5 million people streaming high-quality videos at the same time!
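
As a rough sanity check on that comparison, the short Python sketch below splits a cable's capacity evenly among the viewers. It assumes the capacity figure is read as terabits per second (the usual unit for cable capacity) and takes 350 as the midpoint of the quoted range. The function name `per_viewer_mbps` is made up for illustration.

```python
# Assumption: capacity is in terabits per second, and 350 is used as
# the midpoint of the quoted 300-400 range.
TERA = 10**12
MEGA = 10**6


def per_viewer_mbps(capacity_tbps: float, viewers: int) -> float:
    """Return each viewer's even share of the capacity, in megabits per second."""
    return capacity_tbps * TERA / viewers / MEGA


share = per_viewer_mbps(350, 17_500_000)
print(f"{share:.1f} Mbit/s per viewer")  # prints "20.0 Mbit/s per viewer"
```

Under these assumptions each viewer gets about 20 megabits per second, which is in the range commonly suggested for a high-resolution video stream.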

So when you send a customer an email, share a YouTube video with a family member or talk with a friend or coworker on Google Meet, these underwater cables are like the "tubes" that deliver those things to the recipient.

Plus, they help increase internet access in places that have had limited connectivity in the past, like countries in South America and Africa. This leads to job creation and economic growth in the places where they’re constructed.

How many subsea cables are there?

There are around 400 subsea cables criss-crossing the planet in total. Currently, Google invests in 19 of them — a mix of cables we build ourselves and projects we’re a part of, where we work together with telecommunications providers and other companies.

Wow, 400! Does the world need more of them?

Yes! Telecommunications providers alongside technology companies are still building them around the world. At Google, we invest in subsea cables for a few reasons: One, our Google applications and Cloud services keep growing. This means more network demand from people and businesses in every country around the world. And more demand means building more cables and upgrading existing ones, which have less capacity than their modern counterparts.

Two, you cannot have a single point of failure when you're on a mission to connect the world’s information and make it universally accessible. Repairing a subsea cable that goes down can take weeks, so to guard against this we place multiple cables in each cross section. This gives us sufficient extra cable capacity so that services aren’t affected for people around the world.

What’s your favorite fact about subsea cables?

Three facts, if I may!

First, I love that we name many of our cables after pioneering women, like Curie (for Marie Curie), which connects California to Chile, and Grace Hopper, which links the U.S., Spain and the U.K. Firmina, which links the U.S., Argentina, Brazil and Uruguay, is named after Brazil’s first novelist, Maria Firmina dos Reis.

Second, I’m proud that the cables are kind to their undersea homes. They’re environmentally friendly and are made of chemically inactive materials that don't harm the flora and fauna of the ocean. We’re very careful about where we place them; we study each beach’s marine life conditions and we adjust our attachment timeline so we don’t disrupt a natural lifecycle process, like sea turtle nesting season. Once laid, the cables are mostly stationary and don't disturb the ocean floor or marine life. Our goal is to integrate into the underwater landscape, not bother it.

And lastly, my favorite fact is actually a myth: Most people think sharks regularly attack our subsea cables, but I’m aware of exactly one shark attack on a subsea cable that took place more than 15 years ago. Truly, the most common problems for our cables are caused by people doing things like fishing, trawling (which is when a fishing net is pulled through the water behind a boat) and anchor drags (when a ship drifts without holding power even though it has been anchored).