Posted by Dave Burke, VP of Engineering

Today we're releasing the second beta of Android 15, which continues our work to build a platform that helps improve your productivity, minimize battery impact, maximize smooth app performance, give users a premium device experience, protect user privacy and security, and make your app accessible to as many people as possible — all in a vibrant and diverse ecosystem of devices, silicon partners, and carriers.

Android delivers enhancements and new features year-round, and your feedback on the Android beta program plays a key role in helping Android continuously improve. The Android 15 developer site has lots more information about the beta, including downloads for Pixel, and the release timeline. We’re looking forward to hearing what you think, and thank you in advance for your continued help in making Android a platform that works for everyone.

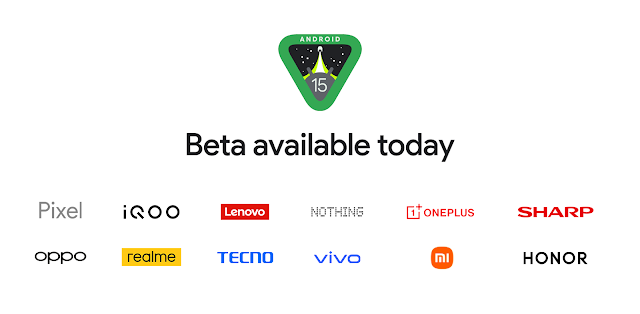

Now available on more devices

The Android 15 beta is now available on handset, tablet, and foldable form factors from partners including Honor, iQOO, Lenovo, Nothing, OnePlus, OPPO, Realme, Sharp, Tecno, vivo, and Xiaomi. That gives you many more devices to test your app on, and many more users who can run your app on the Android 15 beta.

Making Android more efficient

We are continuing to optimize the platform to improve the quality, speed and battery life of Android devices.

Foreground services changes

Foreground services keep apps running in an active state so they can do something critical and user-visible, often at the expense of battery life. In Android 15 Beta 2, the dataSync and mediaProcessing foreground service types now have a ~6 hour timeout, after which the system calls Android 15's new Service.onTimeout(int, int) method. At this point, the service is no longer considered a foreground service. If the service does not call Service.stopSelf() in response to the timeout, it will get stopped with a failure.

Beta 2 also adds new requirements for starting foreground services while the app is running in the background.

- If your foreground service relies on the SYSTEM_ALERT_WINDOW permission exemption for background start, you are now required to have a visible overlay when targeting Android 15.

For battery-efficient best practices, debugging network and power usage, and detail on how we're improving battery efficiency of background work in Android 15 and recent versions of Android, see the "Improving battery efficiency of background work on Android" I/O talk.

Upcoming required support for 16 KB page sizes

Android 15 adds support for devices that use larger page sizes, with support for 16 KB pages in addition to the standard 4 KB pages. If your app uses any NDK libraries, either directly or indirectly through an SDK, then you will likely need to simply rebuild your app for it to work on these 16 KB page size devices.

Devices with larger page sizes can have improved performance for memory-intensive workloads. While our testing may not be representative of all devices in the ecosystem, here are some of the performance gains we identified in our initial testing of devices configured with 16 KB page sizes:

- Lower app launch times while the system is under memory pressure: 3.16% lower on average, with more significant improvements (up to 30%) for some apps that we tested

- Reduced power draw during app launch: 4.56% reduction on average

- Faster camera launch: 4.48% faster hot starts on average, and 6.60% faster cold starts on average

- Improved system boot time: improved by 1.5% (approximately 0.8 seconds) on average

As device manufacturers continue to build devices with larger amounts of physical memory (RAM), many of these devices will adopt 16 KB (and eventually greater) page sizes to optimize the device's performance. Adding support for 16 KB page size devices enables your app to run on these devices and helps your app benefit from the associated performance improvements. We plan to make 16 KB page compatibility required for app uploads to Play Store next year.

To help you add support for your app, we've provided guidance on how to check if your app is impacted, how to rebuild your app (if applicable), and how to test your app in a 16 KB environment using emulators (including Android 15 system images for the Android Emulator).
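
One way to get a rough idea of whether a native library is affected is to look at how its loadable segments are aligned: a shared library generally needs every PT_LOAD segment aligned to at least 16 KB to load on a 16 KB page size device. The sketch below illustrates that check in plain Java. It assumes a 64-bit little-endian ELF file, and the class and method names (ElfAlignmentCheck, minLoadAlignment, supports16KbPages) are hypothetical, chosen for this example only. Treat it as an illustration of the idea, not a replacement for the official guidance and tooling.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.file.Files;
import java.nio.file.Paths;

// Hypothetical helper (not part of any Android API): inspects the program
// headers of a 64-bit little-endian ELF file such as a .so from an APK.
final class ElfAlignmentCheck {
    static final long PAGE_16_KB = 16 * 1024;
    private static final int PT_LOAD = 1;

    /** Returns the smallest p_align among PT_LOAD segments, or -1 if there are none. */
    static long minLoadAlignment(byte[] elf) {
        if (elf.length < 64 || elf[0] != 0x7f || elf[1] != 'E' || elf[2] != 'L' || elf[3] != 'F') {
            throw new IllegalArgumentException("Not an ELF file");
        }
        // e_ident[4] == 2 means 64-bit, e_ident[5] == 1 means little-endian.
        if (elf[4] != 2 || elf[5] != 1) {
            throw new IllegalArgumentException("This sketch only handles 64-bit little-endian ELF");
        }
        ByteBuffer buf = ByteBuffer.wrap(elf).order(ByteOrder.LITTLE_ENDIAN);
        long phoff = buf.getLong(0x20);              // e_phoff: program header table offset
        int phentsize = buf.getShort(0x36) & 0xffff; // e_phentsize: size of one entry
        int phnum = buf.getShort(0x38) & 0xffff;     // e_phnum: number of entries

        long min = -1;
        for (int i = 0; i < phnum; i++) {
            int base = (int) (phoff + (long) i * phentsize);
            if (buf.getInt(base) != PT_LOAD) {
                continue; // only loadable segments are mapped into memory
            }
            long align = buf.getLong(base + 48);     // p_align
            if (min == -1 || align < min) {
                min = align;
            }
        }
        return min;
    }

    /** True if every PT_LOAD segment is aligned to at least 16 KB. */
    static boolean supports16KbPages(byte[] elf) {
        long min = minLoadAlignment(elf);
        return min != -1 && min >= PAGE_16_KB;
    }

    public static void main(String[] args) throws Exception {
        byte[] elf = Files.readAllBytes(Paths.get(args[0]));
        System.out.println("min PT_LOAD alignment: " + minLoadAlignment(elf));
        System.out.println("16 KB compatible: " + supports16KbPages(elf));
    }
}
```

Running it against a library extracted from your APK prints the smallest segment alignment it found. A value below 16384 suggests the library needs to be rebuilt.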

Modernizing Android's GPU access

Android hardware has evolved quite a bit from the early days where the core OS would run on a single CPU and GPUs were accessed using APIs based on fixed-function pipelines. The Vulkan graphics API has been available in the NDK since Android 7.0 (API level 24) with a lower-level abstraction that better reflects modern GPU hardware, scales better to support multiple CPU cores, and offers reduced CPU driver overhead — leading to improved app and game performance. Vulkan is supported by all modern game engines.

Vulkan is Android’s preferred interface to the GPU. Therefore, Android 15 includes ANGLE as an optional layer for running OpenGL ES on top of Vulkan. Moving to ANGLE will standardize the Android OpenGL implementation for improved compatibility, and, in some cases, improved performance. You can test out your OpenGL ES app stability and performance with ANGLE using the "Developer options → Experimental: Enable ANGLE" setting in Android 15.

The Android ANGLE on Vulkan roadmap

As part of streamlining our GPU stack, going forward we will be shipping ANGLE as the GL system driver on more new devices, with the future expectation that OpenGL ES will be available only through ANGLE. That being said, we plan to continue support for OpenGL ES on all devices.

Recommended next steps: Use the developer options to select the ANGLE driver for OpenGL ES and test. For new projects, we strongly encourage using Vulkan for C/C++.

Modern graphics

Android 15 continues our modernization of Android's Canvas graphics system with new functionality:

- Matrix44 provides a 4x4 matrix for transforming coordinates. Use it when you want to manipulate the canvas in 3D.

- clipShader intersects the current clip with the specified shader, while clipOutShader sets the clip to the difference of the current clip and the shader, each treating the shader as an alpha mask. This supports the drawing of complex shapes efficiently.
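
To illustrate why 3D manipulation calls for a 4x4 matrix, here is a minimal plain-Java sketch of the underlying math. It does not use the android.graphics.Matrix44 API. The HomogeneousTransform class, its apply method, and the sample matrices are hypothetical names and values made up for this example. A point (x, y, z) is extended to (x, y, z, 1), multiplied by the matrix, and divided by the resulting w component, which is what lets a single matrix express translation and perspective as well as rotation and scale.

```java
// Hypothetical illustration of 4x4 homogeneous transforms; not an Android API.
final class HomogeneousTransform {

    /** Applies a row-major 4x4 matrix to (x, y, z, 1), then divides by w. */
    static double[] apply(double[] m, double x, double y, double z) {
        if (m.length != 16) {
            throw new IllegalArgumentException("expected 16 values");
        }
        double[] in = {x, y, z, 1.0};
        double[] out = new double[4];
        for (int row = 0; row < 4; row++) {
            for (int col = 0; col < 4; col++) {
                out[row] += m[row * 4 + col] * in[col];
            }
        }
        // The perspective divide turns homogeneous coordinates back into 3D.
        return new double[] {out[0] / out[3], out[1] / out[3], out[2] / out[3]};
    }

    public static void main(String[] args) {
        // Translation lives in the fourth column, which a 3x3 matrix lacks.
        double[] translate = {
            1, 0, 0, 10,
            0, 1, 0, 20,
            0, 0, 1, 30,
            0, 0, 0, 1};
        double[] moved = apply(translate, 1, 2, 3);
        System.out.println(moved[0] + ", " + moved[1] + ", " + moved[2]);

        // One possible perspective-style matrix: w = 1 - z / 512, so points
        // with larger z are scaled up after the divide.
        double[] perspective = {
            1, 0, 0, 0,
            0, 1, 0, 0,
            0, 0, 1, 0,
            0, 0, -1.0 / 512, 1};
        // Here w = 1 - 256 / 512 = 0.5, so x and y double.
        double[] projected = apply(perspective, 100, 50, 256);
        System.out.println(projected[0] + ", " + projected[1]);
    }
}
```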

More efficient AV1 software decoding

dav1d, the popular AV1 software decoder from VideoLAN, is now available for Android devices that don't support AV1 decode in hardware. It is up to 3x more performant than the legacy AV1 software decoder, enabling HD AV1 playback for more users, including on some low- and mid-tier devices.

For now, your app needs to opt in to using dav1d by invoking it by name: "c2.android.av1-dav1d.decoder". It will be made the default AV1 software decoder in a subsequent update. This support is standardized and backported to Android 11 devices that receive Google Play system updates.

For more on the latest features and developer solutions for Android media and camera, see the "Building modern Android media and camera experiences" I/O talk.

A more private, secure Android

We're always looking to give users more transparency and control over their data while enhancing the core security features of the platform. See the "Safeguarding user security on Android" I/O talk for more of what we're doing to improve user safeguards and protect your app against new threats.

Private space

Private space allows users to create a separate space on their device where they can keep sensitive apps away from prying eyes, under an additional layer of authentication. Private space uses a separate user profile. When private space is locked by the user, the profile is paused, i.e. the apps are no longer active. The user can choose to use the device lock or a separate lock factor for private space. Private space apps show up in a separate container in the launcher, and are hidden from the recents view, notifications, settings, and from other apps when private space is locked. User generated and downloaded content (media, files) and accounts are separated between the private space and the main space. The system sharesheet and the photo picker can be used to give apps access to content across spaces when private space is unlocked. There is a known issue with private space in Beta 2 that affects home screen apps; you can find out more in the Beta 2 release notes. We'll have an update in the coming days, so you may wish to wait until then to test your app with private space to make sure it works as expected.

Selected photos access improvement

It is now possible for apps to highlight only the most recently selected photos and videos when partial access to media permissions is granted. This can improve the user experience for apps that frequently request access to photos and videos. This can be achieved by enabling the QUERY_ARG_LATEST_SELECTION_ONLY argument when querying MediaStore through ContentResolver.

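    import android.content.ContentResolver.QUERY_ARG_SQL_SELECTION
    import android.content.ContentResolver.QUERY_ARG_SQL_SELECTION_ARGS
    import android.content.ContentResolver.QUERY_ARG_SQL_SORT_ORDER
    import android.provider.MediaStore
    import android.provider.MediaStore.Files.FileColumns
    import android.provider.MediaStore.QUERY_ARG_LATEST_SELECTION_ONLY
    import androidx.core.os.bundleOf

    val externalContentUri = MediaStore.Files.getContentUri("external")

    val mediaColumns = arrayOf(
        FileColumns._ID,
        FileColumns.DISPLAY_NAME,
        FileColumns.MIME_TYPE,
    )

    val queryArgs = bundleOf(
        // Return only items from the last selection (selected photos access)
        QUERY_ARG_LATEST_SELECTION_ONLY to true,
        // Sort returned items chronologically based on when they were added to the device's storage
        QUERY_ARG_SQL_SORT_ORDER to "${FileColumns.DATE_ADDED} DESC",
        // Restrict the results to images and videos
        QUERY_ARG_SQL_SELECTION to "${FileColumns.MEDIA_TYPE} = ? OR ${FileColumns.MEDIA_TYPE} = ?",
        QUERY_ARG_SQL_SELECTION_ARGS to arrayOf(
            FileColumns.MEDIA_TYPE_IMAGE.toString(),
            FileColumns.MEDIA_TYPE_VIDEO.toString()
        )
    )

    val cursor = contentResolver.query(externalContentUri, mediaColumns, queryArgs, null)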

Permission checks on content URIs

Android 15 introduces a new set of APIs that perform permission checks on content URIs. They include:

Secured background activity launches

Android 15 protects users from malicious apps and gives them more control over their devices by adding changes that prevent malicious background apps from bringing other apps to the foreground, elevating their privileges, and abusing user interaction. Background activity launches have been restricted since Android 10.

Malicious apps within the same task can launch another app's activity, then overlay themselves on top, creating the illusion of being that app. This "task hijacking" attack bypasses current background launch restrictions because it all occurs within the same visible task. To mitigate this risk, we've added a flag that blocks apps that don't match the top UID on the stack from launching activities. To opt in for all of your app's activities, update the allowCrossUidActivitySwitchFromBelow attribute in your app's AndroidManifest.xml file:

<application android:allowCrossUidActivitySwitchFromBelow="false" >

Once your app has opted into the new protection, specific activities designed to be shared can be opted-out using this API within the Activity:

    @Override
    public void onCreate(Bundle bundle) {
        super.onCreate(bundle);
        // Allow apps with a different UID below this activity to launch it
        setAllowCrossUidActivitySwitchFromBelow(true);
        ...
    }

Learn more about restrictions on starting activities from the background.

Safer Intents

Android 15 introduces new security measures to make intents safer and more robust. These changes are aimed at preventing potential vulnerabilities and misuse of intents that can be exploited by malicious apps. There are two main improvements to the security of intents in Android 15:

- Match target intent-filters: Intents that target specific components must accurately match the target's intent-filter specifications. If you send an intent to launch another app's activity, the target intent component needs to align with the receiving activity's declared intent-filters.

- Intents must have actions: Intents without an action will no longer match any intent-filters. This means that intents used to start activities or services must have a clearly defined action.

Important: These improvements will be part of Strict Mode. If you would like to try them out, add the following to your onCreate method:

    public void onCreate() {
        // Report intents that are launched unsafely
        StrictMode.setVmPolicy(new StrictMode.VmPolicy.Builder()
            .detectUnsafeIntentLaunch()
            .build());
        ...
    }

Increased minimum target SDK version from 23 to 24

Android 15 increases the minimum targetSdkVersion required to install apps from 23 to 24, building on the previous minimum target SDK change from Android 14. Outdated apps often lack the latest security protections, making devices and data vulnerable. Requiring apps to meet modern API levels helps to ensure better security and privacy.

If you try to install an app that targets a lower API level than 24, you'll see an error raised in Logcat:

INSTALL_FAILED_DEPRECATED_SDK_VERSION: App package must target at least SDK version 24, but found 7.

A premium device experience

Android 15 includes features that help your apps improve the experience of using an Android device, including smoother transitions, a more helpful UI, updates for large-screen devices, and more beautiful options for designers.

Improved large screen multitasking

Android 15 Beta 2 gives users better ways to multitask on large screen devices. For example, users can pin the taskbar on screen to quickly switch between apps, or save their favorite split-screen app combinations for quick access. This means that making sure your app is adaptive is more important than ever. Google I/O has sessions on Building adaptive Android apps and Building UI with the Material 3 adaptive library that can help, and our documentation has more to help you Design for large screens.

Window Insets

In addition to edge-to-edge enforcement, when your app targets SDK 35 or higher on Android 15, Configuration.screenWidthDp and screenHeightDp now include the depth of the system bars. While these values may still be used for resource selection (e.g. res/layout-h500dp), using them for layout calculations is discouraged.

Picture-in-Picture

Android 15 introduces new changes in Picture-in-Picture (PiP) ensuring an even smoother transition when entering into PiP mode. This will be beneficial for apps having UI elements overlaid on top of their main UI, which goes into PiP. Currently, onPictureInPictureModeChanged is used to define logic that toggles the visibility of the overlaid UI elements. This callback is triggered when the PiP enter or exit animation is completed. Starting from Android 15, we are introducing a new state in the PictureInPictureUiState class. The onPictureInPictureUiStateChanged callback will be invoked with isTransitioningToPip() as soon as the PiP enter animation starts and the app can hide the overlaid UI elements.

    override fun onPictureInPictureUiStateChanged(pipState: PictureInPictureUiState) {
        if (pipState.isTransitioningToPip()) {
            // Hide UI elements
        }
    }

    override fun onPictureInPictureModeChanged(isInPictureInPictureMode: Boolean) {
        if (isInPictureInPictureMode) {
            // Unhide UI elements
        }
    }

Quickly toggling the visibility of UI elements that are irrelevant in a PiP window ensures a smoother, flicker-free PiP enter animation.

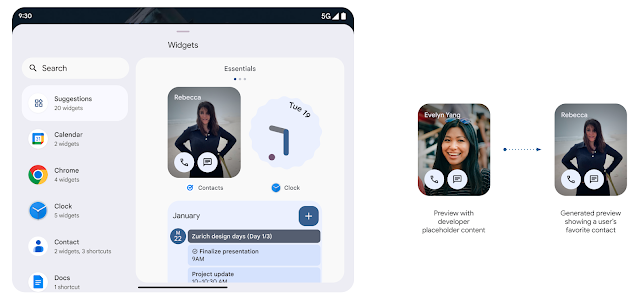

Richer Widget Previews with Generated Previews

Make your widget stand out by showing a personalized preview. Apps targeting Android 15 can provide RemoteViews to the Widget Picker, so they can update the content in the picker to be more representative of what the user will see. Apps can use the AppWidgetManager setWidgetPreview, getWidgetPreview, and removeWidgetPreview methods to update the appearance of their widgets with up-to-date and personalized information.

Predictive Back

Predictive back provides a smoother, more intuitive navigation experience while using gesture navigation, leveraging built-in animations to inform users where their actions will take them to reduce unexpected outcomes. In Android 15, predictive back will no longer be behind a developer option, so system animations such as back-to-home, cross-task, and cross-activity will appear for apps that have properly migrated.

Set VibrationEffect for notification channels

Android 15 Beta 2 now supports setting rich vibrations for incoming notifications by channel using NotificationChannel.setVibrationEffect, so your users can distinguish between different types of notifications without having to look at their device.

New data types for Health Connect

Health Connect, the centralized way for users to control and manage access to their fitness data, is adding support for additional data types to support even more health and fitness use cases. This release has 2 new data types: skin temperature and training plans.

Skin temperature tracking allows users to store and share more accurate temperature data from a wearable or other tracking device.

Training plans are structured workout plans to help a user achieve their fitness goals. Training plans support includes:

"Choose how you're addressed" system preference

Initially only in French, but expanding soon to additional gendered languages, users can customize how they are addressed by the Android system with a grammatical gender preference. The new setting can be found in the system language settings: Settings → System → Languages & Input → System languages → Choose how you’re addressed.

French grammatical gender preference

An example of where this preference changes the string being shown

Modern internationalization via ICU 74

Android 15 Beta 2 includes API-related updates from ICU 74. ICU 74 contains updates from Unicode 15.1, including new characters, emoji, security mechanisms and corresponding APIs and implementations, as well as updates to CLDR 44 locale data with new locales and various additions and corrections.

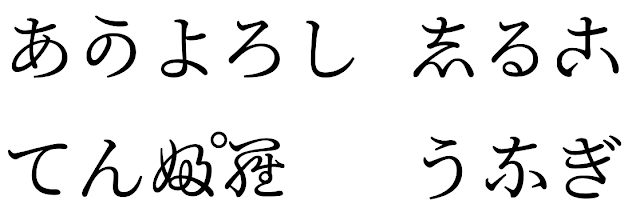

CJK Variable Font

Starting from Android 15, the font file for Chinese, Japanese, and Korean languages, NotoSansCJK, is now a variable font. Variable fonts open up new possibilities for creative typography in CJK languages. Designers can explore a broader range of styles and create visually striking layouts that were previously difficult or impossible to achieve.

New Japanese Hentaigana Font

In Android 15, a new font file for old Japanese Hiragana (known as Hentaigana) is bundled by default. The unique shapes of Hentaigana characters can add a distinctive flair to artwork or design while also helping to preserve accurate transmission and understanding of ancient Japanese documents.

Avoiding clipped text

Some cursive fonts or language characters that have complex shaping may draw letters in the previous or next character’s area. Such letters may be clipped at the beginning or ending position. Starting in Android 15, TextView allocates additional width for such letters and can add extra padding on the left.

Because this changes how TextView decides its width, TextView allocates more width by default if the app targets Android 15 or later. You can enable or disable this behavior by calling the setUseBoundsForWidth API on TextView. Because adding left padding may misalign existing layouts, the padding is not added by default, even when targeting Android 15 or later.

To add extra padding to prevent clipping, call setShiftDrawingOffsetForStartOverhang.

    <TextView
        android:fontFamily="cursive"
        android:text="java" />

    <TextView
        android:fontFamily="cursive"
        android:text="java"
        android:useBoundsForWidth="true"
        android:shiftDrawingOffsetForStartOverhang="true" />

    <TextView
        android:text="คอมพิวเตอร์" />

    <TextView
        android:text="คอมพิวเตอร์"
        android:useBoundsForWidth="true"
        android:shiftDrawingOffsetForStartOverhang="true" />

App compatibility

If you haven't yet tested your app for compatibility with Android 15, now is the time to do it, with many more devices entering the program. In the weeks ahead, you can expect more users to try your app on Android 15 and raise issues they find.

To test for compatibility, install your published app on a device or emulator running Android 15 beta and work through all of your app's flows. Review the behavior changes to focus your testing. After you've resolved any issues, publish an update as soon as possible.

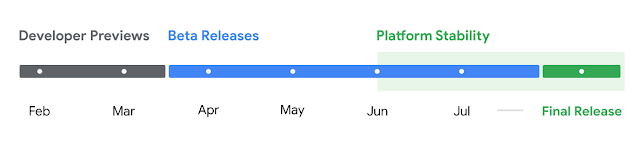

To give you more time to plan for app compatibility work, we’re letting you know our Platform Stability milestone well in advance.

At this milestone, we’ll deliver final SDK/NDK APIs and also final internal APIs and app-facing system behaviors. We’re expecting to reach Platform Stability in June 2024, and from that time you’ll have several months before the official release to do your final testing. The release timeline details are here.

Get started with Android 15

Today's beta release has everything you need to try the Android 15 features, test your apps, and give us feedback. Now that we’re in the beta phase, you can check here to get information about enrolling your device. Enrolled, supported Pixel devices will get this and future Android Beta updates over the air. If you don’t have a supported device, you can use the 64-bit system images with the Android Emulator in Android Studio. If you're already in the Android 14 QPR beta program on a supported device, you'll automatically get updated to Android 15 Beta 2.

For the best development experience with Android 15, we recommend that you use the latest version of Android Studio Koala. Once you’re set up, here are some of the things you should do:

- Try the new features and APIs - your feedback is critical during the early part of the developer preview and beta program. Report issues in our tracker on the feedback page.

- Test your current app for compatibility - learn whether your app is affected by changes in Android 15; install your app onto a device or emulator running Android 15 and extensively test it.

We’ll update the beta system images and SDK regularly throughout the Android 15 release cycle. Read more here.

For complete information, visit the Android 15 developer site.

Java and OpenJDK are trademarks or registered trademarks of Oracle and/or its affiliates.

OpenGL is a registered trademark and the OpenGL ES logo is a trademark of Hewlett Packard Enterprise used by permission by Khronos.

Vulkan and the Vulkan logo are registered trademarks of the Khronos Group Inc.

VideoLAN cone Copyright (c) 1996-2010 VideoLAN. This logo or a modified version may be used or modified by anyone to refer to the VideoLAN project or any product developed by the VideoLAN team, but does not indicate endorsement by the project.

All trademarks, logos and brand names are the property of their respective owners.
