Posted by Wesley Chun, Developer Advocate, Google Cloud

Next generation service

Since its initial launch in 2008 as the first product from Google Cloud, Google App Engine, our fully-managed serverless app-hosting platform, has been used by many developers worldwide. Since then, the product team has continued to innovate on the platform: introducing new services, extending quotas, supporting new languages, and adding a Flexible environment to support more runtimes, including the ability to serve containerized applications.

With many original App Engine services maturing to become their own standalone Cloud products along with users' desire for a more open cloud, the next generation App Engine launched in 2018 without those bundled proprietary services, but coupled with desired language support such as Python 3 and PHP 7 as well as introducing Node.js 8. As a result, users have more options, and their apps are more portable.

With the sunset of Python 2, Java 8, PHP 5, and Go 1.11, by their respective communities, Google Cloud has assured users by expressing continued long-term support of these legacy runtimes, including maintaining the Python 2 runtime. So while there is no requirement for users to migrate, developers themselves are expressing interest in updating their applications to the latest language releases.

Google Cloud has created a set of migration guides for users modernizing from Python 2 to 3, Java 8 to 11, PHP 5 to 7, and Go 1.11 to 1.12+ as well as a summary of what is available in both first and second generation runtimes. However, moving from bundled to unbundled services may not be intuitive to developers, so today we're introducing additional resources to help users in this endeavor: App Engine "migration modules" with hands-on "codelab" tutorials and code examples, starting with Python.

Migration modules

Each module represents a single modernization technique. Some are strongly recommended, others less so, and, at the other end of the spectrum, some are quite optional. We will guide you as far as which ones are more important. Similarly, there's no real order of modules to look at since it depends on which bundled services your apps use. Yes, some modules must be completed before others, but again, you'll be guided as far as "what's next."

More specifically, modules focus on the code changes that need to be implemented, not changes in new programming language releases as those are not within the domain of Google products. The purpose of these modules is to help reduce the friction developers may encounter when adapting their apps for the next-generation platform.

Central to the migration modules are the codelabs: free, online, self-paced, hands-on tutorials. The purpose of Google codelabs is to teach developers one new skill while giving them hands-on experience, and there are codelabs just for Google Cloud users. The migration codelabs are no exception, teaching developers one specific migration technique.

Developers following the tutorials will make the appropriate updates on a sample app, giving them the "muscle memory" needed to do the same (or similar) with their applications. Each codelab begins with an initial baseline app ("START"), leads users through the necessary steps, then concludes with an ending code repo ("FINISH") they can compare against their completed effort. Here are some of the initial modules being announced today:

- Web framework migration from

webapp2 to Flask - Updating from App Engine

ndb to Google Cloud NDB client libraries for Datastore access - Upgrading from the Google Cloud NDB to Cloud Datastore client libraries

- Moving from App Engine

taskqueue to Google Cloud Tasks - Containerizing App Engine applications to execute on Cloud Run

Examples

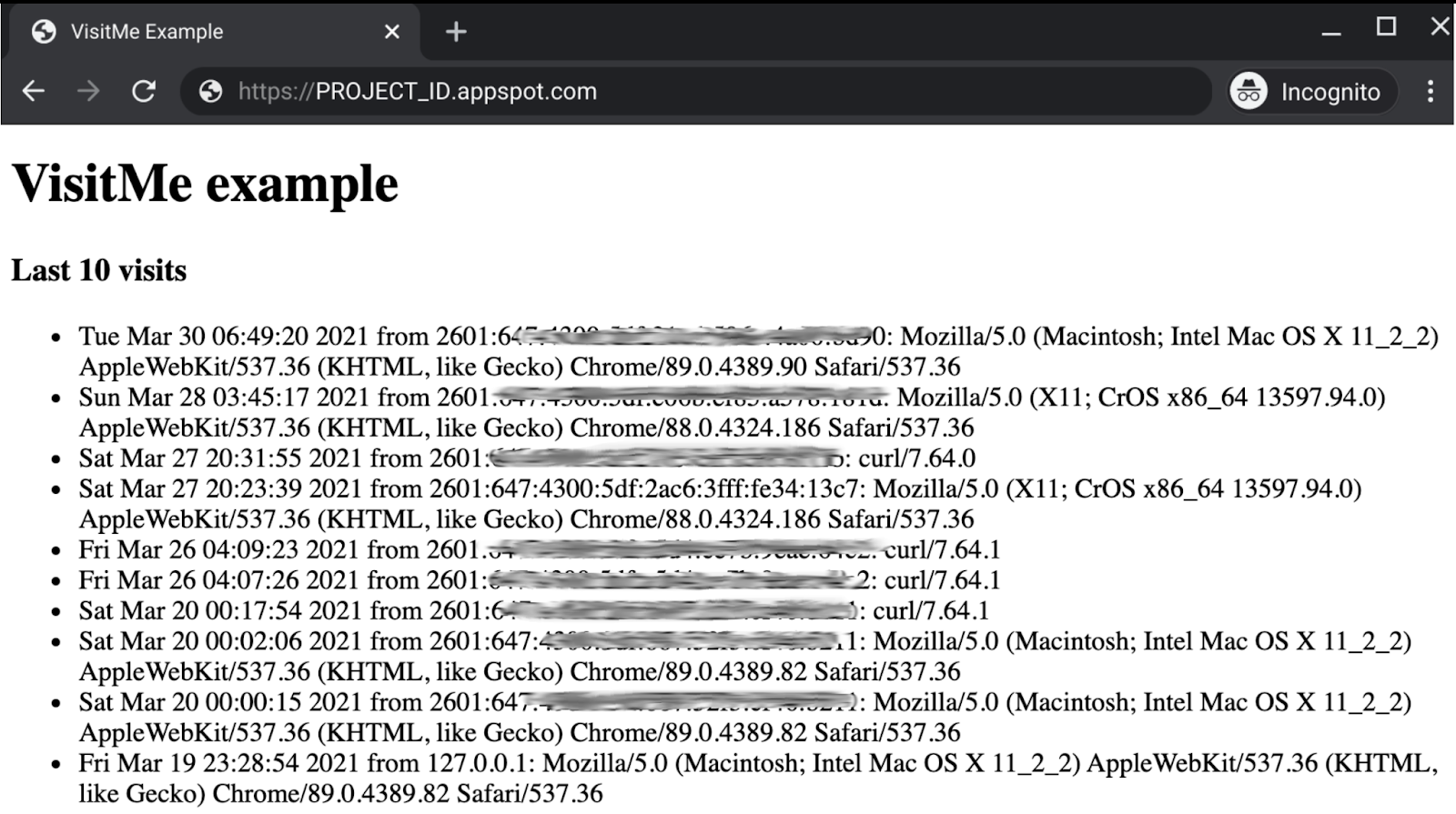

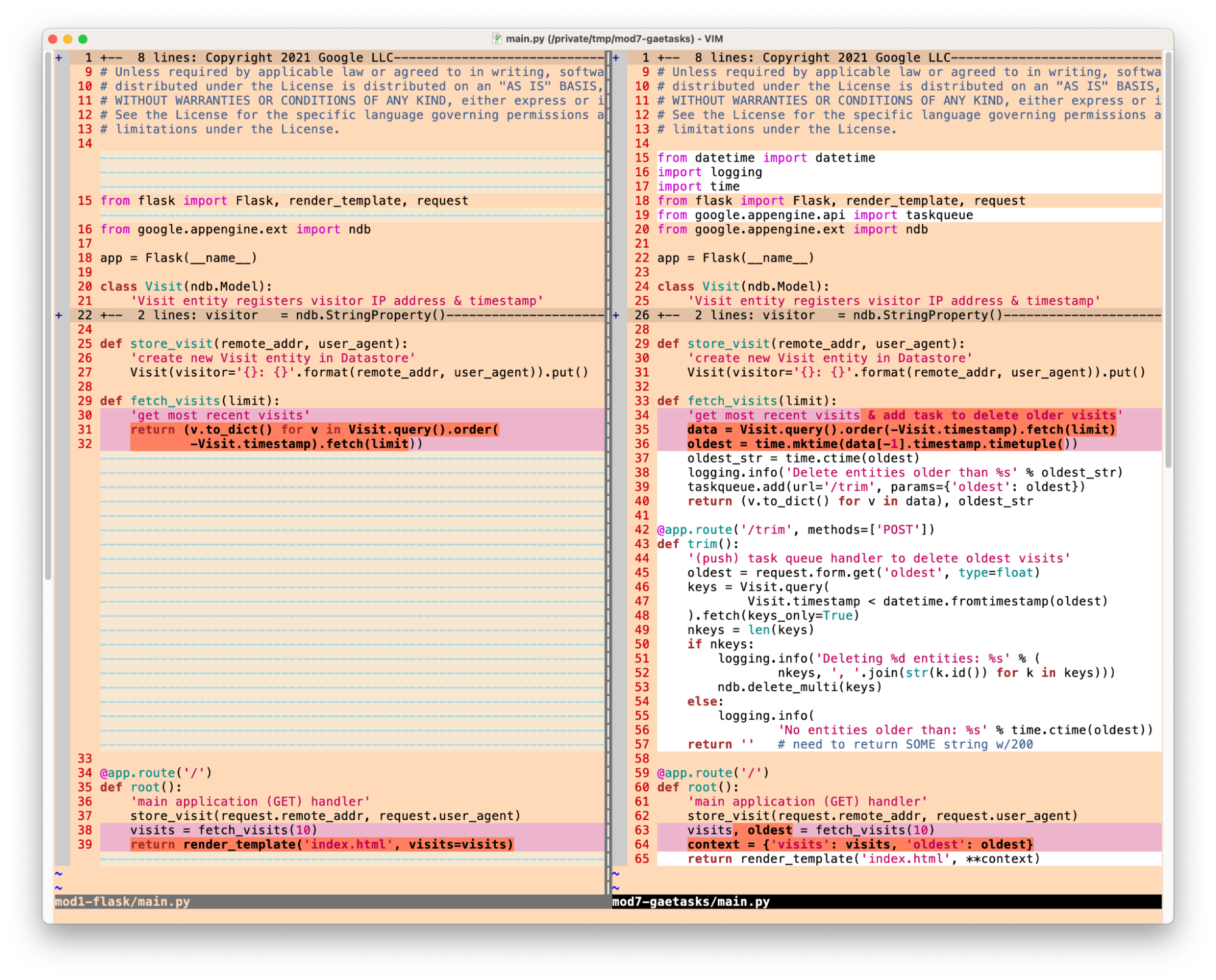

What should you expect from the migration codelabs? Let's preview a pair, starting with the web framework: below is the main driver for a simple webapp2-based "guestbook" app registering website visits as Datastore entities:

class MainHandler(webapp2.RequestHandler):

'main application (GET) handler'

def get(self):

store_visit(self.request.remote_addr, self.request.user_agent)

visits = fetch_visits(LIMIT)

tmpl = os.path.join(os.path.dirname(__file__), 'index.html')

self.response.out.write(template.render(tmpl, {'visits': visits}))

A "visit" consists of a request's IP address and user agent. After visit registration, the app queries for the latest LIMIT visits to display to the end-user via the app's HTML template. The tutorial leads developers a migration to Flask, a web framework with broader support in the Python community. An Flask equivalent app will use decorated functions rather than webapp2's object model:

@app.route('/')

def root():

'main application (GET) handler'

store_visit(request.remote_addr, request.user_agent)

visits = fetch_visits(LIMIT)

return render_template('index.html', visits=visits)

The framework codelab walks users through this and other required code changes in its sample app. Since Flask is more broadly used, this makes your apps more portable.

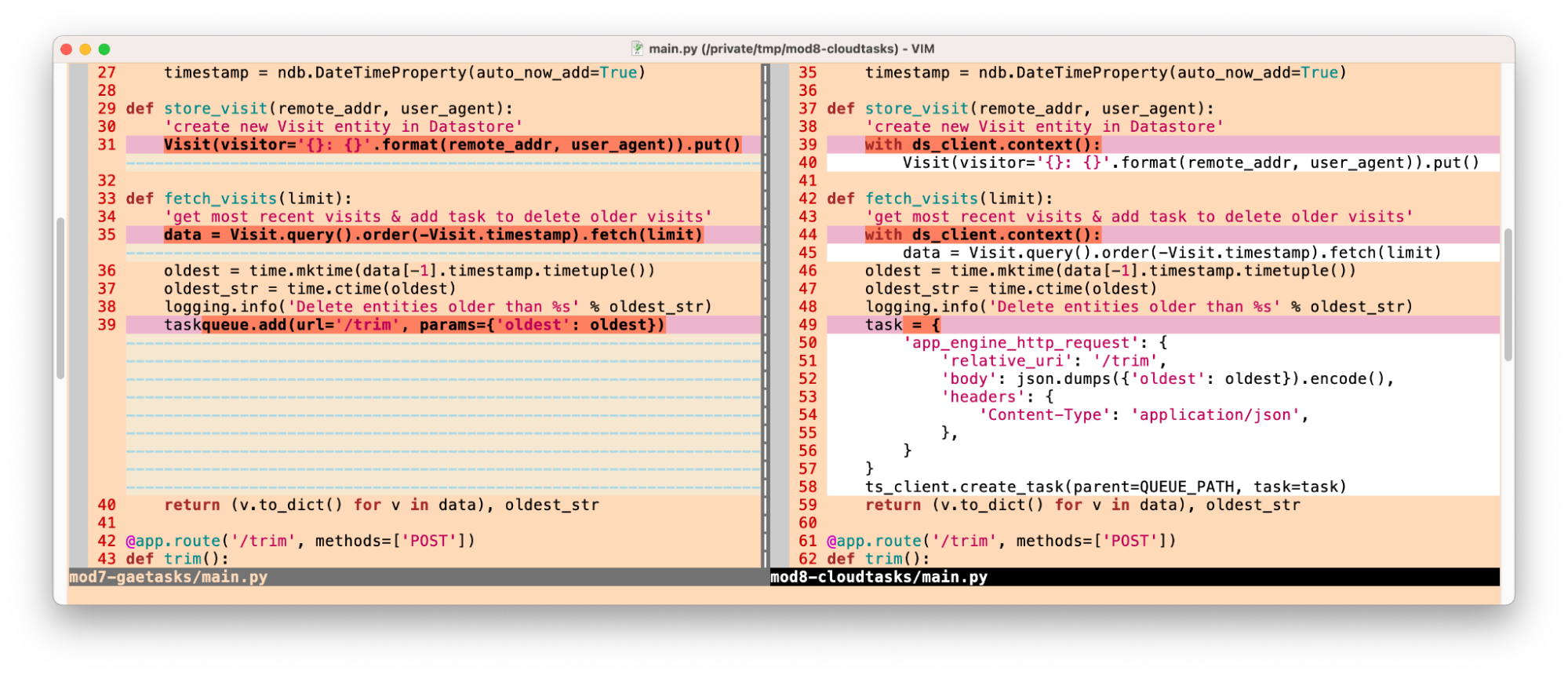

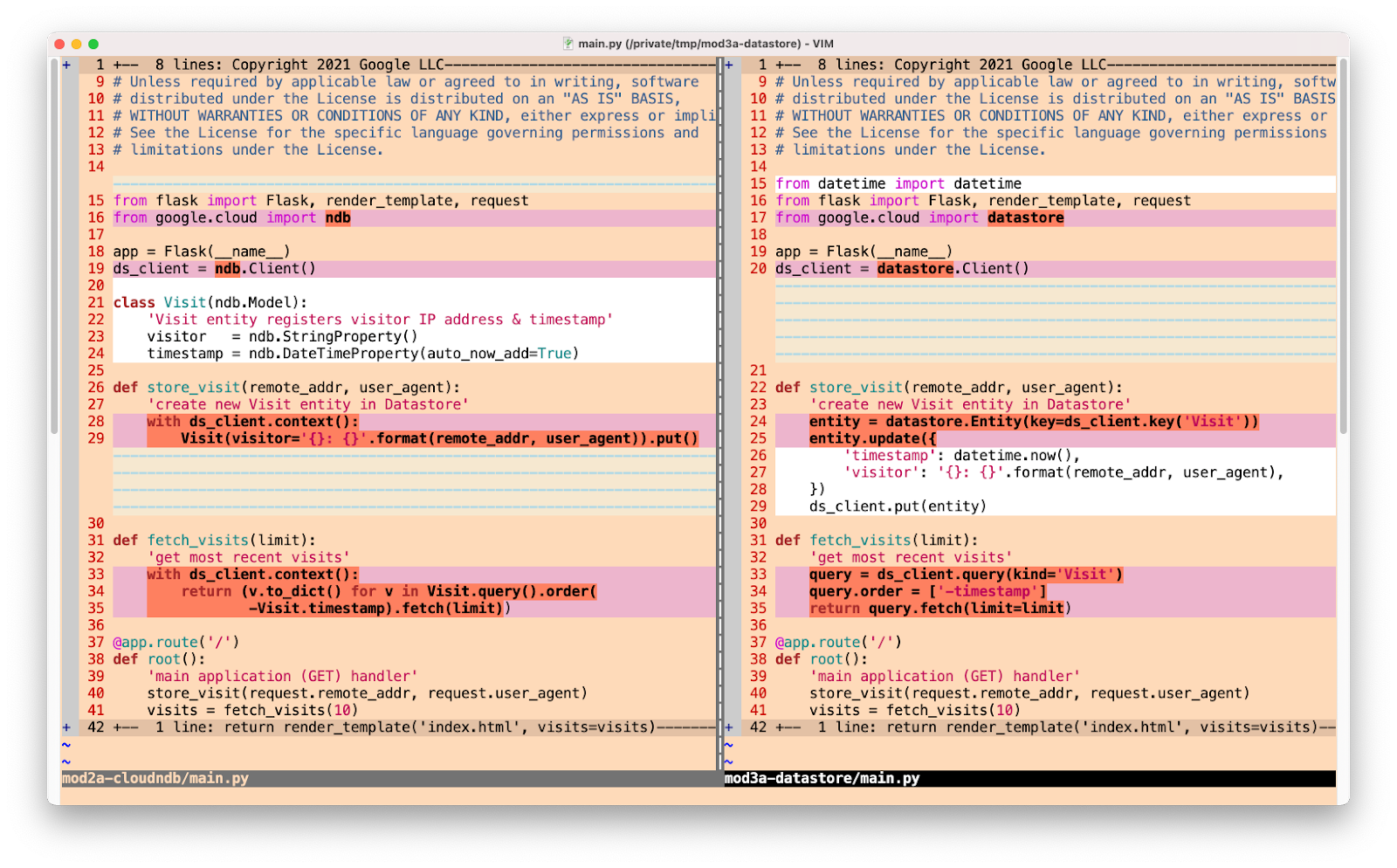

The second example pertains to Datastore access. Whether you're using App Engine's ndb or the Cloud NDB client libraries, the code to query the Datastore for the most recent limit visits may look like this:

def fetch_visits(limit):

'get most recent visits'

query = Visit.query()

visits = query.order(-Visit.timestamp).fetch(limit)

return (v.to_dict() for v in visits)

If you decide to switch to the Cloud Datastore client library, that code would be converted to:

def fetch_visits(limit):

'get most recent visits'

query = DS_CLIENT.query(kind='Visit')

query.order = ['-timestamp']

return query.fetch(limit=limit)

The query styles are similar but different. While the sample apps are just that, samples, giving you this kind of hands-on experience is useful when planning your own application upgrades. The goal of the migration modules is to help you separate moving to the next-generation service and making programming language updates so as to avoid doing both sets of changes simultaneously.

As mentioned above, some migrations are more optional than others. For example, moving away from the App Engine bundled ndb library to Cloud NDB is strongly recommended, but because Cloud NDB is available for both Python 2 and 3, it's not necessary for users to migrate further to Cloud Datastore nor Cloud Firestore unless they have specific reasons to do so. Moving to unbundled services is the primary step to giving users more flexibility, choices, and ultimately, makes their apps more portable.

Next steps

For those who are interested in modernizing their apps, a complete table describing each module and links to corresponding codelabs and expected START and FINISH code samples can be found in the migration module repository. We are also working on video content based on these migration modules as well as producing similar content for Java, so stay tuned.

In addition to the migration modules, our team has also setup a separate repo to support community-sourced migration samples. We hope you find all these resources helpful in your quest to modernize your App Engine apps!

Posted by Wesley Chun (

Posted by Wesley Chun (