This blog post is part of our Adaptive Spotlight Week series, where we provide resources (blog posts, videos, sample code, and more) designed to help you adapt your apps to phones, foldables, tablets, ChromeOS, and even cars. You can read more in the overview of the Adaptive Spotlight Week, which will be updated throughout the week.

We’ve heard the news: creating adaptive layouts in Jetpack Compose is easier than ever. As a declarative UI toolkit, Jetpack Compose is well suited for designing and implementing layouts that adjust themselves to render content differently across a variety of sizes. By combining logic based on Window Size Classes with Flow layouts, movableContentOf, and LookaheadScope, we can build fluid, responsive layouts in Jetpack Compose.

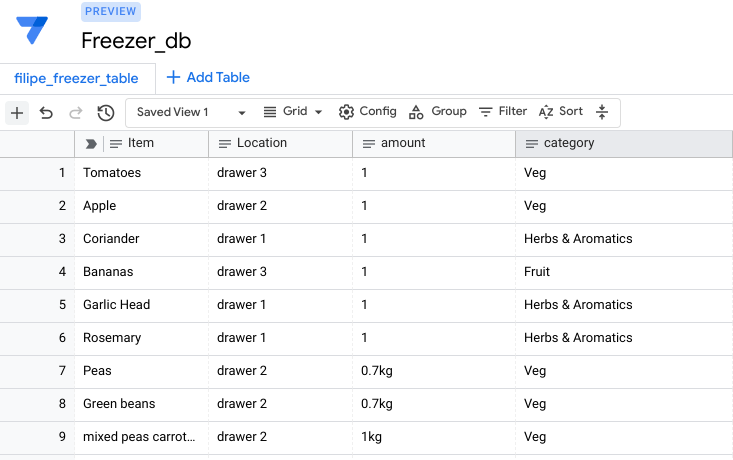

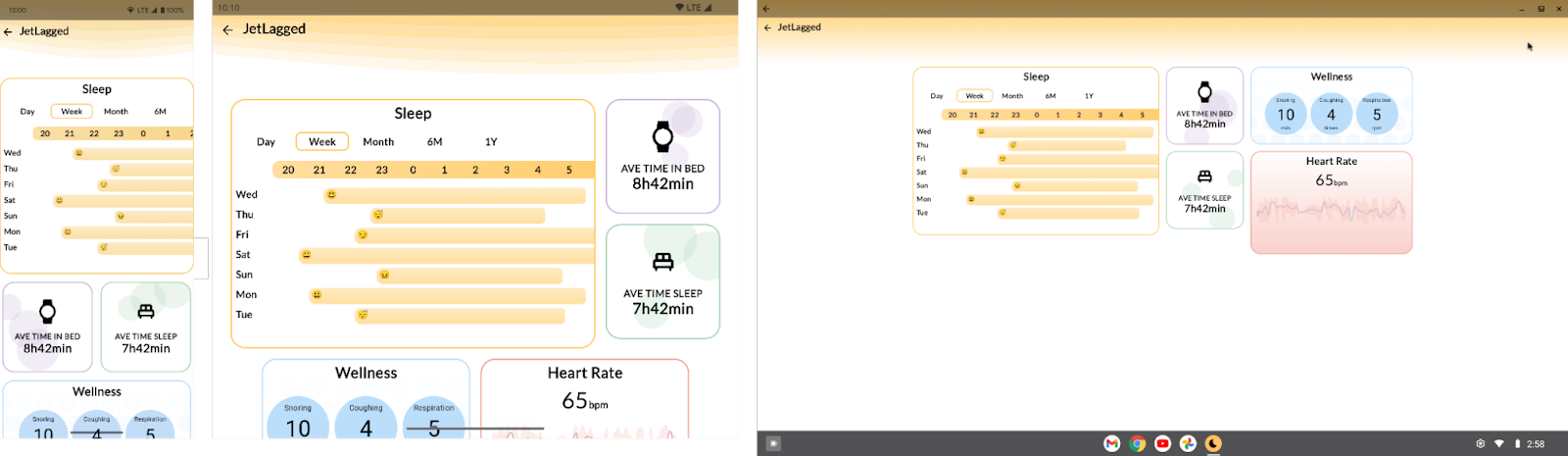

Following the release of the JetLagged sample at Google I/O 2023, we decided to add more examples to it. Specifically, we wanted to demonstrate how Compose can be used to create a beautiful dashboard-like layout. This article shows how we’ve achieved this.

Use FlowRow and FlowColumn to build layouts that respond to different screen sizes

Flow layouts (FlowRow and FlowColumn) make it much easier to implement responsive, reflowing layouts that respond to screen sizes and automatically flow content onto a new line when the available space in a row or column is full.

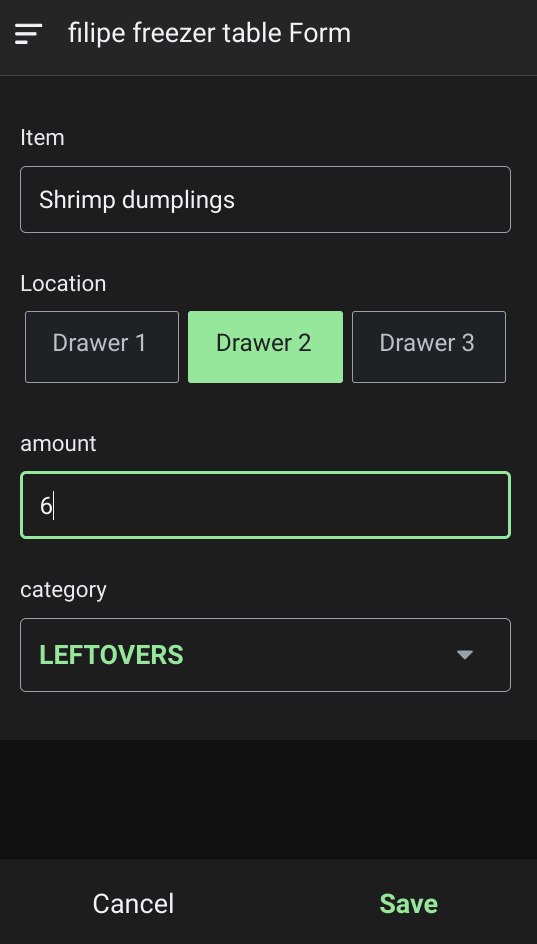

In the JetLagged example, we use a FlowRow with maxItemsInEachRow set to 3. This maximizes the space available for the dashboard and places each card in a row or column where space is used wisely. On mobile devices, we mostly have one card per row; two cards share a row only when they are small enough to fit.

Some cards use Modifiers that don’t specify an exact size, which allows them to grow to fill the available width up to a limit, for instance Modifier.widthIn(max = 400.dp). Others set a fixed size, like Modifier.width(200.dp).

    FlowRow(
        modifier = Modifier.fillMaxSize(),
        horizontalArrangement = Arrangement.Center,
        verticalArrangement = Arrangement.Center,
        maxItemsInEachRow = 3
    ) {
        Box(modifier = Modifier.widthIn(max = 400.dp))
        Box(modifier = Modifier.width(200.dp))
        Box(modifier = Modifier.size(200.dp))
        // etc
    }
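To build intuition for how a flow layout decides where to break, here is a minimal, framework-free sketch of greedy row wrapping. It is not how Compose implements FlowRow: `wrapIntoRows` is a hypothetical helper, widths are plain integers standing in for dp, every item is assumed to have a fixed width, and spacing and arrangement are ignored.

```kotlin
// Hypothetical helper, not part of Compose: places items left to right and
// starts a new row when the next item does not fit or the row item limit is hit.
fun wrapIntoRows(
    itemWidths: List<Int>,
    maxRowWidth: Int,
    maxItemsInEachRow: Int
): List<List<Int>> {
    val rows = mutableListOf<MutableList<Int>>()
    var current = mutableListOf<Int>()
    var used = 0
    for (width in itemWidths) {
        val rowIsFull = current.size >= maxItemsInEachRow
        // An item always goes into an empty row, even if it is wider than the row.
        val doesNotFit = current.isNotEmpty() && used + width > maxRowWidth
        if (rowIsFull || doesNotFit) {
            rows.add(current)
            current = mutableListOf()
            used = 0
        }
        current.add(width)
        used += width
    }
    if (current.isNotEmpty()) rows.add(current)
    return rows
}

fun main() {
    val cards = listOf(400, 200, 200, 200)
    // Narrow, phone-like width: mostly one card per row, two when they are small.
    println(wrapIntoRows(cards, maxRowWidth = 411, maxItemsInEachRow = 3))
    // prints [[400], [200, 200], [200]]

    // Wide window: the row item limit of 3 forces the fourth card onto a new row.
    println(wrapIntoRows(cards, maxRowWidth = 1000, maxItemsInEachRow = 3))
    // prints [[400, 200, 200], [200]]
}
```

The narrow case mirrors what we see on phones, and the wide case shows why the maxItemsInEachRow limit matters once there is room to spare.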

We could also use the weight modifier to divide up the remaining area of a row or column. Check out the documentation on item weights for more information.
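As a rough illustration of the idea behind weights, the sketch below splits whatever space is left after the fixed-size items proportionally between the weighted items. `resolveWeightedWidths` is a hypothetical helper written for this post, not a Compose API, and it only models a single row with float widths standing in for dp.

```kotlin
// Hypothetical helper, not part of Compose: shares the space remaining after
// fixed-width items between weighted items, in proportion to their weights.
fun resolveWeightedWidths(
    rowWidth: Float,
    fixedWidths: List<Float>,
    weights: List<Float>
): List<Float> {
    val remaining = (rowWidth - fixedWidths.sum()).coerceAtLeast(0f)
    val totalWeight = weights.sum()
    if (totalWeight == 0f) return weights.map { 0f }
    return weights.map { weight -> remaining * weight / totalWeight }
}

fun main() {
    // A 600-wide row with one fixed 200-wide card and two weighted cards (1 : 3).
    println(resolveWeightedWidths(600f, fixedWidths = listOf(200f), weights = listOf(1f, 3f)))
    // prints [100.0, 300.0]
}
```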

Use WindowSizeClasses to differentiate between devices

WindowSizeClasses are useful for building up breakpoints in our UI for when elements should display differently. In JetLagged, we use the classes to know whether we should include cards in Columns or keep them flowing one after the other.
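The breakpoint idea can be sketched without any Android dependencies. The enum and functions below are hypothetical stand-ins rather than the library classes, and they assume the commonly documented width breakpoints of 600 dp and 840 dp.

```kotlin
// Stand-in for the library's width size class; names here are illustrative only.
enum class WidthClass { COMPACT, MEDIUM, EXPANDED }

// Assumes the commonly documented breakpoints: below 600 dp is compact,
// below 840 dp is medium, anything wider is expanded.
fun widthClassFor(widthDp: Float): WidthClass = when {
    widthDp < 600f -> WidthClass.COMPACT
    widthDp < 840f -> WidthClass.MEDIUM
    else -> WidthClass.EXPANDED
}

// The kind of layout decision described above: only nest cards in columns
// when the window is wider than compact.
fun shouldNestCardsInColumns(widthDp: Float): Boolean =
    widthClassFor(widthDp) != WidthClass.COMPACT

fun main() {
    println(widthClassFor(411f))            // prints COMPACT
    println(widthClassFor(700f))            // prints MEDIUM
    println(shouldNestCardsInColumns(900f)) // prints true
}
```

In a real app we would read the size class from the library instead of computing it by hand; the sketch only shows how a single breakpoint check drives the layout choice.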

For example, if the width size class is WindowWidthSizeClass.COMPACT, we keep items in the same FlowRow, whereas if the layout is larger than compact, they are placed in a FlowColumn nested inside a FlowRow:

    FlowRow(
        modifier = Modifier.fillMaxSize(),
        horizontalArrangement = Arrangement.Center,
        verticalArrangement = Arrangement.Center,
        maxItemsInEachRow = 3
    ) {
        JetLaggedSleepGraphCard(uiState.value.sleepGraphData)
        if (windowSizeClass == WindowWidthSizeClass.COMPACT) {
            AverageTimeInBedCard()
            AverageTimeAsleepCard()
        } else {
            FlowColumn {
                AverageTimeInBedCard()
                AverageTimeAsleepCard()
            }
        }
        if (windowSizeClass == WindowWidthSizeClass.COMPACT) {
            WellnessCard(uiState.value.wellnessData)
            HeartRateCard(uiState.value.heartRateData)
        } else {
            FlowColumn {
                WellnessCard(uiState.value.wellnessData)
                HeartRateCard(uiState.value.heartRateData)
            }
        }
    }

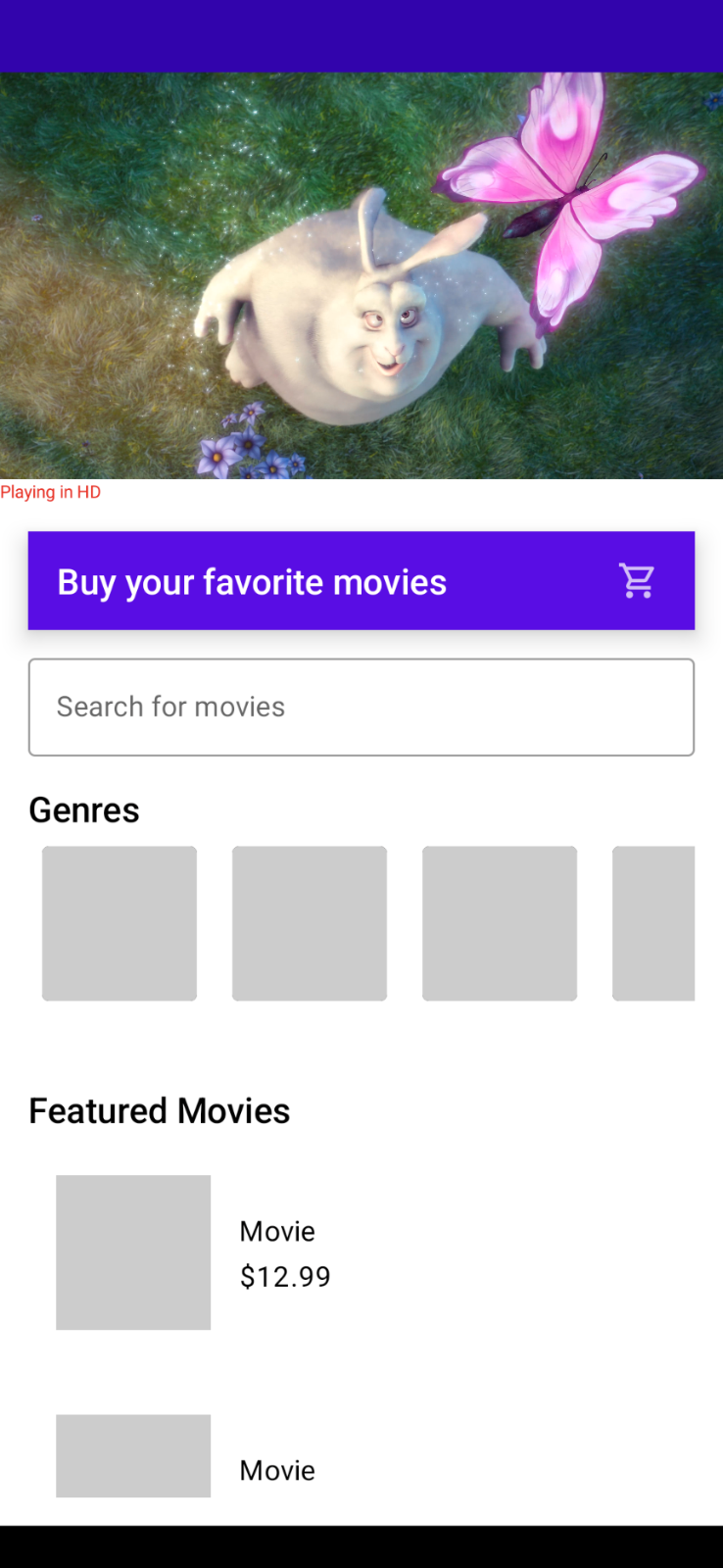

From the above logic, the UI will appear in the following ways on different device sizes:

Use movableContentOf to maintain bits of UI state across screen resizes

Movable content allows you to save the contents of a Composable to move it around your layout hierarchy without losing state. It should be used for content that is perceived to be the same, just in a different location on screen.

Imagine you are moving house to a different city, and you pack a box with a clock inside it. Opening the box in the new home, you’d see that the clock has kept ticking from where it left off. It might not show the correct time for your new timezone, but it will definitely have ticked on from where you left it. The contents inside the box don’t reset their internal state when the box is moved around.

What if you could use the same concept in Compose to move items on screen without losing their internal state?

Take the following scenario into account: Define different Tile composables that display an infinitely animating value between 0 and 100 over 5000ms.

    @Composable
    fun Tile1() {
        val repeatingAnimation = rememberInfiniteTransition()
        val float = repeatingAnimation.animateFloat(
            initialValue = 0f,
            targetValue = 100f,
            animationSpec = infiniteRepeatable(
                repeatMode = RepeatMode.Reverse,
                animation = tween(5000)
            )
        )
        Box(
            modifier = Modifier
                .size(100.dp)
                .background(purple, RoundedCornerShape(8.dp))
        ) {
            Text(
                "Tile 1 ${float.value.roundToInt()}",
                modifier = Modifier.align(Alignment.Center)
            )
        }
    }

We then display them on screen using a Column layout, showing the infinite animations as they go:

But what if we wanted to lay out the tiles differently depending on the phone’s orientation (or the screen size), and we don’t want the animations to stop running? Something like the following:

    @Composable
    fun WithoutMovableContentDemo() {
        val mode = remember { mutableStateOf(Mode.Portrait) }
        if (mode.value == Mode.Landscape) {
            Row {
                Tile1()
                Tile2()
            }
        } else {
            Column {
                Tile1()
                Tile2()
            }
        }
    }

This looks pretty standard, but running it on a device, we can see that switching between the two layouts causes our animations to restart.

This is the perfect case for movable content: the same Composables are on screen, just in a different location. So how do we use it? We can define our tiles in a movableContentOf block, using remember to ensure it’s saved across compositions:

    val tiles = remember {
        movableContentOf {
            Tile1()
            Tile2()
        }
    }

Now instead of calling our composables again inside the Column and Row respectively, we call tiles() instead.

    @Composable
    fun MovableContentDemo() {
        val mode = remember { mutableStateOf(Mode.Portrait) }
        val tiles = remember {
            movableContentOf {
                Tile1()
                Tile2()
            }
        }
        Box(modifier = Modifier.fillMaxSize()) {
            if (mode.value == Mode.Landscape) {
                Row { tiles() }
            } else {
                Column { tiles() }
            }
            Button(
                onClick = {
                    if (mode.value == Mode.Portrait) {
                        mode.value = Mode.Landscape
                    } else {
                        mode.value = Mode.Portrait
                    }
                },
                modifier = Modifier.align(Alignment.BottomCenter)
            ) {
                Text("Change layout")
            }
        }
    }

This will then remember the nodes generated by those Composables and preserve the internal state that these composables currently have.

We can now see that our animation state is remembered across the different compositions. Our clock in the box will now keep state when it's moved around the world.

Using this concept, we can keep the animating bubble state of our cards, by placing the cards in movableContentOf:

    val timeSleepSummaryCards = remember {
        movableContentOf {
            AverageTimeInBedCard()
            AverageTimeAsleepCard()
        }
    }
    LookaheadScope {
        FlowRow(
            modifier = Modifier.fillMaxSize(),
            horizontalArrangement = Arrangement.Center,
            verticalArrangement = Arrangement.Center,
            maxItemsInEachRow = 3
        ) {
            //..
            if (windowSizeClass == WindowWidthSizeClass.Compact) {
                timeSleepSummaryCards()
            } else {
                FlowColumn {
                    timeSleepSummaryCards()
                }
            }
            //
        }
    }

This allows the cards’ state to be remembered, and the cards won’t be recomposed. This is evident when observing the bubbles in the background of the cards: on resizing the screen, the bubble animation continues without restarting.

Use Modifier.animateBounds() to have fluid animations between different window sizes

From the above example, we can see that state is maintained between changes in layout size (or layout itself), but the difference between the two layouts is a bit jarring. We’d like this to animate between the two states without issue.

In the latest compose-bom-alpha (2024.09.03), there is a new experimental custom Modifier, Modifier.animateBounds(). The animateBounds modifier requires a LookaheadScope.

LookaheadScope enables Compose to perform intermediate measurement passes of layout changes, notifying composables of the intermediate states between them. LookaheadScope is also used for the new shared element APIs that you may have seen recently.

To use Modifier.animateBounds(), we wrap the top-level FlowRow in a LookaheadScope, and then apply the animateBounds modifier to each card. We can also customize how the animation runs by setting the boundsTransform parameter to a custom spring spec:

    val boundsTransform = { _: Rect, _: Rect ->
        spring(
            dampingRatio = Spring.DampingRatioNoBouncy,
            stiffness = Spring.StiffnessMedium,
            visibilityThreshold = Rect.VisibilityThreshold
        )
    }
    LookaheadScope {
        val animateBoundsModifier = Modifier.animateBounds(
            lookaheadScope = this@LookaheadScope,
            boundsTransform = boundsTransform
        )
        val timeSleepSummaryCards = remember {
            movableContentOf {
                AverageTimeInBedCard(animateBoundsModifier)
                AverageTimeAsleepCard(animateBoundsModifier)
            }
        }
        FlowRow(
            modifier = Modifier
                .fillMaxSize()
                .windowInsetsPadding(insets),
            horizontalArrangement = Arrangement.Center,
            verticalArrangement = Arrangement.Center,
            maxItemsInEachRow = 3
        ) {
            JetLaggedSleepGraphCard(
                uiState.value.sleepGraphData,
                animateBoundsModifier.widthIn(max = 600.dp)
            )
            if (windowSizeClass == WindowWidthSizeClass.Compact) {
                timeSleepSummaryCards()
            } else {
                FlowColumn {
                    timeSleepSummaryCards()
                }
            }
            FlowColumn {
                WellnessCard(
                    wellnessData = uiState.value.wellnessData,
                    modifier = animateBoundsModifier
                        .widthIn(max = 400.dp)
                        .heightIn(min = 200.dp)
                )
                HeartRateCard(
                    modifier = animateBoundsModifier
                        .widthIn(max = 400.dp, min = 200.dp),
                    uiState.value.heartRateData
                )
            }
        }
    }

Applying this to our layout, we can see the transition between the two states is more seamless without jarring interruptions.

Applying this logic to our whole dashboard, when resizing our layout, you will see that we now have a fluid UI interaction throughout the whole screen.
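To get a feel for what a no-bounce spring does to a card’s bounds, here is a small, framework-free sketch of a critically damped spring (damping ratio 1) moving a single value, such as a card’s width, from its old size to its new one. It is an approximation for intuition only, not Compose’s animation code: `criticallyDampedValue` is a hypothetical helper, it assumes unit mass and a start at rest, and the stiffness of 1500 is assumed to match Spring.StiffnessMedium.

```kotlin
import kotlin.math.exp
import kotlin.math.sqrt

// Hypothetical helper, not a Compose API: closed-form position of a critically
// damped spring (damping ratio 1) with unit mass that starts at rest.
fun criticallyDampedValue(
    start: Float,
    target: Float,
    stiffness: Float,
    timeSeconds: Float
): Float {
    val omega = sqrt(stiffness) // natural frequency for unit mass
    // Remaining fraction of the distance: falls from 1 towards 0 without oscillating.
    val remainingFraction = (1f + omega * timeSeconds) * exp(-omega * timeSeconds)
    return target + (start - target) * remainingFraction
}

fun main() {
    val stiffnessMedium = 1500f // assumed to match Spring.StiffnessMedium
    // A card growing from 200 to 400 wide: the value approaches 400 and never overshoots.
    for (ms in listOf(0, 50, 100, 200, 400)) {
        val width = criticallyDampedValue(200f, 400f, stiffnessMedium, ms / 1000f)
        println("t=${ms}ms width=$width")
    }
}
```

Because the damping ratio is 1, the value settles on the target without bouncing past it, which is why the cards glide into their new bounds instead of wobbling.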

Summary

As you can see from this article, using Compose has enabled us to build a responsive dashboard-like layout by leveraging flow layouts, WindowSizeClasses, movable content and LookaheadScope. These concepts can also be used for your own layouts that may have items moving around in them too.

For more information on these different topics, be sure to check out the official documentation. For the detailed changes to JetLagged, take a look at this pull request.

Posted by Rebecca Franks - Developer Relations Engineer

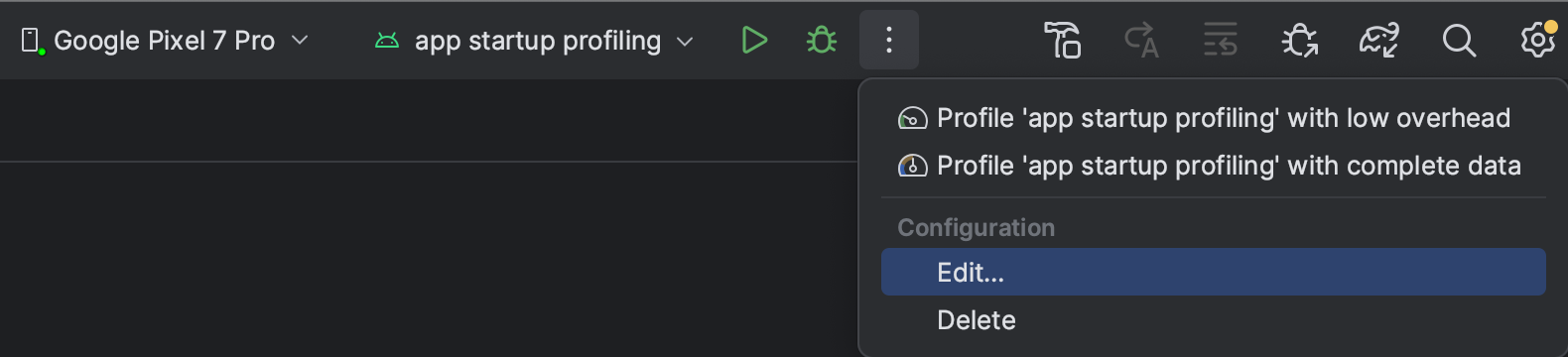

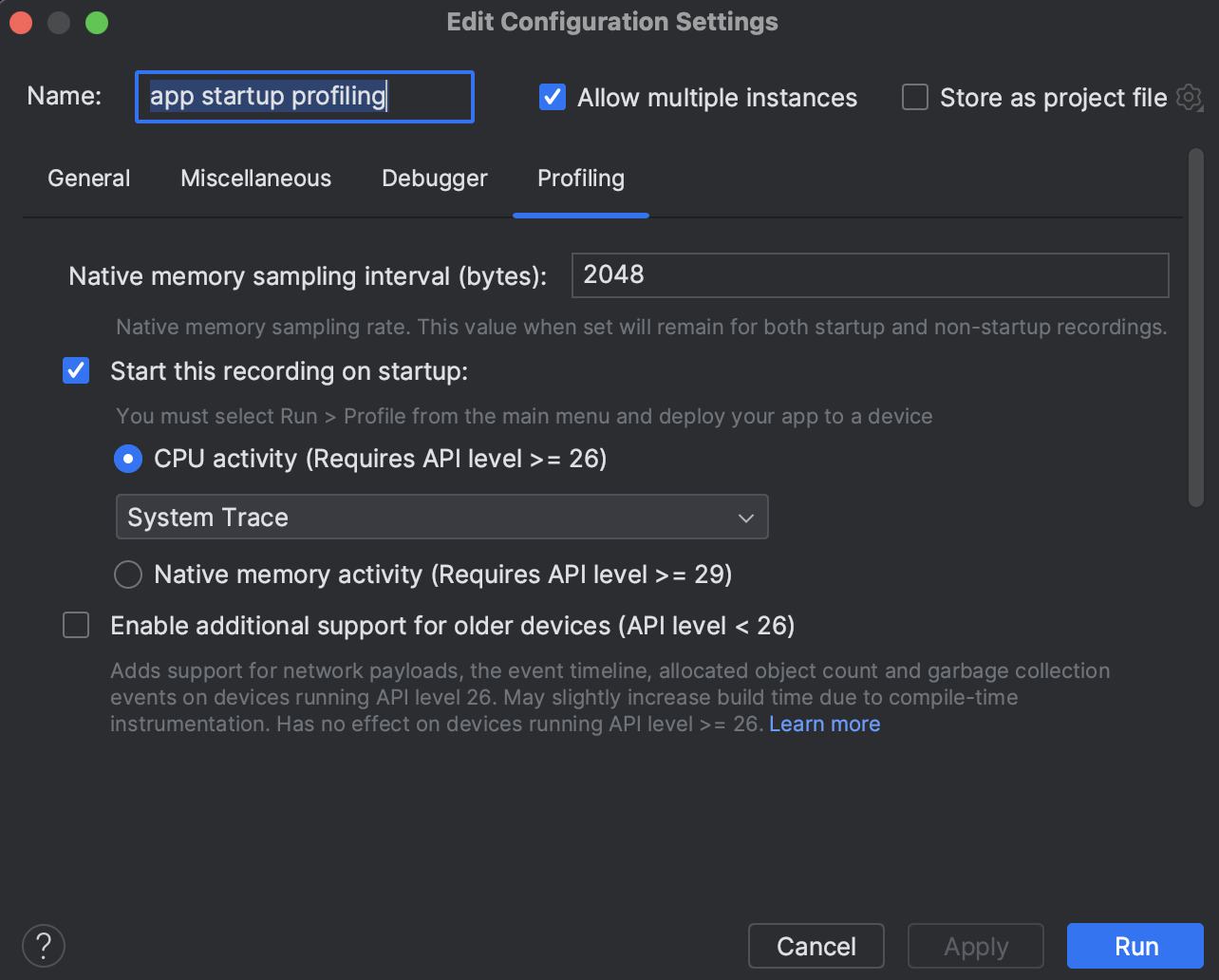

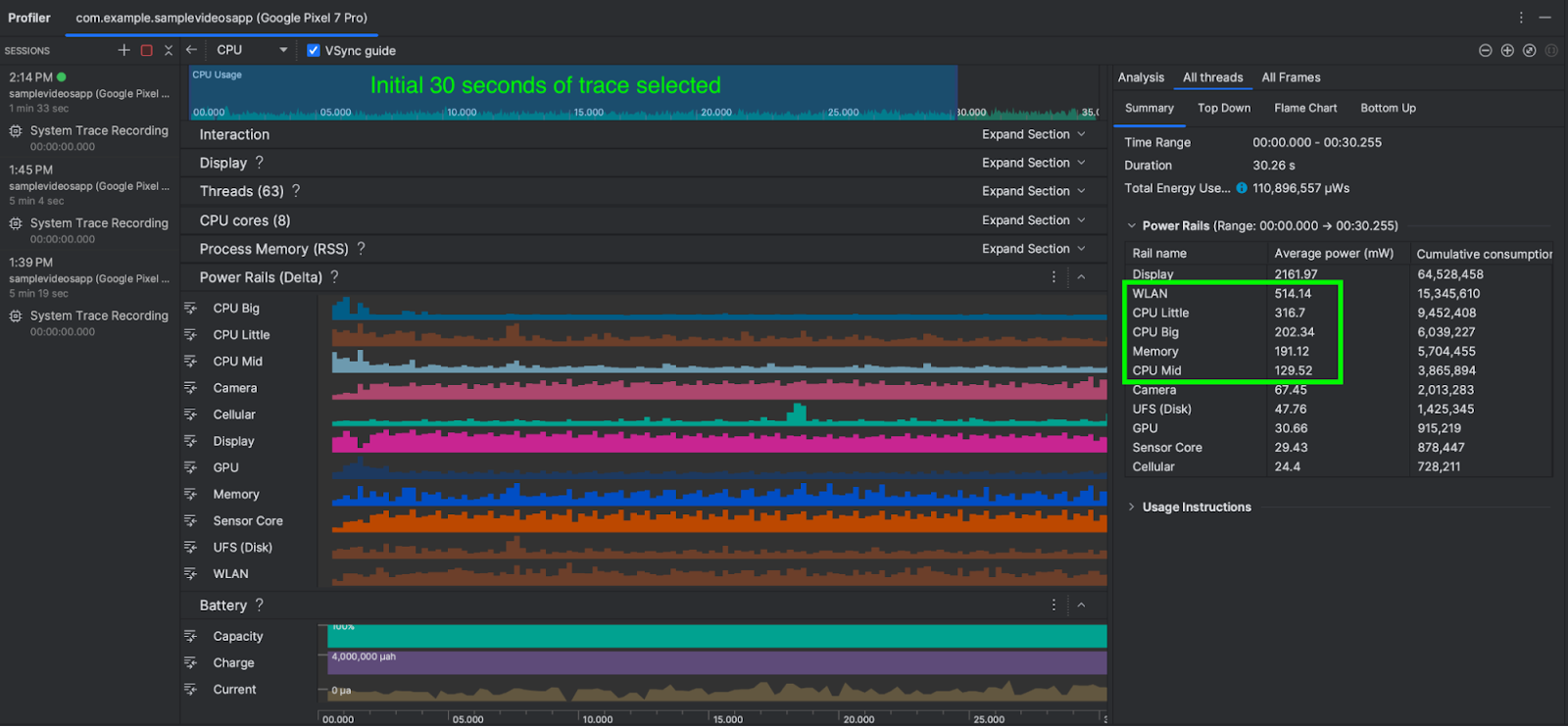

Posted by Mayank Jain - Product Manager, and Yasser Dbeis - Software Engineer; Android Studio

Posted by Mayank Jain - Product Manager, and Yasser Dbeis - Software Engineer; Android Studio

Posted by

Posted by

Posted by

Posted by

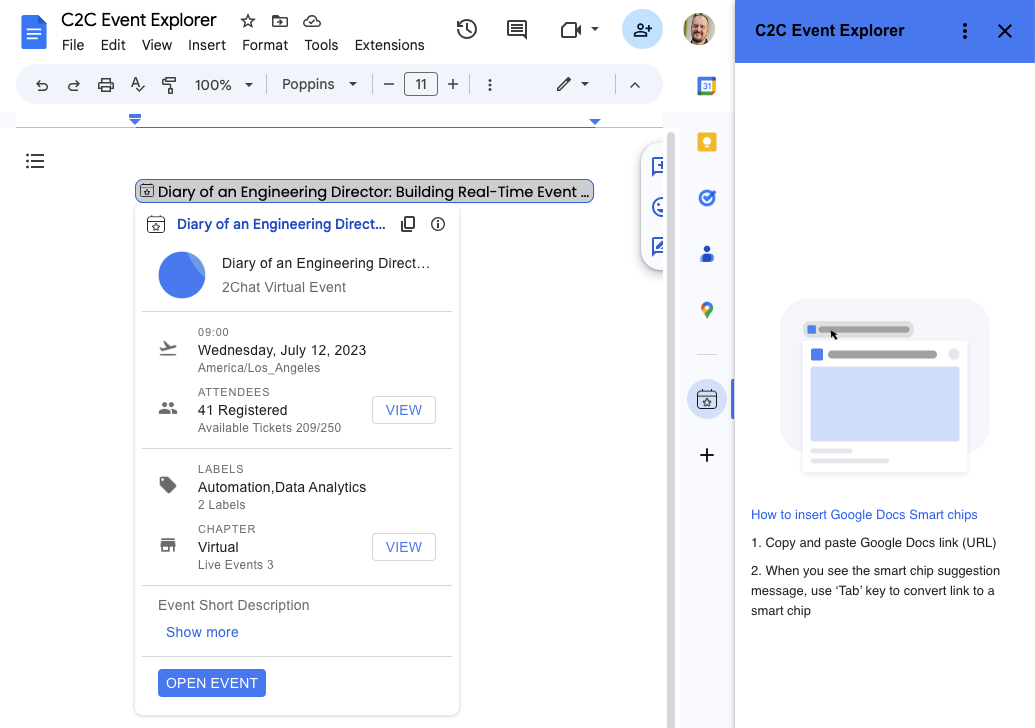

Posted by Aditya Kumar – Software Engineer

Posted by Aditya Kumar – Software Engineer

Posted by

Posted by