Hello everyone,

Thank you for making this year another incredible one! Your innovative experiences continue to inspire us and bring joy to billions. We recently celebrated some of your amazing work in our Best of 2024 awards, showcasing moments of delight across phones, large-screen devices, watches, and PCs.

This year, we shared our vision for the next phase of Play, in which Play becomes more than a store: a dynamic platform that connects people with your content when and where they need it most. To help people discover all you have to offer, truly engage with your experiences, and keep coming back for more, we’re making Play:

- A destination for discovery: Helping people find their new favorite apps and games, and the content within them

- The best place for gaming: So people can play more of the games they love across more surfaces, with exclusive rewards available only through Play Points, and

- More than a store: Where people can get relevant content from their installed apps directly on their home screen through our new Collections experience

New tools and features built in 2024

Launch with confidence

Launching a new app or update is a critical moment, and we want to make this process as smooth and successful as possible.

- Pre-review checks help you catch policy and compatibility issues before launch.

- The new quality panel gives you a centralized view of your app's quality so you can proactively find and address issues like crashes and ANRs, and see recommendations related to user experience.

- And with SDK Console, we’re connecting you with SDK owners who can alert you in Android Studio and Play Console when new versions may address quality issues or help your app or game comply with Play policies.

Accelerate your growth and deepen your engagement with users

We've made Google Play even more content-forward with a visually engaging design that helps people discover the best of what you have to offer, wherever they are.

- We integrated Gemini models to make it easier for everyone to find what they're looking for with AI-generated app review summaries, FAQs, and app highlights, providing key information at a glance.

- Seamless app discovery helps users enjoy amazing experiences across their devices. Now, when people search for apps on their phone, they'll easily discover and install relevant apps for their TV, watch, and more.

- Enhanced custom store listings give you even more ways to tailor your content. And now, with the ability to segment by search keyword, you can connect with users who are actively searching for the specific benefits your app offers. Play Console will even give you keyword suggestions.

- Deep links help you create seamless web-to-app journeys to take users directly to the content they want, right inside your app. And now, we’ve made it even easier for you to manage and experiment with these deep links in Play Console, where you can make quick changes without waiting to publish a new app release.

Optimize revenue with Google Play Commerce

We're continuing to make purchasing easier, more convenient, and more secure for over 2.5 billion users in over 190 markets.

- This year, we've helped over half a billion people get ready to make purchases by proactively encouraging them to set up payment and authentication methods in advance. With new secure biometric authentication options like fingerprint and facial recognition, checkout is now faster and more secure.

- Our extensive payment method library, which includes over 300 local forms of payment in more than 65 markets, continues to grow. This year, we added CashApp (US), Blik Banking (Poland), Pix (Brazil), and MoMo (Vietnam).

- Expanded payment options give users more ways to pay for content. Parents with Google Family setup can now approve their child's in-app purchases on any OS, not just on Android devices.

- And new subscription platform improvements, like flexible payment plans for long-term subscriptions, give users more options throughout the purchase experience, which helps drive higher conversions and new subscribers.

Reinforcing trust, safety, and security

We continue to invest in more ways to protect users, your business, and the ecosystem. This includes actively combating bad actors who try to deceive users or spread malware, and giving you tools to combat abuse.

- Google Play Protect scans 200 billion apps daily. When it finds a potentially harmful app, we let people know and may even disable particularly dangerous apps.

- Easier automatic app updates help ensure users have the latest features and improved security. Users with limited Wi-Fi access can now choose to get their app updates over mobile data, within their data budgets. We also launched a new tool that empowers you to prompt users for timely updates.

- Play Integrity API helps you detect suspicious activity so you can decide how to respond to abuse, like fraud, cheating, or data theft. Now, Play Integrity verdicts are faster, more resilient, and more privacy-friendly.

These are just the highlights. To see how we're continuously improving the experience, check out our quarterly roundup of programs and launches on The Latest.

Investing in our app and game community

We’re continuing to help app and game businesses of all sizes reach their full potential.

- This year, we’ve doubled the size of our global Indie Games Accelerator program and selected 60 game studios from around the world to participate in a 10-week program of masterclasses, workshops, and access to industry experts.

- Ten studios from across Latin America were selected to receive a share of $2 million in equity-free funding and hands-on guidance from the Google Play team as part of our Indie Games Fund.

- 500 aspiring developers in Indonesia participated in our Google Play x Unity Game Developer Training Program to build top-notch skills in game design, development, and monetization to kick-start their game development careers.

- And the ChangGoo initiative in Korea has nurtured a thriving startup ecosystem, supporting over 500 startups and attracting over KRW 147.6 billion in investments.

And with another year of #WeArePlay, we shared and celebrated the stories of 300 app and game businesses from all over the world. Take a look back at just a few of the inspiring founders we’ve featured.

Looking ahead

I’m excited about the future of Google Play as a dynamic platform that connects users with your amazing content, wherever they are.

Next year, we're going to continue helping you maximize your investments on Play by:

- Leaning into content-rich and interactive experiences for apps both within and beyond the Play Store,

- Building on our gaming destination to make it even more personalized, engaging, and part of daily routines, and

- Simplifying the payment and checkout experience for your apps and content.

Thanks again for your continued partnership and the innovation you’ve put into your apps and games. From our team to yours, happy holidays and best wishes for an amazing 2025!

Posted by Sam Bright – VP & GM, Google Play + Developer Ecosystem

Posted by Sam Bright – VP & GM, Google Play + Developer Ecosystem

Posted by Aaron Labiaga- Android Developer Relations Engineer

Posted by Aaron Labiaga- Android Developer Relations Engineer

Posted by Maru Ahues Bouza – Product Management Director, Android Developer

Posted by Maru Ahues Bouza – Product Management Director, Android Developer

Posted by

Posted by

Posted by Tram Bui, Developer Programs Engineer, Developer Relations

Posted by Tram Bui, Developer Programs Engineer, Developer Relations

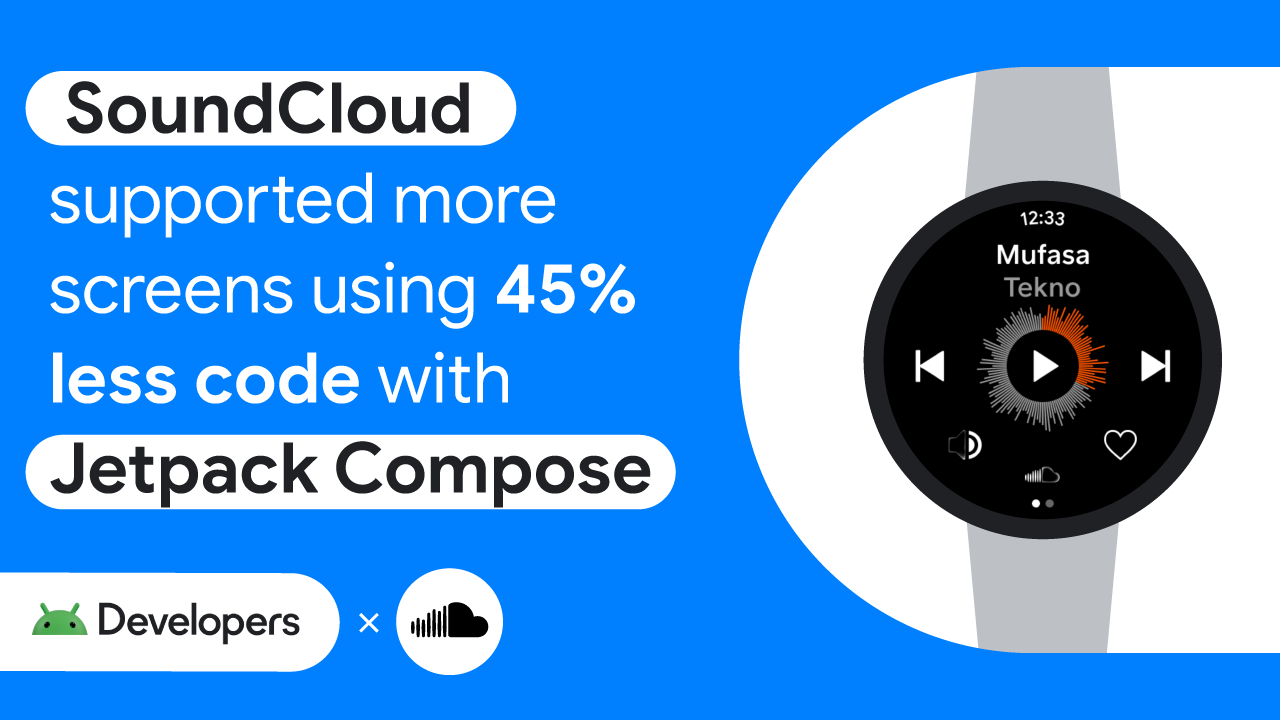

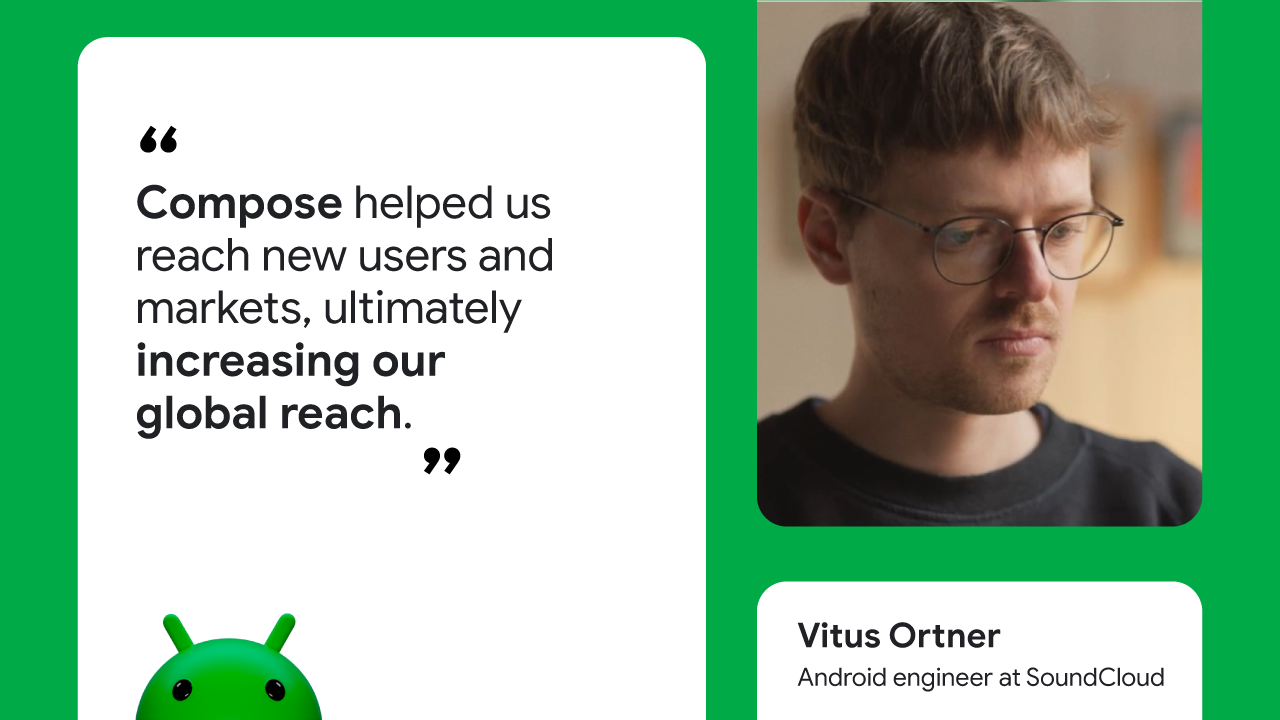

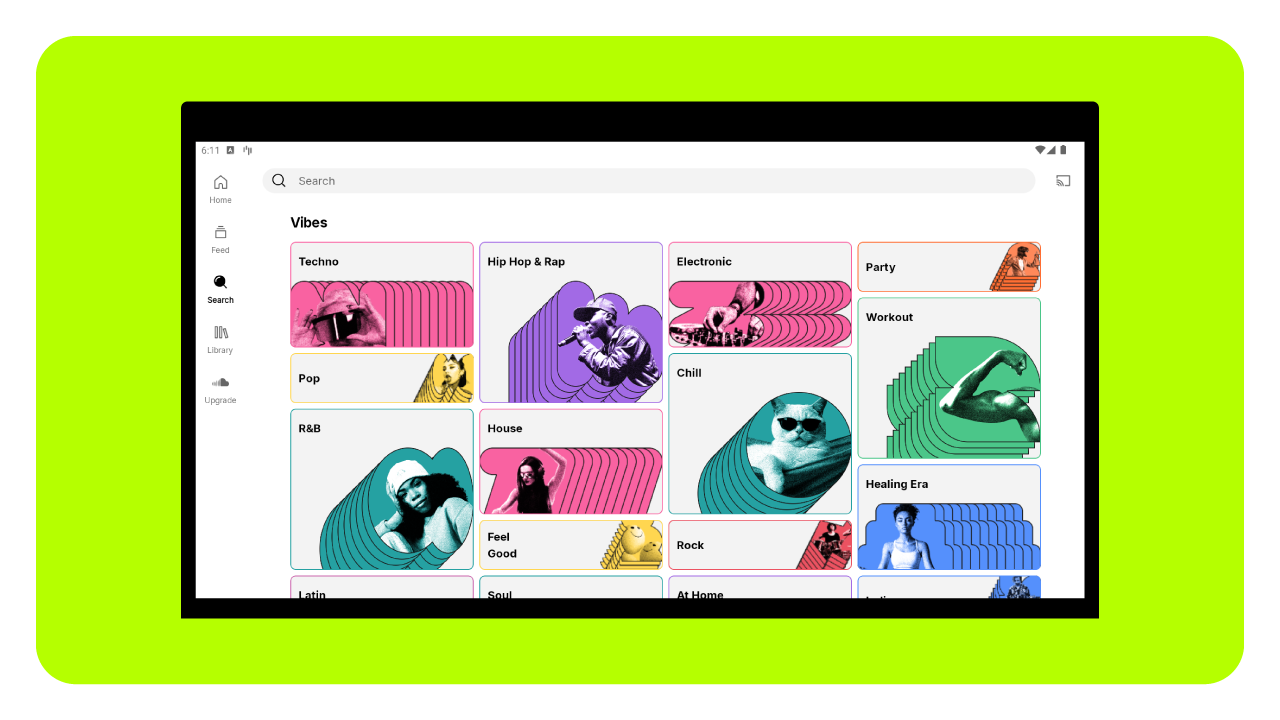

Posted by Chris Arriola, Developer Relations Engineer and Nick Butcher, Product Manager for Jetpack Compose

Posted by Chris Arriola, Developer Relations Engineer and Nick Butcher, Product Manager for Jetpack Compose

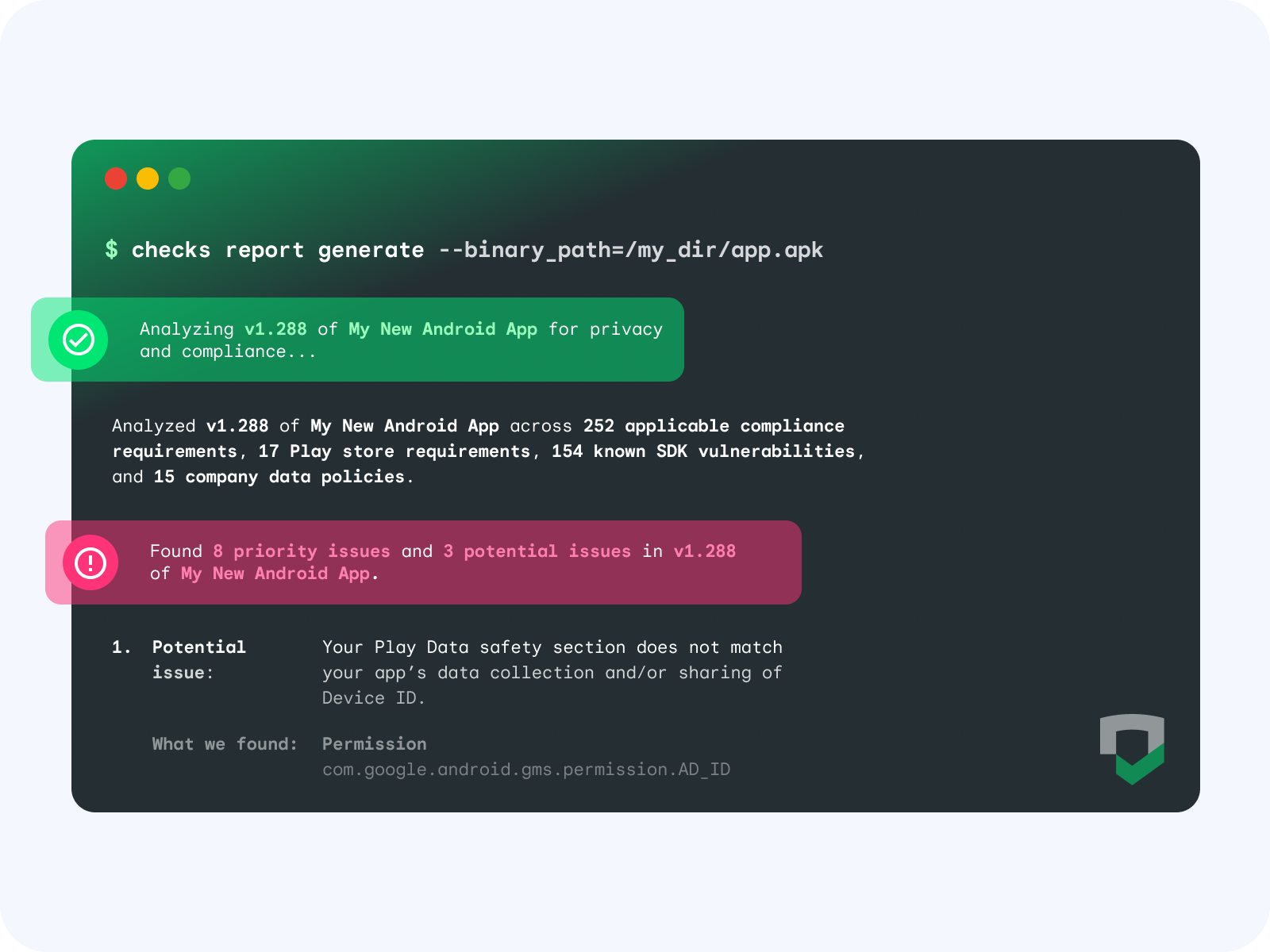

Posted by Fergus Hurley – Co-Founder & GM, Checks, and Evan Otero – Product Manager, Checks

Posted by Fergus Hurley – Co-Founder & GM, Checks, and Evan Otero – Product Manager, Checks