Posted by Lyanne Alfaro, DevRel Program Manager, Google Developer Studio

Developer Journey is a monthly series highlighting diverse and global developers sharing relatable challenges, opportunities, and wins in their journey. Every month, we will spotlight developers around the world, the Google tools they leverage, and the kinds of products they are building.

This month we speak with global developers across Google Developer Experts and Women Techmakers to learn more about their favorite Google tools, the applications they’ve built to serve diverse communities, and the role of inclusive design in their process.

Miguel Ángel Durán García

Barcelona, Spain

Content Creator & Software Engineer

What Google tools have you used to build?

I've been using Firebase, Google Cloud Platform, CrUX Dashboard, and Chrome DevTools for years. As a web developer, I'm always excited about the new features that DevTools brings to us to improve our productivity and the performance of our applications.

Which tool has been your favorite to use? Why?

Lately, I've been trying Project IDX, an entirely web-based workspace for full-stack application development, and I'm really excited about the future of this project. I love the idea of being able to develop and deploy applications from the browser, without having to install anything on my computer.

Please share with us about something you’ve built in the past using Google tools.

Most recently, I've deployed AdventJS, a holiday calendar for developers. For optimizing the images, I've used Squoosh from the GoogleChromeLabs team. To ensure the website was accessible and to tweak performance, I've used Lighthouse from Chrome DevTools. Also, I used Google Bard to translate the content of the website into English and Portuguese.

What will you create with Google Bard?

I'm planning to expand a website I've created for the Spanish-speaking community to teach JavaScript from scratch. With Google Bard, I can check the content, create some code, and make it help me create challenges for the students.

What advice would you give someone starting in their developer journey?

I would tell them to be patient and to enjoy the process. It's a long journey, but it's worth it. Also, I would tell them to be curious and avoid sticking to only a few technologies. And finally, I would tell them to share their knowledge with the community, because it's the best way to learn and meet new people. You don't need to be an expert to share your knowledge; you just need to be one step ahead of the people you're teaching.

Marian Villa

Medellín, Colombia

What Google tools have you used to build?

Development and Creativity:

- Google Chrome DevTools

- Bard

- TensorFlow.js

Productivity and Communication:

- Gmail

- Google Calendar

- Google Drive

- Google Docs

- Google Sheets

- Google Slides

- Google Meet

Marketing and Business:

- Google Ads

- Google Analytics

- Google My Business

- Google Workspace

- Google Cloud Platform

- Google Marketing Platform

Education and Learning:

- Google Classroom

- Google Forms

- Google Sites

- YouTube

Which tool has been your favorite to use? Why?

Choosing a favorite tool is quite a task given the unique strengths of Bard, TensorFlow.js, and Google Chrome DevTools, but I'd have to say that Google Chrome DevTools stands out for me. Its versatility in inspecting and debugging web pages, testing code variations, and providing insights into JavaScript behavior has been crucial in my web development endeavors. That being said, both Bard and TensorFlow.js have incredible capabilities. Bard plays a vital role in generating creative content, answering queries, and even composing code. TensorFlow.js, on the other hand, is a game-changer, enabling machine learning in JavaScript and making it accessible for a wide range of applications. Each tool has its unique appeal, and the choice will depend on the context and specific requirements of the task at hand.

Please share with us about something you’ve built in the past using Google tools.

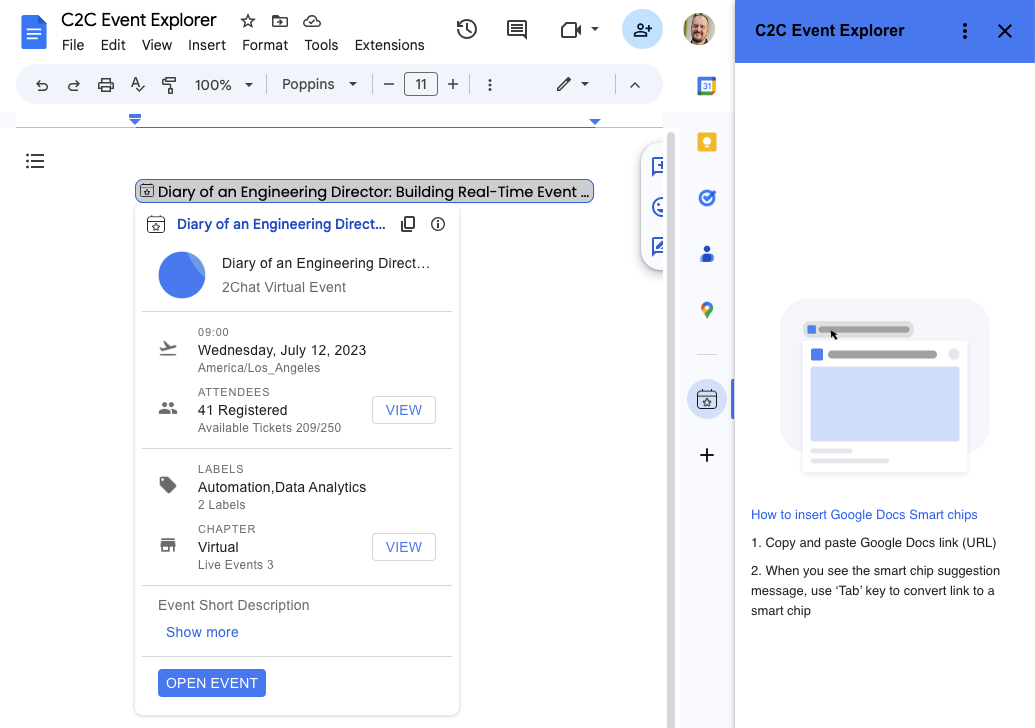

On our latest website, we use all the Google technologies at hand to enhance our image as an NGO. Find it here.

What will you create with Google Bard?

We are resuming a successful mentorship project to advance our careers as developers, so Bard and Duet AI are great allies for inspecting our code and once again creating an MVP of this product for our community.

What advice would you give someone starting in their developer journey?

First, think about the problem you want to solve, or what you want to contribute to the world, then create it and make it come true. This is easier if you rely on communities and on people who help you as mentors, sponsors, and guides.

Rubens de Almeida Zimbres

São Paulo, Brazil

ML Engineer

What Google tools have you used to build?

I’ve been using the full stack of Google products. I use Google Workspace daily, and my personal website is built on Google Sites. On Google Cloud, I started with Compute Engine and Jupyter Notebooks, customized to my needs.

As I acquired more knowledge through practical experience, Coursera, and Google Cloud Skills Boost, I started building end-to-end solutions using BigQuery, SQL, lots of Vertex AI (Generative AI Studio, Matching Engine, Speech-to-Text, Pipelines, AutoML, Model Fine-Tuning), Cloud Run (and a little GKE - Kubernetes), Cloud Functions, Dialogflow, and Document AI.

Because client requirements vary by industry, such as recruiting (Virtual Career Center) and contact centers (Contact Center AI), I was able to test different Google products and deploy them in production to meet the clients’ needs.

Which tool has been your favorite to use? Why?

Vertex AI is my favorite, as it is optimized purely for ML and Deep Learning. Using AutoML with NAS (Neural Architecture Search) was a very interesting experience with awesome results. Developing Machine Learning pipelines with Kubeflow is a special pleasure, as this work goes into production and the whole MLOps cycle is involved.

Please share with us about something you’ve built in the past using Google tools.

I’ve built a recruiting solution that was implemented in six countries of Latin America, benefiting more than 365,000 people. This solution automatically analyzes resumes using OCR via Document AI.

I delivered a revenue prediction model for a hotel chain using TensorFlow, where we increased the accuracy of the client’s model by 0.95%. I also built a Contact Center solution that uses Google Speech-to-Text and analytics to make management easier and to generate strategic insights.

Lately, I was part of the team that delivered an end-to-end Virtual Career Center solution that matches job candidates to job vacancies using Vertex AI Matching Engine via text embeddings and ScaNN. Both the recruiting solution and the contact center solution generated patents in Brazil, in the field of NLP (Natural Language Processing).
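As a rough illustration of the embedding-matching idea mentioned above, the sketch below ranks job vacancies by cosine similarity to a candidate's embedding. It is not the solution described in the interview: the three-dimensional vectors, the vacancy names, and the helper functions `cosine_similarity` and `top_matches` are all hypothetical, and a brute-force scan stands in for an approximate nearest-neighbor index such as ScaNN or Vertex AI Matching Engine.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_matches(candidate_vec, vacancy_vecs, k=2):
    """Brute-force search: score every vacancy and keep the k most similar."""
    scored = [
        (name, cosine_similarity(candidate_vec, vec))
        for name, vec in vacancy_vecs.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy embeddings (assumed values); a real system would obtain these from a text embedding model.
VACANCIES = {
    "ml_engineer": [0.9, 0.1, 0.0],
    "data_analyst": [0.6, 0.4, 0.1],
    "graphic_designer": [0.0, 0.2, 0.9],
}
CANDIDATE = [0.8, 0.2, 0.0]

if __name__ == "__main__":
    for name, score in top_matches(CANDIDATE, VACANCIES):
        print(f"{name}: {score:.3f}")
```

A brute-force scan like this compares the candidate against every vacancy, which is fine for a handful of items; approximate nearest-neighbor indexes exist to keep lookups fast when the collection is much larger.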

What will you create with Google Bard?

Google Bard is part of my daily routine. It helps me while coding, and it helps me plan trips, find the right public transportation, and visit interesting places around the world. It also helps by retrieving Google Search results in an organized way, with updated content. My idea is to use Bard along with LangChain to perform optimizations in the finance industry.

What advice would you give someone starting in their developer journey?

Learn the basics first.

The temptation to jump straight into a magnificent field such as Machine Learning is gigantic, but coding is a great part of the solution. Learn to code properly, in whatever language you want. This brings efficiency and security if your solution needs to scale, decreasing infrastructure costs and improving user experience.

The same applies to Machine Learning: learn basic disciplines such as Calculus and Computer Science fundamentals, and you will understand most of the content that is shared online today. Only after learning ML should you dive into Deep Learning and the associated disciplines. Don’t fake it. Make it.
