Last week, we released Gemini 1.0 Ultra in Gemini Advanced. You can try it out now by signing up for a Gemini Advanced subscription. The 1.0 Ultra model, accessible via the Gemini API, has seen a lot of interest and continues to roll out to select developers and partners in Google AI Studio.

Today, we’re also excited to introduce our next-generation Gemini 1.5 model, which uses a new Mixture-of-Experts (MoE) approach to improve efficiency. It routes your request to a group of smaller "expert” neural networks so responses are faster and higher quality.

Developers can sign up for our Private Preview of Gemini 1.5 Pro, our mid-sized multimodal model optimized for scaling across a wide-range of tasks. The model features a new, experimental 1 million token context window, and will be available to try out in Google AI Studio. Google AI Studio is the fastest way to build with Gemini models and enables developers to easily integrate the Gemini API in their applications. It’s available in 38 languages across 180+ countries and territories.

1,000,000 tokens: Unlocking new use cases for developers

Before today, the largest context window in the world for a publicly available large language model was 200,000 tokens. We’ve been able to significantly increase this — running up to 1 million tokens consistently, achieving the longest context window of any large-scale foundation model. Gemini 1.5 Pro will come with a 128,000 token context window by default, but today’s Private Preview will have access to the experimental 1 million token context window.

We’re excited about the new possibilities that larger context windows enable. You can directly upload large PDFs, code repositories, or even lengthy videos as prompts in Google AI Studio. Gemini 1.5 Pro will then reason across modalities and output text.

- Upload multiple files and ask questions

- Query an entire code repository

- Add a full length video

We’ve added the ability for developers to upload multiple files, like PDFs, and ask questions in Google AI Studio. The larger context window allows the model to take in more information — making the output more consistent, relevant and useful. With this 1 million token context window, we’ve been able to load in over 700,000 words of text in one go.

The large context window also enables a deep analysis of an entire codebase, helping Gemini models grasp complex relationships, patterns, and understanding of code. A developer could upload a new codebase directly from their computer or via Google Drive, and use the model to onboard quickly and gain an understanding of the code.

Gemini 1.5 Pro can help developers boost productivity when learning a new codebase. [Video sped up for demo purposes]

Gemini 1.5 Pro can also reason across up to 1 hour of video. When you attach a video, Google AI Studio breaks it down into thousands of frames (without audio), and then you can perform highly sophisticated reasoning and problem-solving tasks since the Gemini models are multimodal.

Gemini 1.5 Pro can perform reasoning and problem-solving tasks across video and other visual inputs. [Video sped up for demo purposes]

More ways for developers to build with Gemini models

In addition to bringing you the latest model innovations, we’re also making it easier for you to build with Gemini:

- Easy tuning. Provide a set of examples, and you can customize Gemini for your specific needs in minutes from inside Google AI Studio. This feature rolls out in the next few days.

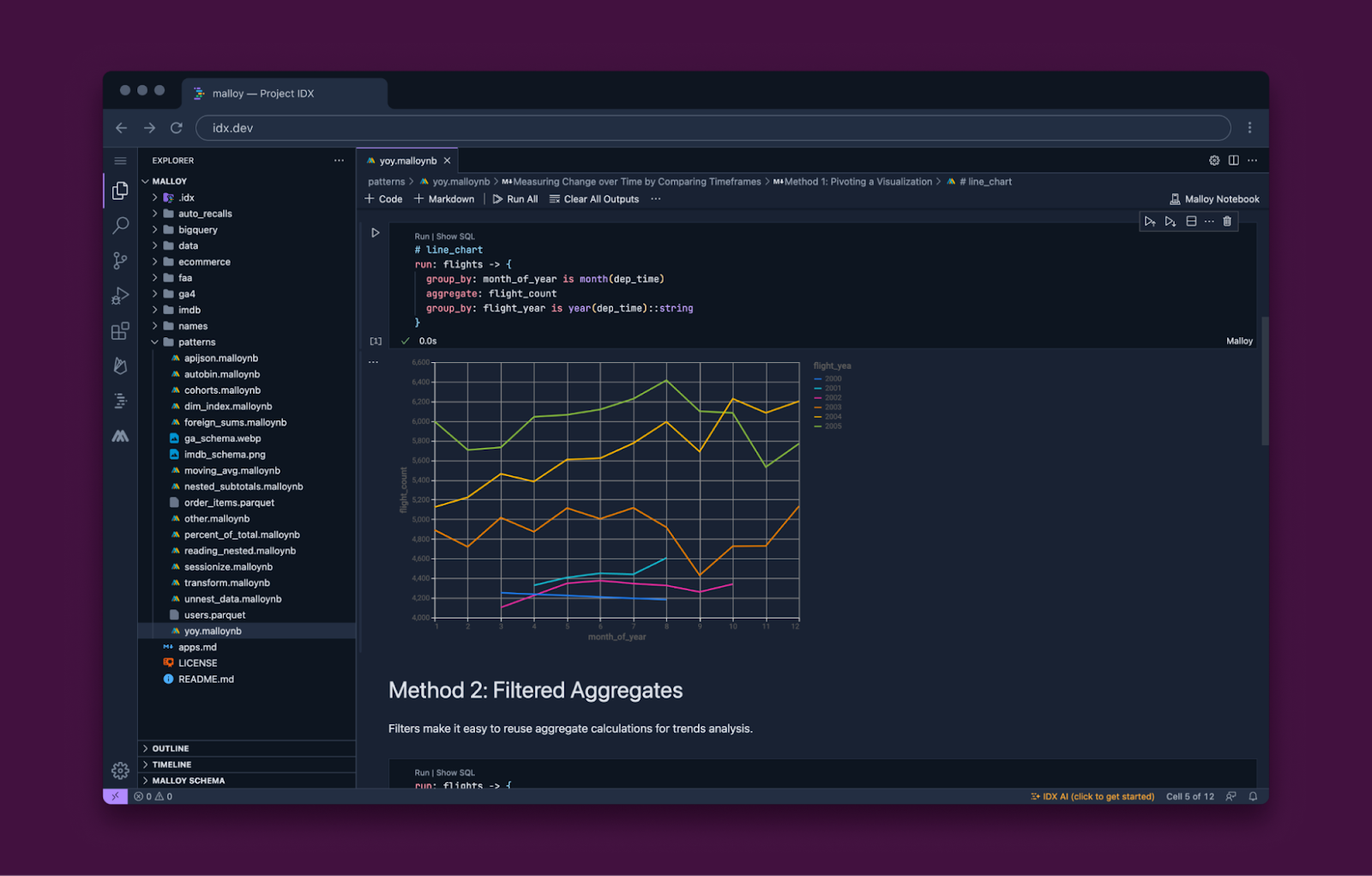

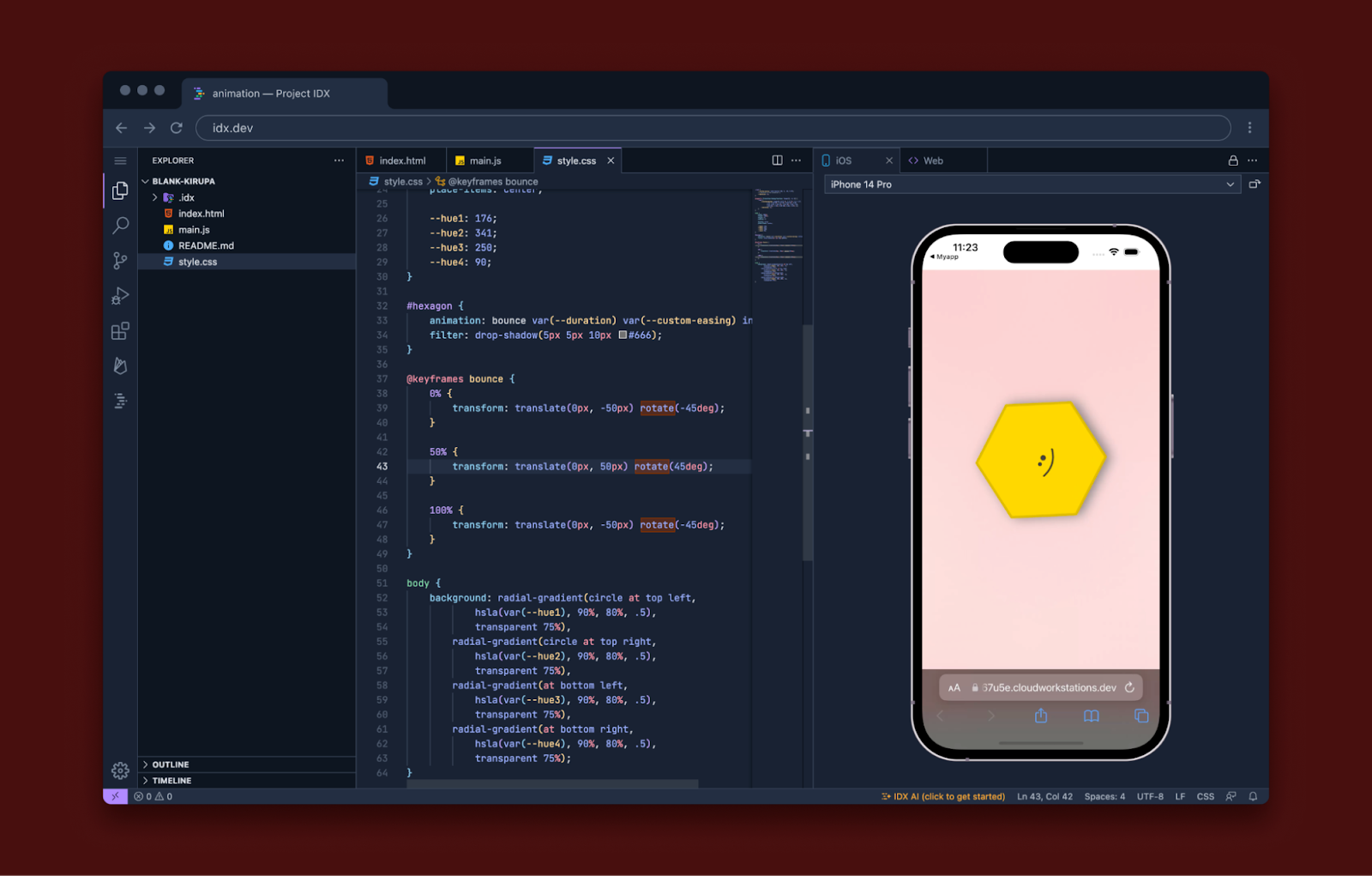

- New developer surfaces. Integrate the Gemini API to build new AI-powered features today with new Firebase Extensions, across your development workspace in Project IDX, or with our newly released Google AI Dart SDK.

- Lower pricing for Gemini 1.0 Pro. We’re also updating the 1.0 Pro model, which offers a good balance of cost and performance for many AI tasks. Today’s stable version is priced 50% less for text inputs and 25% less for outputs than previously announced. The upcoming pay-as-you-go plans for AI Studio are coming soon.

Since December, developers of all sizes have been building with Gemini models, and we’re excited to turn cutting edge research into early developer products in Google AI Studio. Expect some latency in this preview version due to the experimental nature of the large context window feature, but we’re excited to start a phased rollout as we continue to fine-tune the model and get your feedback. We hope you enjoy experimenting with it early on, like we have.

Posted by Jaclyn Konzelmann and Wiktor Gworek – Google Labs

Posted by Jaclyn Konzelmann and Wiktor Gworek – Google Labs

Posted by the IDX team

Posted by the IDX team

Posted by

Posted by