Posted by Matthew Izatt, Product Lead, Google Workspace Platform

For millions of our customers, 2022 brought an abundance of change in the way they connect, collaborate, and get things done. Frontline workers at customers like Globe Telecom and general contractor BHI benefited from digital transformation on all fronts by quickly getting the apps they needed to do their jobs in the field. Office and remote workers, meanwhile, adjusted to hybrid work by using ready-made tools from partners like DocuSign and Asana or by building custom desk-booking applications.

2022 was also a year of growth. Google Workspace now has more than 3 billion users and over 8 million paying customers across the globe. And the Google Workspace Marketplace passed a lifetime milestone of driving more than 5 billion app installs. To wrap up a year marked by so much change, we’ve recapped some of the biggest updates that make Google Workspace the most open and extensible platform for users, customers, and developers alike.

1. Build software with more agility with our DevOps integrations

Google Workspace gives you real-time visibility into project progress and decisions to help you ship quality code fast and stay connected with your stakeholders, all without switching tools and tabs. By leveraging integrated applications from our partners, you can pull valuable information out of silos, making collaborating on requirements docs, code reviews, bug triage, deployment updates, and monitoring operations easy for the whole team. This year we partnered with popular DevOps tools to help you do your job better:

- Asana: Plan and execute together. With Asana integrations, you can coordinate and manage everything from daily tasks to cross-functional strategic initiatives.

- GitHub: Teams can quickly push new commits, make pull requests, do code reviews, and provide real-time feedback that improves the quality of their code—all from Google Chat.

- Jira: Accelerate the entire QA process in the development workflow. The Jira for Google Chat app acts as a team member in the conversation, sending new issues and contextual updates as they are reported to improve the quality of your code and keep everyone informed on your Jira projects.

- PagerDuty: Enables developers, DevOps, IT operations, and business leaders to prevent and resolve business-impacting incidents for an exceptional customer experience—all from Google Chat.

This year we launched the Google Workspace Developer Preview Program to give you access to new APIs and keep you up to date with the latest changes on the Google Workspace platform. Features in developer preview have already completed early development phases, so they're ready for implementation. This program gives you the chance to shape the final stages of feature development with feedback, get pre-release support, and have your integration ready for public use on launch day. Apply to the Developer Preview Program today.

For Google Chat, this year we announced that you can programmatically create new spaces and add members on behalf of users via the Google Chat API. These latest additions to the Chat API unlock some sought-after scenarios for developers looking for new ways to extend Chat. For example, PagerDuty leveraged the API as part of their PagerDuty for Google Chat app. The app lets the incident team isolate and focus on the problem at hand without having to set up a new space by hand, and without distracting anyone in the current space who isn’t part of the resolution team for that incident. All of this happens seamlessly through PagerDuty for Chat as part of the natural flow of working with Google Chat.

PagerDuty for Google Chat keeps the business up to date on service-impacting incidents.
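To make the incident scenario above more concrete, here is a minimal Python sketch of the two REST requests involved: one to create a space and one to add a member. It only builds the request descriptions and sends nothing. The helper names, the incident title, and the placeholder space and user IDs are hypothetical, and the endpoint paths and body fields reflect our reading of the Chat API reference, so check them against the current documentation before use. A real call would also need an OAuth token authorized by the user.

```python
import json

CHAT_API_BASE = "https://chat.googleapis.com/v1"


def build_create_space_request(display_name):
    """Describe the HTTP call that would create a named Chat space."""
    return {
        "method": "POST",
        "url": f"{CHAT_API_BASE}/spaces",
        "body": {"spaceType": "SPACE", "displayName": display_name},
    }


def build_add_member_request(space_name, user_id):
    """Describe the HTTP call that would add one human user to a space.

    space_name is the resource name returned when the space is created,
    for example "spaces/AAAA" (a placeholder here).
    """
    return {
        "method": "POST",
        "url": f"{CHAT_API_BASE}/{space_name}/members",
        "body": {"member": {"name": f"users/{user_id}", "type": "HUMAN"}},
    }


def plan_incident_space(incident_title, space_name, responder_ids):
    """Return the ordered list of requests for a dedicated incident space."""
    requests = [build_create_space_request(incident_title)]
    requests += [build_add_member_request(space_name, uid) for uid in responder_ids]
    return requests


if __name__ == "__main__":
    # Hypothetical incident and IDs, for illustration only.
    plan = plan_incident_space("Incident: checkout latency", "spaces/EXAMPLE", ["1001", "1002"])
    print(json.dumps(plan, indent=2))
```

Keeping the request construction separate from the HTTP transport makes the payloads easy to inspect and unit test before wiring in authentication.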

We are adding functionality to Chat apps so developers can soon add widgets like a date-time picker, or design their layout with multiple columns to make better use of space. We believe these new layout options will open more ways for developers to build engaging apps for users. To help users find and learn more about apps, we’ve added “About pages” for apps and made apps discoverable in the compose bar in Chat. Apply to our Developer Preview Program to get early access to the Google Chat APIs.
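As a rough illustration of what such a layout might look like, the sketch below builds a Chat card message with two columns, one of which holds a date-time picker. The function name, card ID, and label text are hypothetical. The field names (`cardsV2`, `columns`, `columnItems`, `dateTimePicker`) follow the card message format as we understand it, and because these widgets were in developer preview, the exact shape may differ from what ships.

```python
import json


def build_scheduling_card(card_id, picker_name, picker_label):
    """Build a card message with a two-column section.

    The left column carries explanatory text; the right column carries a
    date-time picker whose value is submitted under picker_name.
    """
    left_column = {
        "widgets": [{"textParagraph": {"text": "Pick a time for the review:"}}]
    }
    right_column = {
        "widgets": [
            {
                "dateTimePicker": {
                    "name": picker_name,
                    "label": picker_label,
                    "type": "DATE_AND_TIME",
                }
            }
        ]
    }
    return {
        "cardsV2": [
            {
                "cardId": card_id,
                "card": {
                    "header": {"title": "Schedule review"},
                    "sections": [
                        {"widgets": [{"columns": {"columnItems": [left_column, right_column]}}]}
                    ],
                },
            }
        ]
    }


if __name__ == "__main__":
    # Hypothetical identifiers, for illustration only.
    print(json.dumps(build_scheduling_card("review-card", "review_time", "Review time"), indent=2))
```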

We also announced new functionality for app developers to leverage the Google Meet video conferencing product through our new Meet Live Sharing API. Users can now come together and share experiences with each other inside an app, such as streaming a TV show, queuing up videos to watch on YouTube, collaborating on a music playlist, joining in a dance party, or working out together through Google Meet. If you want to try out the APIs, you can apply for access through the Developer Preview Program.

3. Connect your customers with critical information with Smart Chips for Google Docs

We expanded smart chips to our ecosystem of partners, allowing our users to add even more rich data, more context, and critical information right into the flow of their work. With these new third-party smart chips, you will be able to tag and see critical information from partner applications using @-mentions, and easily insert interactive information and previews from third-party apps directly into a Google Doc. Several of our partners, including AODocs, Atlassian, Asana, Figma, Miro, Tableau, and Zendesk, are now developing third-party smart chips to add more value to your Google Docs experience. Smart chips will be available to developers to build out their app integrations in 2023.

Smart Chips will be available to third-party developers in 2023.

4. Grow your business with the Recommended for Google Workspace program

Each year, we evaluate the apps on Google Workspace Marketplace and recommend a select number that are enhancing the Google Workspace experience and helping people work in powerful new ways. Each app is reviewed by both Google and an independent third-party security firm to ensure it meets our highest integration and security standards. Here is the 2022 selection of Recommended for Google Workspace apps: AODocs, Copper, Dialpad, DocuSign, LumApps, Mailmeteor, Miro, RingCentral, Sheetgo, Signeasy, Supermetrics, and Yet Another Mail Merge. Our application for Recommended for Google Workspace is now open; apply today.

Become a Recommended for Google Workspace app for 2023. Apply today.

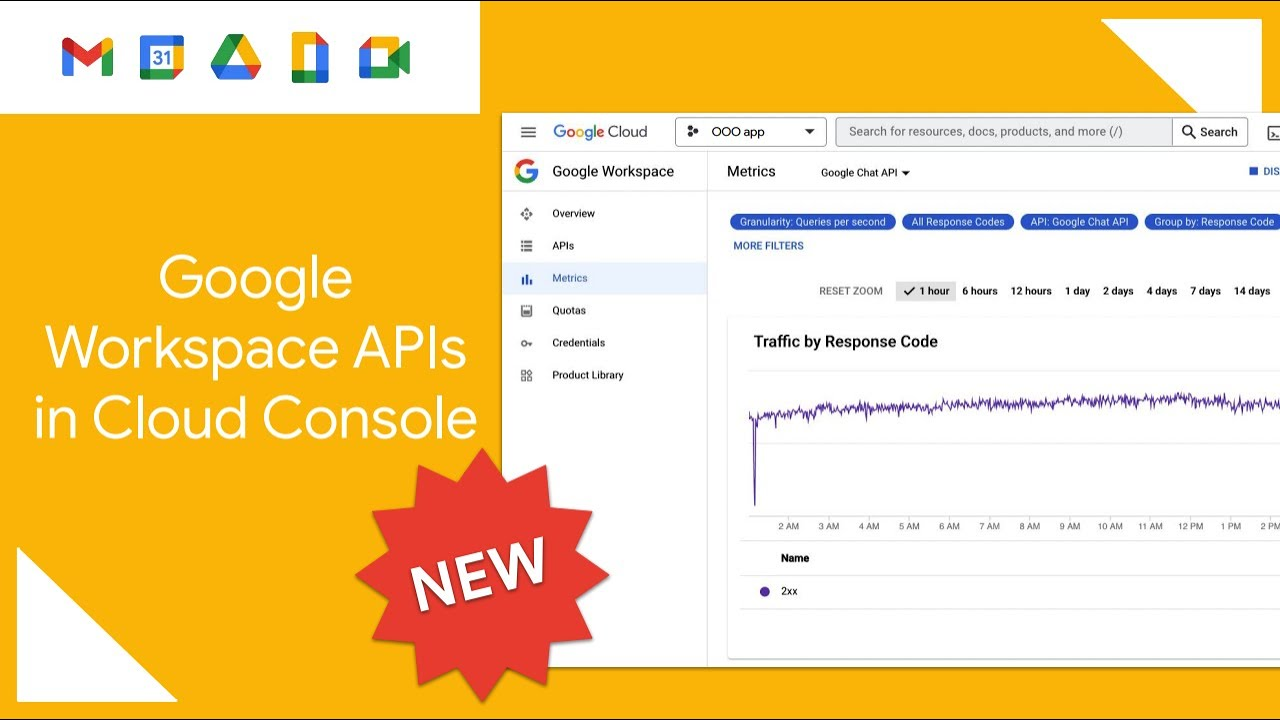

We recently added a unified way to access Google Workspace APIs through the Google Cloud Console, including the APIs for Gmail, Google Drive, Docs, Sheets, Chat, Slides, Calendar, and many more. From there, you now have a central location to manage all your Google Workspace APIs and view aggregated metrics for the APIs in use. Watch this how-to video to get started.

Developers can now manage their Google Workspace APIs from within the Google Cloud Console.

The new Google Forms API joins the large family of APIs available to developers under Google Workspace. The Forms API provides programmatic access for managing forms and acting on responses, empowering developers to build powerful integrations on top of Forms. Watch this introduction to the Google Forms API to get started.

The new Google Forms API allows you to programmatically create and manage Forms.
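To give a feel for what creating and managing a form programmatically involves, here is a small Python sketch that builds two request descriptions: one that creates a form with a title, and one that adds a short-answer question through a batch update. Nothing is sent over the network. The helper names, form title, and form ID are hypothetical, and the endpoints and body fields reflect our understanding of the Forms API reference, so treat this as a starting point rather than a definitive integration.

```python
import json

FORMS_API_BASE = "https://forms.googleapis.com/v1"


def build_create_form_request(title):
    """Describe the HTTP call that would create a new, empty form."""
    return {
        "method": "POST",
        "url": f"{FORMS_API_BASE}/forms",
        "body": {"info": {"title": title}},
    }


def build_add_text_question_request(form_id, question_title, index=0, required=True):
    """Describe the batch update that would insert a short-answer question.

    form_id is the ID returned when the form is created; index is the
    position of the new item within the form.
    """
    item = {
        "title": question_title,
        "questionItem": {
            "question": {"required": required, "textQuestion": {}}
        },
    }
    return {
        "method": "POST",
        "url": f"{FORMS_API_BASE}/forms/{form_id}:batchUpdate",
        "body": {
            "requests": [{"createItem": {"item": item, "location": {"index": index}}}]
        },
    }


if __name__ == "__main__":
    # Hypothetical title and form ID, for illustration only.
    print(json.dumps(build_create_form_request("Desk booking feedback"), indent=2))
    print(json.dumps(build_add_text_question_request("FORM_ID", "What could be better?"), indent=2))
```

Creation and content changes are split into two calls here on the assumption that questions are added by a follow-up batch update rather than in the create request itself.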

Google Apps Script is a low-code, cloud-based JavaScript development environment for Google Workspace that makes it easy for anyone to build custom business solutions across several Google products. This year we completed the updates for our new IDE v2, which offers a more modern and simplified development experience and makes it quicker and easier to build solutions that make Google Workspace apps more useful for your organization.

If you are new to Apps Script, figuring out where to begin can be a hurdle. This year we released 10 new sample solutions, bringing the total to more than 30! From data analysis to automated emails, you’ll find sample solutions to get you started quickly.

AppSheet is Google’s platform for building no-code custom apps and workflows to automate business processes. It lets app creators build and deploy end-to-end apps and automations without writing code.

The new Apps Script connector for AppSheet, launched this year, ties everything together: AppSheet, Apps Script, Google Workspace, and Google Workspace’s many developer APIs. This integration lets no-code app developers using AppSheet greatly extend the capabilities of their no-code apps by allowing them to call and pass data to Apps Script functions. One way to think about this integration is that it bridges no-code (AppSheet) with low-code (Apps Script).

AppSheet databases, announced in preview this year, give professional and citizen developers a built-in, easy-to-use, first-party database for creating and securely managing their data. Get started and try AppSheet for free.

AppSheet databases are now available in preview.

This year, we introduced our dedicated YouTube channel for Google Workspace Developers. The channel serves as an ever-growing collection of our most helpful videos, allowing developers of all skill levels and interests to learn about building solutions with Google Workspace.

Our new YouTube channel for Google Workspace developers has dozens of how-to videos for you.

Community building is one of the most effective ways to support developers, which is why we created Google Cloud Innovators. This new community program was designed for developers and technical practitioners using Google Cloud, and everyone is welcome. In 2022, we kicked off the inaugural Innovators Hive, a live, interactive, and virtual event for our global Innovators community. Hive offered rich technical content presented by Champion Innovators and Google engineering leaders. Become a Google Cloud Innovator today.

The Google Cloud Innovators program is open to all levels of creators and developers.

Learn about the latest innovations and discover how developers can integrate and extend Google Workspace. Here are a few of my favorite sessions from I/O:

- The cloud built for developers: Learn how Google Cloud and Workspace teams are building cloud services to help developers and technologists create transformative applications.

- Learn how to enable shared experiences across platforms with Google Meet: Explore how to enable shared experiences across platforms (Android, iOS, web).

- What's new in the world of Google Chat apps: Integrate services with Google Chat, explore visual improvements to Google Chat apps, and discover new Google Chat API features.

- Extending Google Workspace with AppSheet’s no-code platform and Apps Script: Learn how to configure the new Apps Script Connector in your AppSheet apps.


Watch on-demand videos from our biggest Cloud event of the year and learn from product experts and partners to level up your skills.

- Top 10 Cloud Technology Predictions: Watch our top 10 cloud technology predictions for the future based on industry trends we're seeing, customer usage patterns, and some exciting demos we've got lined up.

- What’s next in productivity and hybrid work: See how Google Workspace is helping organizations collaborate, create and innovate securely from anywhere, anytime.

- Create modern applications for Google Workspace: Learn about the extensibility opportunities Google Workspace brings to your organization and how it can be instrumental in adding business value.

- Better together: Google Cloud and Google Workspace: Learn how to empower IT teams, developers, knowledge workers and frontline workers with Google Workspace and Google Cloud.

- How to modernize your frontline workforce w/ Google Workspace: Improve collaboration easily, train employees quickly and effectively, and automate simple workflows and processes with Google Workspace.

We also held our inaugural Google Workspace Developer Summit series in Paris and London. It was an amazing time meeting developers and IT teams from customers and partners across the EMEA region. Watch out for a summit near you in 2023 to learn more about the latest development features for Google Workspace from our Developer Advocates and build connections with the developer community. Subscribe to our newsletter to get notified.

Developers gather at Google Paris for the Google Workspace Developer Summit.

2022 Wrap-up

Thank you for helping make 2022 a great year for the Google Workspace developer community. We look forward to announcing more innovations and having more conversations with you in 2023. To keep track of all the latest announcements and developer updates for Google Workspace, please subscribe to our monthly newsletter. Happy holidays and a peaceful New Year!

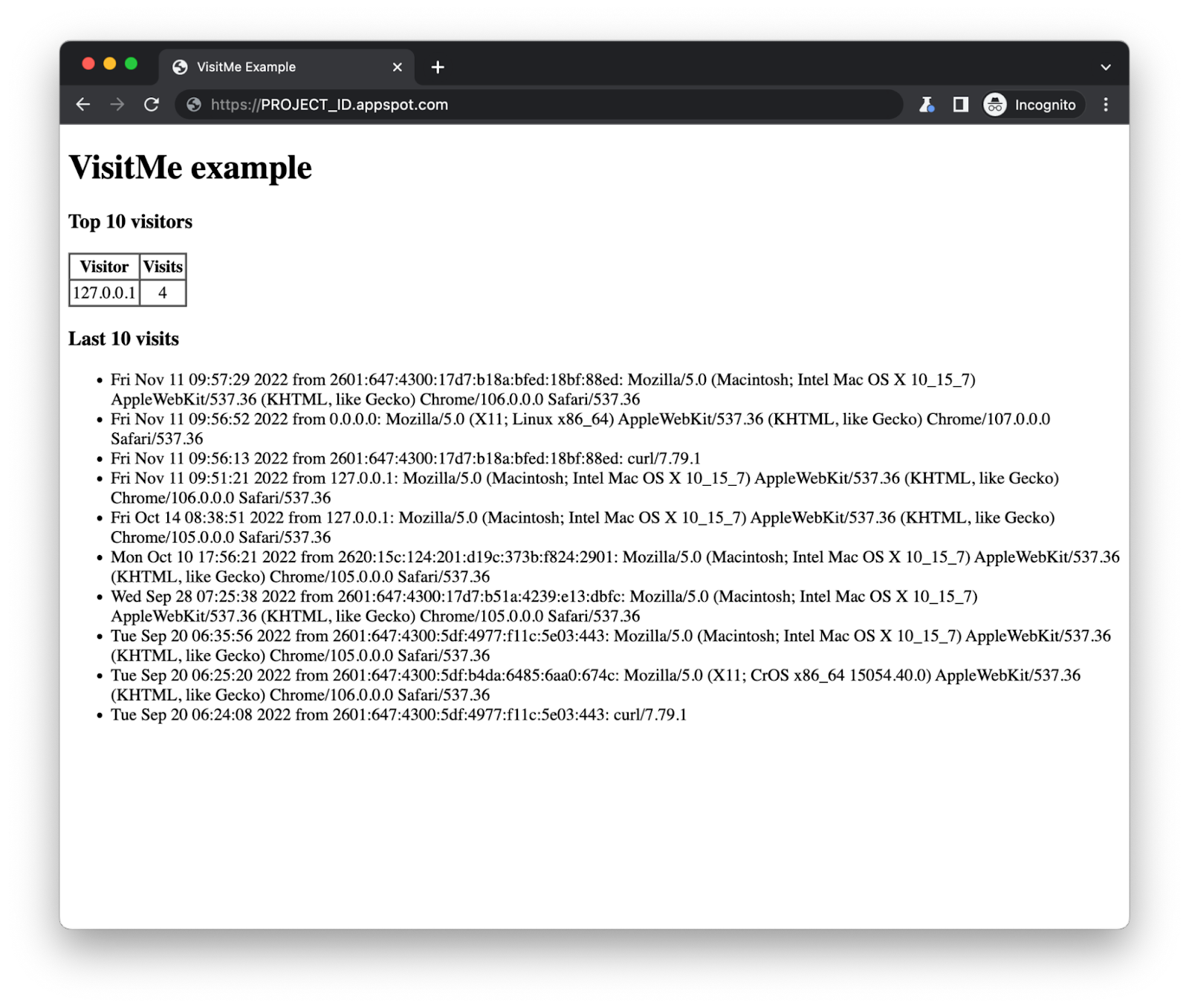
