Google for Games is coming to GDC in San Francisco! Join us on March 19 for the Game Developers Conference (GDC) at the Moscone Center, where game developers from across the world will gather to learn, network, problem-solve, and help shape the future of the industry. From March 18 to March 22, experience our comprehensive suite of multi-platform game development tools and explore the new features from Play Pass at the West Hall, Level 2 Lobby.

This year, we’re proud to host eight sessions for developers, designers, business and marketing teams, and everyone else in the gaming community interested in growing their game business. Take a look at this year’s sessions below, and if you’re interested in learning more about topics from Google Play and Android, check out key product updates from the Google for Games Developer Summit.

Scaling your game development

We’re hosting three sessions designed to help scale your game development using tools from Firebase, Android, and Google Cloud. Learn more about building high quality games with case studies from industry experts.

Beyond "Set and Forget": Advanced Debugging with Firebase Crashlytics

Tuesday, March 19, 9:30 am - 10:00 am

Crashlytics has added a number of features that make detecting, tracking, and understanding bugs even easier, from high-level to native code. Take your fixes to another level with native stack traces, memory debugging, issue annotation, and the ability to log uncaught exceptions as fatal.

Enhancing Game Performance: Vulkan and Android Adaptability Technology

Tuesday, March 19, 10:50 am - 11:50 am

Learn how to leverage the Vulkan graphics API to improve your graphics quality or performance, including performance tuning with dynamic upscaling. Find out how the Android Dynamic Performance Framework (ADPF) can enhance game performance and power in Unity and native C++, with easy integration through the Unreal Engine plugin. We're also sharing how NCSoft Lineage W improved thermal status and performance using ADPF.

Creating a global-scale game with Google Cloud

Tuesday, March 19, 4:40 pm - 5:10 pm

This session will cover the best of Google Cloud's open source projects (Agones, Open Match, and more) and products (GKE, Spanner, Anthos Service Mesh, Cloud Build, Cloud Deploy, and more) to teach you how to build, deploy, and scale world-scale multiplayer games with Google Cloud.

Increasing user engagement

We’re hosting two sessions designed to help you increase engagement by creating dynamic gameplay experiences using generative AI and expanding opportunities on Google Play to grow your community of players with exclusive rewards.

Reimagine the Future of Gaming with Google AI

Tuesday, March 19, 10:50 am - 11:50 am

In our keynote session, senior executives from Google Cloud, Google Play, and Labs will share their unique perspectives on generative AI in the gaming landscape. Learn more about cutting-edge AI solutions from Google Cloud, Android, Google Play, and Labs designed to simplify game development, publishing, and business operations, plus actionable strategies to leverage AI for faster development, better player experiences, and sustainable growth.

Grow your community of loyal gamers with Google Play

Tuesday, March 19, 1:20 pm - 1:50 pm

In this session, we’ll cover new features and insights from Google Play to create rewarding experiences for gamers using Play Pass, Play Points, and Play Games Services. Get a behind-the-scenes look at how Google Play rewards a growing community of passionate gamers, and how to use this to super-charge your business.

Maximizing reach across screens

These sessions, from Google Play, Android, and Flutter, introduce ways to expand your mobile games to PC. Learn about the latest tools that will help you accelerate growth across large screens.

Bringing more users to your Google Play Games on PC game

Tuesday, March 19, 2:10 pm - 2:40 pm

Join us for an overview of Google Play Games on PC, how it has grown in the past year, and a walkthrough of how to optimize and attribute your PC advertisements for your Google Play Games on PC titles. Learn how to use Google Play Games to increase your reach and acquisition of PC users for your mobile game, as well as how to effectively use the Google Play Install Referrer API to attribute and optimize your ads across mobile and PC.

Android input on desktop: How to delight your users

Tuesday, March 19, 3:00 pm - 3:30 pm

Give your players a first-class gaming experience with our best practices for handling input between mobile and PC games, including technical details on how to implement these best practices across mobile, tablets, Chromebooks, and Windows PCs¹. Learn how Android handles keyboard, mouse, and controller input across different form factors, with case studies for designing for both touch and hardware input.

Building Multiplatform Games with Flutter

Tuesday, March 19, 3:50 pm - 4:20 pm

Learn why game developers are choosing Flutter to build casual games on mobile, desktop, and web browsers. We’ll cover the Casual Games Toolkit, a collection of free and open-source tools, templates, and resources to make game development more productive with Flutter.

Learn more about all of our sessions coming to you on March 19 at GDC in San Francisco.

________________

¹ Windows is a trademark of the Microsoft group of companies.

User enrollment for managed iOS devices is now generally available

What’s changing

Getting started

- Admins: Use our Help Center to learn more about separating work and personal data on iOS devices.

- End users: The user enrollment process starts when a user signs in to an app for the first time or signs in to an app again. They’ll be prompted to begin downloading the configuration profile, which will open in an internet browser with more instructions and information. Once the profile has been downloaded, the user will be directed to their device’s settings to complete user enrollment.

Visit our Help Center for more information about how to install the Google Device Policy app and a configuration profile on your device, how your iOS device is managed, and how to get approved work apps on your iOS device.

Rollout pace

- Rapid Release and Scheduled Release domains: Extended rollout (potentially longer than 15 days for feature visibility) starting on March 7, 2024 with anticipated completion by the end of the month.

Availability

- Available to Google Workspace Enterprise Plus, Enterprise Standard, Enterprise Essentials, Enterprise Essentials Plus, Frontline Standard, Frontline Starter, Business Plus, Cloud Identity Premium, Education Standard, Education Plus and Nonprofits customers.

Source: Google Workspace Updates

Large Language Models On-Device with MediaPipe and TensorFlow Lite

TensorFlow Lite has been a powerful tool for on-device machine learning since its release in 2017, and MediaPipe further extended that power in 2019 by supporting complete ML pipelines. While these tools initially focused on smaller on-device models, today marks a dramatic shift with the experimental MediaPipe LLM Inference API.

This new release enables Large Language Models (LLMs) to run fully on-device across platforms. This new capability is particularly transformative considering the memory and compute demands of LLMs, which are over a hundred times larger than traditional on-device models. Optimizations across the on-device stack make this possible, including new ops, quantization, caching, and weight sharing.

The experimental cross-platform MediaPipe LLM Inference API, designed to streamline on-device LLM integration for web developers, supports Web, Android, and iOS with initial support for four openly available LLMs: Gemma, Phi 2, Falcon, and Stable LM. It gives researchers and developers the flexibility to prototype and test popular openly available LLM models on-device.

On Android, the MediaPipe LLM Inference API is intended for experimental and research use only. Production applications with LLMs can use the Gemini API or Gemini Nano on-device through Android AICore. AICore is the new system-level capability introduced in Android 14 to provide Gemini-powered solutions for high-end devices, including integrations with the latest ML accelerators, use-case optimized LoRA adapters, and safety filters. To start using Gemini Nano on-device with your app, apply to the Early Access Preview.

LLM Inference API

Starting today, you can test out the MediaPipe LLM Inference API via our web demo or by building our sample demo apps. You can experiment and integrate it into your projects via our Web, Android, or iOS SDKs.

Using the LLM Inference API allows you to bring LLMs on-device in just a few steps. These steps apply across web, iOS, and Android, though the SDK and native API will be platform specific. The following code samples show the web SDK.

1. Pick model weights compatible with one of our supported model architectures

2. Convert the model weights into a TensorFlow Lite Flatbuffer using the MediaPipe Python Package

    from mediapipe.tasks.python.genai import converter

    config = converter.ConversionConfig(...)
    converter.convert_checkpoint(config)

3. Include the LLM Inference SDK in your application

    import { FilesetResolver, LlmInference } from "https://cdn.jsdelivr.net/npm/@mediapipe/tasks-genai"

4. Host the TensorFlow Lite Flatbuffer along with your application.

5. Use the LLM Inference API to take a text prompt and get a text response from your model.

    const fileset = await FilesetResolver.forGenAiTasks("https://cdn.jsdelivr.net/npm/@mediapipe/tasks-genai/wasm");
    const llmInference = await LlmInference.createFromModelPath(fileset, "model.bin");
    const responseText = await llmInference.generateResponse("Hello, nice to meet you");
    document.getElementById('output').textContent = responseText;

Please see our documentation and code examples for a detailed walk through of each of these steps.

Here are real time gifs of Gemma 2B running via the MediaPipe LLM Inference API.

Figure: Gemma 2B running on-device in browser via the MediaPipe LLM Inference API

Figure: Gemma 2B running on-device on iOS (left) and Android (right) via the MediaPipe LLM Inference API

Models

Our initial release supports the following four model architectures. Any model weights compatible with these architectures will work with the LLM Inference API. Use the base model weights, use a community fine-tuned version of the weights, or fine tune weights using your own data.

| Model | Parameter Size |
|---|---|
| Falcon 1B | 1.3 Billion |
| Gemma 2B | 2.5 Billion |
| Phi 2 | 2.7 Billion |
| Stable LM 3B | 2.8 Billion |

Model Performance

Through significant optimizations, some of which are detailed below, the MediaPipe LLM Inference API is able to deliver state-of-the-art latency on-device, focusing on CPU and GPU to support multiple platforms. For sustained performance in a production setting on select premium phones, Android AICore can take advantage of hardware-specific neural accelerators.

When measuring latency for an LLM, there are a few terms and measurements to consider. Time to First Token and Decode Speed will be the two most meaningful as these measure how quickly you get the start of your response and how quickly the response generates once it starts.

| Term | Significance | Measurement |
|---|---|---|
| Token | LLMs use tokens rather than words as inputs and outputs. Each model used with the LLM Inference API has a tokenizer built in which converts between words and tokens. | 100 English words ≈ 130 tokens. However the conversion is dependent on the specific LLM and the language. |
| Max Tokens | The maximum total tokens for the LLM prompt + response. | Configured in the LLM Inference API at runtime. |
| Time to First Token | Time between calling the LLM Inference API and receiving the first token of the response. | Max Tokens / Prefill Speed |
| Prefill Speed | How quickly a prompt is processed by an LLM. | Model and device specific. Benchmark numbers below. |
| Decode Speed | How quickly a response is generated by an LLM. | Model and device specific. Benchmark numbers below. |

The Prefill Speed and Decode Speed are dependent on model, hardware, and max tokens. They can also change depending on the current load of the device.
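To make the relationships in the table concrete, here is a minimal arithmetic sketch. It follows the table's formulas as written; the function names are hypothetical, and the prefill and decode speeds are made-up placeholder values rather than measured benchmarks.

```python
def words_to_tokens(words: int, tokens_per_100_words: float = 130.0) -> int:
    """Rough token estimate from the rule of thumb: 100 English words is about 130 tokens."""
    return round(words * tokens_per_100_words / 100)


def time_to_first_token(max_tokens: int, prefill_speed: float) -> float:
    """Seconds until the first token, using the table's formula: max tokens / prefill speed."""
    return max_tokens / prefill_speed


def decode_time(response_tokens: int, decode_speed: float) -> float:
    """Seconds spent generating the response once decoding has started."""
    return response_tokens / decode_speed


# Hypothetical device speeds in tokens per second (assumptions, not benchmark results).
PREFILL_SPEED = 500.0
DECODE_SPEED = 20.0

prompt_tokens = words_to_tokens(400)             # a 400-word prompt
ttft = time_to_first_token(1280, PREFILL_SPEED)  # max tokens of 1280
total = ttft + decode_time(256, DECODE_SPEED)    # plus a 256-token response

print(f"prompt tokens: {prompt_tokens}")
print(f"time to first token: {ttft:.2f} s")
print(f"total response time: {total:.2f} s")
```

Because both speeds depend on the model, the hardware, and the current device load, treat numbers like these as planning estimates only.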

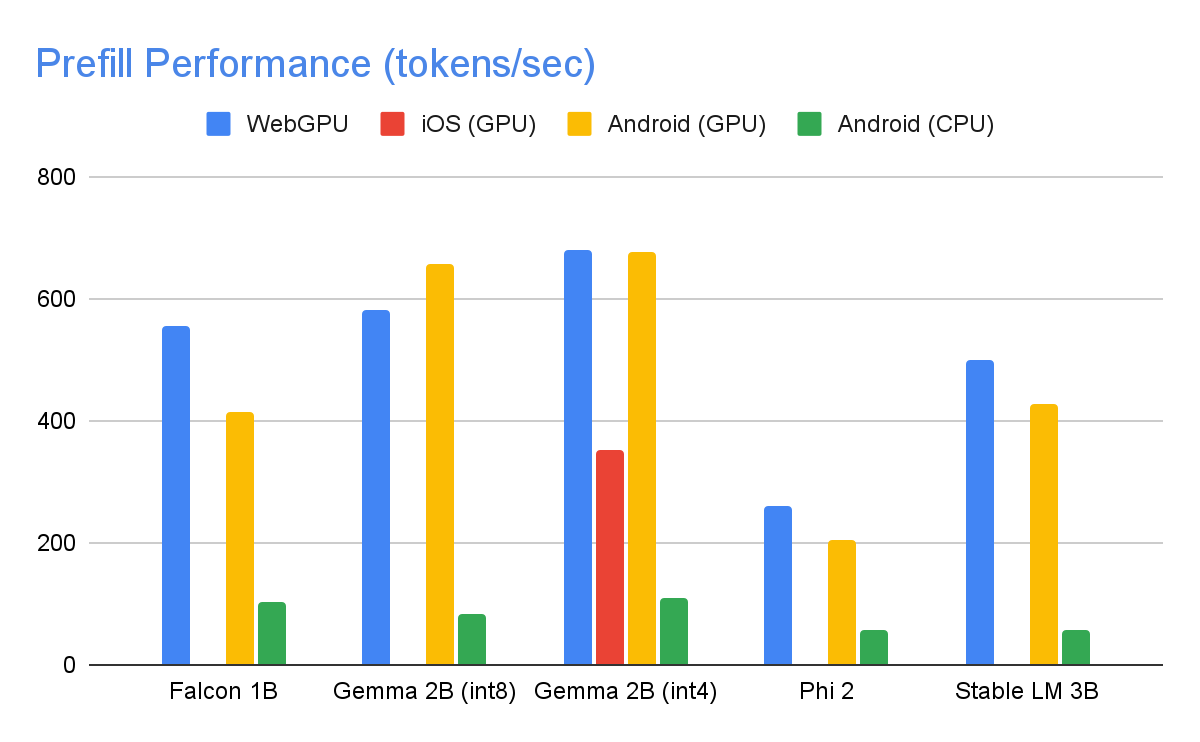

The following speeds were taken on high end devices using a max tokens of 1280 tokens, an input prompt of 1024 tokens, and int8 weight quantization. The exception is Gemma 2B (int4), available on Kaggle, which uses a mixed 4/8-bit weight quantization.

Benchmarks

On the GPU, Falcon 1B and Phi 2 use fp32 activations, while Gemma and StableLM 3B use fp16 activations as the latter models showed greater robustness to precision loss according to our quality eval studies. The lowest bit activation data type that maintained model quality was chosen for each. Note that Gemma 2B (int4) was the only model we could run on iOS due to its memory constraints, and we are working on enabling other models on iOS as well.

Performance Optimizations

To achieve the performance numbers above, countless optimizations were made across MediaPipe, TensorFlow Lite, XNNPack (our CPU neural network operator library), and our GPU-accelerated runtime. The following are a select few that resulted in meaningful performance improvements.

Weights Sharing: The LLM inference process comprises 2 phases: a prefill phase and a decode phase. Traditionally, this setup would require 2 separate inference contexts, each independently managing resources for its corresponding ML model. Given the memory demands of LLMs, we've added a feature that allows sharing the weights and the KV cache across inference contexts. Although sharing weights might seem straightforward, it has significant performance implications when sharing between compute-bound and memory-bound operations. In typical ML inference scenarios, where weights are not shared with other operators, they are meticulously configured for each fully connected operator separately to ensure optimal performance. Sharing weights with another operator implies a loss of per-operator optimization and this mandates the authoring of new kernel implementations that can run efficiently even on sub-optimal weights.

Optimized Fully Connected Ops: XNNPack’s FULLY_CONNECTED operation has undergone two significant optimizations for LLM inference. First, dynamic range quantization seamlessly merges the computational and memory benefits of full integer quantization with the precision advantages of floating-point inference. The utilization of int8/int4 weights not only enhances memory throughput but also achieves remarkable performance, especially with the efficient, in-register decoding of 4-bit weights requiring only one additional instruction. Second, we actively leverage the I8MM instructions in ARM v9 CPUs which enable the multiplication of a 2x8 int8 matrix by an 8x2 int8 matrix in a single instruction, resulting in twice the speed of the NEON dot product-based implementation.
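As an illustration of what 4-bit weight storage involves, the following sketch packs two signed 4-bit weights into each byte and decodes them again before applying a scale. This is not XNNPack's kernel, which does the decoding in SIMD registers; the function names, the low-nibble-first layout, and the single per-tensor scale are assumptions made for the example.

```python
def pack_int4(values):
    """Pack signed 4-bit integers (-8..7) two per byte, low nibble first."""
    if len(values) % 2:
        raise ValueError("need an even number of values")
    packed = bytearray()
    for low, high in zip(values[0::2], values[1::2]):
        if not (-8 <= low <= 7 and -8 <= high <= 7):
            raise ValueError("values must fit in a signed 4-bit range")
        packed.append((low & 0x0F) | ((high & 0x0F) << 4))
    return bytes(packed)


def unpack_int4(packed):
    """Recover the signed 4-bit integers from the packed bytes."""
    values = []
    for byte in packed:
        for nibble in (byte & 0x0F, byte >> 4):
            # Sign-extend: nibbles 8..15 represent -8..-1.
            values.append(nibble - 16 if nibble >= 8 else nibble)
    return values


def dequantize(q_values, scale):
    """Map quantized integers back to floats with one shared scale."""
    return [q * scale for q in q_values]


weights_q = [-8, -3, 0, 7]
packed = pack_int4(weights_q)  # 4 weights stored in 2 bytes
restored = unpack_int4(packed)
print(len(packed), restored, dequantize(restored, 0.5))
```

Halving the bytes per weight is what improves memory throughput; the cost is the extra decode step, which the post says takes only one additional instruction in the optimized kernel.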

Balancing Compute and Memory: Upon profiling the LLM inference, we identified distinct limitations for both phases: the prefill phase faces restrictions imposed by the compute capacity, while the decode phase is constrained by memory bandwidth. Consequently, each phase employs different strategies for dequantization of the shared int8/int4 weights. In the prefill phase, each convolution operator first dequantizes the weights into floating-point values before the primary computation, ensuring optimal performance for computationally intensive convolutions. Conversely, the decode phase minimizes memory bandwidth by adding the dequantization computation to the main mathematical convolution operations.

Figure: During the compute-intensive prefill phase, the int4 weights are dequantized a priori for optimal CONV_2D computation. In the memory-intensive decode phase, dequantization is performed on the fly, along with CONV_2D computation, to minimize the memory bandwidth usage.
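The two dequantization strategies can be sketched in a few lines. This is a simplified illustration, assuming a plain matrix product in place of the CONV_2D operators and a single per-tensor scale; the function names are hypothetical and do not come from MediaPipe or TensorFlow Lite.

```python
def dequantize_weights(q_weights, scale):
    """Materialize a float copy of the quantized weight matrix."""
    return [[q * scale for q in row] for row in q_weights]


def prefill(activations, q_weights, scale):
    """Prefill: dequantize once up front, then reuse the float weights for every prompt token."""
    weights = dequantize_weights(q_weights, scale)
    return [
        [sum(a * w for a, w in zip(token, row)) for row in weights]
        for token in activations
    ]


def decode_step(activation, q_weights, scale):
    """Decode: dequantize inside the dot product, so only the compact integer weights are read."""
    return [
        sum(a * (q * scale) for a, q in zip(activation, row))
        for row in q_weights
    ]


q_weights = [[1, -2, 3], [4, 0, -1]]  # 2 outputs x 3 inputs, quantized
scale = 0.5
prompt = [[1.0, 2.0, 3.0], [0.5, -1.0, 2.0]]  # two prompt tokens

prefill_out = prefill(prompt, q_weights, scale)
decode_out = decode_step(prompt[0], q_weights, scale)
print(prefill_out, decode_out)
```

Both paths produce the same numbers. The difference is where the dequantization cost lands: prefill pays it once and amortizes it over many tokens, while decode processes one token at a time and avoids reading a larger float copy of the weights from memory.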

Custom Operators: For GPU-accelerated LLM inference on-device, we rely extensively on custom operations to mitigate the inefficiency caused by numerous small shaders. These custom ops allow for special operator fusions and for various LLM parameters, such as token ID, sequence patch size, and sampling parameters, to be packed into a specialized custom tensor used mostly within these specialized operations.

Pseudo-Dynamism: In the attention block, we encounter dynamic operations that increase over time as the context grows. Since our GPU runtime lacks support for dynamic ops/tensors, we opt for fixed operations with a predefined maximum cache size. To reduce the computational complexity, we introduce a parameter enabling the skipping of certain value calculations or the processing of reduced data.
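One way to picture this is a cache that is allocated once at its maximum size, plus a length value that tells the attention computation how many entries to process. The sketch below is an assumption about the general technique, not the GPU runtime's implementation; `FixedKVCache`, `attend`, and `MAX_CACHE` are hypothetical names.

```python
import math

MAX_CACHE = 8  # hypothetical predefined maximum cache size


class FixedKVCache:
    """Key/value storage with a fixed shape; only the first `length` rows are valid."""

    def __init__(self, max_len, dim):
        self.keys = [[0.0] * dim for _ in range(max_len)]
        self.values = [[0.0] * dim for _ in range(max_len)]
        self.length = 0

    def append(self, key, value):
        if self.length >= len(self.keys):
            raise IndexError("cache is full")
        self.keys[self.length] = list(key)
        self.values[self.length] = list(value)
        self.length += 1


def attend(query, cache):
    """Single-query attention that skips the unused tail of the fixed-size cache."""
    n = cache.length
    if n == 0:
        raise ValueError("cache is empty")
    norm = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, cache.keys[i])) / norm for i in range(n)]
    peak = max(scores)  # subtract the maximum for a numerically stable softmax
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    dim = len(cache.values[0])
    return [sum(weights[i] * cache.values[i][d] for i in range(n)) / total for d in range(dim)]


cache = FixedKVCache(MAX_CACHE, dim=2)
cache.append([1.0, 0.0], [1.0, 0.0])
first = attend([1.0, 0.0], cache)
cache.append([1.0, 0.0], [3.0, 0.0])
second = attend([1.0, 0.0], cache)
print(first, second)
```

The tensors never change shape as the context grows; only `length` changes, which keeps the operations fixed while avoiding work on cache slots that hold no data yet.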

Optimized KV Cache Layout: Since the entries in the KV cache ultimately serve as weights for convolutions, employed in lieu of matrix multiplications, we store these in a specialized layout tailored for convolution weights. This strategic adjustment eliminates the necessity for extra conversions or reliance on unoptimized layouts, and therefore contributes to a more efficient and streamlined process.

What’s Next

We are thrilled with the optimizations and the performance in today’s experimental release of the MediaPipe LLM Inference API. This is just the start. Over 2024, we will expand to more platforms and models, offer broader conversion tools, complementary on-device components, high level tasks, and more.

You can check out the official sample on GitHub demonstrating everything you’ve just learned about and read through our official documentation for even more details. Keep an eye on the Google for Developers YouTube channel for updates and tutorials.

Acknowledgements

We’d like to thank all team members who contributed to this work: T.J. Alumbaugh, Alek Andreev, Frank Ban, Jeanine Banks, Frank Barchard, Pulkit Bhuwalka, Buck Bourdon, Maxime Brénon, Chuo-Ling Chang, Yu-hui Chen, Linkun Chen, Lin Chen, Nikolai Chinaev, Clark Duvall, Rosário Fernandes, Mig Gerard, Matthias Grundmann, Ayush Gupta, Mohammadreza Heydary, Ekaterina Ignasheva, Ram Iyengar, Grant Jensen, Alex Kanaukou, Prianka Liz Kariat, Alan Kelly, Kathleen Kenealy, Ho Ko, Sachin Kotwani, Andrei Kulik, Yi-Chun Kuo, Khanh LeViet, Yang Lu, Lalit Singh Manral, Tyler Mullen, Karthik Raveendran, Raman Sarokin, Sebastian Schmidt, Kris Tonthat, Lu Wang, Tris Warkentin, and the Gemma Team

Embracing Android 14: Meta’s Early Adoption Empowered Enhanced User Experience

With the first Developer Preview of Android 15 now released, another new Android release that brings new features and under-the-hood improvements for billions of users worldwide will be coming shortly. As Android developers, you are key players in this evolution; by staying on top of the targetSDK upgrade cycle, you are making sure that your users have the best possible experience.

The way Meta, the parent company of Instagram, Facebook, WhatsApp, and Messenger, approached Android 14 provides a blueprint for both developer success and user satisfaction. Meta improved their velocity towards targetSDK adoption by 4x, and so to understand more about how they built this, we spoke to the team at Meta, with an eye towards insights that all developers could build into their testing programs.

Meta’s journey on A14: A blueprint for faster adoption

When Android 11 launched, some of Meta’s apps experienced challenges with existing features, such as Chat Heads, and with new requirements, like scoped storage integration. Fixing these issues was complicated by slow developer tooling adoption and a decentralized app strategy. This experience motivated Meta to create an internal Android OS Readiness Program which focuses on prioritizing early and thorough testing throughout the Android release window and accelerating their apps’ targetSDK adoption.

The program officially launched last year. By compiling apps against each Android 14 beta and conducting thorough automated and smoke tests to proactively identify potential issues, Meta was able to seamlessly adopt new Android 14 features, like Foreground Service types, and send timely feedback and bug reports to the Android team, contributing to improvements in the OS.

Meta also accelerated their targetSDK adoption for Android 14, updating Messenger, Facebook, and Instagram within one to two months of the AOSP release, compared to seven to nine months for Android 12 (an increase in velocity of more than 4x!). Meta’s newly created readiness program unlocked this achievement by working across each app to adopt the latest Android changes while still maintaining compatibility. For example, by automating and simplifying their SDK release process, Meta was able to cut rollout time from three weeks to under three hours, enhancing cooperation between individual app teams by providing immediate access to the latest SDKs and allowing for rapid testing of new OS features. The centralized approach also meant Threads adopted Android 14 support quickly despite the fast-growing new app being supported by a minimal team.

Reaping the rewards: The impact on users

Meta's early targetSDK adoption strategy delivers significant benefits for users as well. Here's how:

- Improved reliability and compatibility: Early adoption of Android previews and betas prevented surprises near the OS launch, guaranteeing a smooth day-one experience for users upgrading to the latest Android version. For example, with partial media permissions, Meta's extensive experimentation with permission flows ensured “users felt informed about the change and in control over their privacy settings,” while maximizing the app's media-sharing functionality.

- Robust experimentation with new release features: Early Android release adoption gave Meta ample time to collaborate across privacy, design, and content strategy teams, enabling them to thoughtfully integrate the new Android features that come with every release. This also strengthened collaboration on other features. Meta’s rollout of the Ultra HDR image experience on Instagram within three months of the platform release, in an “Android first” manner, is a great example, delighting users with brighter and richer colors and a higher dynamic range in their Instagram posts and stories.

Embrace the latest Android versions

Meta's journey highlights the compelling reasons for Android developers to adopt a similar forward-thinking mindset in working with the Android betas:

- Test your apps early: Anticipate Android OS changes, ensuring your apps are prepared for the latest target SDK as soon as it becomes available to create a seamless transition for users who update to the newest Android version.

- Use the latest tools to optimize user experience: Test your apps thoroughly against each beta to identify and address any potential issues. Check the Android Studio Upgrade Assistant to highlight major breaking changes in each targetSDKVersion, and integrate the compatibility framework tool into your testing process to help uncover potential app issues in the new OS version.

- Collaborate with Google: Provide your valuable feedback and bug reports using the Google issue tracker to contribute directly to the improvement of the Android ecosystem.

We encourage you to take full advantage of the Android Developer Previews & Betas program, starting with the newly-released Android 15 Developer Preview 1.

The team behind the success

A big thank you to the entire Meta team for their collaboration in Android 14 and in writing this blog! We’d especially like to recognize the following folks from Meta for their outstanding contributions in establishing a culture of early adoption:

- Tushar Varshney - Partner Engineering, Partner Engineer

- Allen Bae - Partner Engineering, EM

- Abel Del Pino - Facebook, SWE

- Matias Hanco - Facebook, SWE

- Summer Kitahara - Instagram, SWE

- Tom Rozanski - Messenger, SWE

- Ashish Gupta - WhatsApp, SWE

- Daniel Hill - Mobile Infra, SWE

- Jason Tang - Facebook, SWE

- Jane Li - Meta Quest, SWE

Source: Android Developers Blog

New goodies from Android, Wearables at Mobile World Congress + tune in to a new episode of #TheAndroidShow next week!

Earlier today, at Mobile World Congress (MWC), an annual conference showcasing the latest in mobile, Android and our partners unveiled a range of new goodies, including new wearables, foldables, as well as a number of new features for Android users. Keep reading below to see how you, as developers, can take advantage of these new features and devices that are being released. And in just over a week, on Thursday March 7 at 10AM PT, we’ll be kicking off another episode of #TheAndroidShow, our quarterly live show on YouTube and on developer.android.com, where we’ll dive more into these topics.

Meet the new watch from OnePlus and how we’re boosting power with the Wear OS hybrid interface

Wearables are on display across MWC this week, and one of our favorites is OnePlus Watch 2, powered by the latest version of Wear OS (Wear OS 4). As part of our ongoing work to improve the Wear OS by Google user experience, we’ve made fundamental changes to the platform and substantially expanded the capabilities of the Wear OS hybrid interface that improve two key areas: power and performance. As a developer, you can leverage existing Wear OS APIs to get these underlying optimizations without any added effort – no code changes required! You can read more about the updates here.

A few new features for Android users

Google released nine new features that Android users can take advantage of across Google apps; you can read more about those features here. For developers, we wanted to highlight a few ways you can take advantage of this news across experiences you build into your apps:

- More places for users to see their Health Connect data, now in the Fitbit app: With permission from your users, Health Connect is a central way to connect and sync their favorite health and fitness apps, see all their data in one place, and stay in control of their privacy. By setting up Health Connect in the Fitbit mobile app for Android, users will have an overview of their health and fitness data from across their apps in one place. You can join developers like Peloton, ŌURA, and Lifesum who are using Health Connect to provide their users with deeper health and fitness insights. Get started now!

- Add Stylus support, like Google Docs did: With Google Docs markups, you can add handwritten annotations to Docs from your Android phone or tablet using just your finger or stylus. Google Docs took advantage of stylus support; you can learn more about adding support for Stylus here.

- Use Tiles for Wear OS, like Google Maps did: With public transit directions on Google Maps for Wear OS, you can leave your phone in your pocket and glance at your wrist to make sure you catch your bus, train or ferry. Users can see these public transit directions through Google Maps’ use of Tiles, which provide quick access to the information and actions users need to get things done. You can learn more about building a Tile for your app here.

A new episode of #TheAndroidShow, live on March 7 at 10AM PT. Send us your #AskAndroid questions now!

You can join us on March 7 at 10AM PT for a new episode of #TheAndroidShow. In this quarterly show, we’ll unpack the latest Android foldables and large screens for you to get building on, plus a behind-the-scenes on Gemini Nano and AICore.

We’ll have a live #AskAndroid Q&A with the team about building Android; you can ask us about building excellent apps across devices, Android 15, Compose, Gemini and more, using #AskAndroid on X or on YouTube. Our experts are ready to answer your questions live!

#TheAndroidShow: March 7 at 10AM PT, broadcast live on YouTube and d.android.com/events/show!

Source: Android Developers Blog

The First Developer Preview of Android 15

We're releasing the first Developer Preview of Android 15 today so you, our developers, can collaborate with us to build a better Android.

Android 15 continues our work to build a platform that helps improve your productivity while giving you new capabilities to produce superior media experiences, minimize battery impact, maximize smooth app performance, and protect user privacy and security all on the most diverse lineup of devices out there.

Android enables your apps to take advantage of premium device hardware, including high-end camera capabilities, powerful GPUs, dazzling displays, and AI processing. The demand for large-screen devices, including tablets, foldables and flippables, continues to grow, offering an opportunity to reach high-value users. Also, Android is committed to providing tooling and libraries to help your apps take advantage of the latest advances in AI.

Your feedback on the Android 15 Developer Preview and QPR beta program plays a key role in helping Android continuously improve. The Android 15 developer site has more information about the preview, including downloads for Pixel and detailed documentation about changes. This preview is just the beginning, and we’ll have lots more to share as we move through the release cycle. Thank you in advance for your help in making Android a platform that works for everyone.

Protecting user privacy and security

Android is constantly working to create solutions that maximize user privacy and security.

Privacy Sandbox on Android

Android 15 brings Android Ad Services up to extension level 10, incorporating the latest version of the Privacy Sandbox on Android, part of our work to develop new technologies that improve user privacy and enable effective, personalized advertising experiences for mobile apps. Our website has more about the Privacy Sandbox on Android developer preview and beta programs to help you get started.

Health Connect

Android 15 integrates the Android 14 extension level 10 updates to Health Connect by Android, a secure and centralized platform to manage and share app-collected health and fitness data. This update adds support for new data types across fitness, nutrition, and more.

File integrity

Android 15's FileIntegrityManager includes new APIs that tap into the power of the fs-verity feature in the Linux kernel. With fs-verity, files can be protected by custom cryptographic signatures, helping you ensure they haven't been tampered with or corrupted. This leads to enhanced security, protecting against potential malware or unauthorized file modifications that could compromise your app's functionality or data.
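To show the general idea of detecting tampering with a hash tree, here is a simplified sketch. It is not the fs-verity on-disk format and does not use the FileIntegrityManager API; the binary tree shape, the 4096-byte block size, and the `merkle_root` helper are assumptions chosen for illustration.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed block size for this sketch


def merkle_root(data: bytes, block_size: int = BLOCK_SIZE) -> str:
    """Hash each block, then hash pairs of digests upward until one root digest remains."""
    blocks = [data[i:i + block_size] for i in range(0, len(data), block_size)] or [b""]
    level = [hashlib.sha256(block).digest() for block in blocks]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])  # duplicate the last digest on odd-sized levels
        level = [
            hashlib.sha256(level[i] + level[i + 1]).digest()
            for i in range(0, len(level), 2)
        ]
    return level[0].hex()


original = bytes(range(256)) * 64  # 16 KiB of sample data, four blocks
tampered = bytearray(original)
tampered[5000] ^= 0x01             # flip a single bit in the second block

trusted_root = merkle_root(original)
print(trusted_root == merkle_root(original))         # True: unchanged data matches
print(trusted_root == merkle_root(bytes(tampered)))  # False: the change is detected
```

A tree-shaped digest like this lets a verifier check individual blocks against a single trusted root instead of rehashing the whole file, which is the property that makes this style of verification practical for large files.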

Partial screen sharing

Android 15 supports partial screen sharing so users can share or record just an app window rather than the entire device screen. This feature, enabled first in Android 14 QPR2, includes MediaProjection callbacks that allow your app to customize the partial screen sharing experience. Note that user consent is now required for each MediaProjection capture session.

Supporting creators

Android continues its work to give you access to tools and hardware to support creators to bring their vision to life on Android.

In-app Camera Controls

Android 15 adds new extensions for more control over the camera hardware and its algorithms on supported devices:

- Low light enhancements that give developers control to boost the brightness of the camera preview.

Virtual MIDI 2.0 Devices

Android 13 added support for connecting to MIDI 2.0 devices via USB, which communicate using Universal MIDI Packets (UMP). Android 15 extends UMP support to virtual MIDI apps, enabling composition apps to control synthesizer apps as a virtual MIDI 2.0 device just like they would with a USB MIDI 2.0 device.

Performance and quality

Android continues its focus on helping you improve the quality of your apps. Much of this focus is around tooling and libraries, including Jetpack Compose, Android Studio, and more.

Dynamic Performance

Android 15 continues our investment in the Android Dynamic Performance Framework (ADPF), a set of APIs that allow games and performance intensive apps to interact more directly with power and thermal systems of Android devices. On supported devices, Android 15 will add new ADPF capabilities:

- A power-efficiency mode for hint sessions to indicate that their associated threads should prefer power saving over performance, great for long-running background workloads.

- GPU and CPU work durations can both be reported in hint sessions, allowing the system to adjust CPU and GPU frequencies together to best meet workload demands.

- Thermal headroom thresholds to interpret possible thermal throttling status based on headroom prediction.

To learn more about how to use ADPF in your apps and games, head over to the documentation.

Developer Productivity

Android 15 continues to add OpenJDK APIs, including quality-of-life improvements around NIO buffers, streams, security, and more. These APIs are updated on over a billion devices running Android 12+ through Google Play System updates, so you can target the latest programming features.

App compatibility

To give you more time to plan for app compatibility work, we’re letting you know our Platform Stability milestone well in advance.

At this milestone, we’ll deliver final SDK/NDK APIs and also final internal APIs and app-facing system behaviors. We’re expecting to reach Platform Stability in June 2024, and from that time you’ll have several months before the official release to do your final testing. The release timeline details are here.

Get started with Android 15

The Developer Preview has everything you need to try the Android 15 features, test your apps, and give us feedback. You can get started today by flashing a system image onto a Pixel 6, 7, or 8 series device, along with the Pixel Fold and Pixel Tablet. If you don’t have a Pixel device, you can use the 64-bit system images with the Android Emulator in Android Studio.

For the best development experience with Android 15, we recommend that you use the latest preview of Android Studio Jellyfish (or more recent Jellyfish+ versions). Once you’re set up, here are some of the things you should do:

- Try the new features and APIs – your feedback is critical during the early part of the developer preview. Report issues in our tracker on the feedback page.

- Test your current app for compatibility – learn whether your app is affected by changes in Android 15; install your app onto a device or emulator running Android 15 and extensively test it.

We’ll update the preview system images and SDK regularly throughout the Android 15 release cycle. This initial preview release is for developers only and not intended for daily or consumer use, so we're making it available by manual download only. Once you’ve manually installed a preview build, you’ll automatically get future updates over-the-air for all later previews and Betas. Read more here.

If you intend to move from the Android 14 QPR Beta program to the Android 15 Developer Preview program and don't want to wipe your device, we recommend that you move to Developer Preview 1 now. Otherwise, you may run into periods where the Android 14 Beta has a more recent build date, which prevents you from going directly to the Android 15 Developer Preview without a data wipe.

As we reach our Beta releases, we'll be inviting consumers to try Android 15 as well, and we'll open up enrollment for the Android Beta program at that time. For now, please note that the Android Beta program is not yet available for Android 15.

For complete information, visit the Android 15 developer site.

Java and OpenJDK are trademarks or registered trademarks of Oracle and/or its affiliates.

Source: Android Developers Blog

Google Pay – Enabling liability shift for eligible Visa device token transactions globally

We are excited to announce the general availability [1] of liability shift for Visa device tokens for Google Pay.

For Mastercard device tokens, the liability already lies with the issuing bank. For Visa, until now only eligible device tokens with issuing banks in the European region benefited from liability shift.

What is liability shift?

If liability shift is granted for a transaction, the responsibility for covering losses from fraudulent transactions moves from the merchant to the issuing bank. With this change, qualifying Google Pay Visa transactions made with a device token will benefit from this liability shift.

How to know if the liability was shifted to the issuing bank for my transaction?

Eligible Visa transactions will carry an eciIndicator value of 05. PSPs can access the eciIndicator value after decrypting the payment method token. Merchants can check with their PSPs to get a report on liability shift eligible transactions.

{
  "gatewayMerchantId": "some-merchant-id",
  "messageExpiration": "1561533871082",
  "messageId": "AH2Ejtc8qBlP_MCAV0jJG7Er",
  "paymentMethod": "CARD",
  "paymentMethodDetails": {
    "expirationYear": 2028,
    "expirationMonth": 12,
    "pan": "4895370012003478",
    "authMethod": "CRYPTOGRAM_3DS",
    "eciIndicator": "05",
    "cryptogram": "AgAAAAAABk4DWZ4C28yUQAAAAAA="
  }
}

A decrypted payment token for a Google Pay Visa transaction with an eciIndicator value of 05 (liability shifted)

Check out the following table for a full list of eciIndicator values we return for our Visa and Mastercard device token transactions:

| eciIndicator value | Card Network | Liable Party | authMethod |
| --- | --- | --- | --- |
| "" (empty) | Mastercard | Merchant/Acquirer | CRYPTOGRAM_3DS |
| "02" | Mastercard | Card issuer | CRYPTOGRAM_3DS |
| "06" | Mastercard | Merchant/Acquirer | CRYPTOGRAM_3DS |
| "05" | Visa | Card issuer | CRYPTOGRAM_3DS |
| "07" | Visa | Merchant/Acquirer | CRYPTOGRAM_3DS |
| "" (empty) | Other networks | Merchant/Acquirer | CRYPTOGRAM_3DS |

eciIndicator values for Visa and Mastercard that aren't listed in this table won't be returned.
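As a rough illustration of how a PSP might apply the eciIndicator table above after decrypting a token, here is a minimal TypeScript sketch. The type names, the `liablePartyFor` helper, and the idea of passing the card network in separately are assumptions made for this example; they are not part of the Google Pay API.

```typescript
// Sketch only: these names are hypothetical, not Google Pay API types.
type CardNetwork = "VISA" | "MASTERCARD" | "OTHER";
type LiableParty = "CARD_ISSUER" | "MERCHANT_OR_ACQUIRER";

// Rows copied from the eciIndicator table, keyed by "<network>:<eciIndicator>".
// An empty eciIndicator is stored as an empty suffix.
const LIABILITY_TABLE: { [key: string]: LiableParty | undefined } = {
  "MASTERCARD:": "MERCHANT_OR_ACQUIRER",
  "MASTERCARD:02": "CARD_ISSUER",
  "MASTERCARD:06": "MERCHANT_OR_ACQUIRER",
  "VISA:05": "CARD_ISSUER",
  "VISA:07": "MERCHANT_OR_ACQUIRER",
  "OTHER:": "MERCHANT_OR_ACQUIRER",
};

// Returns the liable party for a network/eciIndicator pair,
// or undefined when the combination is not in the table.
function liablePartyFor(
  network: CardNetwork,
  eciIndicator: string | undefined
): LiableParty | undefined {
  const key = network + ":" + (eciIndicator === undefined ? "" : eciIndicator);
  return LIABILITY_TABLE[key];
}

// Usage with the fields from the decrypted token shown earlier.
const paymentMethodDetails = { authMethod: "CRYPTOGRAM_3DS", eciIndicator: "05" };
const liableParty = liablePartyFor("VISA", paymentMethodDetails.eciIndicator);
console.log(liableParty);
```

Returning undefined for unlisted combinations keeps the helper from guessing; a caller could treat that case as "liability not shifted" or flag it for review.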

How to enroll

Merchants may opt in from within the Google Pay & Wallet console starting this month. Merchants in Europe (already benefiting from liability shift) do not need to take any action as they will be automatically enrolled.

For your Google Pay transaction to qualify for liability shift, the following API parameters are required:

| Parameter | Requirement |
| --- | --- |
| totalPrice | Make sure that totalPrice matches the amount that you charge the user. Transactions with totalPrice=0 will not qualify for liability shift to the issuing bank. |
| totalPriceStatus | Valid values are FINAL or ESTIMATED. Transactions with a totalPriceStatus value of NOT_CURRENTLY_KNOWN do not qualify for liability shift. |

Not all transactions get liability shift

Ineligible merchants

In the US, the following merchant category codes (MCCs) are excluded from liability shift:

| MCC | Description |
| --- | --- |
| 4829 | Money Transfer |
| 5967 | Direct Marketing – Inbound Teleservices Merchant |
| 6051 | Non-Financial Institutions – Foreign Currency, Non-Fiat Currency (for example: Cryptocurrency), Money Orders (Not Money Transfer), Account Funding (not Stored Value Load), Travelers Cheques, and Debt Repayment |
| 6540 | Non-Financial Institutions – Stored Value Card Purchase/Load |
| 7801 | Government Licensed On-Line Casinos (On-Line Gambling) (US Region only) |
| 7802 | Government-Licensed Horse/Dog Racing (US Region only) |
| 7995 | Betting, including Lottery Tickets, Casino Gaming Chips, Off-Track Betting, Wagers at Race Tracks and games of chance to win prizes of monetary value |
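A merchant or PSP could encode the US exclusion list above as a simple lookup. The following TypeScript sketch assumes MCCs are handled as four-digit strings; the constant and helper names are hypothetical.

```typescript
// MCCs excluded from liability shift in the US, copied from the list above.
const US_EXCLUDED_MCCS: string[] = [
  "4829", // Money Transfer
  "5967", // Direct Marketing, Inbound Teleservices Merchant
  "6051", // Non-Financial Institutions (foreign currency, money orders, ...)
  "6540", // Non-Financial Institutions, Stored Value Card Purchase/Load
  "7801", // Government Licensed On-Line Casinos
  "7802", // Government-Licensed Horse/Dog Racing
  "7995", // Betting
];

// Hypothetical helper: true when a US merchant's MCC is on the exclusion list.
function isExcludedUsMcc(mcc: string): boolean {
  return US_EXCLUDED_MCCS.indexOf(mcc.trim()) !== -1;
}

console.log(isExcludedUsMcc("7995")); // true
console.log(isExcludedUsMcc("5411")); // false
```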

Ineligible transactions

For your Google Pay transactions to qualify for liability shift, make sure to include the totalPrice and totalPriceStatus parameters described above. Transactions with totalPrice=0 or a hard-coded totalPrice (always the same amount, while users are charged a different amount) will not qualify for liability shift.
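The price requirements can be checked before a payment request is sent. The sketch below is one possible pre-flight check in TypeScript, assuming prices are passed as decimal strings. The field names follow the Google Pay API's transaction info object, while the `TransactionInfoSubset` interface and the `meetsPriceRequirements` helper are hypothetical. Passing this check does not guarantee liability shift, since other conditions apply.

```typescript
type TotalPriceStatus = "FINAL" | "ESTIMATED" | "NOT_CURRENTLY_KNOWN";

// Only the fields relevant to this check; the interface name is made up.
interface TransactionInfoSubset {
  totalPriceStatus: TotalPriceStatus;
  totalPrice?: string; // decimal string, for example "12.34"
  currencyCode: string;
}

// Returns true when the price fields satisfy the requirements described above:
// a known, non-zero totalPrice that equals the amount actually charged.
function meetsPriceRequirements(
  info: TransactionInfoSubset,
  chargedAmount: string
): boolean {
  if (info.totalPriceStatus === "NOT_CURRENTLY_KNOWN") return false;
  if (info.totalPrice === undefined) return false;

  const total = Number(info.totalPrice);
  const charged = Number(chargedAmount);
  if (!isFinite(total) || !isFinite(charged)) return false;
  if (total === 0) return false; // totalPrice=0 never qualifies

  return total === charged; // a hard-coded price that differs fails here
}

const info: TransactionInfoSubset = {
  totalPriceStatus: "FINAL",
  totalPrice: "12.30",
  currencyCode: "USD",
};
console.log(meetsPriceRequirements(info, "12.30")); // true
console.log(meetsPriceRequirements(info, "15.00")); // false
```

Comparing parsed numbers rather than raw strings lets "12.30" and "12.3" match; a production system would more likely compare amounts in minor units.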

Processing transactions

Google Pay API transactions with Visa device tokens are qualified for liability shift at facilitation time if all the conditions are met, but a transaction qualified for liability shift can be downgraded by the network during transaction authorization processing.

Getting started with Google Pay

Not yet using Google Pay? Refer to the documentation to start integrating Google Pay today. Learn more about the integration by taking a look at our sample application for Android on GitHub or use one of our button components for your web integration. When you are ready, head over to the Google Pay & Wallet console and submit your integration for production access.

Follow @GooglePayDevs on X (formerly Twitter) for future updates. If you have questions, tag @GooglePayDevs and include #AskGooglePayDevs in your tweets.

[1] For merchants and PSPs using dynamic price updates or other callback mechanisms the Visa device token liability shift changes will be rolled out later this year.

Federated Credential Management (FedCM) Migration for Google Identity Services

Chrome is phasing out support for third-party cookies this year, subject to addressing any remaining concerns of the CMA. A relatively new web API, Federated Credential Management (FedCM), will enable sign-in for the Google Identity Services (GIS) library after the phase out of third-party cookies. Starting in April, GIS developers will be automatically migrated to the FedCM API. For most developers, this migration will occur seamlessly through backwards-compatible updates to the GIS library. However, some websites with custom integrations may require minor changes. We encourage all developers to experiment with FedCM, as previously announced through the beta program, to ensure flows will not be interrupted. Developers have the ability to temporarily exempt traffic from using FedCM until Chrome enforces the restriction of third-party cookies.

Audience

This update is for all GIS web developers who rely on the Chrome browser and use:

- One Tap, or

- Automatic Sign-In

Context

As part of the Privacy Sandbox initiative to keep people’s activity private and support free experiences for everyone, Chrome is phasing out support for third-party cookies, subject to addressing any remaining concerns of the CMA. Scaled testing began at 1% in January and will continue throughout the year.

GIS currently uses third-party cookies to allow users to sign up and sign in to websites easily and securely by reducing reliance on passwords. The FedCM API is a new privacy-preserving alternative to third-party cookies for federated identity providers. It allows Google to continue providing a secure, streamlined experience for signing up and signing in to websites. Last August, the Google Identity team announced a beta program for developers to test the Chrome browser’s new FedCM API supporting GIS.

What to Expect in the Migration

Partners who offer GIS’s One Tap and Automatic Sign-In features will automatically be migrated to FedCM in April. For most developers, this migration will occur seamlessly through backwards-compatible updates to the GIS JavaScript library; the GIS library will call the FedCM APIs behind the scenes, without requiring any developer changes. The new FedCM APIs have minimal impact to existing user flows.

Some Developers May be Required to Make Changes

Some websites with custom integrations may require minor changes, such as updates to custom layouts or positioning of sign-in prompts. Websites using embedded iframes for sign-in or a non-compliant Content Security Policy may need to be updated. To learn if your website will require changes, please review the migration guide. We encourage you to enable and experiment with FedCM, as previously announced through the beta program, to ensure flows will not be interrupted.

Migration Timeline

If you are using GIS One Tap or Automatic Sign-in on your website, please be aware of the following timelines:

- January 2024: Chrome began scaled testing of third-party cookie restrictions at 1%.

- April 2024: GIS begins a migration of all websites to FedCM on the Chrome browser.

- Q3 2024: Chrome begins ramp-up of third-party cookie restrictions, reaching 100% of Chrome clients by the end of Q4, subject to addressing any remaining concerns of the CMA.

Once the Chrome browser restricts third-party cookies by default for all Chrome clients, the use of FedCM will be required for partners who use GIS One Tap and Automatic Sign-In features.

Checklist for Developers to Prepare

✅ Be aware of migration plans and timelines that will affect your traffic. Determine your migration approach. Developers will be migrated by default starting in April.

✅ All developers should verify that their website will be unaffected by the migration. Opt in to FedCM to test and make any necessary changes to ensure a smooth transition. For developers with implementations that require changes, make changes ahead of the migration deadline.

✅ For developers that use Automatic Sign-In, review the FedCM changes to the user gesture requirement. We recommend all automatic sign-in developers migrate to FedCM as soon as possible, to reduce disruption to automatic sign-in conversion rates.

✅ If you need more time to verify FedCM functionality on your site and make changes to your code, you can temporarily exempt your traffic from using FedCM until the enforcement of third-party cookie restrictions by Chrome.
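Opting in to FedCM, or temporarily exempting traffic, comes down to one setting passed when the GIS library is initialized. The TypeScript sketch below assumes the `use_fedcm_for_prompt` field described in the GIS migration guide, where true opts in and false is understood to exempt traffic until Chrome enforces the restriction; confirm the current behavior in the guide. The `GisIdConfigSubset` interface and `buildGisConfig` helper are hypothetical, and `YOUR_CLIENT_ID` is a placeholder.

```typescript
// Subset of the GIS initialization options used in this sketch.
interface GisIdConfigSubset {
  client_id: string;
  callback: (response: { credential: string }) => void;
  use_fedcm_for_prompt?: boolean;
}

// Hypothetical helper that builds the options object so the FedCM choice
// lives in one place and can be switched per environment.
function buildGisConfig(
  clientId: string,
  useFedCm: boolean,
  onCredential: (jwt: string) => void
): GisIdConfigSubset {
  return {
    client_id: clientId,
    callback: (response) => onCredential(response.credential),
    use_fedcm_for_prompt: useFedCm,
  };
}

const fedCmConfig = buildGisConfig("YOUR_CLIENT_ID", true, (jwt) => {
  console.log("received credential of length", jwt.length);
});
console.log(fedCmConfig.use_fedcm_for_prompt);

// In a page that has loaded the GIS client library, the object would be
// passed to the library like this:
// google.accounts.id.initialize(fedCmConfig);
// google.accounts.id.prompt();
```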

To get started and learn more about FedCM, visit our developer site and check out the google-signin tag on Stack Overflow for technical assistance. We invite developers to share their feedback with us at [email protected].

What’s new in the Jetpack Compose January ’24 release

Today, as part of the Compose January ‘24 Bill of Materials, we’re releasing version 1.6 of Jetpack Compose, Android's modern, native UI toolkit that is used by apps such as Threads, Reddit, and Dropbox. This release largely focuses on performance improvements, as we continue to migrate modifiers and improve the efficiency of major parts of our API.

To use today’s release, upgrade your Compose BOM version to 2024.01.01.

Performance

Performance continues to be our top priority, and this release of Compose has major performance improvements across the board. We are seeing an additional ~20% improvement in scroll performance and ~12% improvement to startup time in our benchmarks, and this is on top of the improvements from the August ‘23 release. As with that release, most apps will see these benefits just by upgrading to the latest version, with no other code changes needed.

The improvement to scroll performance and startup time comes from our continued focus on memory allocations and lazy initialization, to ensure the framework is only doing work when it has to. These improvements can be seen across all APIs in Compose, especially in text, clickable, Lazy lists, and graphics APIs, including vectors, and were made possible in part by the Modifier.Node refactor work that has been ongoing for multiple releases.

There is also new guidance for you to create your own custom modifiers with Modifier.Node.

Configuring the stability of external classes

Compose compiler 1.5.5 introduces a new compiler option to provide a configuration file for what your app considers stable. This option allows you to mark any class as stable, including your own modules, external library classes, and standard library classes, without having to modify these modules or wrap them in a stable wrapper class. Note that the standard stability contract applies; this is just another convenient method to let the Compose compiler know what your app should consider stable. For more information on how to use stability configuration, see our documentation.

Generated code performance

The code generated by the Compose compiler plugin has also been improved. Small tweaks in this code can lead to large performance improvements because the code is generated in every composable function. The Compose compiler tracks Compose state objects to know which composables to recompose when there is a change of value; however, many state values are only read once, and some state values are never read at all but still change frequently! This update allows the compiler to skip the tracking when it is not needed.

Compose compiler 1.5.6 also enables “intrinsic remember” by default. This mode transforms remember at compile time to take into account information we already have about any parameters of a composable that are used as a key to remember. This speeds up the calculation of determining if a remembered expression needs reevaluating, but also means if you place a breakpoint inside the remember function during debugging, it may no longer be called, as the compiler has removed the usage of remember and replaced it with different code.

Composables not being skipped

We are also investing in making the code you write more performant, automatically. We want to optimize for the code you intuitively write, removing the need to dive deep into Compose internals to understand why your composable is recomposing when it shouldn’t.

This release of Compose adds support for an experimental mode we are calling “strong skipping mode”. Strong skipping mode relaxes some of the rules about which changes can skip recomposition, moving the balance towards what developers expect. With strong skipping mode enabled, composables with unstable parameters can also skip recomposition if the same object instances are passed in to their parameters. Additionally, strong skipping mode automatically remembers lambdas in composition that capture unstable values, in addition to the current default behavior of remembering lambdas with only stable captures. Strong skipping mode is currently experimental and disabled by default as we do not consider it ready for production usage yet. We are evaluating its effects before aiming to turn it on by default in Compose 1.7. See our guidance to experiment with strong skipping mode and help us find any issues.

Text

Changes to default font padding

This release now makes the includeFontPadding setting false by default. includeFontPadding is a legacy property that adds extra padding based on font metrics at the top of the first line and bottom of the last line of a text. Making this setting default to false brings the default text layout more in line with common design tools, making it easier to match the design specifications generated. Upon upgrading to the January ‘24 release, you may see small changes in your text layout and screenshot tests. For more information about this setting, see the Fixing Font Padding in Compose Text blog post and the developer documentation.

Support for nonlinear font scaling

The January ‘24 release uses nonlinear font scaling for better text readability and accessibility. Nonlinear font scaling prevents large text elements on screen from scaling too large by applying a nonlinear scaling curve. This scaling strategy means that large text doesn't scale at the same rate as smaller text.

Drag and drop

Compose Foundation adds support for platform-level drag and drop, which allows for content to be dragged between apps on a device running in multi-window mode. The API is 100% compatible with the View APIs, which means a drag and drop started from a View can be dragged into Compose and vice versa. To use this API, see the code sample.

Additional features

Other features that landed in this release include:

- Support for LookaheadScope in Lazy lists.

- Composables that have been deactivated but kept alive for reuse in a Lazy list are now filtered from semantics trees by default.

- Spline-based keyframes in animations.

- Support for selection by mouse, including text.

Get started!

We’re grateful for all of the bug reports and feature requests submitted to our issue tracker — they help us to improve Compose and build the APIs you need. Continue providing your feedback, and help us make Compose better!

Wondering what’s next? Check out our roadmap to see the features we’re currently thinking about and working on. We can’t wait to see what you build next!

Happy composing!

Source: Android Developers Blog

Star messages in Google Chat on mobile

What’s changing

Getting started

- Admins: There is no admin control for this feature.

- End users:

- To star a message, long-press it and tap star.

- You can unstar a message from the original message or unstar messages from the shortcut.

- Starring messages is unavailable in existing spaces organized by conversation topic (legacy threaded). Learn about the upgrade from conversation topics to in-line threading in spaces.

- Visit the Help Center to learn how to star messages in Chat.

Rollout pace

- Rapid Release and Scheduled Release domains: Gradual rollout (up to 15 days for feature visibility) starting on January 19, 2024

- Rapid Release and Scheduled Release domains: Gradual rollout (up to 15 days for feature visibility) starting on January 16, 2024

Availability

- Available to all Google Workspace customers and users with personal Google Accounts

Posted by Aurash Mahbod – General Manager, Games on Google Play

Posted by Mark Sherwood – Senior Product Manager and Juhyun Lee – Staff Software Engineer

Posted by Anirudh Dewani, Director of Android Developer Relations

Posted by Dominik Mengelt – Developer Relations Engineer, Payments and Florin Modrea – Product Solutions Engineer, Google Pay

Posted by Gina Biernacki, Product Manager
