The in-car experience continues to evolve rapidly, and Google remains committed to pushing the boundaries of what's possible. At Google I/O 2025, we're excited to unveil the latest advancements for drivers, car manufacturers, and developers, furthering our goal of a safe, seamless, and helpful connected driving experience.

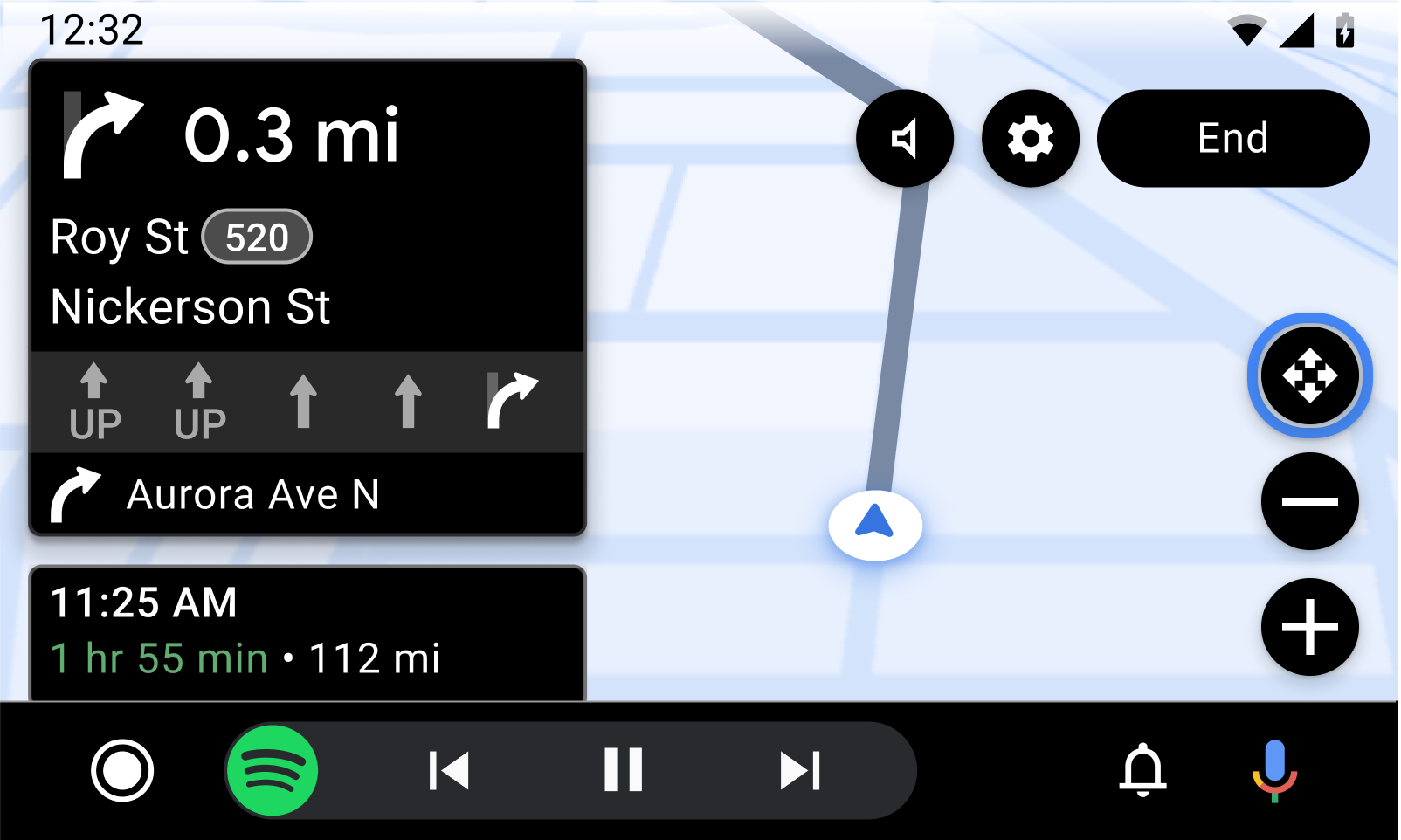

Today's car cabins are increasingly digital, offering developers exciting new opportunities with larger displays and more powerful computing. Android Auto is now supported in nearly all new cars sold, with almost 250 million compatible vehicles on the road.

We're also seeing significant growth in cars powered by Android Automotive OS with Google built-in. Over 50 models are currently available, with more launching this year. This growth is fueled by a thriving app ecosystem, including over 300 apps already available on the Play Store. These include apps optimized for a safe and seamless experience while driving, as well as entertainment apps for when you're parked and waiting in your car. Many of these are adaptive mobile apps that have been brought to cars through the Car Ready Mobile Apps Program.

A vibrant developer community is essential to delivering innovative in-car experiences across the different screens in the car cabin. This past year, we've focused on key areas that help developers build more differentiated experiences in cars across both platforms as we enter the Gemini era in cars!

Gemini for Cars

Exciting news for in-car experiences: Gemini, Google's advanced AI, is coming to vehicles! This unlocks a new era of safe and helpful interactions on the go.

Gemini enables natural voice conversations and seamless multitasking, empowering drivers to get more done simply by speaking naturally. Imagine effortlessly finding charging stations or navigating to a location pulled directly from an email, all with just your voice.

You can learn how to leverage Gemini's potential to create engaging in-car experiences in your app.

Navigation apps can integrate with Gemini using three core intent formats, allowing you to start navigation, display relevant search results, and execute custom actions, such as enabling users to report incidents like traffic congestion using their voice.
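The post does not spell out the three intent formats. As a minimal sketch, assuming that destinations and search requests arrive as `geo:` URIs like the ones Android documents for map intents (`geo:latitude,longitude` and `geo:0,0?q=query`), the Java below shows how a navigation app might tell a coordinate destination apart from a search request. `GeoIntentSketch`, `Kind`, `GeoRequest`, and `parse` are hypothetical names, and treating a `q=` parameter as a search is an assumption made for illustration; check the official Gemini integration guidance for the exact formats.

```java
import java.net.URLDecoder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of the Car App Library or any Gemini API.
// Splits an incoming geo: URI into "navigate to these coordinates" or
// "search for this text", an illustrative mapping chosen for this sketch.
final class GeoIntentSketch {

    // Our own labels for the two behaviors this sketch distinguishes.
    enum Kind { NAVIGATE_TO_COORDINATES, SEARCH }

    // Parsed request; query is null when the URI carries only coordinates.
    record GeoRequest(Kind kind, double lat, double lng, String query) {}

    static GeoRequest parse(String uri) {
        if (uri == null || !uri.startsWith("geo:")) {
            throw new IllegalArgumentException("Not a geo: URI: " + uri);
        }
        String rest = uri.substring("geo:".length());

        // Separate the coordinate part from the optional ?query string.
        String coords = rest;
        String query = null;
        int qIndex = rest.indexOf('?');
        if (qIndex >= 0) {
            coords = rest.substring(0, qIndex);
            for (String param : rest.substring(qIndex + 1).split("&")) {
                if (param.startsWith("q=")) {
                    // Decodes %XX escapes and turns '+' into spaces.
                    query = URLDecoder.decode(param.substring(2), StandardCharsets.UTF_8);
                }
            }
        }

        // Drop RFC 5870 parameters such as ";u=35" before reading lat,lng.
        coords = coords.split(";", 2)[0];
        String[] parts = coords.split(",");
        if (parts.length < 2) {
            throw new IllegalArgumentException("Missing coordinates: " + uri);
        }
        // NumberFormatException is an IllegalArgumentException, so bad
        // numbers are rejected the same way as malformed URIs.
        double lat = Double.parseDouble(parts[0].trim());
        double lng = Double.parseDouble(parts[1].trim());

        // A non-empty q= is treated as a search (e.g. "geo:0,0?q=ev+charging");
        // otherwise the coordinates are treated as the destination.
        if (query != null && !query.isBlank()) {
            return new GeoRequest(Kind.SEARCH, lat, lng, query);
        }
        return new GeoRequest(Kind.NAVIGATE_TO_COORDINATES, lat, lng, null);
    }
}
```

In an app, the URI string would typically come from the incoming intent's data. The third kind of intent, custom actions such as voice incident reports, is not modeled here.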

Gemini for cars will be rolling out in the coming months. Get ready to build the next generation of in-car AI experiences!

New developer programs and tools

Last year, we introduced car app quality tiers to inspire developers to create high quality in-car experiences. By developing your app in compliance with the Car ready tier, you can bring video, gaming, or browser apps to run while parked in cars with Google built-in with almost no additional effort. Learn more about Car Ready Mobile Apps.

Your app can shine even further in cars by reaching the Car optimized and Car differentiated tiers. These tiers unlock experiences while the car is in motion and as it transitions between parked and driving modes, and they take advantage of the different screens in the modern car cabin. Check the car app quality guidelines for details.

First, across both Android Auto and cars with Google built-in, we've made some exciting improvements to the Car App Library:

- The Weather app category has graduated from beta: any developer can now publish weather apps to production tracks on both Android Auto and cars with Google built-in. Before you publish your app, check that it meets the quality guidelines for weather apps.

- Designing templated apps is easier than ever with the Car App Templates Design Kit we just published on Figma.

- Two new templates, the SectionedItemTemplate and MediaPlaybackTemplate, are now available in the Car App Library 1.8 alpha release for use on Android Auto. These templates are a great fit for building templated media apps, allowing for increased customization in layout and browsing structure.

On Android Auto, many new app categories and capabilities are now in beta:

- We are adding support for building media apps with the Car App Library, enabling media app developers to build richer, more complete experiences like the ones users are used to on their phones. During beta, developers can build and publish media apps built using the Car App Library to internal testing and closed testing tracks. You can also express interest in being an early access partner to publish to production while the category is in beta.

- The communications category is in beta. We've simplified integration for calling apps through the CallsManager Jetpack API. Together with the templates provided by the Car App Library, this enables communications apps to build features like full message history, upcoming meetings lists, rich in-call views, and more. During beta, developers can build and publish communications apps to internal testing and closed testing tracks. You can also express interest in being an early access partner to publish to production while the category is in beta.

- Games are now supported in Android Auto while parked, on phones running Android 15 and above. You can already find popular titles such as Angry Birds 2, Farm Heroes Saga, Candy Crush Soda Saga, and Beach Buggy Racing 2. The Games category is in beta, and developers can publish games to internal testing and closed testing tracks. You can also express interest in being an early access partner to publish to production while the category is in beta.

Finally, we have further simplified the building, testing, and distribution experience for developers building apps for Android Automotive OS cars with Google built-in:

- The Games category is now in beta for cars with Google built-in, allowing developers to distribute their adaptive games to cars. You can express interest in releasing to the production track. Google Play Games Services (v2) are now available on cars with Google built-in, enabling seamless login flows, cross-device save states, and more. Get started with Google Play Games Services to learn more.

- Distribution through Google Play is more flexible than ever. Apps in the parked categories can now distribute the same APK or App Bundle to cars with Google built-in that they ship to phones, including through the mobile release track. Learn more on how to Distribute to cars.

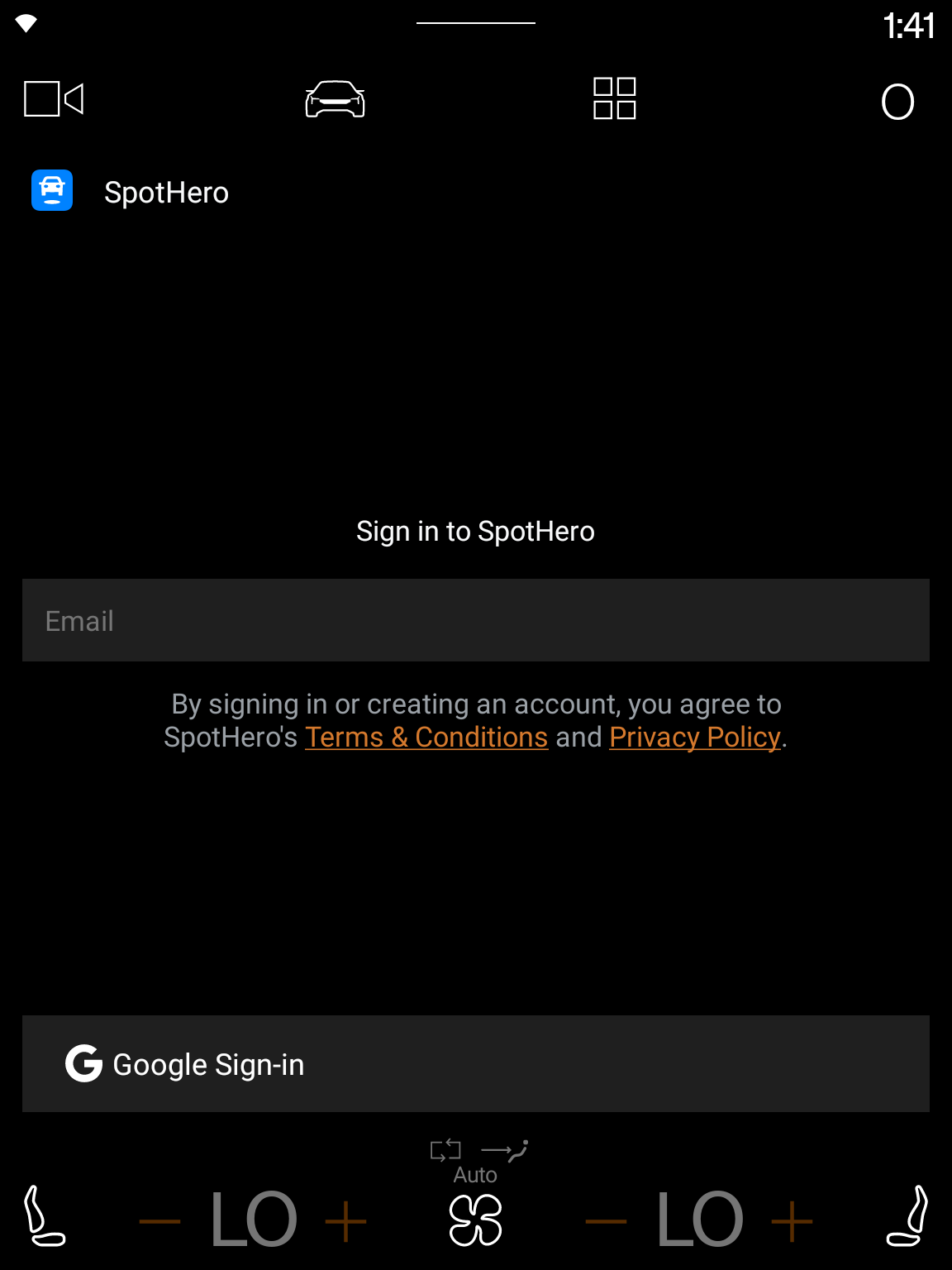

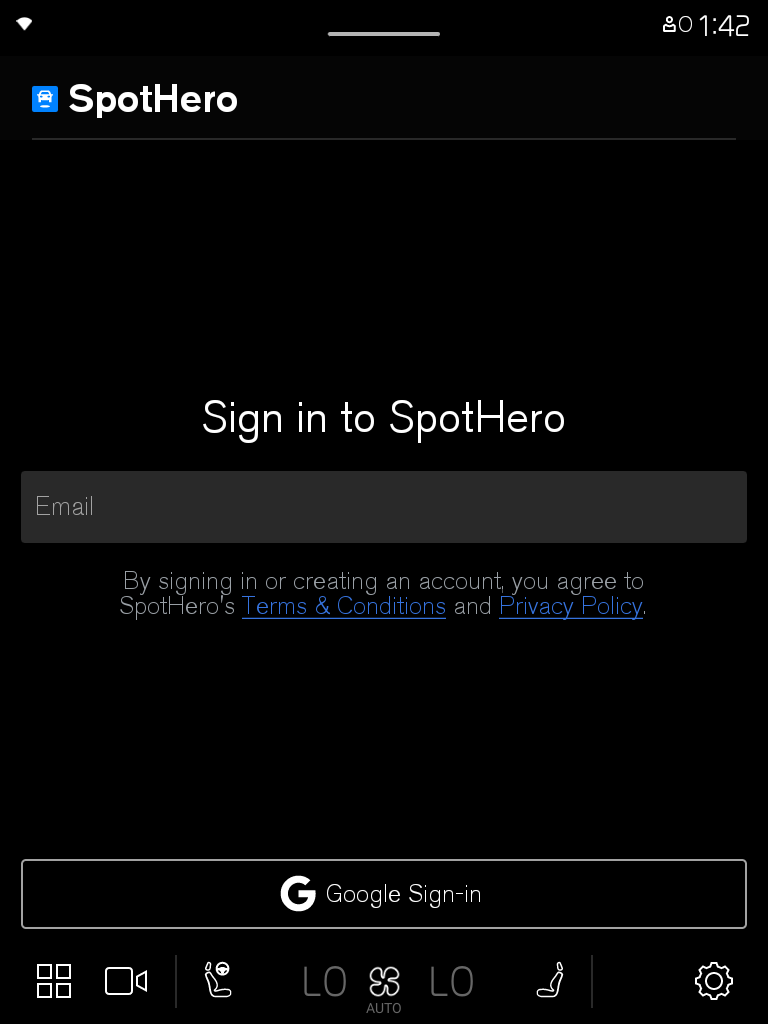

- Android Automotive OS on Pixel Tablet is now generally available, giving you a physical device option for testing Android Automotive OS apps without buying or renting a car. Additionally, the most recent system images include support for acting as an Android Auto receiver, meaning you can use the same device to test both your app’s experience on Android Auto and Android Automotive OS. Apply for access to these images.

The road ahead

You can look forward to more updates later this year, including:

- Video apps will be supported on Android Auto, starting with phones running Android 16 on select compatible cars. If your app is already adaptive, bringing it to cars for use while parked requires only a few steps.

- For Android Automotive OS cars running Android 14+ with Google built-in, we are working with car manufacturers to add additional app compatibility, to enable thousands of adaptive mobile apps in the next phase of the Car Ready Mobile Apps Program.

- Updated design documentation that visualizes car app quality guidelines and integration paths to simplify designing your app for cars.

- Google Play Services for cars with Google built-in are expanding to bring them on par with mobile, including:

  a. Passkeys and Credential Manager APIs for a more seamless user sign-in experience.

  b. Quick Share, which will enable easy cross-device sharing from phone to car.

- Audio while driving for video apps: for cars with Google built-in, we're working with OEMs to enable audio-only listening for video apps while driving. Sign up to express interest in participating in the early access program.

- Firebase Test Lab is adding Android Automotive OS devices to its device catalog, making it possible to test on real car hardware without needing to buy or rent a car. Sign up to express interest in becoming an early access partner.

- Pre-launch reports for Android Automotive OS are coming soon to the Play Console, helping you ensure app quality before distributing your app to cars.

Stay up to date on these features and more at goo.gle/cars-whats-new as we continuously invest in the future of Android in the car. Stay tuned for more resources to help you build innovative and engaging experiences for drivers and passengers.

Ready to publish your car app? Check our guidance for distributing to cars.

Explore this announcement and all Google I/O 2025 updates on io.google starting May 22.

Posted by Ben Sagmoe - Developer Relations Engineer

Posted by Vivek Radhakrishnan – Technical Program Manager, and Seung Nam – Product Manager

Posted by Jennifer Tsau, Product Management Lead and David Dandeneau, Engineering Lead