Posted by Mickey Kataria, Director of Product Management

For over a decade, Google has been committed to automotive, with a vision of creating a safe and seamless connected experience in every car. Developers like all of you are a crucial part of helping people stay connected while on the go. We’re seeing strong momentum across our in-car experiences, Android Auto and Android Automotive OS, and today, we’re excited to share the latest updates and opportunities to reach users in the car.

Check out our I/O session: What's new with Android for Cars

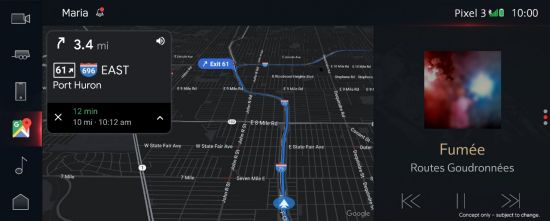

Android Auto

Android Auto, which allows users to connect their phone to their car display, now has over 100 million compatible cars on the road and is supported by nearly every major car manufacturer. Porsche is our newest partner and will begin shipping Android Auto on new cars this summer, starting with the Porsche 911.

We’ve been working closely with car manufacturers to build an even better Android Auto experience by enabling wireless projection in more vehicles, extending availability to more countries, and continuing to launch new features, like integration into the instrument cluster. To see some of the newest Android Auto technology in the BMW iX, check out the video below.

Android Auto projecting to the cluster display in a BMW iX.

Android Automotive OS

Our newest in-car experience, Android Automotive OS with Google apps and services built-in, also has strong momentum. With this experience, the entire infotainment system is powered by Android and users can access Google Assistant, Google Maps, and more apps from Google Play directly from the car screen without relying on a phone. Cars from Polestar and Volvo, like the Polestar 2 and the Volvo XC40 Recharge, are already available to customers. And by the end of 2021, this experience will be available to order in more than 10 car models from Volvo, General Motors and Renault. You can get a sneak peek of this customized experience in the new GMC HUMMER EV below.

The all-electric GMC HUMMER EV infotainment features Android Automotive OS with Google built-in. Preproduction model shown. Actual production models may vary. Initial availability Fall 2021.

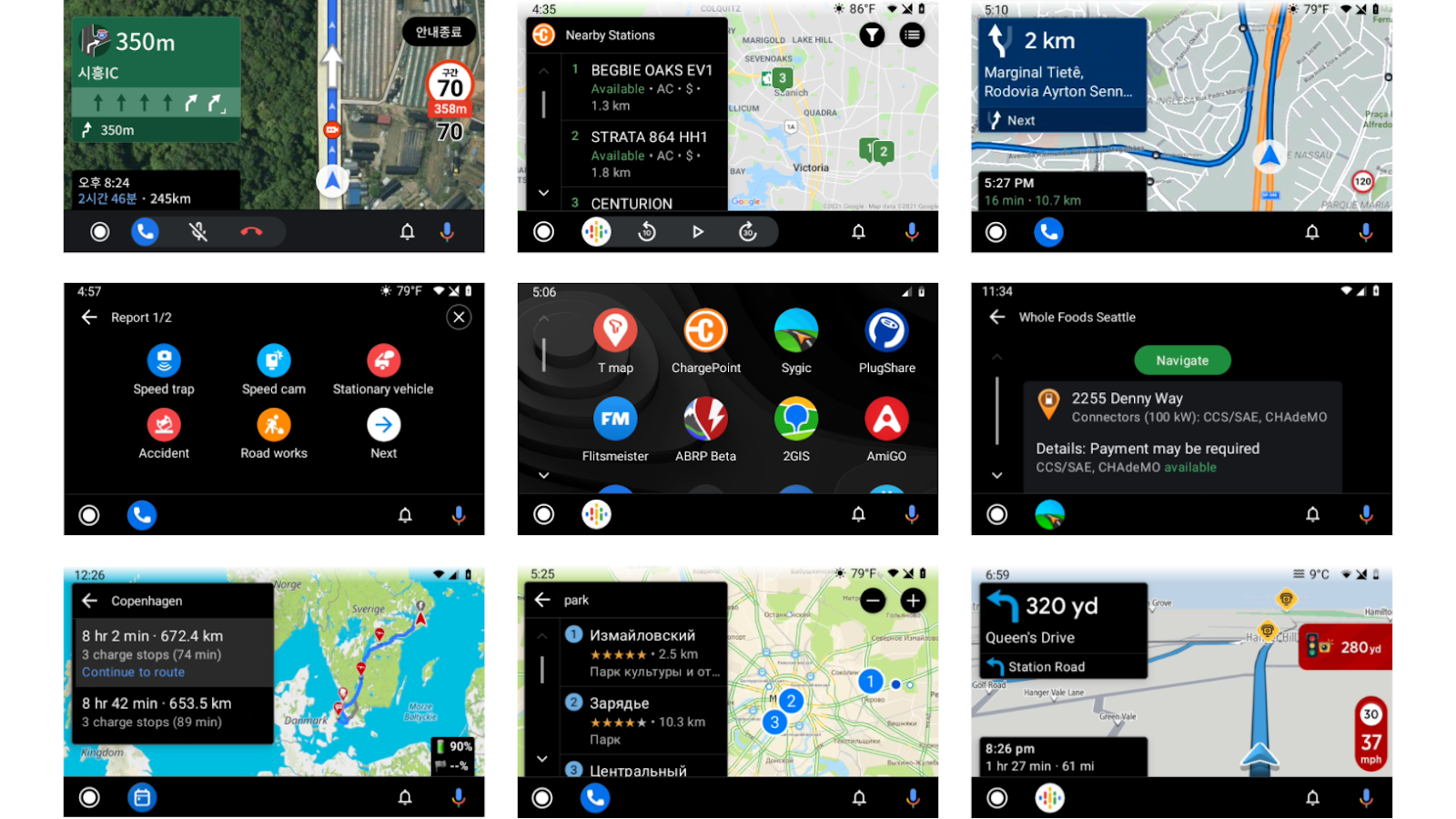

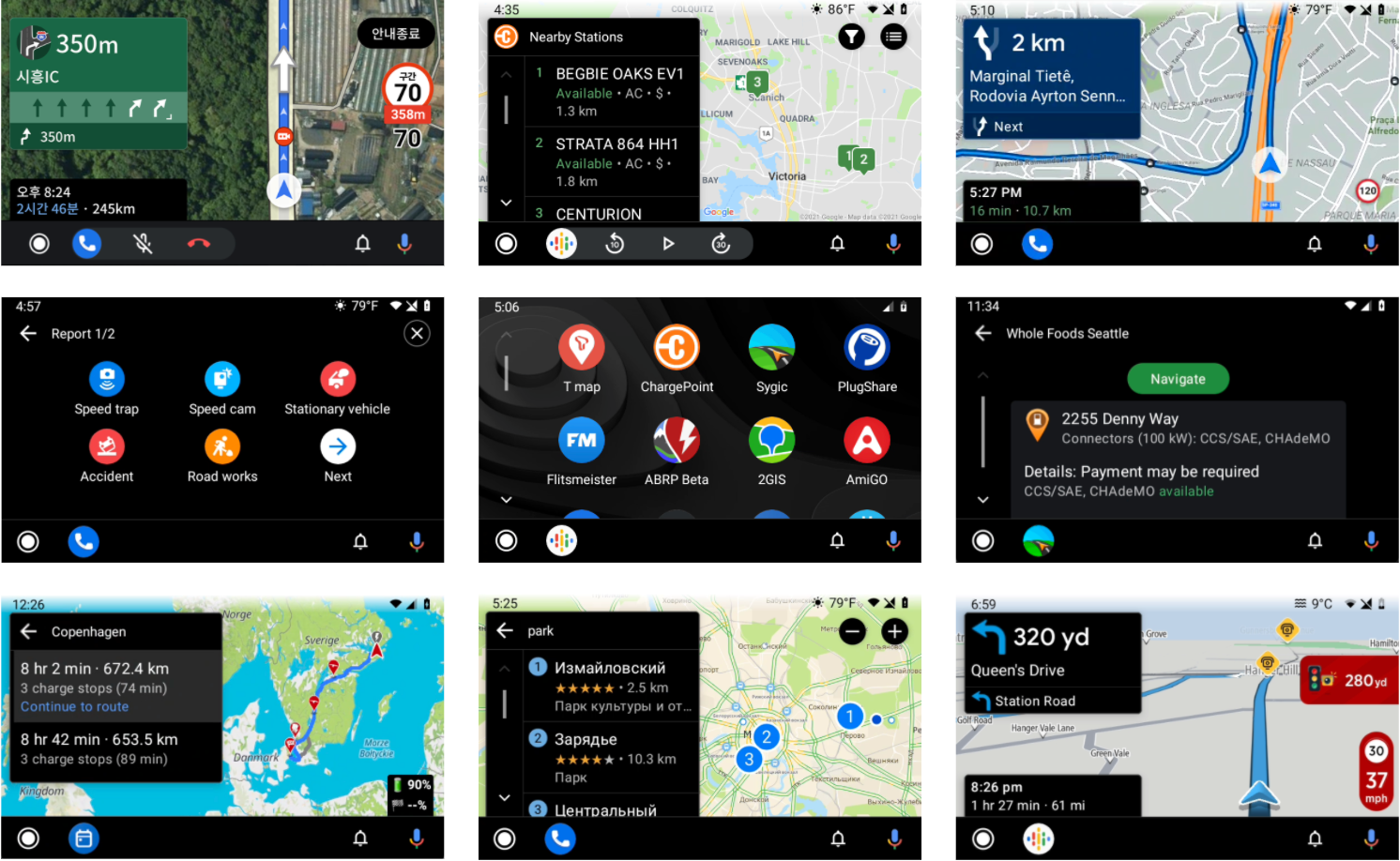

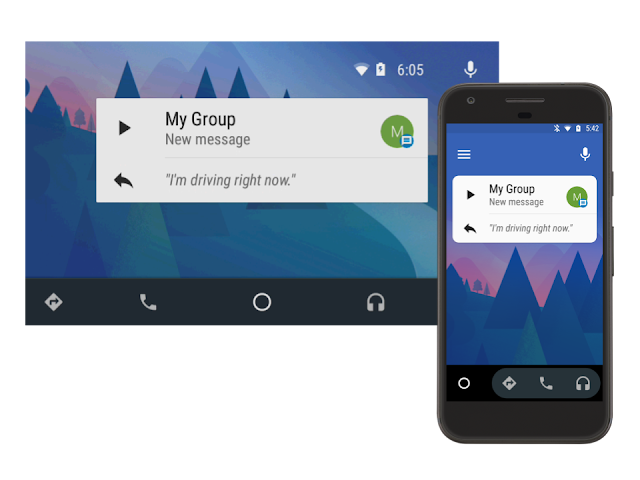

Developing new apps for cars

To support this growing ecosystem, we recently made the Android for Cars App Library available as part of Jetpack. It allows developers of navigation, EV charging and parking apps to bring their apps to Android Auto-compatible cars. Many of these developers have already published their Android Auto apps to the Play Store. We’re now extending this library to also support Android Automotive OS, making it easy for you to build once and generate apps that are compatible with both platforms. We’re already working with Early Access Partners, including ParkWhiz, PlugShare, Sygic, ChargePoint, Flitsmeister, SpotHero and others, to bring apps in these categories to cars powered by Android Automotive OS.

PlugShare, an app for finding EV chargers, has used the Android for Cars App Library and Google Assistant App Actions to build for Android Auto.

We plan to expand to more app categories in the future, so if you’re interested in joining our Early Access Program, please fill out this interest form. You can also get started with the Android for Cars App Library today by visiting g.co/androidforcars. Lastly, you can always get help from the developer community on Stack Overflow using the android-automotive and android-auto tags. We can’t wait to see what you build next!