Today we are launching Android Studio Iguana 🦎 in the stable release channel to make it easier for you to create high quality apps. With features ranging from version control system support in App Quality Insights to new built-in support for creating Baseline Profiles for Jetpack Compose apps, this version should enhance your development workflow as you optimize your app. Download the latest version today!

Check out the list of new features in Android Studio Iguana below, organized by key developer flows.

Debugging

Version control system integration in App Quality Insights

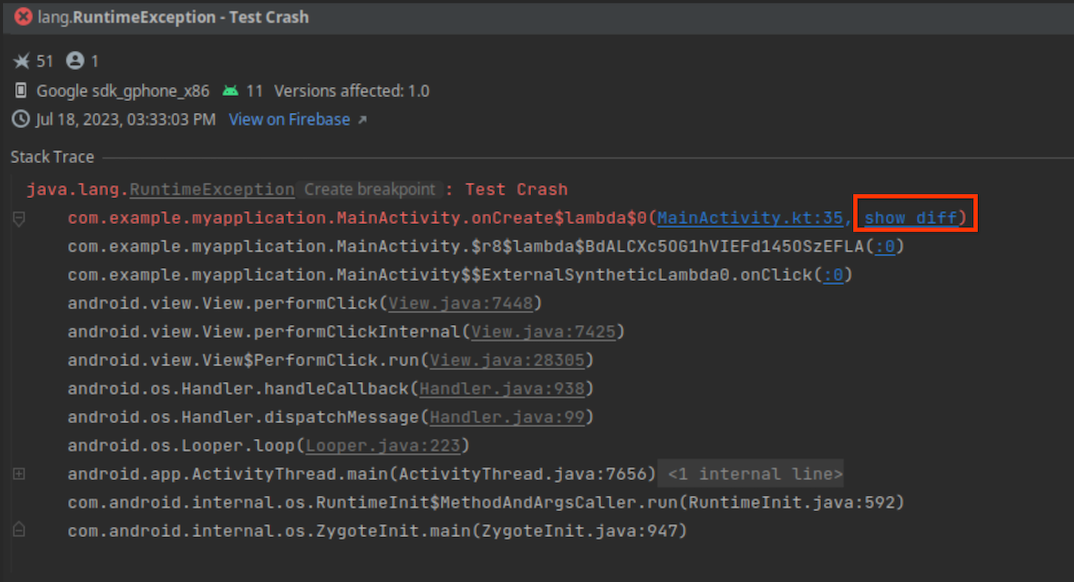

When your release build is several commits behind your local source code, line numbers in Firebase Crashlytics crash reports can easily go stale, making it more difficult to accurately navigate from crash to code when using App Quality Insights. If you’re using git for your version control, there’s now a solution to this problem.

When you build your app using Android Gradle Plugin 8.3 or later and the latest version of the Crashlytics SDK, AGP includes git commit information as part of the build artifact that is published to the Play Store. When a crash occurs, Crashlytics attaches the git information to the report, and Android Studio Iguana uses this information to compare your local checkout with the exact code that caused the crash from your git history.

After you build your app using Android Gradle Plugin 8.3 or higher with the latest Crashlytics SDK, and publish it, new crash reports in the App Quality Insights window let you either navigate to the line of code in your current git checkout or view a diff report between the current checkout and the version of your app codebase that generated the crash report. Learn more.
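As far as we understand the AGP DSL, release builds embed this Git information by default, while debuggable build types need it switched on. The following is a minimal sketch of a module-level `build.gradle.kts`, assuming the `vcsInfo` block available in AGP 8.3 or later; it is a configuration fragment, not a complete build file.

```kotlin
// Module-level build.gradle.kts (fragment; assumes AGP 8.3 or later).
android {
    buildTypes {
        getByName("debug") {
            // Also embed Git commit information in debuggable builds,
            // so crash reports from them can be matched to a commit.
            vcsInfo {
                include = true
            }
        }
    }
}
```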

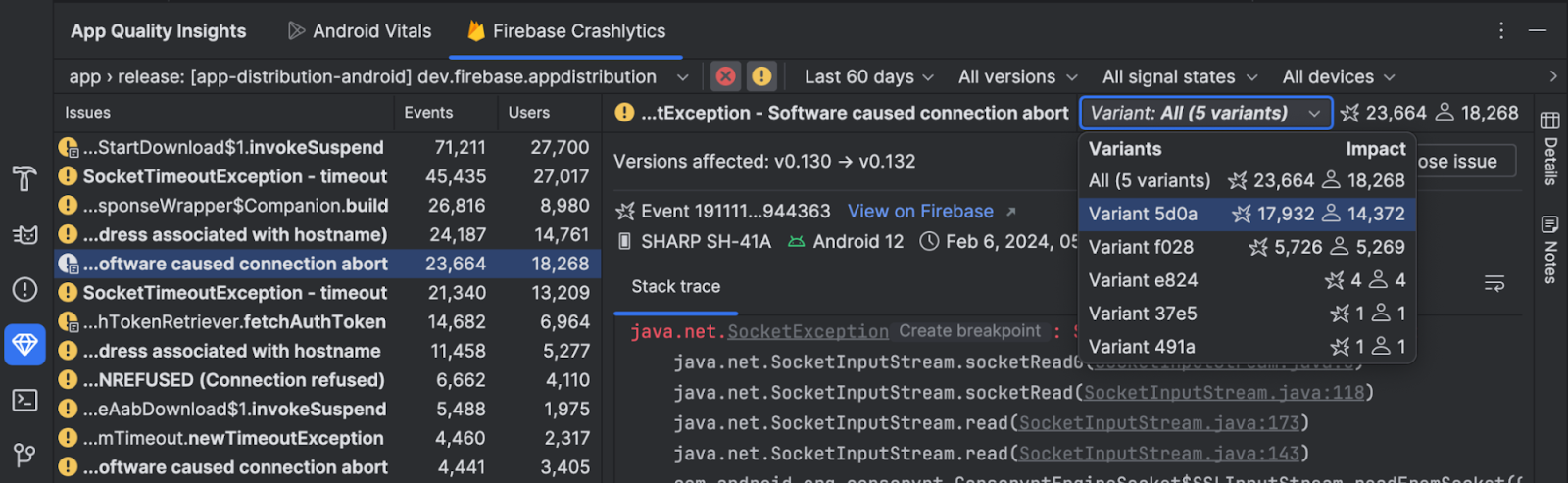

View Crashlytics crash variants in App Quality Insights

Today, when you select a Crashlytics issue in App Quality Insights, you see aggregated data from events that share identical points of failure in your code, but may have different root causes. To aid in your analysis of the root causes of a crash, Crashlytics now groups events that share very similar stack traces as issue variants. You can now view events in each variant of a crash report in App Quality Insights by selecting a variant from the dropdown. Alternatively, you can view aggregate information for all variants by selecting All.

Design

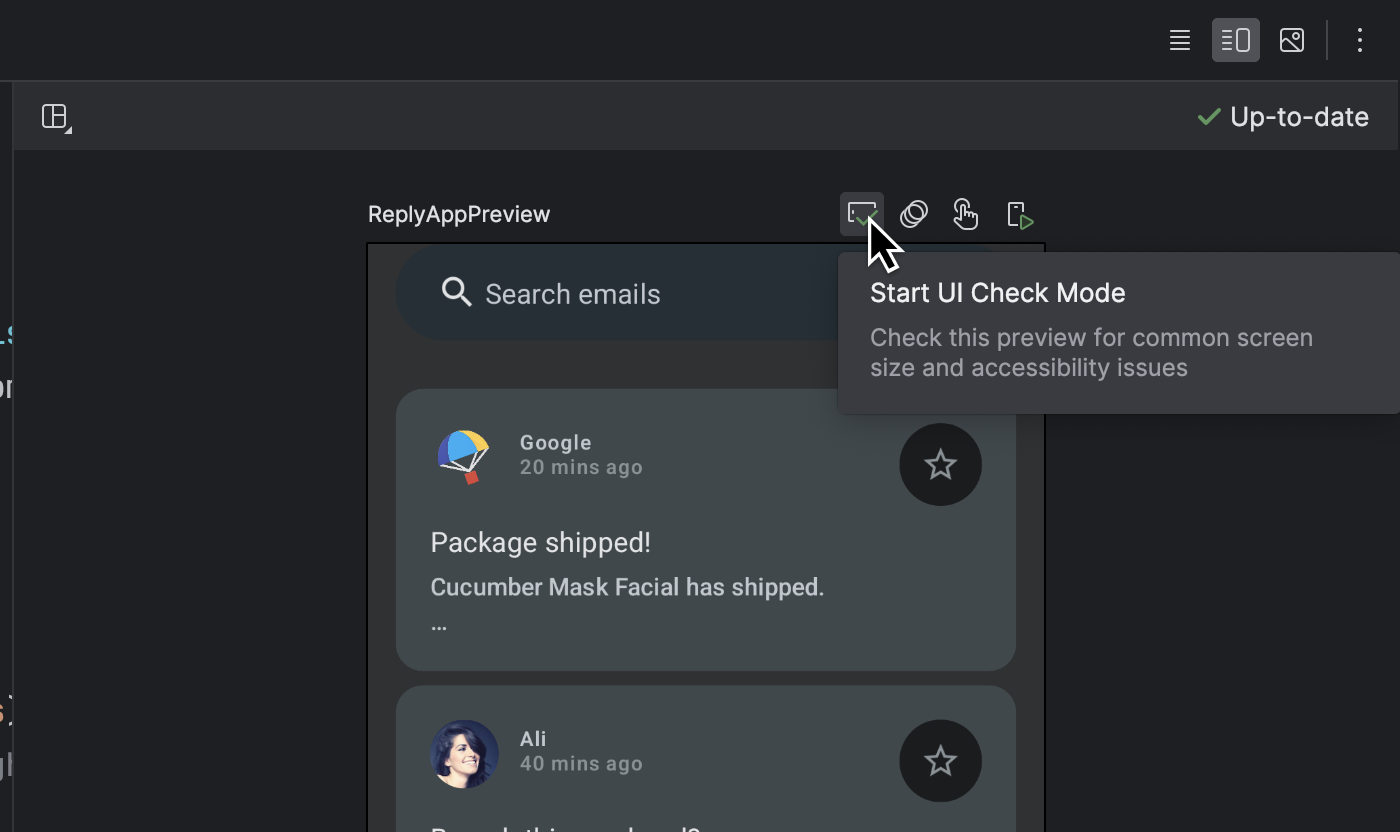

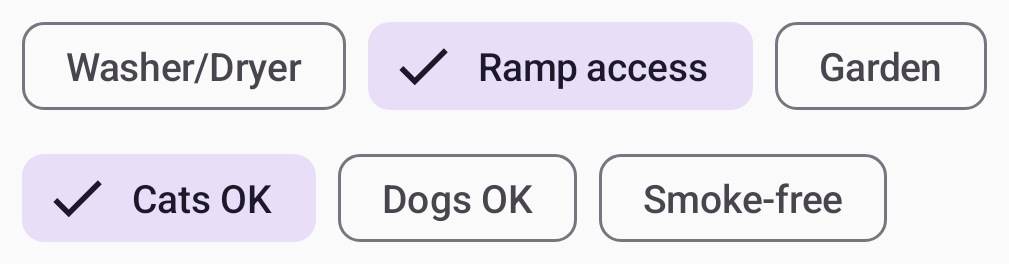

Jetpack Compose UI Check

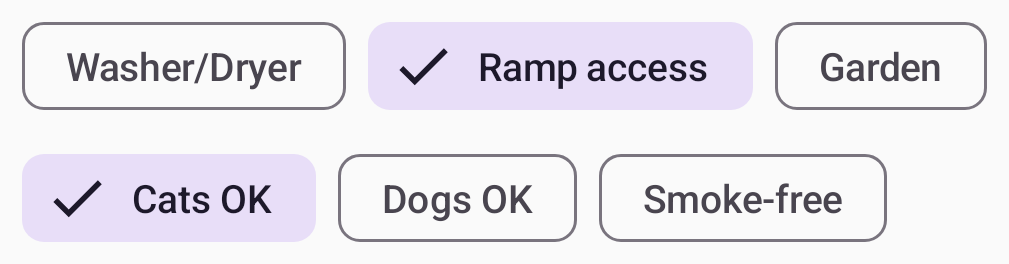

To help developers build adaptive and accessible UI in Jetpack Compose, Iguana introduces a new UI Check mode in Compose Preview. This feature works similarly to the visual linting and accessibility check integrations for views. Activate Compose UI Check mode to automatically audit your Compose UI for adaptive and accessibility issues across different screen sizes, such as text that's stretched on large screens or low color contrast. The mode highlights issues found in different preview configurations and lists them in the Problems panel.

Try it out by clicking the UI Check icon in Compose Preview.

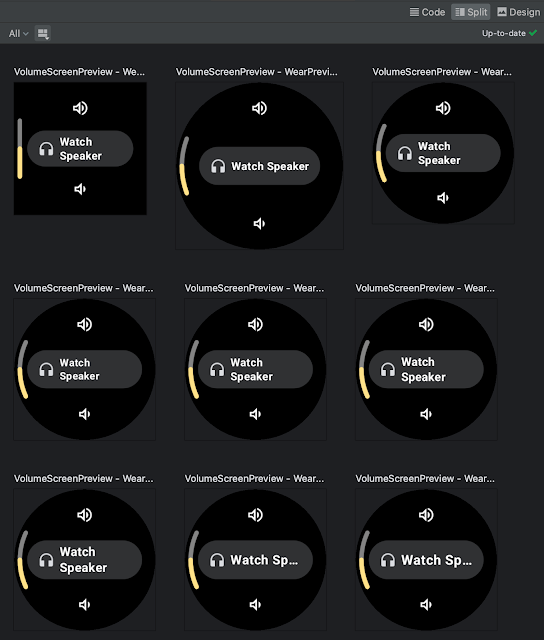

Progressive rendering for Compose Preview

Compose Previews in Android Studio Iguana now implement progressive rendering, allowing you to iterate on your designs with less loading time. This feature automatically lowers the detail of out-of-view previews to boost performance, meaning you can scroll through even the most complex layouts without lag.

Develop

IntelliJ Platform Update

Android Studio Iguana includes the IntelliJ 2023.2 platform release, which has many new features such as support for GitLab, text search in Search Everywhere, color customization updates to the new UI and a host of new improvements. Learn more.

Testing

Baseline Profiles module wizard

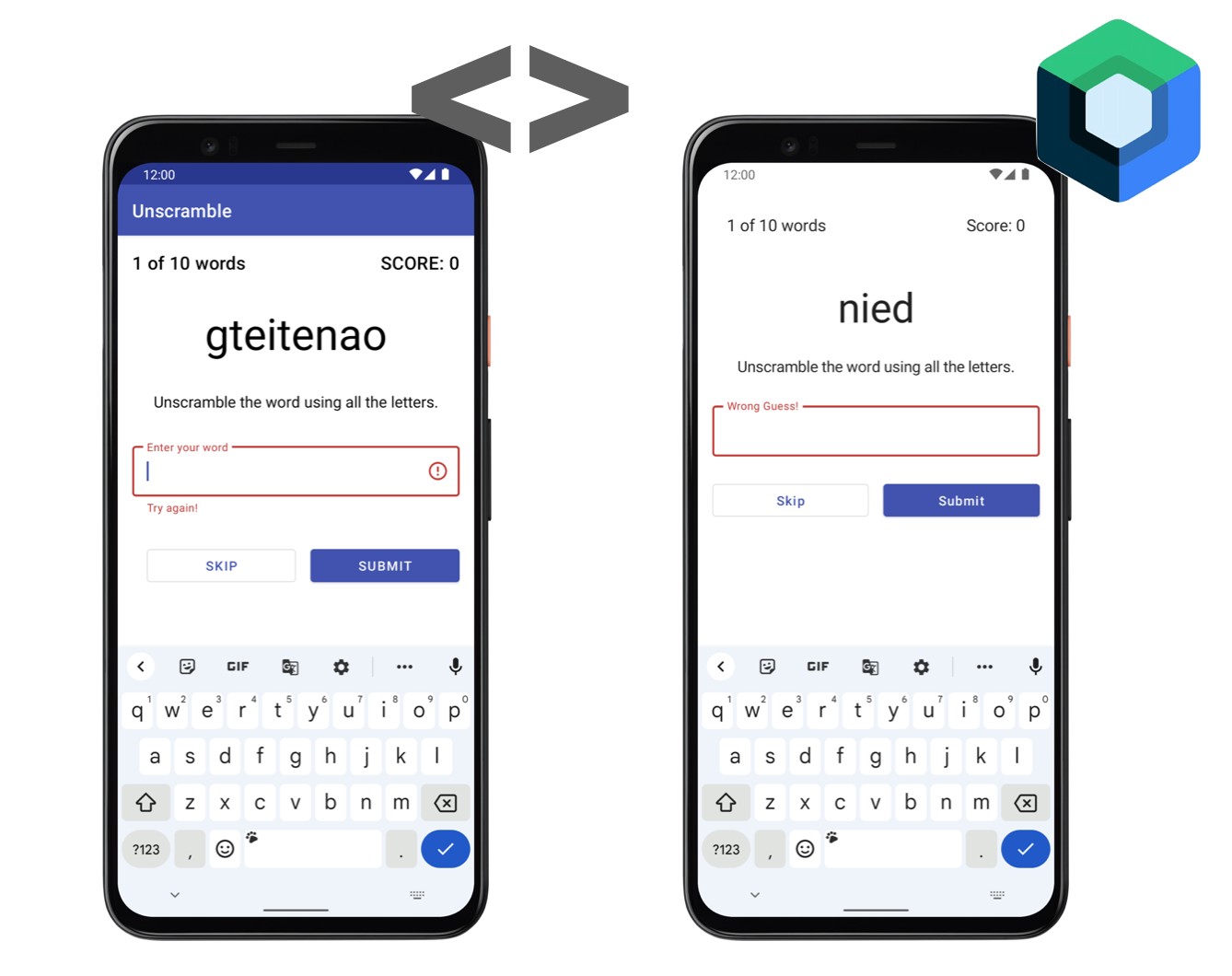

Often, the first time you run an Android app on a device, the app can appear slow to start because the operating system has to run just-in-time compilation. To improve this situation, you can create Baseline Profiles that help Android improve aspects like app start-up time, scrolling, and navigation speed in your apps. We are simplifying the process of setting up a Baseline Profile by offering a new Baseline Profile Generator template in the new module wizard (File > New > New Module). This template configures your project to support Baseline Profiles and uses the latest Baseline Profiles Gradle plugin, which simplifies setup by automating required tasks with a single Gradle command.

Furthermore, the template creates a run configuration that enables you to generate a Baseline Profile with a single click from the "Select Run/Debug Configuration" dropdown list.
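To give a sense of what such a generator module contains, here is a minimal sketch of a generator test built on `BaselineProfileRule` from the Jetpack Macrobenchmark library. The package name `com.example.app` is a placeholder, and the class the template actually generates may differ in its details.

```kotlin
import androidx.benchmark.macro.junit4.BaselineProfileRule
import androidx.test.ext.junit.runners.AndroidJUnit4
import androidx.test.filters.LargeTest
import org.junit.Rule
import org.junit.Test
import org.junit.runner.RunWith

@RunWith(AndroidJUnit4::class)
@LargeTest
class BaselineProfileGenerator {

    @get:Rule
    val rule = BaselineProfileRule()

    @Test
    fun generate() {
        // "com.example.app" is a placeholder for your app's package name.
        rule.collect(packageName = "com.example.app") {
            // Start from the home screen, then launch the default activity
            // and wait for it to draw, so the startup path is recorded.
            pressHome()
            startActivityAndWait()
        }
    }
}
```

With the Baseline Profiles Gradle plugin applied, a profile can typically also be generated from the command line with `./gradlew :app:generateBaselineProfile`, assuming the app module is named `app`.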

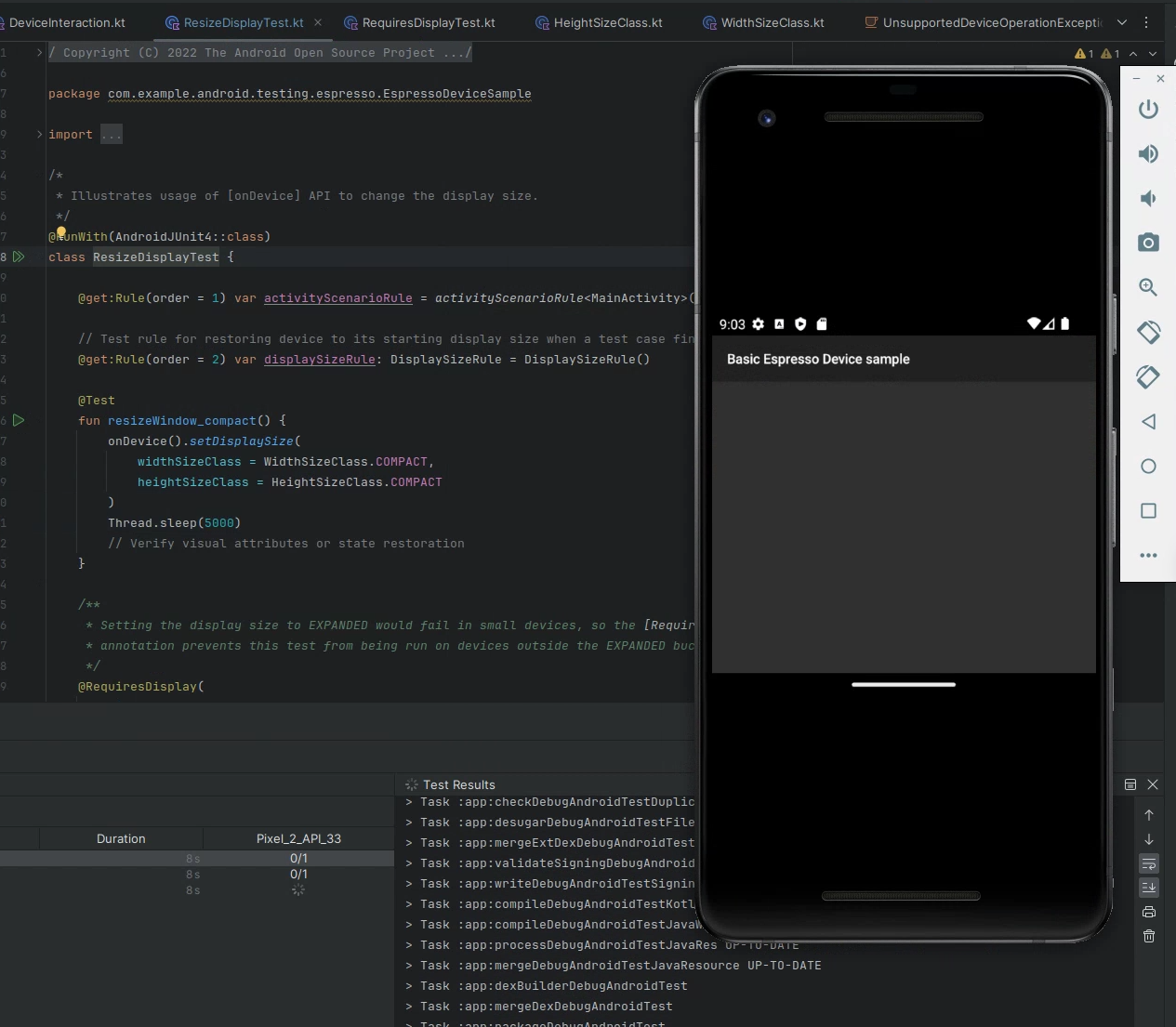

Test against configuration changes with the Espresso Device API

Catch layout problems early and ensure your app delivers a seamless user experience across devices and orientations. The Espresso Device API synchronously simulates configuration changes on virtual devices, such as screen rotation, device folding and unfolding, or window size changes, so you can test how your app reacts to them. These APIs help you rigorously test and preemptively fix issues that frustrate users, so you can build more reliable Android apps with confidence. They are built on top of new gRPC endpoints introduced in Android Emulator 34.2, which enable secure bidirectional data streaming and precise sensor simulation.
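As an illustration, a rotation test using the Espresso Device API might look like the sketch below. It assumes the `androidx.test.espresso:espresso-device` artifact is on the test classpath and that the test runs on an emulator with emulator control enabled in the build. `MainActivity` and `R.id.detail_pane` are hypothetical names standing in for your own activity and view.

```kotlin
package com.example.app // hypothetical package containing MainActivity and R

import androidx.test.espresso.Espresso.onView
import androidx.test.espresso.assertion.ViewAssertions.matches
import androidx.test.espresso.device.EspressoDevice.Companion.onDevice
import androidx.test.espresso.device.action.ScreenOrientation
import androidx.test.espresso.device.rules.ScreenOrientationRule
import androidx.test.espresso.matcher.ViewMatchers.isDisplayed
import androidx.test.espresso.matcher.ViewMatchers.withId
import androidx.test.ext.junit.rules.ActivityScenarioRule
import androidx.test.ext.junit.runners.AndroidJUnit4
import org.junit.Rule
import org.junit.Test
import org.junit.runner.RunWith

@RunWith(AndroidJUnit4::class)
class RotationTest {

    // Launches the (hypothetical) MainActivity before each test.
    @get:Rule
    val activityRule = ActivityScenarioRule(MainActivity::class.java)

    // Starts every test in portrait and restores the original orientation afterwards.
    @get:Rule
    val orientationRule = ScreenOrientationRule(ScreenOrientation.PORTRAIT)

    @Test
    fun detailPaneIsShownInLandscape() {
        // Rotate the virtual device; the call returns once the rotation has completed.
        onDevice().setScreenOrientation(ScreenOrientation.LANDSCAPE)

        // R.id.detail_pane is a hypothetical view expected to be visible in landscape.
        onView(withId(R.id.detail_pane)).check(matches(isDisplayed()))
    }
}
```

For foldable devices, the same `onDevice()` entry point offers calls such as `setFlatMode()` and `setTabletopMode()`.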

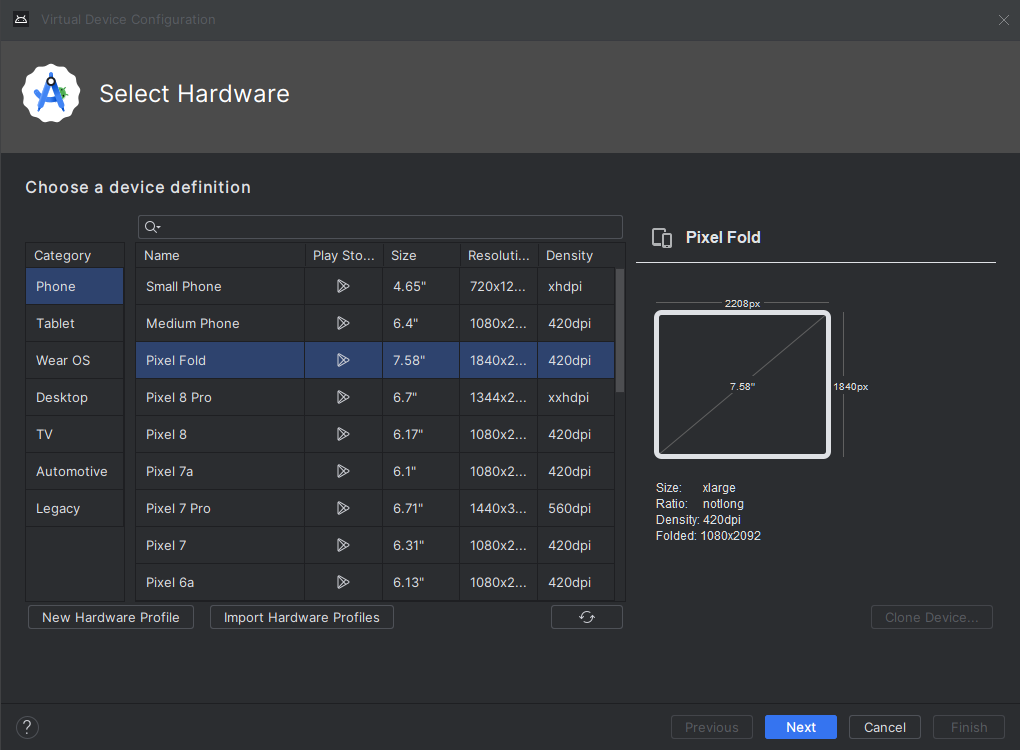

Pixel 8 and Pixel 8 Pro devices in Android Emulator (34.2)

Test your app on the latest Google Pixel device configurations with the updated Android Virtual Device definitions in Android Studio. With Android Studio Iguana and the latest Android Emulator (34.2+), access the Pixel Fold, Pixel Tablet, Pixel 7a, Pixel 8, and Pixel 8 Pro. Validating your app on these virtual devices is a convenient way to ensure that your app reacts correctly to a variety of screen sizes and device types.

Build

Support for Gradle Version Catalogs

Android Studio Iguana streamlines dependency management with its enhanced support for TOML-based Gradle Version Catalogs. You'll benefit from:

- Centralized dependency management: Keep all your project's dependencies organized in a single file for easier editing and updating.

- Time-saving features: Enjoy seamless code completion, smart navigation within your code, and the ability to quickly edit project dependencies through the convenient Project Structure dialog.

- Increased efficiency: Say goodbye to scattered dependencies and manual version updates. Version catalogs give you a more manageable, efficient development workflow.

New projects will automatically use version catalogs for dependency management. If you have an existing project, consider making the switch to benefit from these workflow improvements. To learn how to update to Gradle version catalogs, see Migrate your build to version catalogs.
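As a rough sketch of what this looks like in practice, the fragment below shows a module-level `build.gradle.kts` consuming entries from a catalog. The catalog contents appear as a comment, and the aliases and version numbers are illustrative assumptions rather than values taken from a real project.

```kotlin
// gradle/libs.versions.toml (illustrative contents, shown here as a comment):
//
//   [versions]
//   agp = "8.3.0"
//   coreKtx = "1.12.0"
//
//   [libraries]
//   androidx-core-ktx = { group = "androidx.core", name = "core-ktx", version.ref = "coreKtx" }
//
//   [plugins]
//   android-application = { id = "com.android.application", version.ref = "agp" }

// Module-level build.gradle.kts (fragment; the android { } block is omitted).
plugins {
    // Dashes in a catalog alias become dots in the generated accessor.
    alias(libs.plugins.android.application)
}

dependencies {
    // Resolves to androidx.core:core-ktx at the version declared in the catalog.
    implementation(libs.androidx.core.ktx)
}
```

Updating a version then means changing one line in the catalog instead of editing each module's build file.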

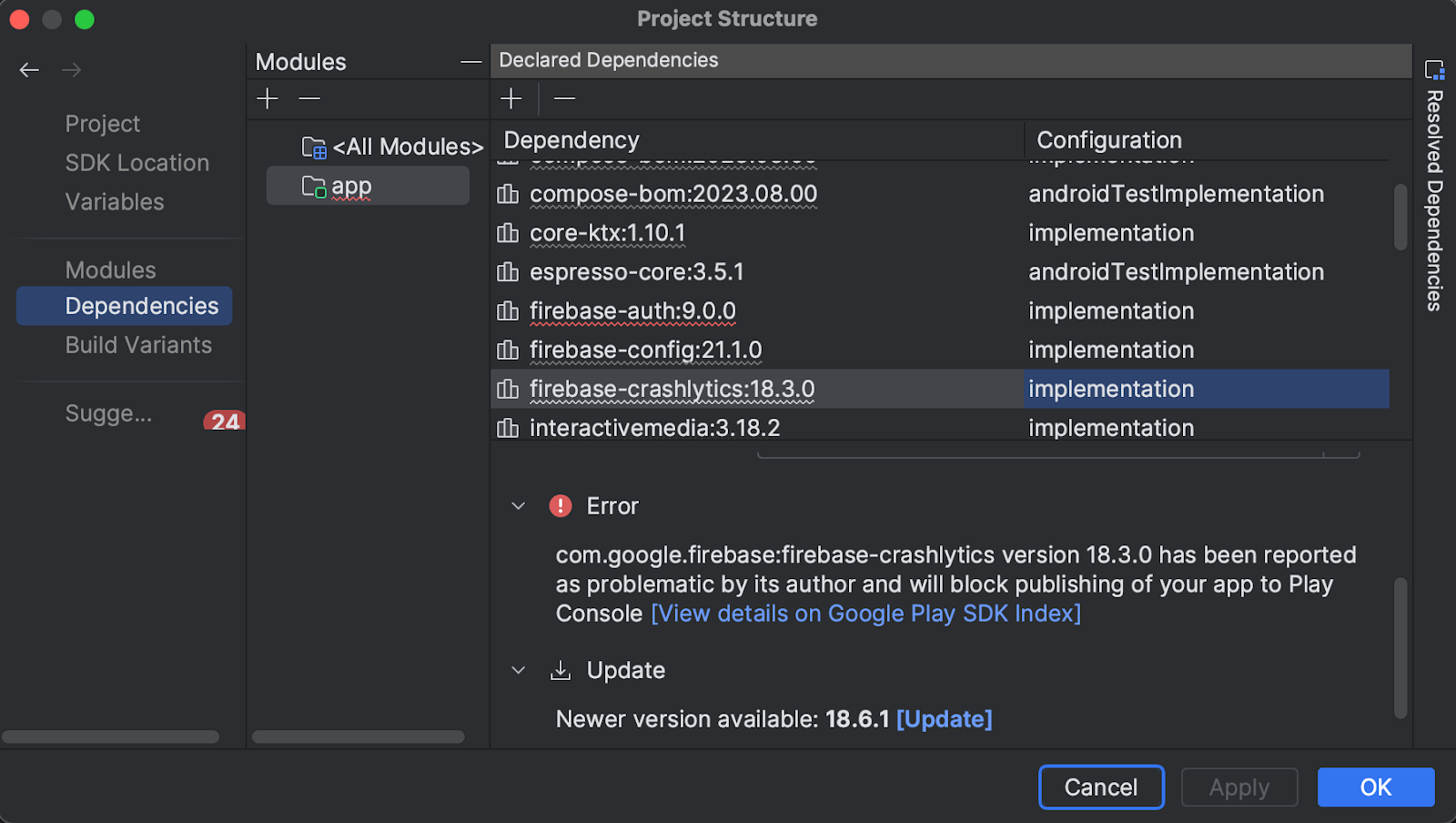

Additional SDK insights: policy issues

Android Studio Iguana now proactively alerts you to potential Google Play policy violations through integration with the Google Play SDK Index. You can see Play policy issues right in your build files and in the Project Structure dialog. This streamlines compliance, helping you avoid unexpected publishing delays or rejections on the Google Play Store.

Android Studio compileSdk version support

Using Android Studio to develop a project that has an unsupported compileSdk version can lead to unexpected errors because older versions of Android Studio may not handle the new Android SDK correctly. To avoid these issues, Android Studio Iguana now explicitly warns you if your project’s compileSdk is newer than the versions it officially supports. If available, it also suggests moving to a version of Android Studio that supports the compileSdk used by your project. Keep in mind that upgrading Android Studio might also require that you upgrade AGP.

Summary

To recap, Android Studio Iguana 🦎 includes the following enhancements and features:

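Debugging

- Version control system integration in App Quality Insights

- View Crashlytics crash variants in App Quality Insights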

Design

- Jetpack Compose UI Check

- Progressive rendering for Compose Preview

Develop

- IntelliJ platform update

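Testing

- Baseline Profiles module wizard

- Test against configuration changes with the Espresso Device API

- Pixel 8 and Pixel 8 Pro devices in Android Emulator (34.2)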

Build

- Support for Gradle Version Catalogs

- Policy issue warnings in Google Play SDK Index

- Android Studio compileSdk version support

Download Android Studio Today

Download Android Studio Iguana 🦎 today and take advantage of the latest features to streamline your workflow and help you make better apps. Your feedback is essential: check known issues, report bugs, suggest improvements, and be part of our vibrant community on LinkedIn, Medium, YouTube, or X (formerly known as Twitter). Let's build the future of Android apps together!

Posted by Anirudh Dewani, Director of Android Developer Relations
