TL;DR: Remove unnecessary feature requirements that prevent users from downloading your app on devices that don’t support the features. Automate tracking feature requirements and maximize app availability with badging!

Required features reduce app availability

<uses-feature> is an app manifest element that specifies whether your app depends on a hardware or software feature. By default, <uses-feature> specifies that a feature is required. To indicate that the feature is optional, you must add the android:required="false" attribute.

Google Play filters which apps are available to download based on required features. If the user’s device doesn’t support some hardware or software feature, then an app that requires that feature won’t be available for the user to download.

<uses-permission>, another app manifest element, complicates things by implicitly requiring features for permissions such as CAMERA or BLUETOOTH (see Permissions that imply feature requirements). The initial declared orientations for your activities can also implicitly require hardware features.

The system determines implicitly required features after merging all modules and dependencies, so it may not be clear to you which features your app ultimately requires. You might not even be aware when the list of required features has changed. For example, integrating a new dependency into your app might introduce a new required feature. Or the integration might request additional permissions, and the permissions could introduce new, implicitly required features.

This behavior has been around for a while, but Android has changed a lot over the years. Android apps now run on phones, foldables, tablets, laptops, cars, TVs and watches, and these devices are more varied than ever. Some devices don’t have telephony services, some don’t have touchscreens, some don’t have cameras.

Expectations based on permissions have also changed. With runtime permissions, a <uses-permission> declaration in the manifest no longer guarantees that your app will be granted that permission. Users can choose to deny access to hardware in favor of other ways to interact with the app. For example, instead of giving an app permission to access the device’s location, a user may prefer to always search for a particular location instead.

Banking apps shouldn’t require the device to have an autofocusing camera for check scanning. They shouldn’t specify that the camera must be a front or rear camera or that the device has a camera at all! It should be enough to allow the user to upload a picture of a check from another source.

Apps should support keyboard navigation and mouse input for accessibility and usability reasons, so strictly requiring a hardware touchscreen should not be necessary.

Apps should support both landscape and portrait orientations, so they shouldn’t require that the screen be capable of a landscape or a portrait orientation. For example, screens built in to cars may be in a fixed landscape orientation. An app that supports both orientations could still be unnecessarily requiring that the device support portrait use, which would exclude those cars.

Determine your app’s required features

You can use aapt2 to output information about your APK, including the explicitly and implicitly required features. The logic matches how the Play Store filters app availability.

aapt2 dump badging <path_to_.apk>

In the Play Console, you can also check which devices are being excluded from accessing your app.
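The badging output covers more than features (package info, SDK versions, permissions, and so on), so it can help to filter it down. The following is a minimal sketch, assuming the output contains lines beginning with `uses-feature:` and `uses-implied-feature:`; the function name `required_features` is hypothetical and not part of aapt2.

```shell
# Hypothetical helper: reads `aapt2 dump badging` output on stdin and prints
# only the lines that describe explicitly or implicitly required features.
# Lines for optional features (uses-feature-not-required) are not matched.
required_features() {
  grep -E "^[[:space:]]*uses-(implied-)?feature:" | sed -E 's/^[[:space:]]+//'
}

# Usage (the APK path is a placeholder):
#   aapt2 dump badging <path_to_.apk> | required_features
```

Each `uses-implied-feature` line should carry a reason, which can help trace an implicit requirement back to the permission or declaration that caused it.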

Increase app availability by making features optional

Most apps should not strictly require hardware and software features. There are few guarantees that the user will allow using that feature in the first place, and users expect to be able to use all parts of your app in the way they see fit. To increase your app’s availability across form factors:

- Provide alternatives in case the feature is not available, ensuring your app doesn’t need the feature to function.

- Add android:required="false" to existing <uses-feature> tags to mark the feature as not required (or remove the tag entirely if the app no longer uses a feature).

- Add a <uses-feature> tag with android:required="false" for each feature that is implicitly required because of a declared permission (see Permissions that imply feature requirements).
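After making these manifest changes, rerunning the badging command is one way to confirm they took effect. The sketch below assumes that optional features appear in the output as `uses-feature-not-required: name='…'` lines; the function name `is_optional_feature` is hypothetical.

```shell
# Hypothetical helper: reads `aapt2 dump badging` output on stdin and succeeds
# only if the feature named in $1 is listed as not required.
is_optional_feature() {
  grep -F -q -- "uses-feature-not-required: name='$1'"
}

# Usage (the APK path is a placeholder):
#   aapt2 dump badging <path_to_.apk> | is_optional_feature android.hardware.camera \
#     && echo "camera is optional"
```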

Prevent regressions with CI and badging

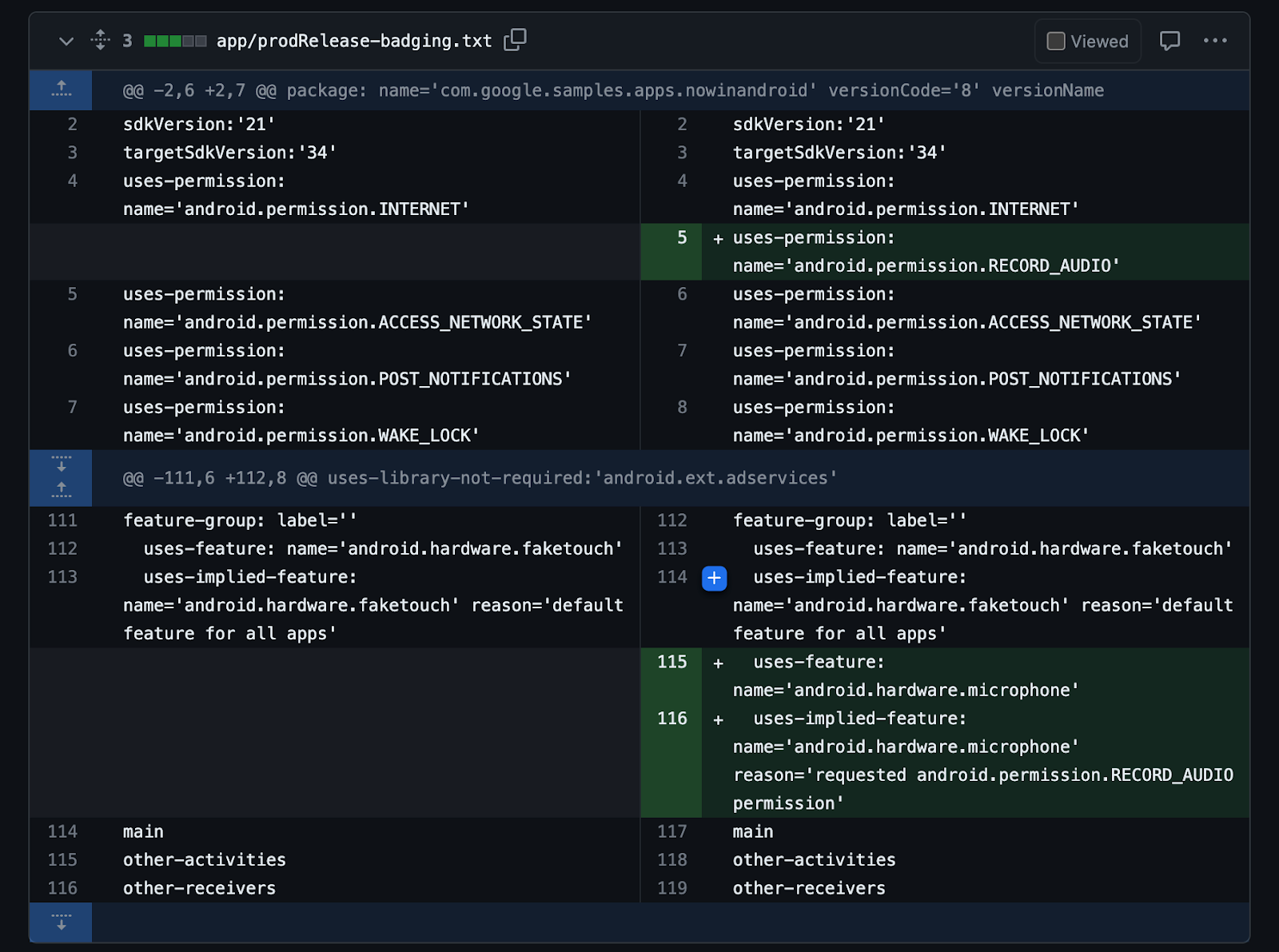

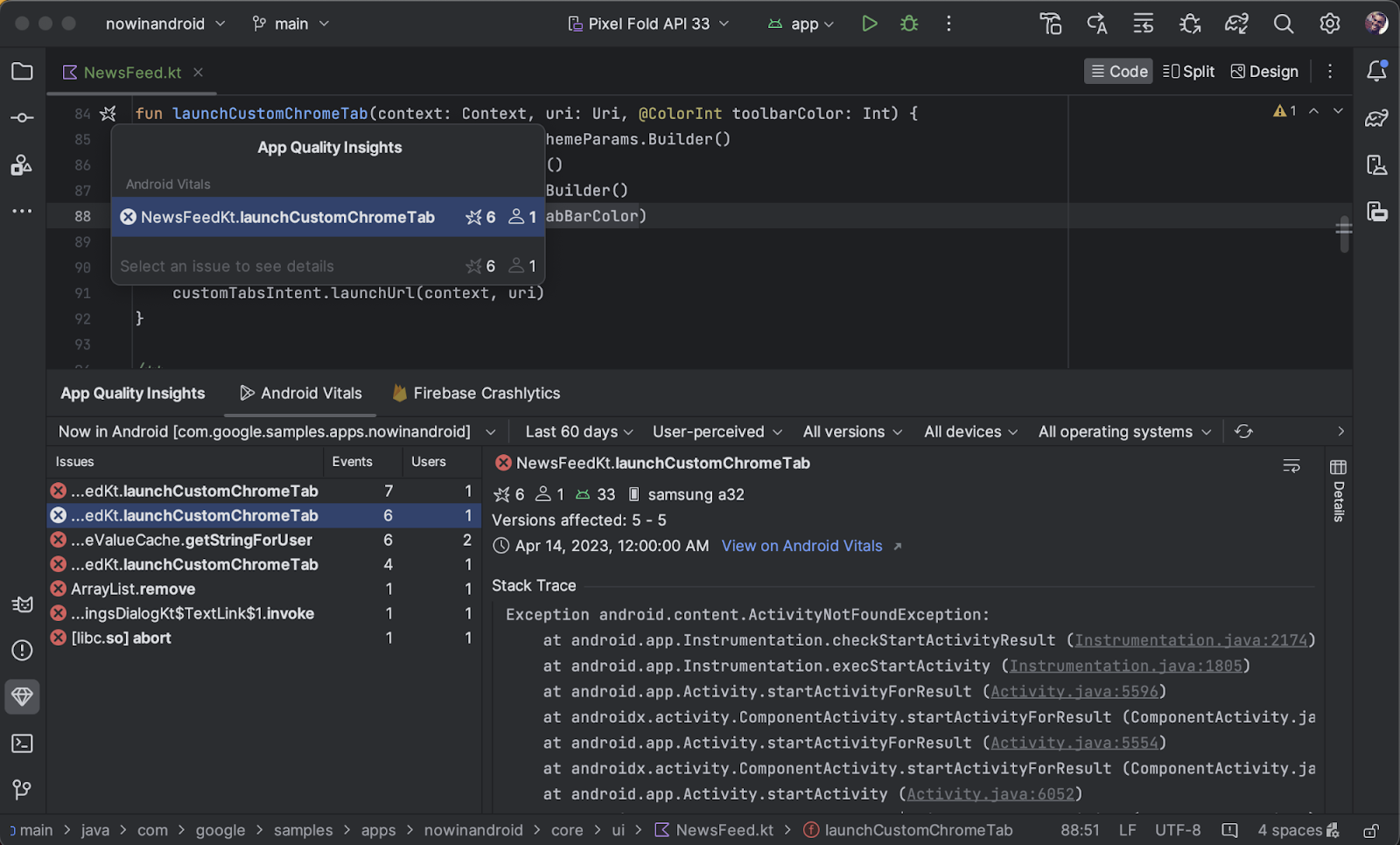

To guard against regressions caused by inadvertently adding a new feature requirement that reduces device availability, automate the task of determining your app’s features as part of your build system. By storing the badging output of the aapt2 tool in a text file and checking the file into version control, you can track all declared permissions and all explicitly and implicitly required features from your final universal APK. This includes the features and permissions brought in by transitive dependencies, in addition to your own.

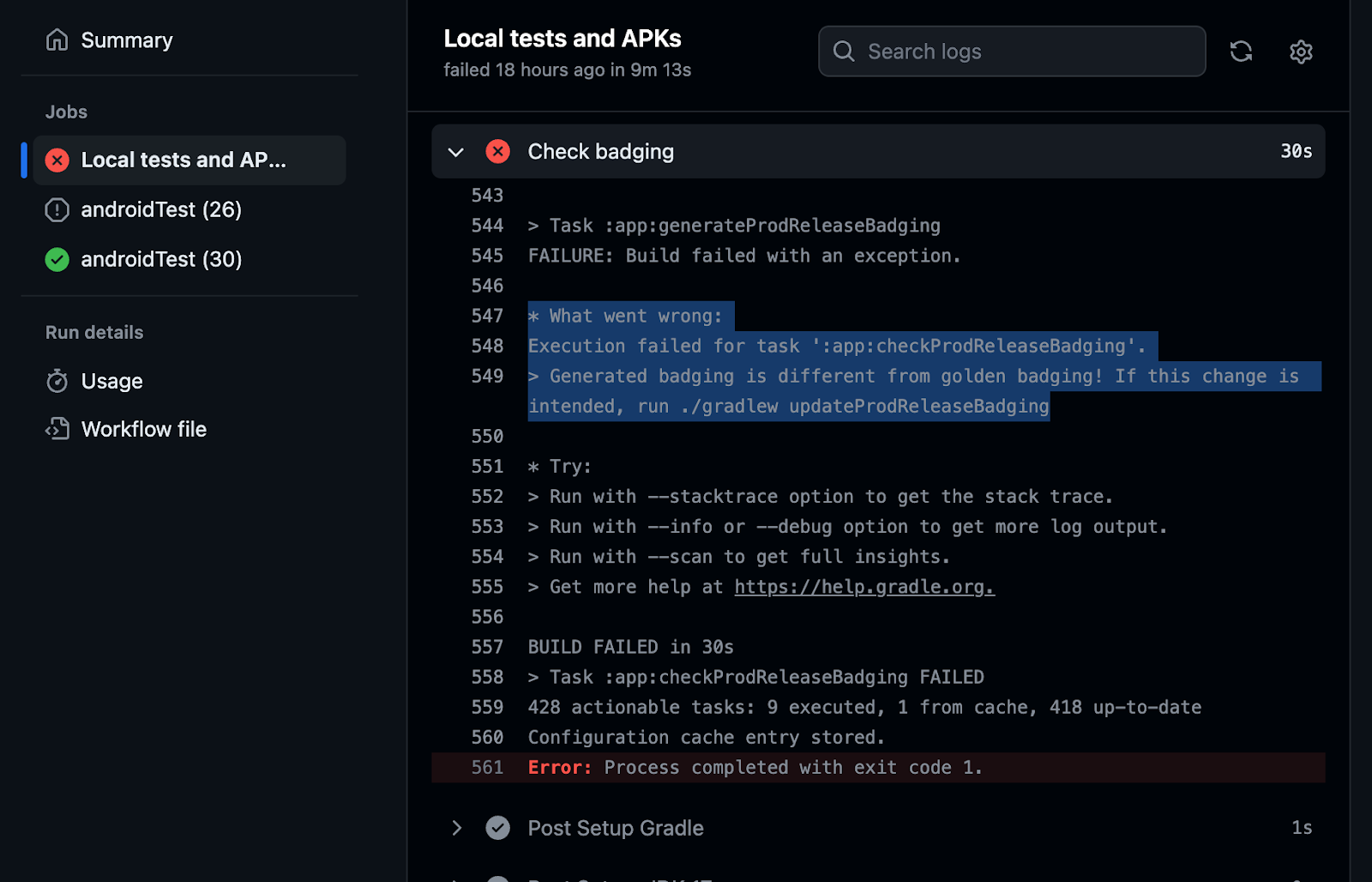

You can automate badging as part of your continuous integration setup by setting up three Gradle tasks for each variant of your app you want to validate. Using release as an example, create these three tasks:

- generateReleaseBadging – Generates the badging file from the universal APK using the aapt2 executable. The output of this task (the badging information) is used for the following two tasks.

- updateReleaseBadging – Copies the generated badging file into the main project directory. The file is checked into source control as a golden badging file.

- checkReleaseBadging – Validates the generated badging file against the golden badging file.

CI should run checkReleaseBadging to verify that the checked-in golden badging file still matches the generated badging file for the current code. If code changes or dependency updates have caused the badging file to change in any way, CI fails.

When changes are intentional, run updateReleaseBadging to update the golden badging file and check it back into source control. The change then surfaces in code review, where reviewers can confirm that the badging changes are expected.
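The check and update steps boil down to comparing and copying two text files. The following is a plain shell sketch of that idea, not the Gradle setup itself; the function names and the argument order (generated file first, golden file second) are assumptions made for illustration.

```shell
# Hypothetical helpers mirroring the check and update tasks described above.
# $1 = freshly generated badging file, $2 = golden badging file in source control.

# Fails (non-zero exit) and prints a diff if the generated badging differs
# from the golden file, which is what should make CI fail.
check_badging() {
  if ! diff -u "$2" "$1"; then
    echo "Badging changed. If this is intentional, update the golden file." >&2
    return 1
  fi
}

# Overwrites the golden file with the generated badging so the change can be
# committed and reviewed.
update_badging() {
  cp "$1" "$2"
}
```

Printing a unified diff on failure shows exactly which permission or feature line was added or removed, so the cause is visible directly in the CI log.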

CI-automated badging guards against changes inadvertently causing a new feature to be required, which would reduce availability of the app.

For a complete working example of a CI system verifying the badging file, check out the setup in the Now in Android app.

Keep features optional

Android devices are continually becoming more varied, with users expecting a great experience from your Android app regardless of the type of device they’re using. While some software or hardware features might be essential to your app’s function, strictly requiring them in many cases needlessly prevents some users from downloading your app.

Use the badging output from aapt2 to check which features your app requires, and use the Play Console to verify which devices the requirements are preventing from downloading your app. You can automatically check your app’s badging in CI and catch regressions.

Bottom line: If you don’t absolutely need a feature for your entire app to function, make the feature optional to ensure your app’s availability to the greatest number of devices and users.

Learn more by checking out our developer guide.

Posted by Alex Vanyo – Developer Relations Engineer