Since the launch of Google’s Next Billion Users (NBU) initiative in 2015, nearly 3 billion people worldwide have come online for the very first time. In the next four years, we expect another 1.2 billion new internet users, and building for and with these users allows us to build better for the rest of the world.

For this year’s I/O, the NBU team has created sessions that will showcase how organizations can address representation bias in data, learn how new users experience the web, and understand Africa’s fast-growing developer ecosystem to drive digital inclusion and equity in the world around us.

We invite you to join these developer sessions and hear perspectives on how to build for the next billion users. Together, we can make technology helpful, relevant, and inclusive for people new to the internet.

Session: Building for everyone: the importance of representative data

Mike Knapp, Hannah Highfill and Emila Yang from Google’s Next Billion Users team, in partnership with Ben Hutchinson from Google’s Responsible AI team, will be leading a session on how to crowdsource data to build more inclusive products.

Data gathering is often the most overlooked aspect of AI, yet the data used for machine learning directly impacts a project’s success and lasting potential. Many organizations—Google included—struggle to gather the right datasets required to build inclusively and equitably for the next billion users. “We are going to talk about a very experimental product and solution to building more inclusive technology,” says Knapp of his session. “Google is testing a paid crowdsourcing app [Task Mate] to better serve underrepresented communities. This tool enables developers to reach ‘crowds’ in previously underrepresented regions. It is an incredible step forward in the mission to create more inclusive technology.”

Bookmark this session to your I/O developer profile.

Session: What we can learn from the internet’s newest users

“The first impression that your product makes matters,” says Nicole Naurath, Sr. UX Researcher - Next Billion Users at Google. “It can either spark curiosity and engagement, or confuse your audience.”

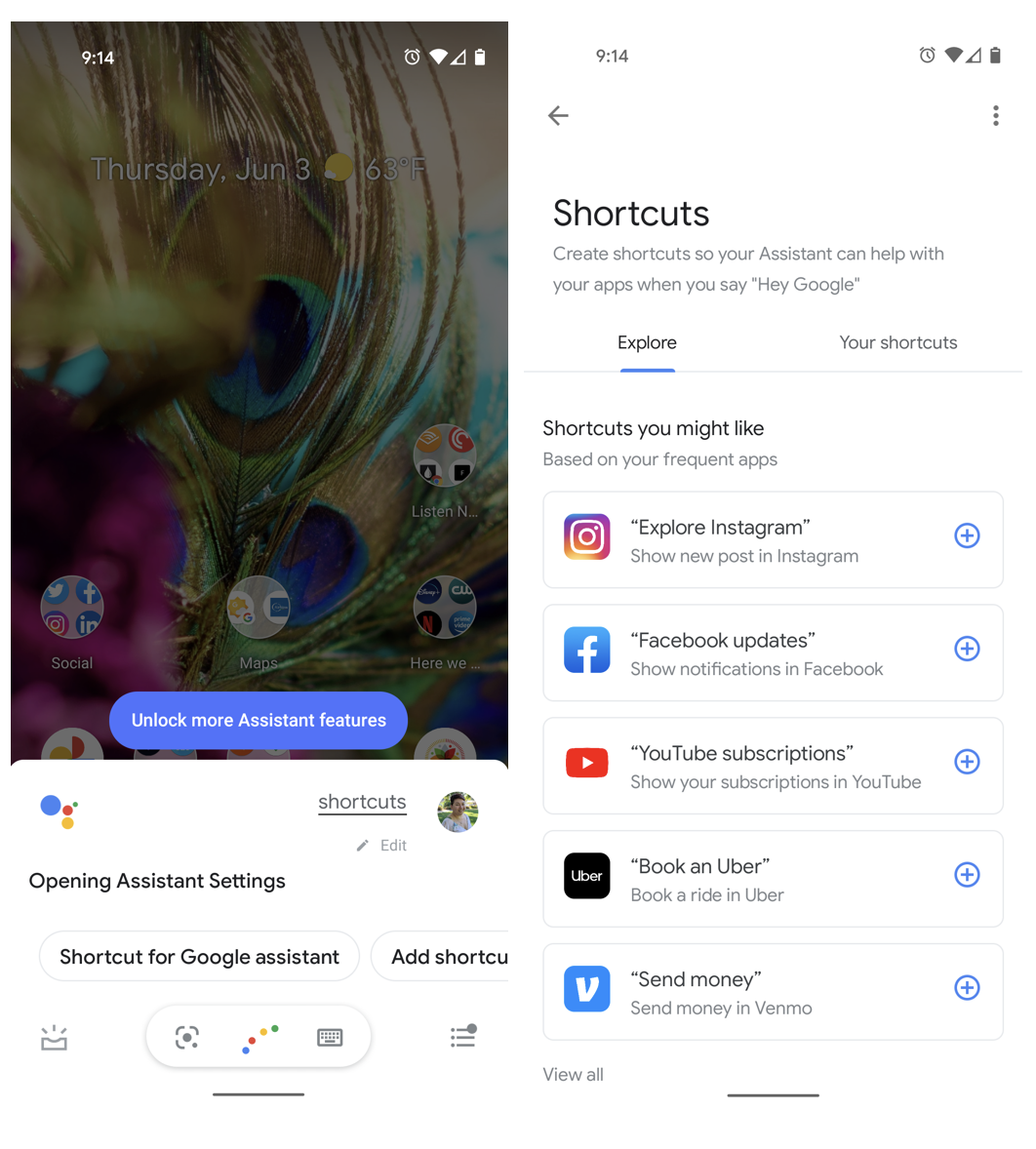

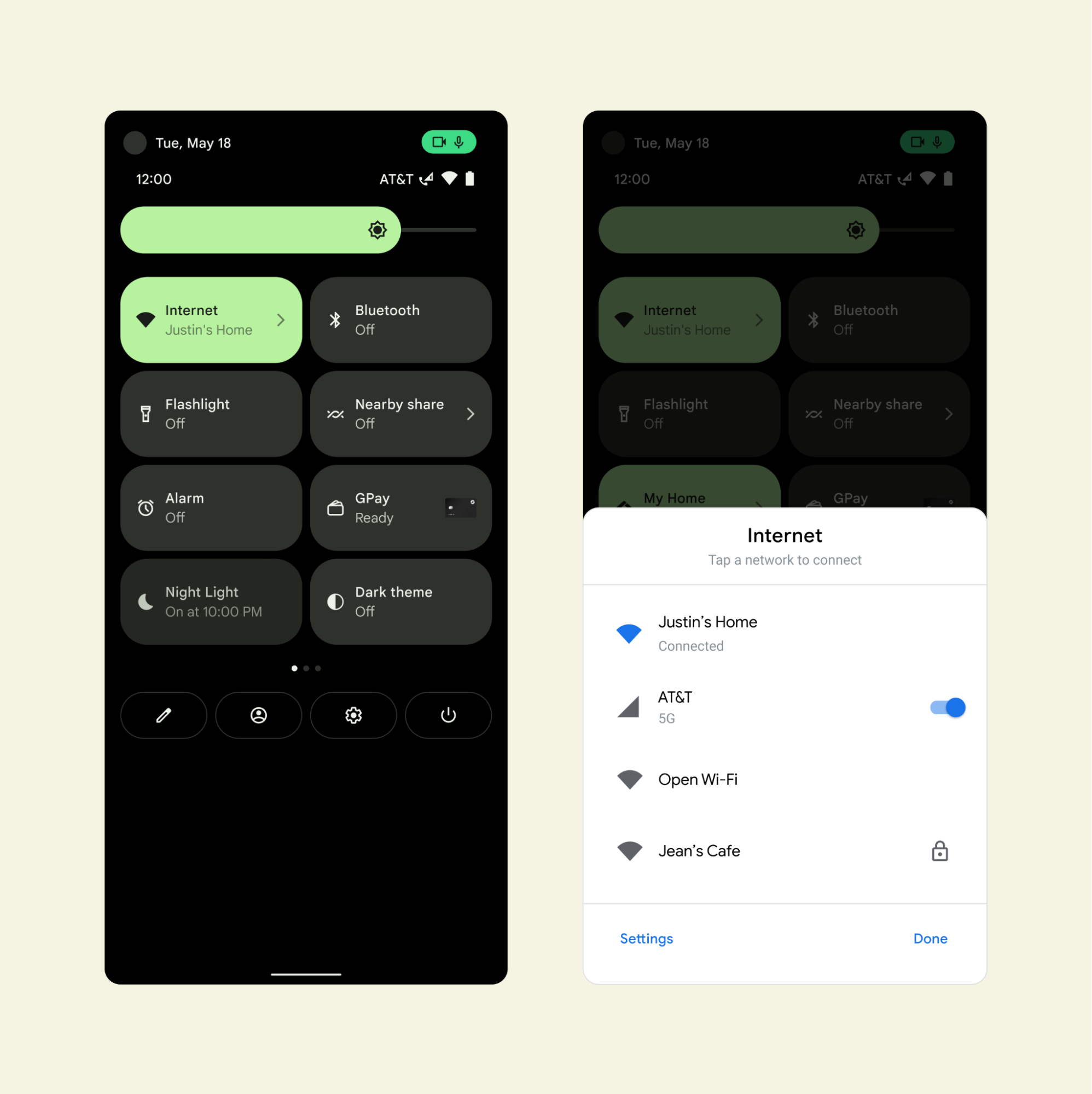

Every day, thousands of people come online for the first time. Their experience can be directly impacted by how familiar they are with technology. People with limited digital experience, or novice internet users, experience the web differently, and developers are sometimes not used to building for them. Design elements such as images, icons, and colors play a key role in digital experience. If images are not relatable, icons are irrelevant, and colors are not grounded in cultural context, the experience can confuse anyone, especially someone new to the internet.

Nicole Naurath and Neha Malhotra, from Google’s Next Billion Users team, will be leading the session on what we can learn from the internet’s newest users. They will explain how these users experience the web and share a framework for evaluating products that work for novice internet users.

Bookmark this session to your I/O developer profile.

Session: Africa’s booming developer ecosystem

Software developers are the catalyst for digital transformation in Africa. They empower local communities, spark growth for businesses, and drive innovation on a continent that more than 1.3 billion people call home. Demand for African developers reached an all-time high last year, driven by both local and remote opportunities, and is growing even faster than the continent's developer population.

Andy Volk and John Kimani from the Developer and Startup Ecosystem team in Sub-Saharan Africa will share findings from the Africa Developer Ecosystem 2021 report.

In their words, “This session is for anyone who wants to find out more about how African developers are building for the world or who is curious to find out more about this fast-growing opportunity on the continent. We are presenting trends, case studies and new research from Google and its partners to illustrate how people and organizations are coming together to support the rapid growth of the developer ecosystem.”

Bookmark this session to your I/O developer profile.

To learn more about Google’s Next Billion Users initiative, visit nextbillionusers.google