Posted by Nick Felker, Developer Relations Engineer

Interactive Canvas DevTool Extension

Using Interactive Canvas DevTools

After installing the Interactive Canvas DevTools from the Chrome Web Store, you’ll see a new Interactive Canvas tab when you open Chrome DevTools.

When you load your web app in your browser, from a publicly hosted URL, localhost, or a remote device on your network, this tab lets you directly interface with the Interactive Canvas callbacks registered on the page to quickly and iteratively test your experience. Suggestion chips are created after every execution to let you replay the same command later.

To get started even faster, go to the Preferences tab and click the Import /SDK button. This opens a directory picker where you can select your project’s SDK folder. The extension identifies the JSON payloads and TTS marks in your project and surfaces them as suggestion chips automatically.
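For context, the callbacks this tab talks to are the ones a page registers with `interactiveCanvas.ready()`; roughly, JSON payloads go to `onUpdate` and TTS marks go to `onTtsMark`. The following is a minimal sketch, assuming the page has loaded the Interactive Canvas library (which defines the global `interactiveCanvas`). The `command` field, the `SPIN` command, and the `handlers` table are hypothetical names used only for illustration.

```javascript
// Minimal sketch: callbacks a web app might register with Interactive Canvas.
// Assumes the Interactive Canvas library is loaded and defines the global
// `interactiveCanvas`. The `command` field and SPIN command are hypothetical.
const handlers = {
  SPIN: (payload) => `spin x${payload.times ?? 1}`,
};

const callbacks = {
  // Receives an array of data objects sent to the canvas.
  onUpdate(data) {
    const results = [];
    for (const payload of data) {
      const command = String(payload.command ?? '').toUpperCase();
      const handler = handlers[command];
      if (handler) {
        results.push(handler(payload));
      } else {
        console.warn(`No handler for command "${command}"`);
      }
    }
    // Interactive Canvas ignores this return value; it only aids local testing.
    return results;
  },
  // Receives 'START', 'END', or the name of a custom SSML <mark>.
  onTtsMark(markName) {
    return `TTS mark: ${markName}`;
  },
};

// Only register when running on a page where Interactive Canvas is available.
if (typeof interactiveCanvas !== 'undefined') {
  interactiveCanvas.ready(callbacks);
}
```

Keeping command handling in a lookup table means each suggestion chip you replay from the tab maps to one small, independently testable function.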

JSON historical object changes

When fields of the JSON object change, you can view the changes in a colored diff.

Methods that send data to your webhook are instead rerouted to the History tab. This tab hosts a list of every text query and canvas state change in reverse chronological order. This allows you to view how changes in your web app would affect your conversational state. Each time the canvas state changes, you can see a visual representation of which fields changed.
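To illustrate what a field-level view of a canvas state change involves, here is a hypothetical sketch. It is not the extension’s implementation: it compares two state objects one level deep and reports which fields were added, removed, or changed.

```javascript
// Hypothetical sketch (not the extension's code): a shallow, field-level diff
// between two canvas state objects. Nested values are compared via
// JSON.stringify, so objects with reordered keys would count as changed.
function diffState(before, after) {
  const changes = [];
  const keys = new Set([...Object.keys(before), ...Object.keys(after)]);
  for (const key of keys) {
    const inBefore = Object.prototype.hasOwnProperty.call(before, key);
    const inAfter = Object.prototype.hasOwnProperty.call(after, key);
    if (!inBefore) {
      changes.push({ key, type: 'added', to: after[key] });
    } else if (!inAfter) {
      changes.push({ key, type: 'removed', from: before[key] });
    } else if (JSON.stringify(before[key]) !== JSON.stringify(after[key])) {
      changes.push({ key, type: 'changed', from: before[key], to: after[key] });
    }
  }
  return changes;
}
```

For example, `diffState({ score: 1, level: 'a' }, { score: 2, level: 'a', lives: 3 })` reports `score` as changed and `lives` as added, while the unchanged `level` field is left out.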

Different levels of notice when using an operation unsupported in Interactive Canvas


There are a number of other features that enhance the developer experience. For example, for browser methods that are not supported in Interactive Canvas, you can optionally log a warning or throw an error when you try to use them. This will make it easier to identify compatibility issues sooner and enforce these policies while debugging.
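The warn-or-throw behavior can be approximated by wrapping a method. This is a hypothetical sketch of the general idea, not how the extension does it. `guardUnsupported` is an illustrative helper name, and which methods you guard is up to you.

```javascript
// Hypothetical sketch of the warn/error idea: wrap a method so that calling it
// either logs a warning (and still runs the original) or throws.
function guardUnsupported(target, methodName, mode = 'warn') {
  const original = target[methodName];
  target[methodName] = function guarded(...args) {
    const message = `${methodName}() is not supported in Interactive Canvas`;
    if (mode === 'error') {
      throw new Error(message);
    }
    console.warn(message);
    return typeof original === 'function' ? original.apply(this, args) : undefined;
  };
}
```

In a browser you might call `guardUnsupported(window, 'someMethod', 'error')` while debugging, where `someMethod` is a placeholder for a method you know to be unsupported. The `'warn'` mode keeps the page working, and the `'error'` mode makes violations impossible to miss.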

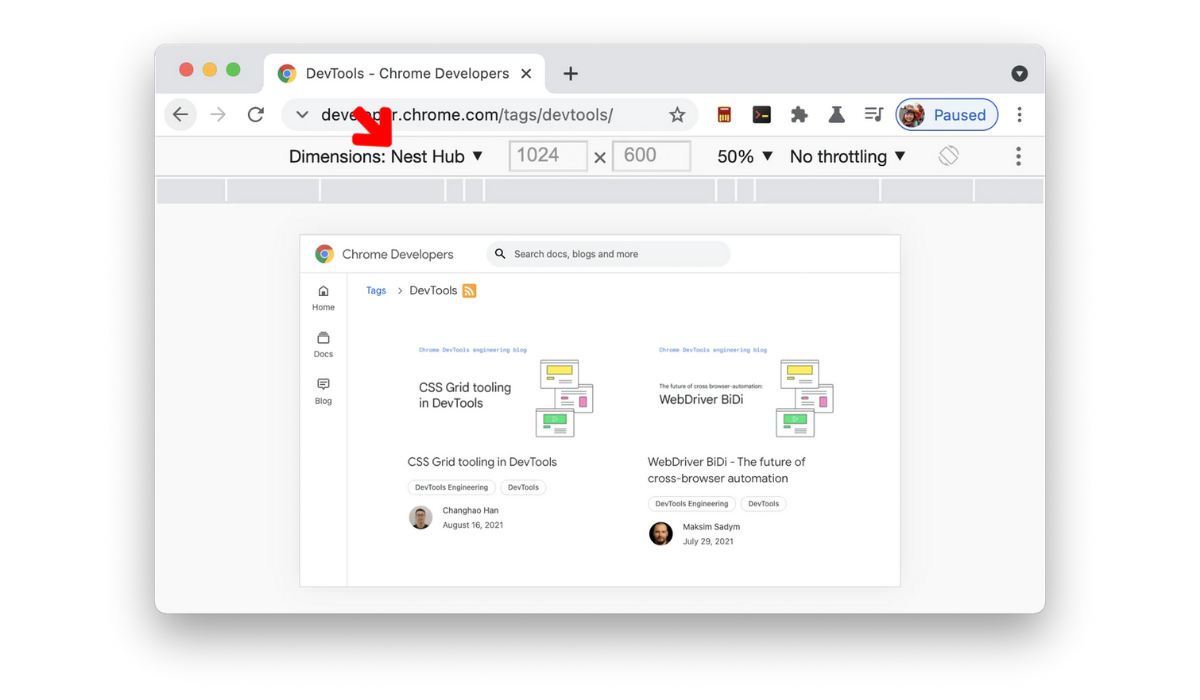

Nest Hub devices in the Device list

You can set the window to match the Nest Hub screen.

You can also add a header element to your page to help you optimize your layout for a smart display. Combined with the Nest Hub and Nest Hub Max, which are new device presets in Chrome DevTools, this lets you set up your development environment as an accurate representation of how your Action will look and behave on a physical device.
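One way to keep content clear of that header is to offset your layout by its height. The following is a minimal sketch that assumes the Interactive Canvas API’s `getHeaderHeightPx()` method, which resolves to the header height in pixels. The canvas object is passed in as a parameter so the function can be exercised with a stub, and `padForHeader` is an illustrative name.

```javascript
// Minimal sketch: pad the page's main container so content isn't hidden under
// the smart display header. Assumes `canvas.getHeaderHeightPx()` resolves to a
// number of pixels, as in the Interactive Canvas API.
async function padForHeader(canvas, container) {
  const headerHeight = await canvas.getHeaderHeightPx();
  container.style.paddingTop = `${headerHeight}px`;
  return headerHeight;
}

// In the browser: padForHeader(interactiveCanvas, document.querySelector('main'));
```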

Interactive Canvas tab on a remote device

You can also send data to your remote device.

This extension also works with a physical device. If the device is on the same network, you can connect to your smart display and open the Interactive Canvas tab for that remote device. From there, you can send commands using the same interface.

You can install the extension now through the Chrome Web Store. We’re also happy to announce that the DevTools are Open Source! The source code for this extension has been published on GitHub, where you can find instructions on how to set up the project locally, and how to submit pull requests.

Thanks for reading! To share your thoughts or questions, join us on Reddit at /r/GoogleAssistantDev.

Follow @ActionsOnGoogle on Twitter for more of our team's updates, and tweet using #AoGDevs to share what you’re working on. Can’t wait to see what you build!

Source: Google Developers Blog

Assistant Developer Relations is hiring!

Posted by Mike Bifulco, Developer Relations Engineer

Every day, millions of users ask Google Assistant for help with the things that matter to them: managing a connected home, setting reminders and timers, adding to their shopping list, communicating with friends and family, and countless other imaginative uses. Developers use Assistant APIs and tools to add voice interactivity to their apps for everything from building games, to ordering food, to listening to the news, and much more.

The Google Assistant Developer Relations team works with our community and our engineering teams to help developers build, integrate, and innovate with voice-driven technology on the Assistant platform. We help developers build Conversational Actions, Smart Home hardware and tools, and App Actions integrations with Android. As we continue our mission to bring accessible voice technology to Android devices, smart speakers and screens, we’re excited to announce that we are hiring for several roles!

What Assistant DevRel does

In Developer Relations (DevRel), we wear many hats: our developer ecosystem stretches across several Google products, and we work with our community wherever we can. Our team consists of engineers, technical writers, and content producers who work to help developers build with Assistant, while providing active feedback and validation to the engineering teams to make Google Assistant even better. These are just some of the ways we do our work:

Google I/O and other conferences

Google I/O is Google’s annual developer conference, where Googlers from across the company share the latest product releases, insights from Google experts, and hands-on learning. The Assistant DevRel team is heavily involved in I/O, writing, producing, and delivering a variety of content types, including keynotes, technical talks, hands-on workshops, codelabs, and technical demos. We also meet and talk to developers who are building cool things with Assistant.

We also participate in a variety of other conferences, and while most have been virtual for the past year or so, we’re looking forward to traveling to places near and far to deliver technical content to the global community.

Our team members contribute to creation and presentation of content at events like Google I/O.

Google Developers YouTube channel

One of the best ways to get our content out to the world is via YouTube. Members of our team make frequent appearances on the Google Developers channel, producing segments and episodes for The Developer Show, Assistant On Air, AoG Pro Tips, as well as tutorials on new features and developer tools.

Open Source Projects

Another exciting part of our work is the creation and maintenance of Open Source libraries used as samples, demos, and starter kits for devs working with Assistant. As a part of the team, you’ll contribute to projects in GitHub organizations including github.com/actions-on-google and github.com/actions-on-google-labs, as well as projects and libraries created outside of Google.

Developer Platform Tools

The Assistant DevRel team also helps build and maintain the Assistant Developer Platform - we contribute to the tools, policies and features which allow developers to distribute their Assistant apps to Android devices, smart screens and speakers. This engineering work is a truly unique opportunity to shape the future of a growing developer platform, and to support the future of voice-driven and multi-modal technology – all built from the ground up.

Open positions on our team

Our team is headquartered in Mountain View, California, US. If contributing to the next generation of Google Assistant excites you, read below about our openings to find out more.

Developer Relations Engineer

Location: Mountain View, CA, New York, NY, Seattle, WA, or Austin, TX

As a Developer Relations Engineer (or DRE), you’ll work to build developer tools, code samples, and demos for Google Assistant. You’ll work with our community to educate and support developers using our APIs to build their software. You will also be the 0th customer for new features on Assistant - testing, verifying, and giving active feedback to the PM, UX, and Engineering teams that make Assistant come to life. You’ll work with Google Developer Experts to build and scale content to be shared at conferences, events, and hackathons. DREs may also occasionally contribute to blog posts, help write and produce scripts for educational videos on YouTube, and speak at events like conferences, Google Developer Groups, and meetups. Candidates should have experience building native Android apps with Java or Kotlin - experience creating web applications with HTML, JavaScript, and CSS is a plus.

Sound interesting? Learn more and apply to be a Developer Relations Engineer.

Developer Relations Engineering Manager

Location: Mountain View, CA, New York, NY, Seattle, WA, or Austin, TX

Developer Relations Engineering Managers help coordinate and direct teams of engineers to build and update developer tools, APIs, reference documentation, and code samples. As an Engineering Manager, you’ll work with leadership across the company to prioritize new features, goals, and programs for developer relations within Assistant. You’ll manage a variety of roles, including Developer Relations Engineers, Program Managers, and Technical Writers. You’ll be asked to work across a variety of technologies, with a strong focus on building tools and libraries for Android.

Sound interesting? Learn more and apply to be a Developer Relations Engineering Manager.

Thanks for reading! To share your thoughts or questions, join us on Reddit at r/GoogleAssistantDev.

Follow @ActionsOnGoogle on Twitter for more of our team's updates, and tweet using #AoGDevs to share what you’re working on. Can’t wait to see what you build!

Source: Google Developers Blog

Assistant Recap Google I/O 2021

Written by: Jessica Dene Earley-Cha, Mike Bifulco and Toni Klopfenstein, Developer Relations Engineers for Google Assistant

Now that we’ve packed up all of the virtual stages from Google I/O 2021, let's take a look at some of the highlights and new product announcements for App Actions, Conversational Actions, and Smart Home Actions. We’ll also summarize the live events and meetups we held during I/O.

App Actions

App Actions allows developers to extend their Android app to Google Assistant. For our Android developers, we are happy to announce that App Actions is now part of the Android framework. With the introduction of the beta shortcuts.xml configuration resource and our latest Google Assistant plugin, App Actions is moving closer to the Android platform.

Capabilities

Capabilities is a new Android framework API that allows you to declare the types of actions users can take to launch your app and jump directly to performing a specific task. Assistant provides the first available concrete implementation of the capabilities API. You can use capabilities by creating a shortcuts.xml resource and defining your capabilities in it. A capability specifies two things: how it's triggered and what to do when it's triggered. To add a capability, use built-in intents (BIIs), which are pre-built intents that provide all the natural language understanding needed to map the user's input to individual fields. When a BII is matched by the user’s speech, your capability triggers an Android Intent that delivers the understood BII fields to your app, so you can determine what to show in response.

This framework integration is in the Beta release stage, and will eventually replace the original implementation of App Actions that uses actions.xml. If your app provides both the new shortcuts.xml and old actions.xml, the latter will be disregarded.

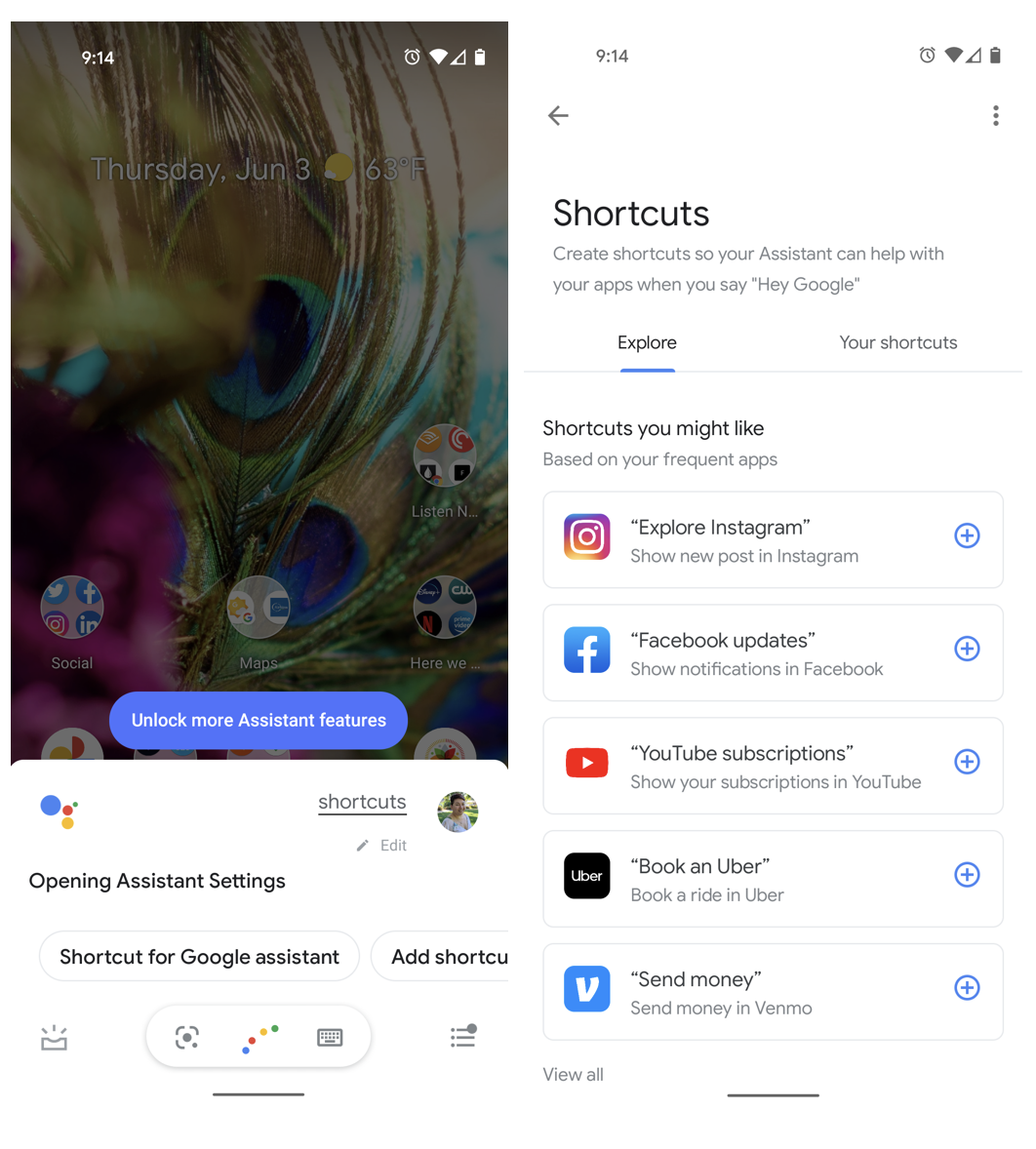

Voice shortcuts for Discovery

Google Assistant suggests relevant shortcuts to users and has made it easier for users to discover and add shortcuts by saying “Hey Google, shortcuts.”

You can use the Google Shortcuts Integration library, currently in beta, to push an unlimited number of dynamic shortcuts to Google to make your shortcuts visible to users as voice shortcuts. Assistant can suggest relevant shortcuts to users to help make it more convenient for the user to interact with your Android app.

In-App Promo SDK

Beyond the shortcuts Assistant suggests, the In-App Promo SDK (in beta) lets you proactively suggest shortcuts in your app for actions that the user can repeat with a voice command to Assistant. The SDK allows you to check whether the shortcut you want to suggest already exists for that user, and to prompt the user to create the suggested shortcut.

Google Assistant plugin for Android Studio

To support testing capabilities, we launched the Google Assistant plugin for Android Studio. It contains an updated App Actions Test Tool that creates a preview of your App Action, so you can test an integration before publishing it to the Play Store.

New App Actions resources

Learn more with new or updated content:

- Updated and new Documentation, including a new App Action Overview Video

- New beta codelab that walks you through adding two common BIIs using capabilities defined in shortcuts.xml

- 9 new videos on the App Actions Playlist, including the How to voicify your Android app and Android shortcuts for Assistant sessions, the App Actions AMA, and short 2-minute demos of How to create your first App Action and the App Actions test tool

Conversational Actions

During the What's New in Google Assistant keynote, Director of Product for the Google Assistant Developer Platform Rebecca Nathenson mentioned several coming updates and changes for Conversational Actions.

Updates to Interactive Canvas

Over the coming weeks, we’ll introduce new functionality to Interactive Canvas. Canvas developers will be able to manage intent fulfillment client-side, removing the need for intermediary webhooks in some cases. For use cases which require server-side fulfillment, like transactions and account linking, developers will be able to opt-in to server-side fulfillment as needed.

We’re also introducing a new function, outputTts(), which allows you to trigger Text to Speech client-side. This should help reduce latency for end users.

Additionally, there will be updates to the APIs available to get and set storage for both the home and individual users, allowing for client-side storage of user information. You’ll be able to persist user information within your web app, which was previously only available for access by webhook.

These new features for Interactive Canvas will be made available soon as part of a developer preview for Conversational Actions Developers. For more details on these new features, check out the preview page.
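As a rough illustration of how client-side storage and `outputTts()` might combine, here is a sketch. It assumes the developer-preview API exposes Promise-based `getUserParam(key)` and `setUserParam(key, value)` and a synchronous `outputTts(text, openMic)`. Please treat those names and signatures as assumptions and verify them against the preview page. The `visits` key and `greetPlayer` function are hypothetical. The canvas object is passed in so the logic can be tested with a stub.

```javascript
// Sketch assuming the developer-preview Interactive Canvas API exposes
// getUserParam(key) / setUserParam(key, value) (Promise-based) and
// outputTts(text, openMic). Verify names and signatures against the preview
// docs. The 'visits' key is hypothetical.
async function greetPlayer(canvas) {
  // A missing value reads as 0 visits.
  const visits = Number(await canvas.getUserParam('visits')) || 0;
  await canvas.setUserParam('visits', visits + 1);
  const text = visits === 0
    ? 'Welcome! Say "help" at any time to hear the rules.'
    : 'Welcome back!';
  // Speak client-side, then reopen the mic for the player's reply.
  canvas.outputTts(text, true);
  return text;
}

// In the browser: greetPlayer(interactiveCanvas);
```

Because both the storage read and the TTS call stay in the web app, this flow would not need a webhook round trip, which is the latency benefit described above.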

Updates to Transaction UX for Smart Displays

Also coming soon to Conversational Actions - we’re updating the workflow for completing transactions, allowing users to complete transactions from their smart screens, by confirming the CVC code from their chosen payment method. Watch our demo video showing new transaction features on smart devices to get a feel for these changes.

Tips on Launching your Conversational Action

Make sure to catch our technical session Driving a successful launch for Conversational Actions to learn about some strategies for putting together a marketing team and go-to-market plan for releasing your Conversational Action.

AMA: Games on Google Assistant

If you’re interested in building Games for Google Assistant with Conversational Actions, you should check out the recording of our AMA, where Googlers answered questions from I/O attendees about designing, building, and launching games.

Smart Home Actions

The What's new in Smart Home keynote covered several updates for Smart Home Actions. Following our continued emphasis on quality smart home integrations with the updated policy launch, we added new features to help you build engaging, reliable Actions for your users.

Test Suite and Analytics

The updated Test Suite for Smart Home now supports automatic testing, without the use of TTS. Additionally, the Analytics dashboards have been expanded with more detailed logs and in-depth error reporting to help you more quickly identify any potential issues with your Action. For a deeper dive into these enhancements, try out the Debugging the Smart Home workshop. There are also two new debugging codelabs to help you get more familiar with using these tools to improve the quality of your Action.

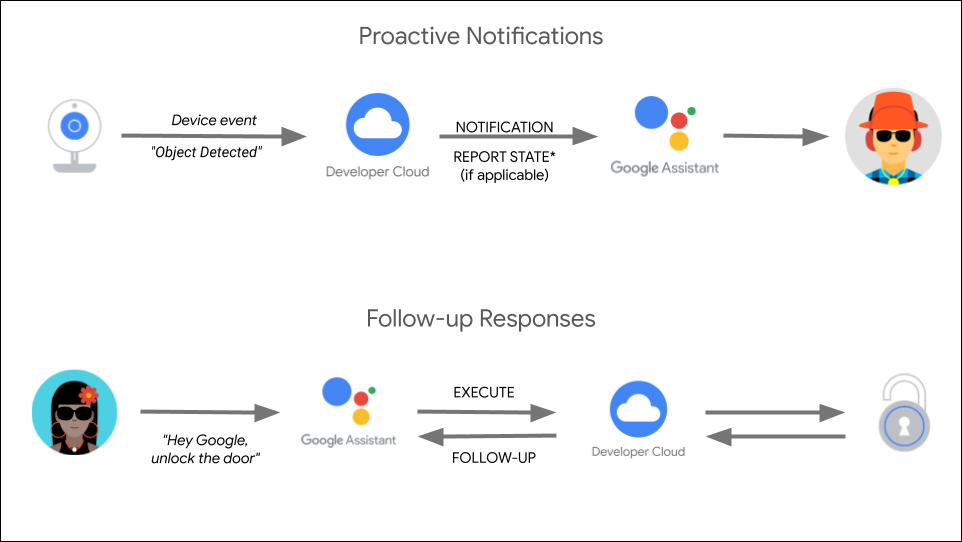

Notifications

We expanded support for proactive notifications to include the RunCycle and SensorState device traits, so users can now be proactively notified about several different device events. We also announced the release of follow-up responses, which let your smart devices notify users asynchronously when a requested device change succeeds or fails.

WebRTC

We added support for WebRTC to the CameraStream trait. Smart camera users can now benefit from lower latency and half-duplex talk between devices. As mentioned in the keynote, we will also be making updates to the other currently supported protocols for smart cameras.

Bluetooth Seamless Setup

To improve the onboarding experience, developers can now enable BLE (Bluetooth Low Energy) for device onboarding with Bluetooth Seamless Setup. Google Home and Nest devices can act as local hubs to provision and register nearby devices for any Action configured with local fulfillment.

Matter

Project CHIP has officially rebranded as Matter. Once the IP-based connectivity protocol officially launches, we will be supporting devices running the protocol. Watch the Getting started with Project CHIP tech session to learn more.

Ecosystem and Community

The women building voice AI and their role in the voice revolution

Voice AI is fundamentally changing how we interact with technology and its future will be a product of the people that build it. Watch this session to hear about the talented women shaping the Voice AI field, including an interview with Lilian Rincon, Sr. Director of Product Management at Google. Leslie also discusses strategies for achieving equal gender representation in Voice AI, an ambitious but essential goal.

AMA: How the Assistant Investment Program can help fund your startup

This "Ask Me Anything" session was hosted by the all-star team who runs the Google for Startups Accelerator: Voice AI. The team fielded questions from startups and investors around the world who are interested in building businesses based on voice technology. Check out the recording of this event here. The day after the AMA session, the 2021 cohort for the Voice AI accelerator had their demo day - you can catch the recording of their presentations here.

Women in Voice Meetup

We connected with amazing women in Voice AI and discussed ways allies can help women in Voice to be more successful while building a more inclusive ecosystem. It was hosted by Leslie Garcia-Amaya, Jessica Dene Earley-Cha, Karina Alarcon, Mike Bifulco, Cathy Pearl, Toni Klopfenstein, Shikha Kapoor, and Walquiria Saad.

Smart home developer Meetups

One of the perks of I/O being virtual this year was the ability to connect with students, hobbyists, and developers around the globe to discuss the current state of Smart Home, as well as some of the upcoming features. We hosted 3 meetups for the APAC, Americas, and EMEA regions and gathered some great feedback from the community.

Assistant Google Developer Experts Meetup

Every year we host an Assistant Google Developer Expert meetup to connect and share knowledge. This year we were able to invite everyone who is interested in building for Google Assistant to network and connect with one another. At the end, several attendees came together at the Assistant Sandbox for a virtual photo!

Thanks for reading! To share your thoughts or questions, join us on Reddit at r/GoogleAssistantDev.

Follow @ActionsOnGoogle on Twitter for more of our team's updates, and tweet using #AoGDevs to share what you’re working on. Can’t wait to see what you build!

Source: Google Developers Blog

Getting Started with Smart Home Notifications and Follow-up Responses

Posted by Toni Klopfenstein, Developer Advocate

Alerts for important device events, such as a delivery person arriving or the back door failing to lock, create a more beneficial and reassuring experience for your smart home device users.

As we announced at I/O, you can now add proactive notifications and follow-up responses to your Smart Home Action to alert users to events in a timely, relevant and helpful fashion and better engage with your end users.

Notifications can either alert a user to an event that has occurred without them proactively issuing a request through the Assistant, or as a follow-up to verify that the user's request has been fulfilled. Each device event that triggers one of these notifications has a unique event id, which helps the Assistant route it to the appropriate Home Graph users and Google Home Smart Speakers or Nest Smart Displays, depending on the notification type and priority. Notifications and follow-up responses can also provide users with additional information, such as error and exception codes, or timestamps for the event.

Once users opt in to receive alerts, you can enable notifications on your existing devices by updating the device definition and requesting a SYNC intent. You can then send device notifications, along with any applicable device state changes, using the Home Graph API.
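As a rough illustration of what such a request might carry, here is a sketch that assembles a proactive RunCycle notification body for the Home Graph `reportStateAndNotification` method. The field names reflect our understanding of the notifications guide and should be checked against the RunCycle trait reference. All IDs are placeholders. Authentication and the HTTP call itself are left out, and `buildRunCycleNotification` is an illustrative name.

```javascript
// Sketch: build a Home Graph reportStateAndNotification body for a proactive
// RunCycle notification (for example, a washer finishing its cycle).
// Field names are assumptions based on the notifications guide; IDs are placeholders.
function buildRunCycleNotification({ requestId, agentUserId, eventId, deviceId, states }) {
  const devices = {
    notifications: {
      [deviceId]: {
        RunCycle: {
          priority: 0,
          status: 'SUCCESS',
          currentCycleRemainingTime: 0,
        },
      },
    },
  };
  // Optionally report the matching device state change in the same request.
  if (states) {
    devices.states = { [deviceId]: states };
  }
  // eventId should be unique for each device event.
  return { requestId, agentUserId, eventId, payload: { devices } };
}
```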

We are adding support for traits where asynchronous requirements are a core use case. The following device traits now support follow-up responses to user queries:

Additionally, we are launching proactive notification alerts for the following traits:

For more information, check out the developer guides and samples, or check out the Notifications video.

We want to hear from you, so continue sharing your feedback with us through the issue tracker, and engage with other smart home developers in the /r/GoogleAssistantDev community. Follow @ActionsOnGoogle on Twitter for more of our team's updates, and tweet using #AoGDevs to share what you’re working on. We can’t wait to see what you build!

Source: Google Developers Blog

Everything Assistant at I/O

Posted by Mike Bifulco

We’re excited to host the first ever virtual Google I/O Conference this year, from May 18-20, 2021, and everyone's invited! Developers around the world will join us for keynotes, technical sessions, codelabs, demos, meetups, workshops, and Ask Me Anything (AMA) sessions hosted by Googlers whose teams have been hard at work preparing new features, APIs, and tools for you to try out. We can’t wait for you to explore everything Google has to share. Given the sheer amount of content that will be shared during those 3 days, this guide is meant to help you find the most relevant sessions if you’re building and integrating with Google Assistant.

With that in mind, here’s a rundown of everything Assistant at Google I/O 2021:

Keynote: What’s New in Google Assistant (register)

We’ll kick off news from Assistant with our keynote session, which will be livestreamed on May 19th at 9:45am PST. Expect to hear about what’s happened in Assistant over the past year, new product announcements, feature updates, and tooling changes.

Keynote: What’s New in Smart Home (register)

In celebration of Google Assistant's 5th birthday, we'll share our Smart Home journey and the things we’ve learned along the way. We'll also dive into product vision, new product announcements, and showcase great Assistant experiences built by our developer community. Catch the Smart Home keynote on May 19th at 4:15pm PST.

Technical Sessions

Technical sessions are 15 minute deep dives into new features, tools, and other announcements from product teams. These 4 sessions will be available on demand, so you can watch them any time after they officially launch during the event.

Driving a Successful Launch for Conversational Actions (register)

In this session, we’ll discuss marketing activities that will help users discover and engage with what you’ve built on Google Assistant. Learn some of the basics of putting together a marketing team, a go-to-market plan, and some recommended activities for promoting engagement with your Conversational Actions.

How to Voicify Your Android App (register)

In this session, you’ll learn how to implement voice capabilities in your Android App. Get users into your app with a voice command using App Actions.

Android Shortcuts for Assistant (register)

Now that you've added a layer of voice interaction to your Android app, learn what's new with Android Shortcuts and how they can be extended to the Google Assistant.

Refreshing Widgets (register)

Widgets in Android 12 are coming with a fresh new look and feel. Come to this session to learn how you can make the most of what’s coming to Widgets, while also making them more useful and discoverable through integrations with Assistant and Assistant Auto.

Ask Me Anything (AMA)

AMAs are a great opportunity for you to have your questions fielded by Googlers. If you register for I/O, you’ll be able to pre-submit questions to any of these AMAs. Teams of Googlers will be answering audience questions live during I/O. All AMA sessions will be livestreamed at specific dates and times, so be sure to add them to your calendar.

App Actions: Ask Me Anything

May 19th, 10:15am PST (register)

This is the place to bring all of your burning questions about App Actions for Android. Our App Actions team will include Program Managers, Developer Advocates, and Engineers who are looking forward to answering your questions. Maybe you’re building an app which uses Custom Intents, or you’ve got questions about some of the new feature announcements from our Technical Sessions (see above!) - the team is looking forward to helping.

Games on Google Assistant: Ask Me Anything

May 19th, 11:00pm PST (register)

Join a panel of Googlers to ask your questions about building Games with Google Assistant. Our team of Product Managers and Game developers are here to help you - from designing and building games, to toolchain questions, to figuring out what types of games people are playing on their smart devices.

Workshops

This year, our workshops will be conducted online via livestream. Each workshop will be led by a Googler providing instruction alongside a team of Googler TAs, who will be there to answer your questions via live chat. Workshops will show you how to apply the things you learn at I/O by giving you hands-on experience with new tools and APIs. Each workshop has limited space for registrations, so be sure to sign up early if you’re interested.

Extend an Android app to Google Assistant with App Actions

May 19th, 11:00am PST (register)

Learn to develop App Actions using common built-in intents in this intermediate codelab, enabling users to open app features and search for in-app content, with Google Assistant.

Debugging the Smart Home

May 19th, 11:30pm PST (register)

Improve your products' reliability and user experience with Google's new smart home quality tools in this intermediate codelab. Learn how to view, analyze, debug and fix issues with your smart home integrations.

Meetups

Women in Voice Meetup

May 20th, 4:00pm PST (register)

This meetup will be a chance for developers to share influential work by women in Voice AI and to discuss ways allies can help women in Voice to be more successful while building a more inclusive ecosystem.

Smart Home Developer Meetup

[Americas] May 18, 3:00pm PST (register)

[APAC] May 19th, 9:00pm PST (register)

[EMEA] May 20th, 6:00am PST (register)

This meetup will be a chance for developers interested in Smart Home to chat with the Smart Home partner engineering team about developing and debugging smart home integrations, share projects, or ask questions.

Register now

Registration for Google I/O 2021 is now open - and attending I/O 2021 is entirely free and open to all. We hope to see you there, and can’t wait to share what we’ve been working on with you. To register for the event, head over to the Google I/O registration page.

Source: Google Developers Blog

Recommended strategies and best practices for designing and developing games and stories on Google Assistant

Posted by Wally Brill and Jessica Dene Earley-Cha

Since we launched Interactive Canvas, and especially in the last year, we have been helping developers create great storytelling and gaming experiences for Google Assistant on smart displays. Along the way we’ve learned a lot about what does and doesn’t work. Building these kinds of interactive voice experiences is still a relatively new endeavor, and so we want to share what we've learned to help you build the next great gaming or storytelling experience for Assistant.

Here are three key things to keep in mind when you’re designing and developing interactive games and stories. We selected these three from a longer list of lessons learned (stay tuned to the end for the link to all 10+ lessons) because they depend on Actions Builder/SDK functionality and can differ slightly from traditional conversation design for voice-only experiences.

1. Keep the Text-To-Speech (TTS) brief

Text-to-speech, or computer-generated voice, has improved exponentially in the last few years, but it isn’t perfect. Through user testing, we’ve learned that users (especially kids) don’t like listening to long TTS messages. Of course, some content (like interactive stories) should not be reduced. However, for games, try to keep your script simple. Wherever possible, leverage the power of the visual medium and show, don’t tell. Consider providing a skip button on the screen so that users can read and move forward without waiting until the TTS is finished. In many cases, the TTS and the on-screen text don’t need to mirror each other. For example, the TTS may say "Great job! Let's move to the next question. What’s the name of the big red dog?" while the text on screen simply says "What is the name of the big red dog?"

Implementation

You can provide different audio and screen-based prompts by using a simple response, which allows different wording in the speech and text sections of the response. With Actions Builder, you can do this in the prompt definition (shown here in YAML) or with the Node.js client library in your webhook. The following code samples show how to implement the example discussed above:

```yaml
candidates:
  - first_simple:
      variants:
        - speech: Great job! Let's move to the next question. What’s the name of the big red dog?
          text: What is the name of the big red dog?
```

Note: implementation in YAML for Actions Builder

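```javascript
const {conversation, Simple} = require('@assistant/conversation');

const app = conversation();

app.handle('yourHandlerName', (conv) => {
  // Speech includes the transition; the screen shows only the question.
  conv.add(new Simple({
    speech: 'Great job! Let\'s move to the next question. What’s the name of the big red dog?',
    text: 'What is the name of the big red dog?',
  }));
});
```

Note: implementation with node client library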

2. Consider both first-time and returning users

Frequent users don't need to hear the same instructions repeatedly, so optimize the experience for returning users. If it's a user's first time, try to explain the full context. If they revisit your Action, acknowledge their return with a "Welcome back" message, and try to shorten (or taper) the instructions. If you notice the user has returned more than 3 or 4 times, try to get to the point as quickly as possible.

An example of tapering:

- Instructions to first time users: “Just say words you can make from the letters provided. Are you ready to begin?”

- For a returning user: “Make up words from the jumbled letters. Ready?”

- For a frequent user: “Are you ready to play?”
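The tapering above can be expressed as a small function keyed on how many times the user has visited before. This is a sketch under stated assumptions: you would need to track the visit count yourself (for example, in a counter kept in user storage), and `taperedInstructions` and the exact thresholds are illustrative choices based on the "3 or 4 times" guidance.

```javascript
// Sketch: pick instructions by the number of previous visits.
// Tracking `previousVisits` (e.g. a counter in user storage) is up to you;
// the thresholds follow the "more than 3 or 4 times" guidance above.
function taperedInstructions(previousVisits) {
  if (previousVisits === 0) {
    // First-time user: explain the full context.
    return 'Just say words you can make from the letters provided. Are you ready to begin?';
  }
  if (previousVisits <= 3) {
    // Returning user: shorter instructions.
    return 'Make up words from the jumbled letters. Ready?';
  }
  // Frequent user: get to the point.
  return 'Are you ready to play?';
}
```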

Implementation

You can check the lastSeenTime property in the User object of the HTTP request. The lastSeenTime property is a timestamp of the last interaction with this particular user. If this is the first time a user is interacting with your Action, this field is omitted. Since it’s a timestamp, you can have different messages for a user whose last interaction was more than 3 months, 3 weeks, or 3 days ago. Below is an example with a tapered default message. If the lastSeenTime property is omitted, meaning it's the first time the user is interacting with this Action, the message is replaced with a longer message containing more details.

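```javascript
const {conversation} = require('@assistant/conversation');
const app = conversation();

app.handle('greetingInstructions', conv => {
  // Default to the shorter, tapered instructions for returning users.
  let message = 'Make up words from the jumbled letters. Ready?';
  // lastSeenTime is omitted on a user's first interaction,
  // so first-time users get the full instructions instead.
  if (!conv.user.lastSeenTime) {
    message = 'Just say words you can make from the letters provided. Are you ready to begin?';
  }
  conv.add(message);
});
```

Note: implementation with node client library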
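The three tiers of tapering (first-time, returning, frequent) can be combined with the time since the last visit. Here is a minimal sketch that assumes lastSeenTime arrives as a timestamp string that `Date` can parse. It also assumes you keep your own visit counter, for example in user storage. The platform does not provide that counter. The 90-day and 21-day thresholds and the `taperedInstructions` helper are illustrative choices, not part of the original sample.

```javascript
// Hypothetical helper that picks a tapered instruction message.
// Assumptions: lastSeenTime is a timestamp string (undefined for first-time
// users); visitCount is a counter you maintain yourself, e.g. in user storage.
const MESSAGES = {
  firstTime: 'Just say words you can make from the letters provided. Are you ready to begin?',
  returning: 'Make up words from the jumbled letters. Ready?',
  frequent: 'Are you ready to play?',
};

const DAY_MS = 24 * 60 * 60 * 1000;

function taperedInstructions(lastSeenTime, visitCount, now = new Date()) {
  // No lastSeenTime: this is the user's first interaction.
  if (!lastSeenTime) {
    return MESSAGES.firstTime;
  }
  const daysAway = (now.getTime() - new Date(lastSeenTime).getTime()) / DAY_MS;
  // Away for roughly three months or more: repeat the full instructions.
  if (daysAway > 90) {
    return MESSAGES.firstTime;
  }
  // Away for more than about three weeks, or only a few visits so far:
  // use the medium-length reminder.
  if (daysAway > 21 || visitCount <= 3) {
    return MESSAGES.returning;
  }
  // Recent, frequent users get straight to the point.
  return MESSAGES.frequent;
}
```

In a handler, you might call `taperedInstructions(conv.user.lastSeenTime, visits)`, where `visits` is whatever counter you have stored. Keeping the choice in a pure function makes the thresholds easy to test and tune.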

3. Support strongly recommended intents

Some commonly used intents greatly enhance the user experience by providing basic commands for interacting with your voice app. If your Action doesn’t support them, users might get frustrated. These intents give your voice user interface a basic structure and help users navigate your Action.

- Exit / Quit

Closes the Action.

- Repeat / Say that again

Makes it easy for users to hear the immediately preceding content at any point.

- Play Again

Gives users an opportunity to re-engage with their favorite experiences.

- Help

Provides more detailed instructions for users who may be lost. Depending on the type of Action, this may need to be context-specific. After the Help message plays, return users to where they left off in the game.

- Pause, Resume

Provides a visual indication that the game has been paused, and provides both visual and voice options to resume.

- Skip

Moves to the next decision point.

- Home / Menu

Moves to the home or main menu of an Action. A visual affordance for this is a great idea: without visual cues, it’s hard for users to know that they can navigate by voice, even when it’s supported.

- Go back

Moves to the previous page in an interactive story.

Implementation

Actions Builder and the Actions SDK support system intents, with training phrases provided by Google, that cover a few of these use cases:

- Exit / Quit -> actions.intent.CANCEL

This intent is matched when the user wants to exit your Action during a conversation, such as a user saying, "I want to quit."

- Repeat / Say that again -> actions.intent.REPEAT

This intent is matched when a user asks the Action to repeat.
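Matching actions.intent.REPEAT is only half the job; your handler also has to know what was last said. One common approach is to save each prompt in session storage and replay it on request; treat it as an assumption rather than an official pattern. The sketch below assumes a `conv` object exposing `conv.session.params` and `conv.add()`, as in @assistant/conversation. The helper names and the `repeat` handler name are hypothetical. A small stand-in object lets it run without the library.

```javascript
// Sends a prompt and remembers it so it can be repeated later.
// Assumption: conv.session.params persists across turns in the session.
function addAndRemember(conv, message) {
  conv.session.params.lastPrompt = message; // store a plain string
  conv.add(message);
}

// Replays the last remembered prompt, with a fallback if there is none.
function repeatLastPrompt(conv) {
  const last = conv.session.params.lastPrompt;
  conv.add(last || "Sorry, there's nothing to repeat yet.");
}

// Possible wiring (hypothetical handler attached to actions.intent.REPEAT):
// app.handle('repeat', conv => repeatLastPrompt(conv));

// Minimal stand-in for conv so the sketch runs on its own.
const fakeConv = {
  session: { params: {} },
  prompts: [],
  add(message) { this.prompts.push(message); },
};
addAndRemember(fakeConv, 'What is the name of the big red dog?');
repeatLastPrompt(fakeConv);
console.log(fakeConv.prompts);
```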

For the remaining intents, you can create user intents and either make them global (so they can be triggered in any scene) or add them to a particular scene. Below are examples from a variety of projects to get you started:

- Play Again -> Snow Pal's play_again

- Skip -> Gnome Garden's skip

- Home / Menu -> Gnome Garden's setting_menu
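The Go back intent has no example project in the list above. One possible approach is to keep a stack of visited story pages in session storage and pop it when a hypothetical `go_back` user intent matches. The sketch assumes string page IDs and a `conv.session.params` object that persists across turns, as in @assistant/conversation. `goToPage` and `goBack` are illustrative names.

```javascript
// Records that the user moved to a new story page.
// Assumption: conv.session.params persists for the whole session.
function goToPage(conv, pageId) {
  const history = conv.session.params.pageHistory || [];
  history.push(pageId);
  conv.session.params.pageHistory = history;
  return pageId;
}

// Returns the previous page, staying on the first page if there is
// nothing earlier to go back to (undefined if no page was visited).
function goBack(conv) {
  const history = conv.session.params.pageHistory || [];
  if (history.length > 1) {
    history.pop(); // drop the current page
  }
  conv.session.params.pageHistory = history;
  return history[history.length - 1];
}

// Possible wiring (hypothetical go_back user intent and handler):
// app.handle('go_back', conv => { const page = goBack(conv); /* render page */ });
```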

So there you have it: three suggestions to keep in mind for making amazing interactive games and story experiences that people will want to use over and over again. To check out the full list of our recommendations, go to the Lessons Learned page.

Thanks for reading! To share your thoughts or questions, join us on Reddit at r/GoogleAssistantDev.

Follow @ActionsOnGoogle on Twitter for more of our team's updates, and tweet using #AoGDevs to share what you’re working on. Can’t wait to see what you build!

Source: Google Developers Blog

Policy changes and certification requirement updates for Smart Home Actions

Posted by Toni Klopfenstein, Developer Advocate

As more developers onboard to the Smart Home Actions platform, we have gathered feedback about the certification process for launching an Action. Today, we are pleased to announce we have updated our Actions policy to enable developers to more quickly develop their Actions, and to help streamline the certification and launch process for developers. These updates will also help to provide a consistent, cohesive experience for smart device users.

Device quality guidelines

Ensuring each device type meets quality benchmark metrics provides end users with reliable and timely responses from their smart devices. With these policy updates, minimum latency and reliability metrics have been added to each device type guide. To ensure consistent device control and timely updates to Home Graph, all cloud-controlled smart devices need to maintain a persistent connection through a hub or the device itself, and cannot rely on mobile devices or tablets.

Along with these quality benchmarks, we have also updated our guides with required and recommended traits for each device. By implementing these within an Action, developers can ensure their end users can trigger devices in a consistent manner and access the full range of device capabilities. To assist you in ensuring your Action is compliant with the updated policy, the Test Suite testing tool will now more clearly flag any device type or trait issues.

Safety and security

Smart home users care deeply about the safety and security of the devices integrated into their homes, so we have also updated our requirements for secondary user verification. This verification step must be implemented for any Action that can set a device in an unprotected state, such as unlocking a door, regardless of whether you are building a Conversational Action or a Smart Home Action. Once a secondary verification method is configured, developers can give users a way to opt out of this flow. For developers who want to offer their customers an opt-out option, we have provided a warning message template to ensure users understand the security implications of turning this feature off.
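Here is a minimal sketch of how a PIN-based secondary verification step might look inside a Smart Home EXECUTE handler. It is based on the smart home two-factor authentication response shape as we understand it: the command result carries errorCode 'challengeNeeded', with challengeNeeded.type set to 'pinNeeded' or, after a wrong PIN, 'challengeFailedPinNeeded'. A simple spoken confirmation uses 'ackNeeded' instead. `EXPECTED_PIN`, `unlockCommandResult`, and the device ID are hypothetical. Please confirm the field names against the current smart home documentation before relying on them.

```javascript
// Hypothetical PIN check for an unlock command in a Smart Home EXECUTE handler.
// Assumptions: the execution object carries an optional challenge.pin, and the
// per-command result shape follows the smart home two-factor auth docs.
const EXPECTED_PIN = '1234'; // placeholder; look up the user's real PIN securely

function unlockCommandResult(deviceId, execution) {
  const challenge = execution.challenge;
  if (!challenge || !challenge.pin) {
    // No PIN yet: ask Assistant to prompt the user for one.
    return {
      ids: [deviceId],
      status: 'ERROR',
      errorCode: 'challengeNeeded',
      challengeNeeded: { type: 'pinNeeded' },
    };
  }
  if (challenge.pin !== EXPECTED_PIN) {
    // Wrong PIN: ask again and tell Assistant the previous attempt failed.
    return {
      ids: [deviceId],
      status: 'ERROR',
      errorCode: 'challengeNeeded',
      challengeNeeded: { type: 'challengeFailedPinNeeded' },
    };
  }
  // PIN accepted: unlock the device here (omitted) and report the new state.
  return {
    ids: [deviceId],
    status: 'SUCCESS',
    states: { isLocked: false, online: true },
  };
}
```

Keeping the check in one function that returns the per-command result makes it easy to place inside the `commands` array of a larger EXECUTE response.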

For devices that may pose heightened safety risks, such as cooking appliances, we require UL certificates or similar certification forms to be provided along with the Test Suite results before an Action can be released to production.

Works With 'Hey Google' badge

These policy updates will also affect the use of the Works With 'Hey Google' badge. The badge will only be available for use on marketing materials for new Smart Home Direct Actions that have successfully integrated any device types referenced.

Any Conversational Actions currently using the badge will not be approved for use for any new marketing assets, including packaging/product refreshes. Any digital assets using the badge will need to be updated to remove the badge by the end of 2021.

Timeline

With today's roll-out, there will be a one-month grace period for developers to update new integrations to match the new policy requirements. For Actions currently deployed to production, compliance will be evaluated when the Action is recertified. Once integrations have been certified and launched to production, Actions will need to be recertified annually, or any time new devices or device functionality are added to the Action. Notifications for recertification will be sent to the developer account associated with your Action in the console.

This policy grace period ends April 12, 2021.

Please review the updated policy, as well as our updated docs for launching your Smart Home Action. You can also check out our policy video for more information.

We want to hear from you, so continue sharing your feedback with us through the issue tracker, and engage with other smart home developers in the /r/GoogleAssistantDev community. Follow @ActionsOnGoogle on Twitter for more of our team's updates, and tweet using #AoGDevs to share what you’re working on. We can’t wait to see what you build!

Source: Google Developers Blog

Announcing New Smart Home App Discovery Features

Posted by Toni Klopfenstein, Developer Advocate

When a user connects a smart device to the Google Assistant via the Home app, the user must select the appropriate related Action from the list of all available Actions. The user then clicks through multiple screens to complete their device setup. Today, we're releasing two new features to improve this device discovery process and drive customer adoption of your Smart Home Action through the Google Home app. App Discovery and Deep Linking are two convenience features that help users find your Google Assistant-compatible smart devices quickly and onboard faster.

App Discovery enables users to quickly find your smart home Action thanks to suggestion chips within the Google Home app. You can implement this new feature through the Actions Console by creating a verified brand link between your Action, your website, and your mobile app. App Discovery doesn't require any coding work to implement, making this a development-light feature that provides great improvements to the user experience of device linking.

In addition to helping users discover your Action directly through suggestion chips, Deep Linking enables you to guide users to your account linking flow within the Google Home app in one step. These deep links are easily added to your mobile app or web content, guiding users to your smart home integration with a single tap.

Deep Linking and App Discovery can help you create a more streamlined onboarding experience for your users, driving increased engagement and user satisfaction, and can be implemented with minimal engineering work.

To implement App Discovery and Deep Linking for your Smart Home Action, check out the developer documents, or watch the video covering these new features.

You can also check out the smart home codelabs if you are just starting to build out your Action.

We want to hear from you, so continue sharing your feedback with us through the issue tracker, and engage with other smart home developers in the /r/GoogleAssistantDev community. Follow @ActionsOnGoogle on Twitter for more of our team's updates, and tweet using #AoGDevs to share what you’re working on. We can’t wait to see what you build!