For the 10th anniversary of Kubernetes, I wanted to look at the ecosystem we created together.

I recently wrote about the pervasiveness and magnitude of the Kubernetes and CNCF ecosystem. This was the result of a deliberate flywheel. This is a diagram I used several years ago:

|

Because Kubernetes runs on public clouds, private clouds, on the edge, etc., it is attractive to developers and vendors to build solutions targeting its users. Most tools built for Kubernetes or integrated with Kubernetes can work across all those environments, whereas integrating directly with cloud providers directly entails individual work for each one. Thus, Kubernetes created a large addressable market with a comparatively lower cost to build.

We also deliberately encouraged open source contribution, to Kubernetes and to other projects. Many tools in the ecosystem, not just those in CNCF, are open source. This includes many tools built by Kubernetes users and tools built by vendors but were too small to be products, as well as those intended to be the cores of products. Developers built and/or wrote about solutions to problems they experienced or saw, and shared them with the community. This made Kubernetes more usable and more visible, which likely attracted more users.

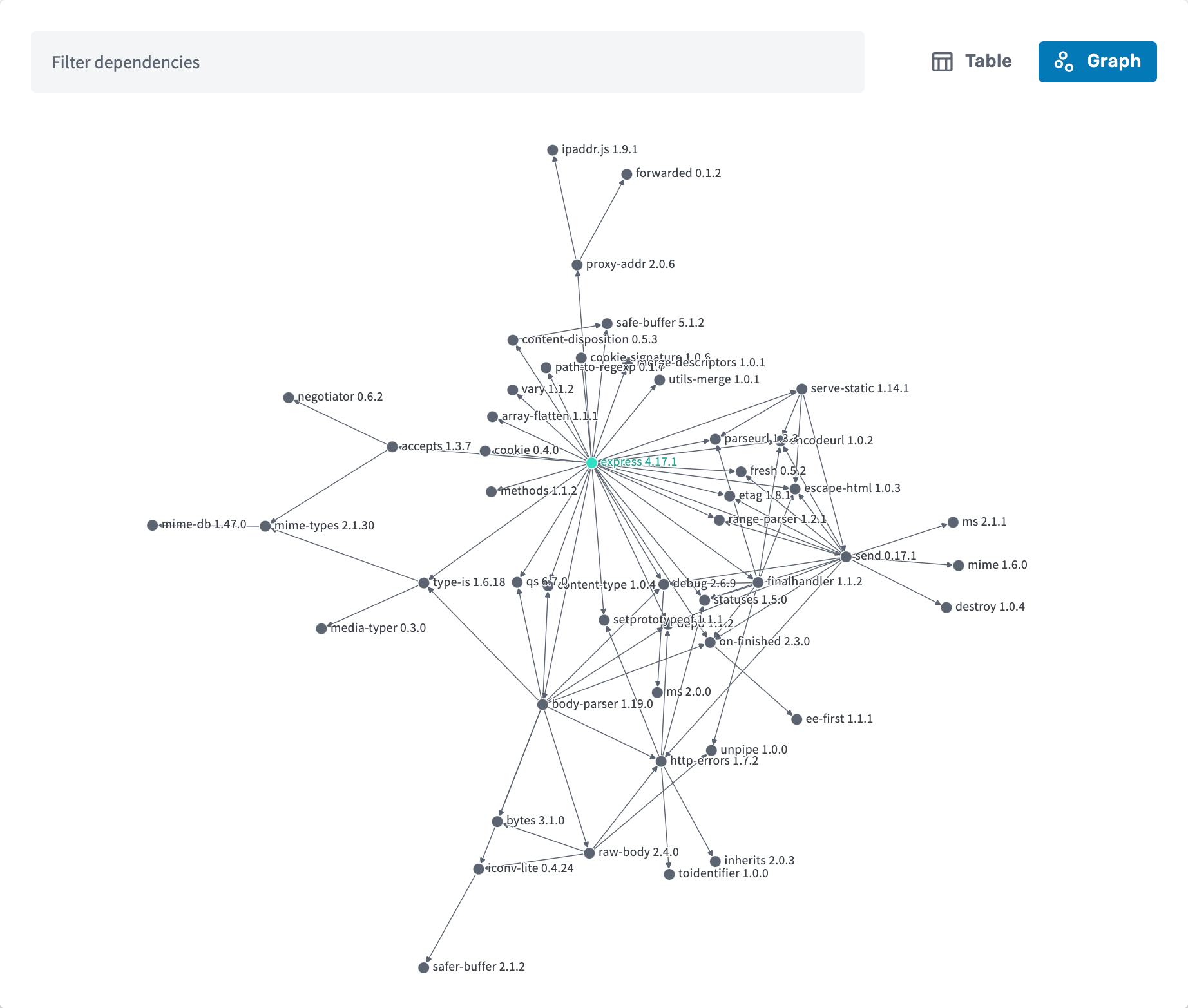

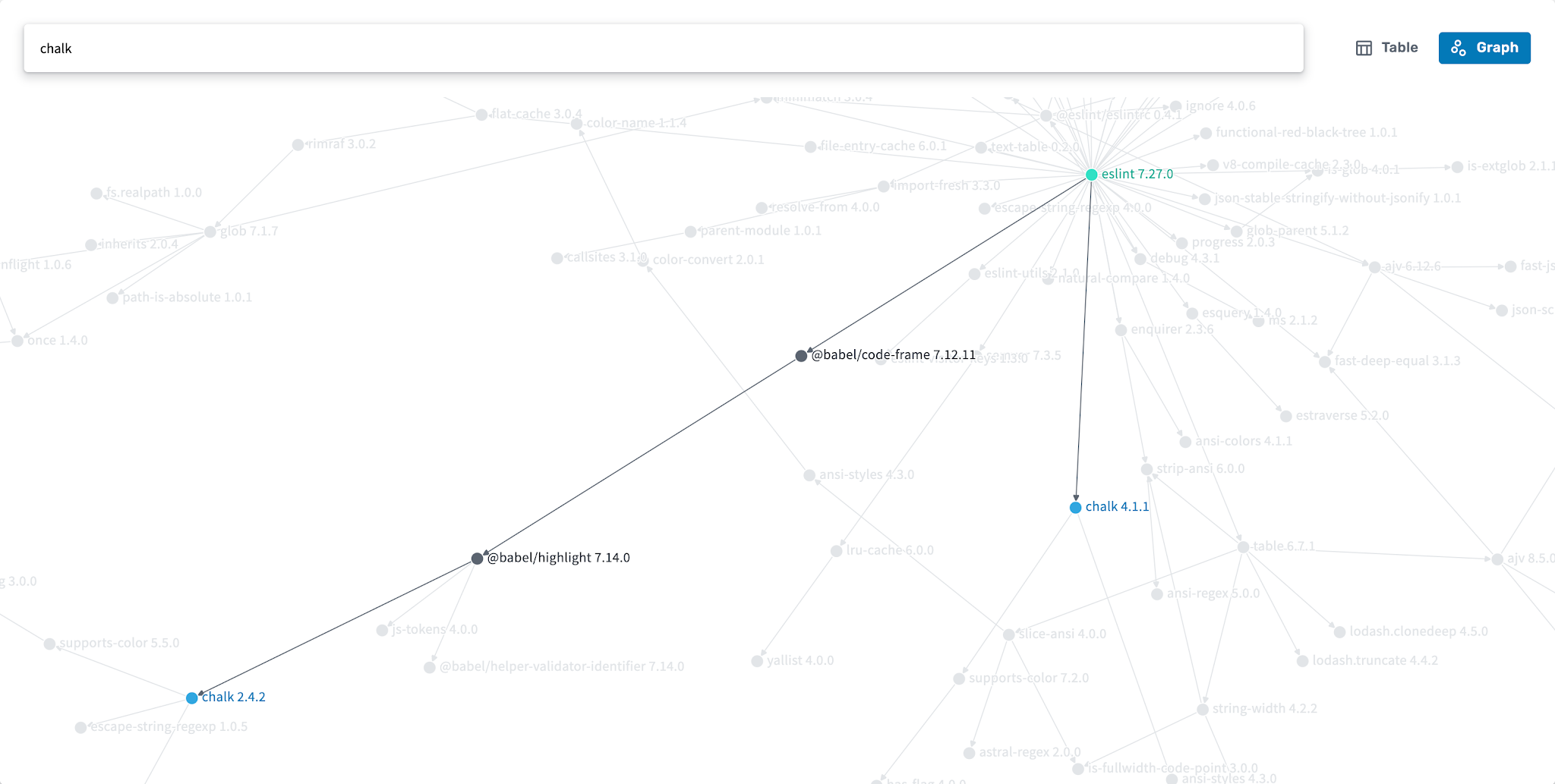

Today, the result is that if you need a tool, extension, or off-the-shelf component for pretty much anything, you can probably find one compatible with Kubernetes rather than having to build it yourself, and it’s more likely that you can find one that works out of the box with Kubernetes than for your cloud provider. And often there are several options to choose from. I’ll just mention a few. Also, I want to give a shout out to Kubetools, which has a great list of Kubernetes tools that helped me discover a few new ones.

For example, if you’re an application developer whose application runs on Kubernetes, you can build and deploy with Skaffold, test it on Kubernetes locally with Minikube, or connect to Kubernetes remotely with Telepresence, or sync to a preview environment with Gitpod or Okteto. When you need to debug multiple instances, you can use kubetail to view the logs in real time.

To deploy to production, you can use GitOps tools like FluxCD, ArgoCD, or Google Cloud’s Config Sync. You can perform database migrations with Schemahero. To aggregate logs from your production deployments, you can use fluentbit. To monitor them, you have your pick of observability tools, including Prometheus, which was inspired by Google’s Borgmon tool similar to how Kubernetes was inspired by Borg, and which was the 2nd project accepted into the CNCF.

If your application needs to receive traffic from the Internet, you can use one of the many Ingress controllers or Gateway implementations to configure HTTPS routing, and cert-manager to obtain and renew the certificates. For mutual TLS and advanced routing, you can use a service mesh like Istio, and take advantage of it for progressive delivery using tools like Flagger.

If you have a more specialized type of workload to run, you can run event-driven workloads using Knative, batch workloads using Kueue, ML workflows using Kubeflow, and Kafka using Strimzi.

If you’re responsible for operating Kubernetes workloads, to monitor costs, there’s kubecost. To enforce policy constraints, there’s OPA Gatekeeper and Kyverno. For disaster recovery, you can use Velero. To debug permissions issues, there are RBAC tools. And, of course, there are AI-powered assistants.

You can manage infrastructure using Kubernetes, such as using Config Connector or Crossplane, so you don’t need to learn a different syntax and toolchain to do that.

There are tools with a retro experience like K9s and Ktop, fun tools like xlskubectl, and tools that are both retro and fun like Kubeinvaders.

If this makes you interested in migrating to Kubernetes, you can use a tool like move2kube or kompose.

This just scratched the surface of the great tools available for Kubernetes. I view the ecosystem as more of a candy store than as a hellscape. It can take time to discover, learn, and test these tools, but overall I believe they make the Kubernetes ecosystem more productive. To develop any one of these tools yourself would require a significant time investment.

I expect new tools to continue to emerge as the use cases for Kubernetes evolve and expand. I can’t wait to see what people come up with.

By Brian Grant, Distinguished Engineer, Google Cloud Developer Experience