Android Studio 3.4

Posted by Jamal Eason, Product Manager, Android

After nearly six months of development, Android Studio 3.4 is ready to download today on the stable release channel. This is a milestone release of the Project Marble effort from the Android Studio team. Project Marble is our focus on making the fundamental features and flows of the Integrated Development Environment (IDE) rock-solid. On top of many performance improvements and bug fixes we made in Android Studio 3.4, we are excited to release a small but focused set of new features that address core developer workflows for app building & resource management.

Part of the effort of Project Marble is to address user-facing issues in core features of the IDE. At the top of the list for Android Studio 3.4 is an updated Project Structure Dialog (PSD), a revamped user interface for managing dependencies in your project's Gradle build files. In another build-related change, R8 replaces ProGuard as the default code shrinker and obfuscator. To aid app design, we incorporated your feedback to create a new resource management tool to bulk import, preview, and manage resources for your project. Lastly, we are shipping an updated Android Emulator that uses fewer system resources and supports the Android Q Beta. Overall, these features are designed to make you more productive in your day-to-day app development workflow.

Alongside the stable release of Android Studio 3.4, we recently published in-depth blog posts on how we are investigating and fixing a range of issues under the auspices of Project Marble. You should check them out as you download the latest update to Android Studio:

- Project Marble: Apply Changes

- Improving build speed in Android Studio

- Android Emulator: Project Marble Improvements

- Android Studio Project Marble: Lint Performance

The development work for Project Marble is still ongoing, but Android Studio 3.4 incorporates productivity features and over 300 bug fixes and stability enhancements that you do not want to miss. Watch and read below for some of the notable changes and enhancements that you will find in Android Studio 3.4.

Develop

- Resource Manager - We have heard from you that asset management and navigation can be clunky and tedious in Android Studio, especially as your app grows in complexity. The Resource Manager is a new tool to visualize the drawables, colors, and layouts across your app project in a consolidated view. In addition to visualization, the panel supports drag-and-drop bulk asset import and, by popular request, bulk SVG to VectorDrawable conversion. These accelerators will hopefully help you manage assets you get from a design team, or simply give you a more organized view of project assets. Learn more.

Resource Manager

- Import Intentions - As you work with new Jetpack and Firebase libraries, Android Studio 3.4 will recognize common classes in these libraries and suggest, via code intentions, adding the required import statement and library dependency to your Gradle project files. This optimization can be a time saver since it keeps you in the context of your code. Moreover, since Jetpack libraries are modularized, Android Studio can find the exact library or minimum set of libraries required to use a new Jetpack class.

Jetpack Import Intentions

- Layout Editor Properties Panel - As part of our product refinement and polish, we refreshed the Layout Editor Properties panel. It now has a single pane with collapsible sections for properties. Additionally, errors and warnings have their own highlight colors, each property has a resource binding control, and there is an updated color picker.

Layout Editor Properties Panel

- IntelliJ Platform Update - Android Studio 3.4 includes the IntelliJ IDEA 2018.3.4 platform release. This update has a wide range of improvements, from support for multi-line TODOs to an updated Search Everywhere feature. Learn more.

Build

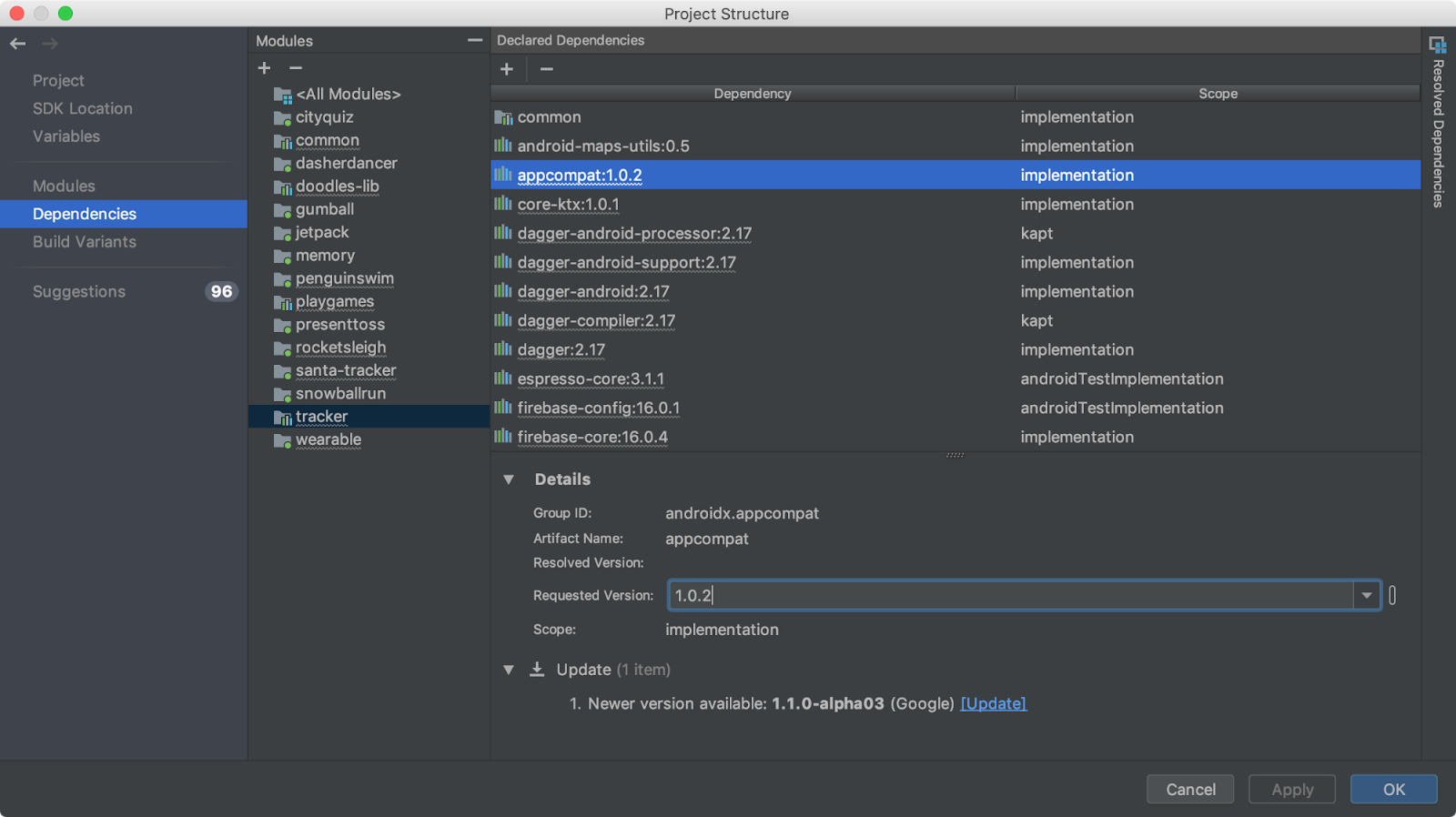

- Project Structure Dialog - A long-standing request from many developers is a user interface front end for managing Gradle project files. We have more plans for this area, but Android Studio 3.4 includes the next phase of improvements to the Project Structure Dialog (PSD). The new PSD allows you to see and add dependencies to your project at the module level. Additionally, the new PSD displays build variables, suggestions to improve your build file configuration, and more! Although the latest Android Gradle plugin 3.4 also has improvements, you do not have to upgrade your Gradle plugin version to take advantage of the new PSD. Learn more.

Project Structure Dialog

- R8 by Default - Almost two years ago we previewed R8 as the replacement for ProGuard. R8 code shrinking helps reduce the size of your APK by removing unused code and resources and by making the remaining code take less space. Additionally, in contrast to ProGuard, R8 combines shrinking, desugaring, and dexing into a single step, which turns out to be a more efficient approach for Android apps. After additional validation and testing last year, R8 is now the default code shrinker for new projects created with Android Studio 3.4 and for projects using Android Gradle plugin 3.4 and higher. Learn more.

Test

- Android Emulator Skin updates & Android Q Beta Emulator System Image - With Android Studio 3.4 we released the latest Google Pixel 3 and Google Pixel 3 XL device skins. With this release, you can also download Android Q Beta emulator system images to test your app on Android Q. Please note that we recommend running the canary version of Android Studio and the emulator to get the latest compatibility changes during the Android Q Beta program.

Android Emulator - Pixel 3 XL Emulator Skin

To recap, Android Studio 3.4 includes these new enhancements & features:

Develop

- Resource Manager

- Import Intentions

- Layout Editor Properties Panel

- IntelliJ 2018.3.4 Platform Update

Build

- Incremental Kotlin annotation processing (Kotlin 1.3.30 Update)

- Project Structure Dialog

- R8 by Default

Test

- Emulator Device Skins

- Android Q Beta Emulator System Image Support

Check out the Android Studio release notes, Android Gradle plugin release notes, and the Android Emulator release notes for more details.

Getting Started

Download

Download the latest version of Android Studio 3.4 from the download page. If you are using a previous release of Android Studio, you can simply update to the latest version of Android Studio. If you want to maintain a stable version of Android Studio, you can run the stable release version and canary release versions of Android Studio at the same time. Learn more.

To use the mentioned Android Emulator features make sure you are running at least Android Emulator v28.0.22 downloaded via the Android Studio SDK Manager.

We appreciate any feedback on things you like, and issues or features you would like to see. If you find a bug or issue, feel free to file an issue. Follow us, the Android Studio development team, on Twitter and on Medium.

Source: Android Developers Blog

MorphNet: Towards Faster and Smaller Neural Networks

Deep neural networks (DNNs) have demonstrated remarkable effectiveness in solving hard problems of practical relevance such as image classification, text recognition and speech transcription. However, designing a suitable DNN architecture for a given problem continues to be a challenging task. Given the large search space of possible architectures, designing a network from scratch for your specific application can be prohibitively expensive in terms of computational resources and time. Approaches such as Neural Architecture Search and AdaNet use machine learning to search the design space in order to find improved architectures. An alternative is to take an existing architecture for a similar problem and, in one shot, optimize it for the task at hand.

Here we describe MorphNet, a sophisticated technique for neural network model refinement, which takes the latter approach. Originally presented in our paper, “MorphNet: Fast & Simple Resource-Constrained Structure Learning of Deep Networks”, MorphNet takes an existing neural network as input and produces a new neural network that is smaller, faster, and yields better performance tailored to a new problem. We've applied the technique to Google-scale problems to design production-serving networks that are both smaller and more accurate, and now we have open sourced the TensorFlow implementation of MorphNet to the community so that you can use it to make your models more efficient.

How it Works

MorphNet optimizes a neural network through a cycle of shrinking and expanding phases. In the shrinking phase, MorphNet identifies inefficient neurons and prunes them from the network by applying a sparsifying regularizer such that the total loss function of the network includes a cost for each neuron. However, rather than applying a uniform cost per neuron, MorphNet calculates a neuron cost with respect to the targeted resource. As training progresses, the optimizer is aware of the resource cost when calculating gradients, and thus learns which neurons are resource-efficient and which can be removed.
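To make the idea of a resource-weighted penalty concrete, here is a simplified Python sketch. It is not the open-source MorphNet API or the paper's exact regularizer: it assumes each neuron has a scale factor (the hypothetical `gammas`, for example batch-norm gammas) and that a neuron's FLOP cost is its fan-in times the number of spatial positions at which it is evaluated. Both function names are hypothetical.

```python
from typing import List


def neuron_flop_cost(num_inputs: int, spatial_positions: int = 1) -> int:
    """Multiplications attributable to one output neuron (assumed: fan-in x positions)."""
    return num_inputs * spatial_positions


def resource_weighted_penalty(gammas: List[float], num_inputs: int,
                              spatial_positions: int = 1,
                              strength: float = 1e-3) -> float:
    """L1 penalty on per-neuron scale factors, weighted by each neuron's FLOP cost.

    A neuron whose gamma is driven to zero contributes nothing and can be pruned.
    In training, this term would be added to the task loss.
    """
    cost = neuron_flop_cost(num_inputs, spatial_positions)
    return strength * sum(cost * abs(g) for g in gammas)


# Same fan-in and same gammas, but different spatial resolution:
# the high-resolution layer pays far more per neuron.
high_res = resource_weighted_penalty([0.5, 0.5], num_inputs=64, spatial_positions=56 * 56)
low_res = resource_weighted_penalty([0.5, 0.5], num_inputs=64, spatial_positions=7 * 7)
print(high_res > low_res)  # True
```

Because the per-neuron penalty grows with spatial resolution, a FLOP target pushes the optimizer to prune high-resolution layers first, which is consistent with the FLOP-targeted pruning pattern described for ResNet-101 later in this post.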

As an example, consider how MorphNet calculates the computation cost (e.g., FLOPs) of a neural network. For simplicity, let's think of a neural network layer represented as a matrix multiplication. In this case, the layer has 2 inputs (x_n), 6 weights (a, b, ..., f), and 3 outputs (y_n; neurons). Using the standard textbook method of multiplying rows and columns, you can work out that evaluating this layer requires 6 multiplications.

Computation cost of neurons.
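The multiplication count for the example layer can be checked with a small Python sketch. The arrangement of the weights a through f into a 3-by-2 matrix (one row per output neuron), the sample values, and the helper name `matvec_with_count` are assumptions for illustration.

```python
from typing import List, Tuple


def matvec_with_count(weights: List[List[float]], x: List[float]) -> Tuple[List[float], int]:
    """Compute y = W x and count the scalar multiplications performed.

    Each row of `weights` holds the incoming weights of one output neuron.
    """
    mults = 0
    y = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            acc += w * xi
            mults += 1
        y.append(acc)
    return y, mults


# The layer from the text: 2 inputs, 6 weights (a..f), 3 output neurons.
a, b, c, d, e, f = 1.0, 2.0, 3.0, 4.0, 5.0, 6.0
W = [[a, b], [c, d], [e, f]]
x = [1.0, 1.0]

y, full_cost = matvec_with_count(W, x)
print(full_cost)  # 6

# Pruning the third output neuron drops a row: saves 2 multiplications (one per input).
_, cost_without_y3 = matvec_with_count(W[:2], x)
print(cost_without_y3)  # 4

# Pruning the second input drops a column: saves 3 multiplications (one per output).
_, cost_without_x2 = matvec_with_count([[a], [c], [e]], x[:1])
print(cost_without_x2)  # 3
```

A neuron's cost therefore depends on the width of the layers it connects to, which is why MorphNet computes per-neuron cost with respect to the targeted resource rather than applying a uniform cost.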

In the expanding phase, we use a width multiplier to uniformly expand all layer sizes. For example, if we expand by 50%, then an inefficient layer that started with 100 neurons and shrank to 10 would only expand back to 15, while an important layer that only shrank to 80 neurons might expand to 120 and have more resources with which to work. The net effect is a reallocation of computational resources from less efficient parts of the network to parts of the network where they might be more useful.
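The expansion arithmetic from the example can be reproduced with a short Python sketch. The helper `expand_widths` is hypothetical, and it uses total neuron count as a stand-in for the resource budget; FLOP cost does not scale linearly with the multiplier, so a real implementation would have to search for the multiplier that meets a FLOP budget.

```python
from typing import List


def expand_widths(widths: List[int], multiplier: float) -> List[int]:
    """Uniformly scale every layer width, rounding to the nearest whole neuron.

    A layer that was shrunk to 0 neurons stays at 0, so removed branches stay removed.
    """
    return [round(w * multiplier) for w in widths]


original = [100, 100]  # widths before the shrinking phase
shrunk = [10, 80]      # an inefficient layer and an important layer after shrinking

# Expanding by 50% (multiplier 1.5), as in the text.
print(expand_widths(shrunk, 1.5))  # [15, 120]

# Choosing the multiplier so the total neuron count matches the original budget.
multiplier = sum(original) / sum(shrunk)  # 200 / 90
rebalanced = expand_widths(shrunk, multiplier)
print(rebalanced, sum(rebalanced))  # [22, 178] 200
```

In both cases the layer that kept more of its neurons during shrinking receives most of the added capacity, which is the reallocation effect described above.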

One could halt MorphNet after the shrinking phase to simply cut back the network to meet a tighter resource budget. This results in a more efficient network in terms of the targeted cost, but can sometimes yield a degradation in accuracy. Alternatively, the user could also complete the expansion phase, which would match the original target resource cost but with improved accuracy. We'll cover an example of this full implementation later.

Why MorphNet?

There are four key value propositions offered by MorphNet:

- Targeted Regularization: The approach that MorphNet takes towards regularization is more intentional than other sparsifying regularizers. In particular, the MorphNet approach to induce better sparsification is targeted at the reduction of a particular resource (such as FLOPs per inference or model size). This enables better control of the network structures induced by MorphNet, which can be markedly different depending on the application domain and associated constraints. For example, the left panel of the figure below presents a baseline network with the commonly used ResNet-101 architecture trained on JFT. The structures generated by MorphNet when targeting FLOPs (center, with 40% fewer FLOPs) or model size (right, with 43% fewer weights) are dramatically different. When optimizing for computation cost, higher-resolution neurons in the lower layers of the network tend to be pruned more than lower-resolution neurons in the upper layers. When targeting smaller model size, the pruning tradeoff is the opposite.

- Topology Morphing: As MorphNet learns the number of neurons per layer, the algorithm could encounter a special case of sparsifying all the neurons in a layer. When a layer has 0 neurons, this effectively changes the topology of the network by cutting the affected branch from the network. For example, in the case of a ResNet architecture, MorphNet might keep the skip-connection but remove the residual block as shown below (left). For Inception-style architectures, MorphNet might remove entire parallel towers as shown on the right.

Left: MorphNet can remove residual connections in ResNet-style networks. Right: It can also remove parallel towers in Inception-style networks.

- Scalability: MorphNet learns the new structure in a single training run and is a great approach when your training budget is limited. MorphNet can also be applied directly to expensive networks and datasets. For example, in the comparison above, MorphNet was applied directly to ResNet-101, which was originally trained on JFT at a cost of hundreds of GPU-months.

- Portability: MorphNet produces networks that are "portable" in the sense that they are intended to be retrained from scratch and the weights are not tied to the architecture learning procedure. You don't have to worry about copying checkpoints or following special training recipes. Simply train your new network as you normally would!

Morphing Networks

As a demonstration, we applied MorphNet to Inception V2 trained on ImageNet by targeting FLOPs (see below). The baseline approach is to use a width multiplier to trade off accuracy and FLOPs by uniformly scaling down the number of outputs for each convolution (red). The MorphNet approach targets FLOPs directly and produces a better trade-off curve when shrinking the model (blue). In this case, FLOP cost is reduced by 11% to 15% at the same accuracy compared with the baseline.

Conclusion

We’ve applied MorphNet to several production-scale image processing models at Google. Using MorphNet resulted in a significant reduction in model size and FLOPs with little to no loss in quality. We invite you to try MorphNet: the open source TensorFlow implementation can be found here, and you can also read the MorphNet paper for more details.

Acknowledgements

This project is a joint effort of the core team including: Elad Eban, Ariel Gordon, Max Moroz, Yair Movshovitz-Attias, and Andrew Poon. We also extend a special thanks to our collaborators, residents and interns: Shraman Ray Chaudhuri, Bo Chen, Edward Choi, Jesse Dodge, Yonatan Geifman, Hernan Moraldo, Ofir Nachum, Hao Wu, and Tien-Ju Yang for their contributions to this project.

Source: Google AI Blog

Spring into healthy habits with help from Google Home

Spring is (finally) here. Not only am I using the warmer weather and longer days as a reason to clean out my closet, but I'm also taking the opportunity to clean up my routine and stick to my wellness goals. I'd like to read one book per week, cook more healthy recipes for my family and get at least seven hours of sleep per night… with a full-time job and two toddlers. Life is a lot to manage, so I use my Google Home products to keep track of everything I want to accomplish.

Here are a few ways that your Google Assistant on Google Home devices can help with your wellness goals and put some spring in your step:

Get more sleep

Starting today, our Gentle Sleep & Wake feature lets you use any Google Home device to set a routine that gradually turns your Philips Hue smart lights on (wake) or off (sleep) over the course of 30 minutes, to mimic the sunrise or help you prepare for bed. This gradual change of light helps improve the quality of your sleep. Just say, “Hey Google,” then:

- “Turn on Gentle Wake Up” to have your daily morning alarms paired with gradual brightening. Make sure to enable Gentle Wake Up on the same Google Home device you’ll set your alarms on.

- "Wake up my lights." You can also say, “Hey Google, wake up my lights in the bedroom at 6:30 a.m.” This will start to gradually brighten your Philips Hue lights at the time that you set and can be set up to 24 hours in advance.

- "Sleep my lights." You can also say, “Hey Google, sleep the lights in the living room.” This will gradually start to dim your Philips Hue lights and can be programmed up to 24 hours in advance.

The Gentle Sleep & Wake feature is available in the U.S., U.K., Canada, Australia, Singapore and India in English only. For other ways to wind down at the end of the day, you can also tune out noises from street traffic or construction next door by saying, “Hey Google, play white noise.”

Put your mind to bed

With Headspace on Google Home, you can try out a short meditation or a sleep exercise. Just say, "Hey Google, tell Headspace I'm ready for bed.” You can also say, “Hey Google, I want to meditate” to get recommendations like healing sounds, sleep sounds and more.

Cook healthy recipes

Cooking is easy with Google Home’s step-by-step recipes, and the convenience of a screen on Google Home Hub means that I can search for healthy family recipes and save them to “My Cookbook” for later. Use your voice to browse millions of recipes, get guided cooking instructions, set cooking timers and more.

Get some exercise

Use your Google Home to play your workout playlist, set alarms for working out or cast workout videos from YouTube to your TV with Google Home and Chromecast. If you’ve got a Google Home Hub, you can also watch workout videos right on the device. Try “Hey Google, show me barre workout videos” to get started.

Read more books

With Audiobooks on Google Play, you can buy an audiobook and listen to it on Google Home. Say, “Hey Google, read my book” to listen to your favorite audiobook hands-free with the Google Assistant. You can also use the Assistant on your phone to pick up where you left off. You can say, “Hey Google, stop playing in 20 minutes” to set a timer for bedtime reading each night, or multitask by listening to a book while tackling laundry or doing the dishes.

Hopefully your Google Home Hub, Mini or Max can help keep you healthy, happy and mindful for the remainder of 2019 and beyond.

Source: The Official Google Blog

With the Roav Bolt, use your Google Assistant safely when driving

I spend so much time on the road every day, whether I’m hustling to the office or picking up the kids at school. While I’m driving, it can be very tempting to pull out my phone to check text messages, make phone calls or fumble around to find my favorite podcast.

One way to safely stay connected and use your phone hands-free while on the road is by using the Roav Bolt, an affordable aftermarket device by Anker Innovations. The Roav Bolt is built to work with Android devices, and brings the Google Assistant to almost any car. Just say, “Hey Google,” when you’re in the car or tap the button on Roav Bolt to find the nearest coffee shop, play your favorite song or podcast, navigate home, read texts, make calls, set reminders and check your schedule for the day.

Roav Bolt plugs into your car’s charging socket, connecting to your phone’s Bluetooth and then to your car’s stereo. The far-field built-in mics on the Roav Bolt ensure that your Assistant hears you clearly, whether your phone is locked or stashed away or if you’re blasting music.

Here’s a closer look at what you can do with the Assistant using Roav Bolt, starting with “Hey Google”:

Navigation

Ask the Google Assistant to help you get to your destination and find the things you need as you’re driving, such as current traffic along your route, nearby gas stations, or restaurants and businesses.

- “Navigate home/to work”

- “How’s the traffic to Los Angeles?”

- “Share my ETA with Evelyn”

- “Find a gas station on the way”

- “Find dry cleaners nearby”

Entertainment

The Google Assistant can play your favorite songs, radio stations, audiobooks and podcasts through your car’s sound system.

- “Play {song / artist / album}”

- “Play {Jazz / Country / Top 40}”

- “Play {content} on {app name}”

- “Play the latest episode of {podcast}”

- “What’s on the news?”

Communication

Call, send a message and have your notifications read out loud while you’re driving, all with just your voice. And with proactive notifications, stay connected without needing to look at your phone so you don’t miss important updates while you’re in the car.

- “Call” or “text [contact, or business name]”

- “Send message to [name] on [App: WhatsApp / WeChat / Hangouts]”

- “Read my messages”

Get things done

Control compatible smart home devices on the go—check that the lights are turned off when you leave home and adjust the thermostat when you’re on your way home. You can also check what’s on your calendar, add things to a shopping list and set up important reminders like picking up your dry cleaning at a specific time.

- “What’s on my agenda today?”

- “Turn off all the lights at home”

- “Remind me to pick up dry cleaning at 6:00 p.m.”

- “Add milk to my shopping list”

The Roav Bolt will also be available for iOS as a beta experience, initially with limited capabilities. You can find the Roav Bolt online and in stores at major retailers, including Best Buy retail locations, bestbuy.com and walmart.com, for $49.99.

Source: The Official Google Blog

Indie Games Accelerator – Applications open for class of 2019

Posted by Anuj Gulati, Developer Marketing Manager and Sami Kizilbash, Developer Relations Program Manager

Last year we announced the Indie Games Accelerator, a special edition of Launchpad Accelerator, to help top indie game developers from emerging markets achieve their full potential on Google Play. Our team of program mentors had an amazing time coaching some of the best gaming talent from India, Pakistan, and Southeast Asia. We’re very encouraged by the positive feedback we received for the program and are excited to bring it back in 2019.

Applications for the class of 2019 are now open, and we’re happy to announce that we are expanding the program to developers from select countries* in Asia, Middle East, Africa, and Latin America.

Successful participants will be invited to attend two gaming bootcamps, all-expenses-paid at the Google Asia-Pacific office in Singapore, where they will receive personalized mentorship from Google teams and industry experts. Additional benefits include Google hardware, invites to exclusive Google and industry events and more.

Find out more about the program and apply to be a part of it.

* The competition is open to developers from the following countries: Bangladesh, Brunei, Cambodia, India, Indonesia, Laos, Malaysia, Myanmar, Nepal, Pakistan, Philippines, Singapore, Sri Lanka, Thailand, Vietnam, Egypt, Jordan, Kenya, Lebanon, Nigeria, South Africa, Tunisia, Turkey, Argentina, Bolivia, Brazil, Chile, Colombia, Costa Rica, Ecuador, Guatemala, Mexico, Panama, Paraguay, Peru, Uruguay and Venezuela.

Source: Official Google India Blog, Android Developers Blog, Google Developers Blog

Instant-loading AMP pages from your own domain

Today we are rolling out support in Google Search’s AMP web results (also known as “blue links”) to link to signed exchanges, an emerging new feature of the web enabled by the IETF web packaging specification. Signed exchanges enable displaying the publisher’s domain when content is instantly loaded via Google Search. This is available in browsers that support the necessary web platform feature—as of the time of writing, Google Chrome—and availability will expand to include other browsers as they gain support (e.g. the upcoming version of Microsoft Edge).

Background on AMP’s instant loading

One of AMP's biggest user benefits has been the unique ability to instantly load AMP web pages that users click on in Google Search. Near-instant loading works by requesting content ahead of time, balancing the likelihood of a user clicking on a result with device and network constraints, and doing so in a privacy-sensitive way.

We believe that privacy-preserving instant loading of web content is a transformative user experience, but to accomplish it we had to make trade-offs: the URLs displayed in browser address bars begin with google.com/amp, as a consequence of being shown in the Google AMP Viewer, rather than displaying the publisher's domain. We heard both user and publisher feedback about this, and last year we identified a web platform innovation that shows the content’s original URL while still retaining AMP's instant loading.

Introducing signed exchanges

A signed exchange is a file format, defined in the web packaging specification, that allows the browser to trust a document as if it belongs to your origin. This allows you to use first-party cookies and storage to customize content and simplify analytics integration. Your page appears under your URL instead of the google.com/amp URL.

Google Search links to signed exchanges when the publisher, browser, and the Search experience context all support it. As a publisher, you will need to publish the signed exchange version of the content in addition to the non-signed exchange version. Learn more about how Google Search supports signed exchanges.
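Serving both versions typically comes down to HTTP content negotiation on the request's Accept header. The Python sketch below assumes that a client able to consume a signed exchange advertises a media type such as `application/signed-exchange;v=b3` in that header. The function names and the file names `article.sxg` and `article.html` are hypothetical, and production tooling described in the amp.dev guide may check additional request headers before serving a signed exchange.

```python
SXG_MEDIA_TYPE = "application/signed-exchange"


def wants_signed_exchange(accept_header: str) -> bool:
    """Return True if the Accept header lists the signed-exchange media type with q > 0."""
    for part in accept_header.split(","):
        fields = [field.strip() for field in part.split(";")]
        if fields[0].lower() != SXG_MEDIA_TYPE:
            continue
        q = 1.0  # default quality when no q parameter is given
        for param in fields[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0  # treat a malformed q value as "not acceptable"
        if q > 0:
            return True
    return False


def choose_variant(accept_header: str) -> str:
    """Pick which of the two published versions to serve (hypothetical file names)."""
    return "article.sxg" if wants_signed_exchange(accept_header) else "article.html"


print(choose_variant("text/html,application/signed-exchange;v=b3;q=0.9"))  # article.sxg
print(choose_variant("text/html,application/xhtml+xml"))  # article.html
```

Because the response body depends on a request header, a cache-friendly server would also send a Vary response header naming the headers it negotiated on.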

Getting started with signed exchanges

Many publishers have already begun to publish signed exchanges since the developer preview opened up last fall. To implement signed exchanges in your own serving infrastructure, follow the guide “Serve AMP using Signed Exchanges” available at amp.dev.

If you use a CDN provider, ask them if they can provide AMP signed exchanges. Cloudflare has recently announced that it is offering signed exchanges to all of its customers free of charge.

Check out our resources like the webmaster community or get in touch with members of the AMP Project with any questions. You can also provide feedback on the signed exchange specification.

Source: Google Webmaster Central Blog

Save time with new scheduling features in Calendar

What’s changing

We’re updating the meeting creation flow in Calendar to make scheduling easier and save you time. You’ll see several changes when creating a meeting, such as:

- Peek at calendars and automatically add guests: Now, when you add a calendar in the “Search for people” box, you can temporarily view coworkers’ calendars. Creating a new event then automatically adds those people as guests to your meeting and might suggest a title for the meeting.

- More fields in the creation pop-up dialog: The Guests, Rooms, Location, Conferencing, and Description fields are now editable directly in the meeting creation pop-up dialog. Once you add your coworkers’ calendars, they’ll load right in the background, making it even easier and faster to find an available time for everyone.

Who’s impacted

End users

Why you’d use it

People-first scheduling makes it quick and easy to find time with others. You can add rooms, a location, a video conference, and a meeting description without having to click into “More options.”

How to get started

- Admins: No action required.

- End users: No action required. This new creation flow will automatically appear in Calendar on the web.

Helpful links

Help Center: Create an event

Availability

Rollout details

- Rapid Release domains: Full rollout (1–3 days for feature visibility) starting on April 16, 2019

- Scheduled Release domains: Full rollout (1–3 days for feature visibility) starting on April 29, 2019

G Suite editions

Available to all G Suite editions

On/off by default?

This feature will be ON by default.
