In honor of Women’s History Month, it’s our pleasure to feature members across the Women Techmakers ecosystem for March’s Developer Journey profiles. These are community leaders who have explored, navigated and built using Google tools. They are active members of the broader

.

In March, the WTM program will also celebrate International Women’s Day, centered on the theme “Dare To Be.” The theme honors the courage and strength of this community of thought leaders, who are creating a world where women can thrive in tech. You can find more about the

Ezinne Osuamadi

Women Techmakers Mentor and Ambassador

Waldorf, Germany (A proud Nigerian!)

Software Developer / Technical Product Manager

Twitter

LinkedIn

Instagram

What Google tools have you used to build?

Android Studio, Firebase, Google Play Services, and Google Analytics. I'm a mobile developer, and I recently started getting hands-on experience with technical product management and the agile product owner role. For development, I use Android as the framework and Android Studio as the integrated development environment.

Which tool has been your favorite to use? Why?

I would say Flutter. The Flutter toolkit has a layered architecture that allows for full customization. Because Flutter comes with fully customizable widgets, you can build native interfaces in minutes. I also love that some of these widgets’ features, like scrolling, navigation, icons, and fonts, provide full native performance on both iOS and Android. Flutter uses one code base, which makes building mobile applications much easier: I don't have to build one app for Android and a separate one for iOS. Another Flutter feature I like very much is “hot reload.” It allows me to build UIs, add new features, and fix bugs faster. Flutter code also compiles to native ARM machine code using Dart's native compilers.

Please share with us about something you’ve built in the past using Google tools.

The first app I built was for one of my former employers. That was almost three years ago, and it was the first project I worked on when I started learning Flutter. I was super excited about it. It was a timesheet app for employees. Its sole purpose was to let employees schedule tasks and assign a time slot to each task.

What advice would you give someone starting in their developer journey?

From my experience running an NGO called Ladies Crushing IT Africa and organizing a couple of tech events, I would say this: Don’t go into software development if you are not passionate about or interested in it. Going into development because you think developers are paid well or because your friends are earning money from it is the wrong reason to start your development journey. A tech career journey should be about what you want to be in the future. Does it align with your future goals and objectives? What strategies will help you follow that path? Also note that becoming a successful developer is a process. It is not all roses, and there are times when debugging will make it feel difficult. But you should be resilient and diligent in making the most of it when you encounter difficulties. It is always about continuous improvement. Never stop learning, so that you stay up to date with the latest technologies and development tools.

Patty O’Callaghan

GDG Glasgow and Women Techmakers Ambassador

Glasgow, Scotland

Tech Lead @ Charles River Laboratories

Twitter

LinkedIn

What Google tools have you used to build?

I use Chrome DevTools daily, and I find them very helpful. I also enjoy working on projects using TensorFlow.js and Firebase.

Which tool has been your favorite to use? Why?

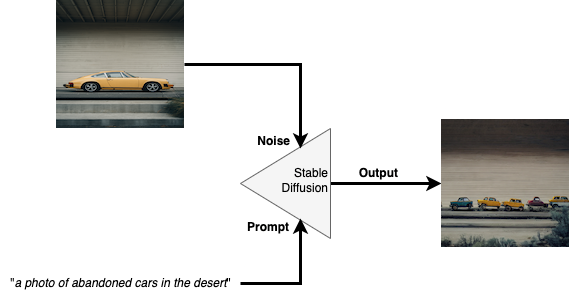

I would have to say TensorFlow.js and its pre-made models are my favorite. I enjoy the fact that I can build cool machine learning projects directly in the browser. Even developers unfamiliar with this technology can quickly build, train, and deploy machine learning models using just a few lines of code. Some kids at my code club have used TensorFlow.js for amazing projects: class attendance applications that use facial recognition, a site that checks for correct form while practicing karate at home, and a tool for studying with the help of an AI agent.

Please share with us about something you’ve built in the past using Google tools.

I've worked on several side projects using TensorFlow.js for my workshops. One of my favorites is an emotion recognition app built with Teachable Machine. Additionally, for work, I used TensorFlow.js to develop a machine learning solution that suggests taxonomies for articles based on their content. It analyzes over 30 taxonomies to find the best match for the given article.

What advice would you give someone starting in their developer journey?

First of all, focus on learning the fundamentals of programming. A strong foundation will benefit you in the long run. Practice coding regularly and find a mentor or a community to help you along the way. For example, contributing to an open-source project is an excellent way to learn. And remember: Making mistakes is a natural part of the learning process, so don't get discouraged if you encounter difficulties. Keep pushing forward!

- Angular 15
- Material Design
- Google Cloud / Firebase
  - Authentication
  - Hosting
  - Firestore
  - Functions
  - Extensions
  - Storage
  - Machine Learning
- PWA Standards
- Chrome / DevTools
- Android

Which tool has been your favorite to use? Why?

Firestore has been our favorite because of its scalability, its real-time data capabilities through WebSockets and triggers, its data flexibility, and its query capabilities. It is how we’ve built our modern event-driven architecture, which gives us a completely real-time application providing immediate data and collaboration across our entire white-label application suite.

Please share with us about something you’ve built in the past using Google tools.

We built the WeTransact Innovation Platform: From Idea to ROI, which offers a learning-based distributed social platform for learning, collaborating and presenting yourself and your innovations.

For customers, we’ve created a white-label SaaS platform, licensed by universities, incubators, developer groups and any program looking to provide education, collaboration, and AI-assisted, auto-generated presentation and communication tools. Our platform combines features similar to LinkedIn, Coursera, AngelList and Zoom in one simple, modern, unified platform for communities, making collaboration and lifelong learning globally accessible to everyone. The WeTransact platform accelerates and scales your program’s impact to solve the world's biggest problems better together.

Here are just a few other ways we’ve used Google tools:

What advice would you give someone starting in their developer journey?

There are a few pieces of advice we’d offer! Among them is to start early. Find a friend who is already developing or shares your passion. Find an open source project that inspires you or represents something you're passionate about. Dig in, change stuff, break stuff and then learn why. Search is your best friend – use it to always question and reset your assumptions, learn new approaches, and practice not getting stuck in a “boilerplate” or “standard” solution to each problem. It’s not about memorizing – technology changes every day and you should too. Finally, know that it’s about the process and the journey, not the destination.