Posted by Kübra Zengin, North America GDSC Regional Lead

Serving as a Google Developer Student Clubs (GDSC) Lead at the university level builds technical skills and leadership skills that serve alumni well in their post-graduate careers. Four GDSC Alumni Leads from universities in Canada and the U.S. have gone on to meaningful careers in the tech industry, and share their experiences.

Daniel Shirvani: The Next Frontier in Patient Data

Daniel Shirvani graduated from the University of British Columbia (UBC) in Vancouver, Canada, in 2023 with a Bachelor of Science in Pharmacology, and will soon return to UBC for medical school. He served as a GDSC Lead and was a founding team member of UBC's chapter. In 2019 he also launched his own software company, Leftindust Systems, to experiment with building small-scale electronic medical record (EMR) software for the open source community. That project is now closed.

“I built a startup to rethink the use of medical software,” he says.

As a summer student volunteer at a Vancouver-area heart clinic, Shirvani was tasked with indexing hundreds of medical records to identify patients with specific HbA1c (blood glucose) levels and kidney-disease-related factors, to determine who would be eligible for a new cardiac drug. However, the clinic's medical records software couldn't flag patients in the system, so the only way to flag the hundreds of patients on Shirvani's final list would have been to do it manually, which was impossible given the size of the list and the time remaining in his work term. He believed the software should have been able not only to flag these patients but also to automatically filter which patients met the criteria.

“Two to three hundred patients will not receive this life-saving drug because of this software,” Shirvani says. “My father is a patient who would have been eligible for this type of drug. His heart attack put things into perspective. There are families just like mine who will have the same experience that my father did, only because the software couldn't keep up.”

Shirvani decided to combine his medical knowledge and programming skills to develop electronic medical record (EMR) software that stores patient data as structured numerical values rather than within free-text paragraphs. This allows doctors to analyze patient data instantly, at both the individual and group level. Doctors across North America took notice, including physicians from UBC, Stanford, UCLA, and elsewhere.

North America Connect is a two-day, in-person conference that brings together organizers and members of Google for Developers community programs from across North America, including Google Developer Groups, Women Techmakers, Google Developer Experts (GDEs), and Google Developer Student Clubs. “During the North America Connect conference, I met with many GDEs and Googlers, such as Kevin A. McGrail, who is now a personal mentor,” says Shirvani, who continues to look for other ways to make change in the healthcare community.

“When systems disappoint, we see not an end, but a new beginning. It's in that space that we shape the future,” he says.

Alex Cussell: Becoming a tech entrepreneur

Alex Cussell graduated from the University of Central Florida in 2020, where she was a GDSC Lead in her senior year. She says the experience inspired her to pursue her passion for becoming a tech entrepreneur.

“Leading a group of students with such differing backgrounds, addressing the world's most pervasive problems, and loving every second of it taught me that I was meant to be a tech entrepreneur,” she says. “We were on a mission to save the lives of those involved in traffic accidents when the world as we knew it came to a screeching halt due to the COVID-19 pandemic.”

After her virtual graduation, Cussell moved to Silicon Valley and earned a Master's in Technology Ventures from Carnegie Mellon University. She studied product management, venture capital, and startup law, with a vision of building a meaningful company. After getting engaged and receiving multiple gift cards as bridal shower gifts, Cussell found it hard to keep track of each card's balance and to keep the cards organized.

She created the Jisell app, which features a universal gift card e-wallet that lets users digitize their gift cards. Users have uploaded more than $5,000 in gift cards to the app to date, and Jisell has partnered with the largest gift card distributor in the U.S. Jisell product manager Emily Robertson was Cussell's roommate at the GDSC summit.

“Without Google Developer Student Clubs, I might never have realized how much I love problem-solving or technical leadership, or known so much about the great tools offered by Google,” Cussell says. “Thank you to everyone who contributes to the GDSC experience; you have truly changed the lives of so many.”

Angela Busheska: Founding a nonprofit to fight climate change

Angela Busheska is double majoring in electrical engineering and computer science, with a minor in mathematics, at Lafayette College in Easton, Pennsylvania, and expects to graduate in 2025. A Google intern this summer and last summer, Busheska participated in Google's Computer Science Research Mentorship Program from September 2021 to January 2022. The program supports students from historically marginalized groups in pursuing computing research through career mentorship, peer-to-peer networking, and building awareness of pathways within the field. Busheska investigated computing processes across four different projects in the field of AI for Social Good.

During the pandemic, in 2020, Busheska founded EnRoute, a nonprofit that harnesses the power of everyday actions to fight climate change and to break down the stigma that living sustainably is an expensive and challenging commitment. She also built a mobile app using Android and Flutter that helps users make simple daily transportation and shopping choices to reduce their carbon footprints. Since 2020, the app has guided thousands of users to reduce CO2 emissions by more than 100,000 kg.

EnRoute honors Busheska’s aunt, who passed away when Busheska was 17. Busheska grew up in Skopje, in North Macedonia, one of the world’s most polluted cities.

“When I was 17 years old, Skopje’s dense air pollution led my aunt, who suffered from cardiovascular difficulties, to complete blood vessel damage, resulting in her swift passing,” says Busheska. “Inspired by my personal loss, I started researching the causes of the pollution.”

EnRoute has been featured on the Forbes 30 Under 30 Social Impact List and has been publicly recognized by Shawn Mendes, Prince William, One Young World, and the United Nations.

Sapphira Ching: Advancing Environmental, Social, and Governance (ESG) standards

Sapphira Ching, a senior at the University of Pennsylvania's Wharton School, joined GDSC in her first year, leading social media for the club that spring, and headed marketing and strategy in her sophomore year before serving as Penn's GDSC Lead in her junior year. As GDSC Lead, Ching expanded GDSC's campus membership and partnerships to reach an audience of more than 2,000 students. In line with her passion for Environmental, Social, and Governance (ESG) standards and Diversity, Equity, and Inclusion (DEI), Ching built a leadership team from different areas of study, including engineering, business, law, medicine, and music.

Ching’s passions for ESG, technology, and business drive her choices, and she says, “I am eager to incorporate ESG into tech to bring people together using business acumen.”

The Wharton School appointed her an inaugural undergraduate fellow at the Turner ESG Initiative, and she founded the Penn Innovation Network, an ESG innovation club. Her summer internships have focused on ESG: in 2021 she interned at MSCI (formerly known as Morgan Stanley Capital International), and in 2022 at Soros Fund Management, an ESG juggernaut in finance. She is also an NCAA Division I student-athlete and Olympic hopeful in sabre fencing.

“I attribute my growth in ESG, tech, and business to how GDSC has helped me since my first year of college,” Ching says.

Are you a GDSC alum or a current GDSC Lead? You can join the Google Developer Student Clubs (GDSC) LinkedIn Group here. The group is a great place to share ideas and connect with current and former GDSC Leads.

Interested in joining a GDSC near you? Google Developer Student Clubs (GDSC) are university-based community groups for students interested in Google developer technologies. Students from all undergraduate and graduate programs with an interest in growing as developers are welcome. Learn more here.

Interested in becoming a GDSC Lead? GDSC Leads are responsible for starting and growing a Google Developer Student Club (GDSC) chapter at their university. GDSC Leads work with students to organize events, workshops, and projects. Learn more here.

Posted by Leticia Lago, Developer Marketing

Posted by Leticia Lago, Developer Marketing

Posted by Chanel Greco, Developer Advocate

Posted by Chanel Greco, Developer Advocate

Posted by Gina Biernacki, Product Manager

Posted by Gina Biernacki, Product Manager

Posted by Kübra Zengin, North America GDSC Regional Lead

Posted by Kübra Zengin, North America GDSC Regional Lead

Posted by

Posted by

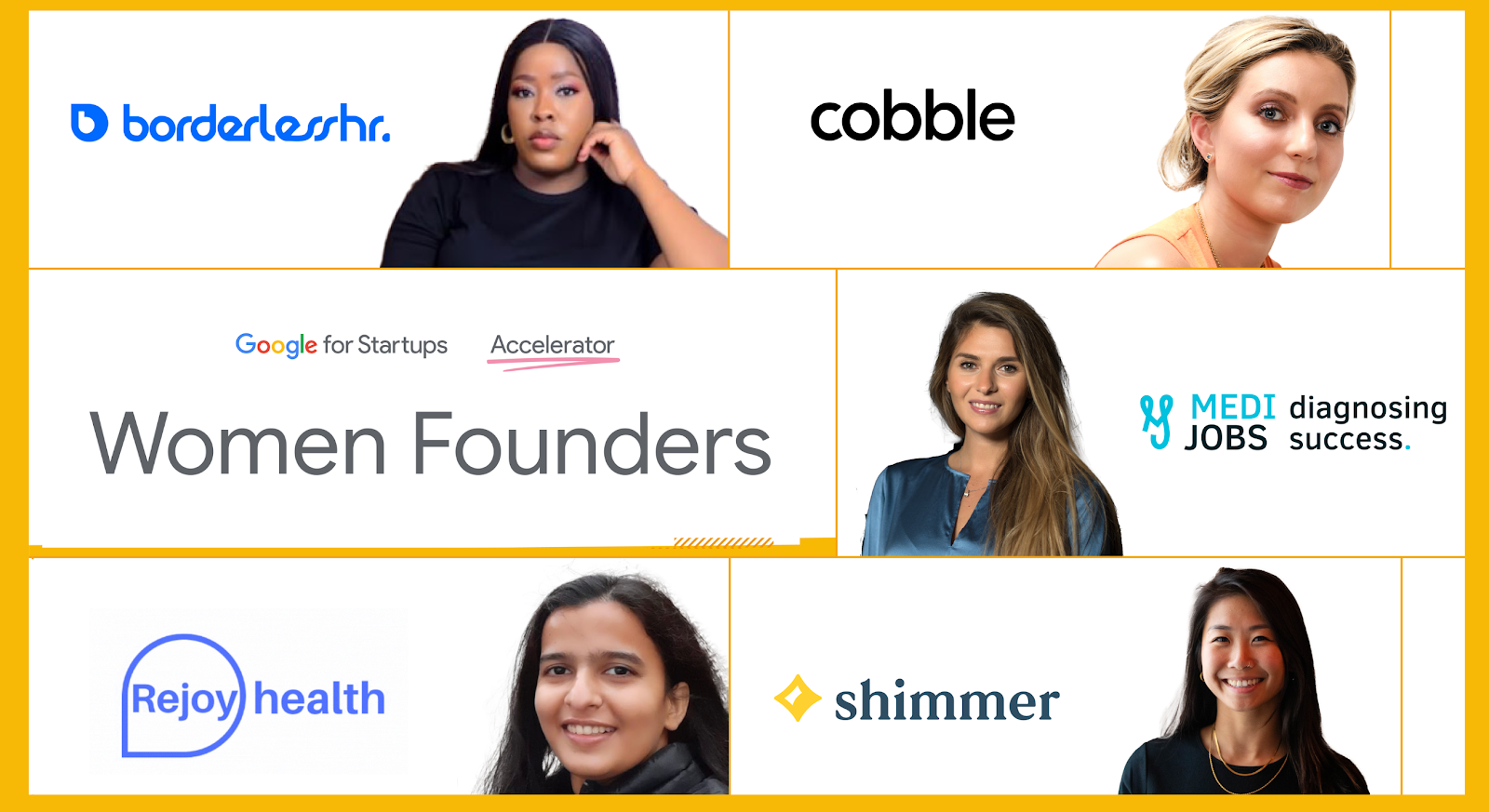

Posted by Iran Karimian, Startup Developer Ecosystem Lead, Canada

Posted by Iran Karimian, Startup Developer Ecosystem Lead, Canada

Posted by

Posted by

Posted by Harsh Dattani - Program Manager, Developer Ecosystem

Posted by Harsh Dattani - Program Manager, Developer Ecosystem

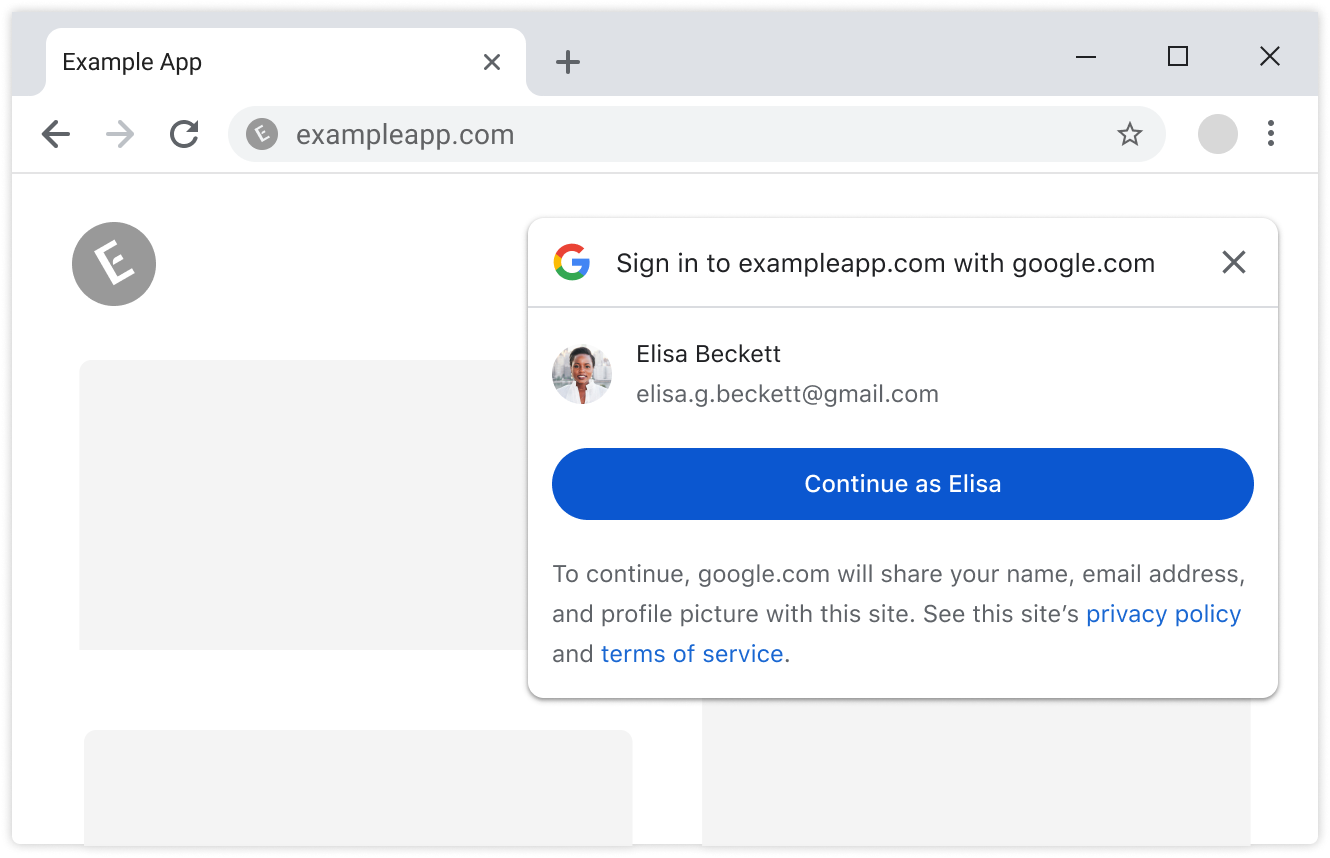

Posted by Miguel Guevara, Product Manager, Privacy and Data Protection Office

Posted by Miguel Guevara, Product Manager, Privacy and Data Protection Office

Posted by Bre Arder, UX Research Lead, Kirupa Chinnathambi, Product Lead, Ashwin Raghav Mohan Ganesh, Engineering Lead, Erin Kidwell, Director of Engineering, and Roman Nurik, Design Lead

Posted by Bre Arder, UX Research Lead, Kirupa Chinnathambi, Product Lead, Ashwin Raghav Mohan Ganesh, Engineering Lead, Erin Kidwell, Director of Engineering, and Roman Nurik, Design Lead