Today at Google I/O, we announced the many ways we’re helping you build excellent, adaptive experiences, and helping you stay more productive through updates to our tooling that put AI at your fingertips and throughout your development lifecycle. Here’s a recap of 16 of our favorite announcements for Android developers; you can also see what was announced last week in The Android Show: I/O Edition. And stay tuned over the next two days as we dive into all of the topics in more detail!

Building AI into your Apps

1: Building intelligent apps with Generative AI

Generative AI makes app experiences more intelligent, personalized, and agentic. This year, we announced new ML Kit GenAI APIs that use Gemini Nano for common on-device tasks like summarization, proofreading, rewriting, and image description. For more complex use cases, such as image generation and processing extensive data across modalities, developers can harness more powerful models such as Gemini Pro, Gemini Flash, and Imagen via Firebase AI Logic; this includes bringing AI to life in Android XR. We also released a new AI sample app, Androidify, that showcases how these APIs can transform your selfies into unique Android robots! To start building intelligent experiences with these new capabilities, explore the developer documentation and sample apps, and watch the overview session to choose the right solution for your app.

New experiences across devices

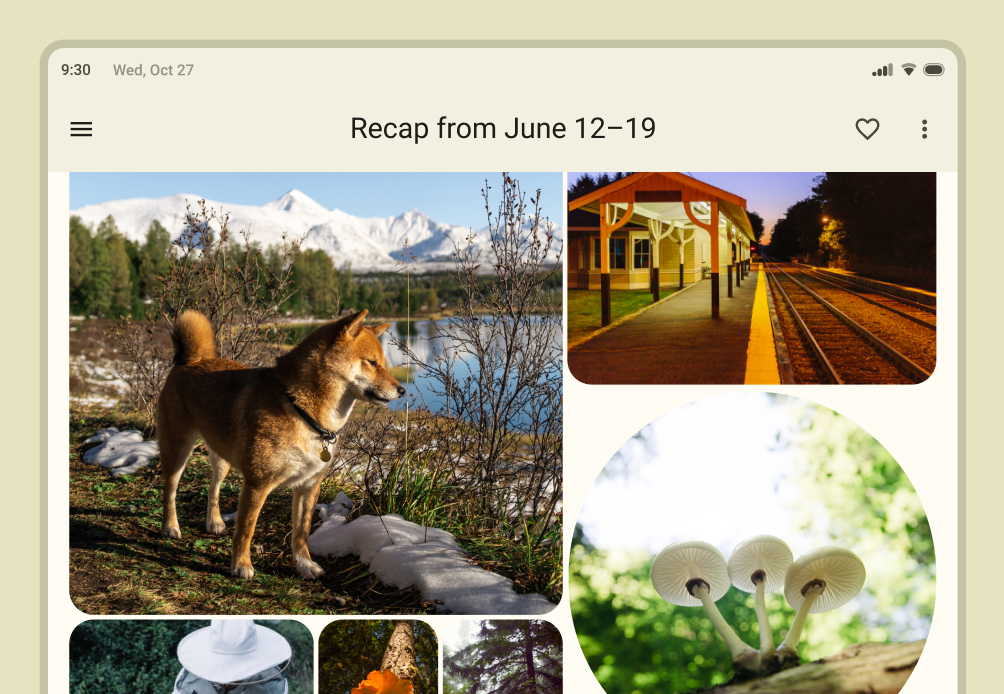

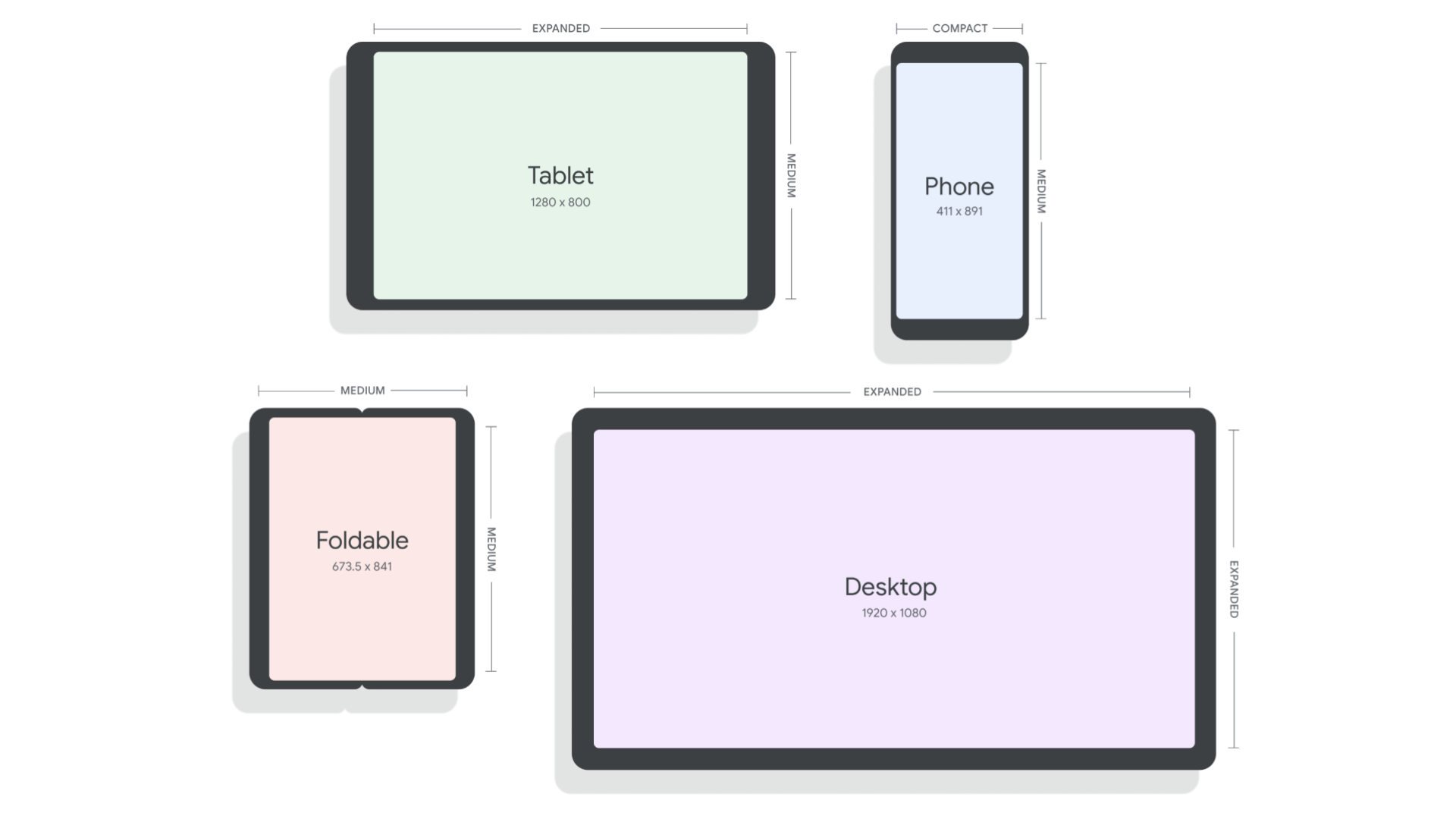

2: One app, every screen: think adaptive and unlock 500 million screens

Mobile Android apps form the foundation across phones, foldables, tablets and ChromeOS, and this year we’re helping you bring them to cars and XR and expand their reach with desktop windowing and connected displays. This expansion means tapping into an ecosystem of 500 million devices, a significant opportunity to engage more users when you think adaptive and build a single mobile app that works across form factors. Resources, including the Compose Layouts library and Jetpack Navigation updates, make building these dynamic experiences easier than before. You can see how Peacock, NBCUniversal’s streaming service (available in the US), is building adaptively to meet users where they are.

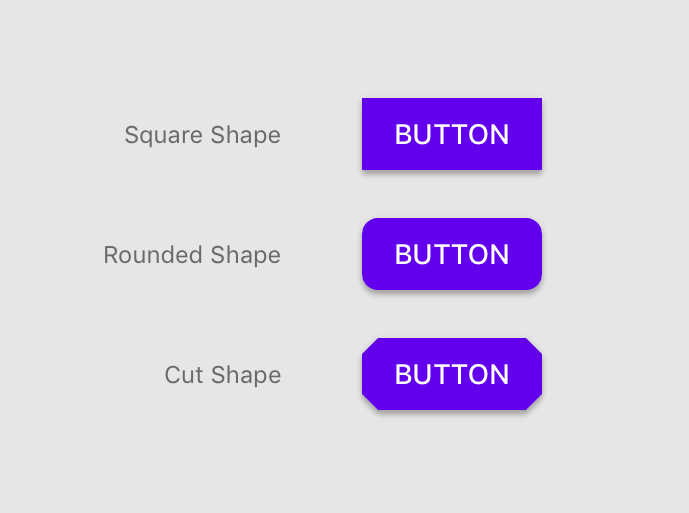

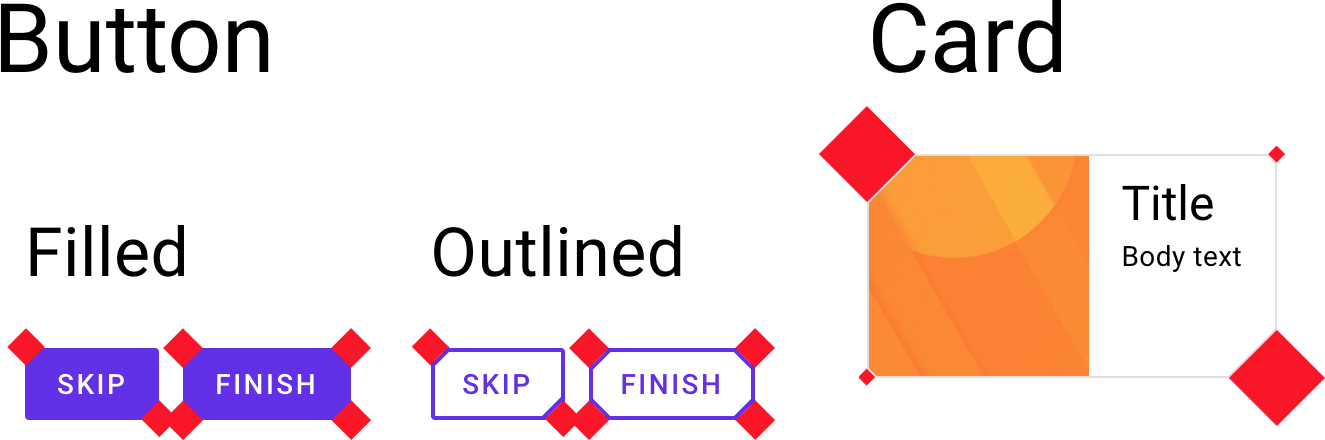

3: Material 3 Expressive: design for intuition and emotion

The new Material 3 Expressive update provides tools to enhance your product's appeal by harnessing emotional UX, making it more engaging, intuitive, and desirable for users. Check out the I/O talk to learn more about expressive design and how it inspires emotion, clearly guides users toward their goals, and offers a flexible and personalized experience.

4: Smarter widgets, engaging live updates

Measure the return on investment of your widgets (available soon) and easily create personalized widget previews with Glance 1.2. Promoted Live Updates surface important ongoing notifications to users and come with a new standardized Progress Style template.

5: Enhanced Camera & Media: low light boost and battery savings

This year's I/O introduces several camera and media enhancements. These include a software low light boost for improved photography in dim lighting and native PCM offload, allowing the DSP to handle more audio playback processing, thus conserving user battery. Explore our detailed sessions on built-in effects within CameraX and Media3 for further information.

6: Build next-gen app experiences for Cars

We're launching expanded opportunities for developers to build in-car experiences, including new Gemini integrations, support for more app categories like Games and Video, and enhanced capabilities for media and communication apps via the Car App Library and new APIs. Alongside updated car app quality tiers and simplified distribution, we'll soon be providing improved testing tools like Android Automotive OS on Pixel Tablet and Firebase Test Lab access to help you bring your innovative apps to cars. Learn more from our technical session and blog post on new in-car app experiences.

7: Build for Android XR's expanding ecosystem with Developer Preview 2 of the SDK

We announced Android XR in December, and today at Google I/O we shared a bunch of updates coming to the platform including Developer Preview 2 of the Android XR SDK plus an expanding ecosystem of devices: in addition to the first Android XR headset, Samsung’s Project Moohan, you’ll also see more devices including a new portable Android XR device from our partners at XREAL. There’s lots more to cover for Android XR: Watch the Compose and AI on Android XR session, and the Building differentiated apps for Android XR with 3D content session, and learn more about building for Android XR.

8: Express yourself on Wear OS: meet Material Expressive on Wear OS 6

This year we are launching Wear OS 6, the most powerful and expressive version of Wear OS. Wear OS 6 features Material 3 Expressive, a new UI design with personalized visuals and motion for user creativity, coming to Wear, Android, and Google apps later this year. Developers gain access to Material 3 Expressive on Wear OS through new Jetpack libraries: Wear Compose Material 3, which provides components for apps, and Wear ProtoLayout Material 3, which provides components and layouts for tiles. Get started with Material 3 libraries and other updates on Wear.

9: Engage users on Google TV with excellent TV apps

You can leverage more resources within Compose's core and Material libraries with the stable release of Compose for TV, empowering you to build excellent adaptive UIs across your apps. We're also thrilled to share exciting platform updates and developer tools designed to boost app engagement, including bringing Gemini capabilities to TV in the fall, opening enrollment for our Video Discovery API, and more.

Developer productivity

10: Build beautiful apps faster with Jetpack Compose

Compose is our big bet for UI development. The latest stable BOM release provides the features, performance, stability, and libraries that you need to build beautiful adaptive apps faster, so you can focus on what makes your app valuable to users.

11: Kotlin Multiplatform: new Shared Template lets you build across platforms, easily

Kotlin Multiplatform (KMP) enables teams to reach new audiences across Android and iOS with less development time. We’ve released a new Android Studio KMP shared module template, updated Jetpack libraries and new codelabs (Getting started with Kotlin Multiplatform and Migrating your Room database to KMP) to help developers who are looking to get started with KMP. Shared module templates make it easier for developers to craft, maintain, and own the business logic. Read more on what's new in Android's Kotlin Multiplatform.

12: Gemini in Android Studio: AI Agents to help you work

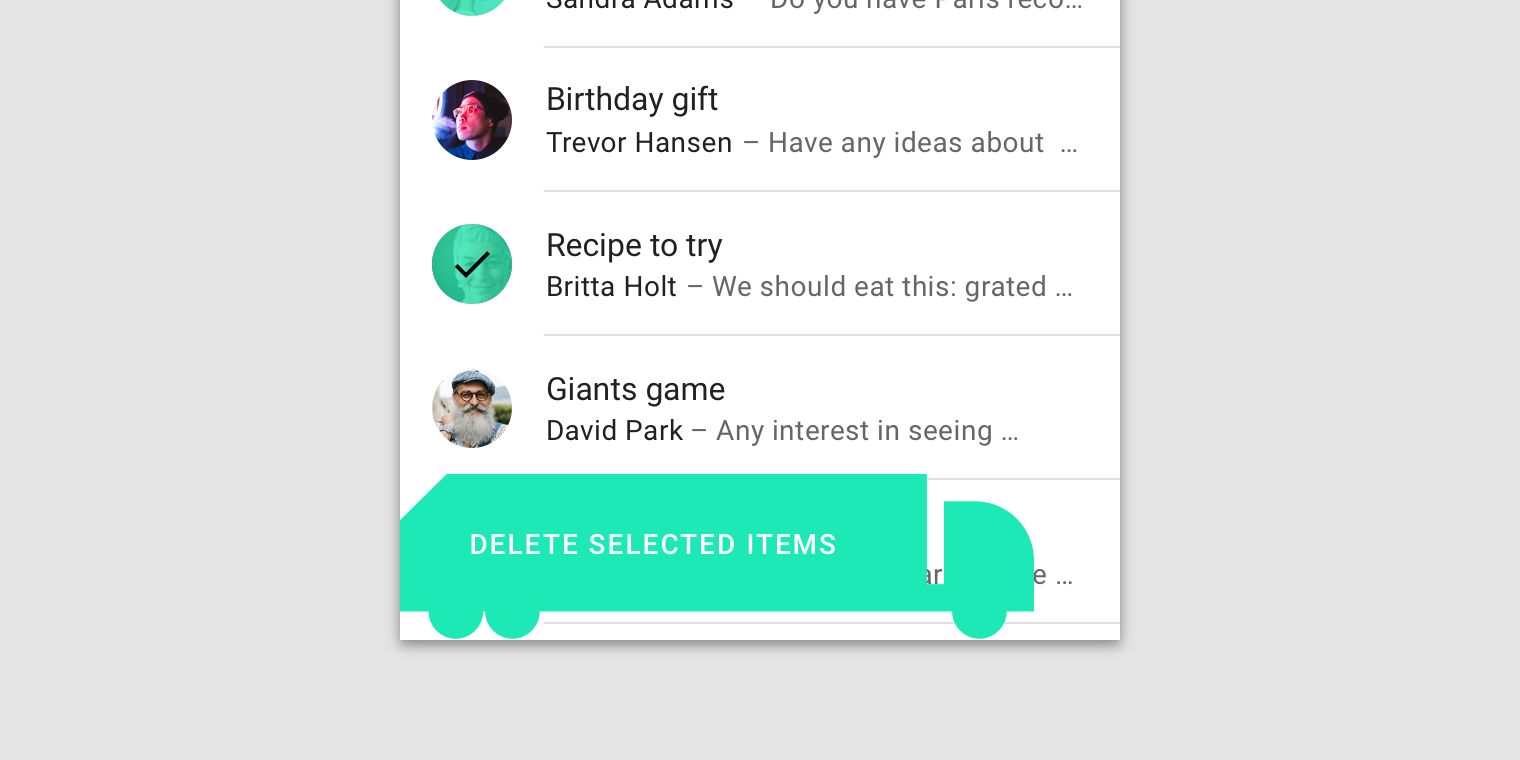

Gemini in Android Studio is the AI-powered coding companion that makes Android developers more productive at every stage of the dev lifecycle. In March, we introduced Image to Code to bridge the gap between UX teams and software engineers by intelligently converting design mockups into working Compose UI code. And today, we previewed new agentic AI experiences, Journeys for Android Studio and Version Upgrade Agent. These innovations make it easier to build and test code. You can read more about these updates in What’s new in Android development tools.

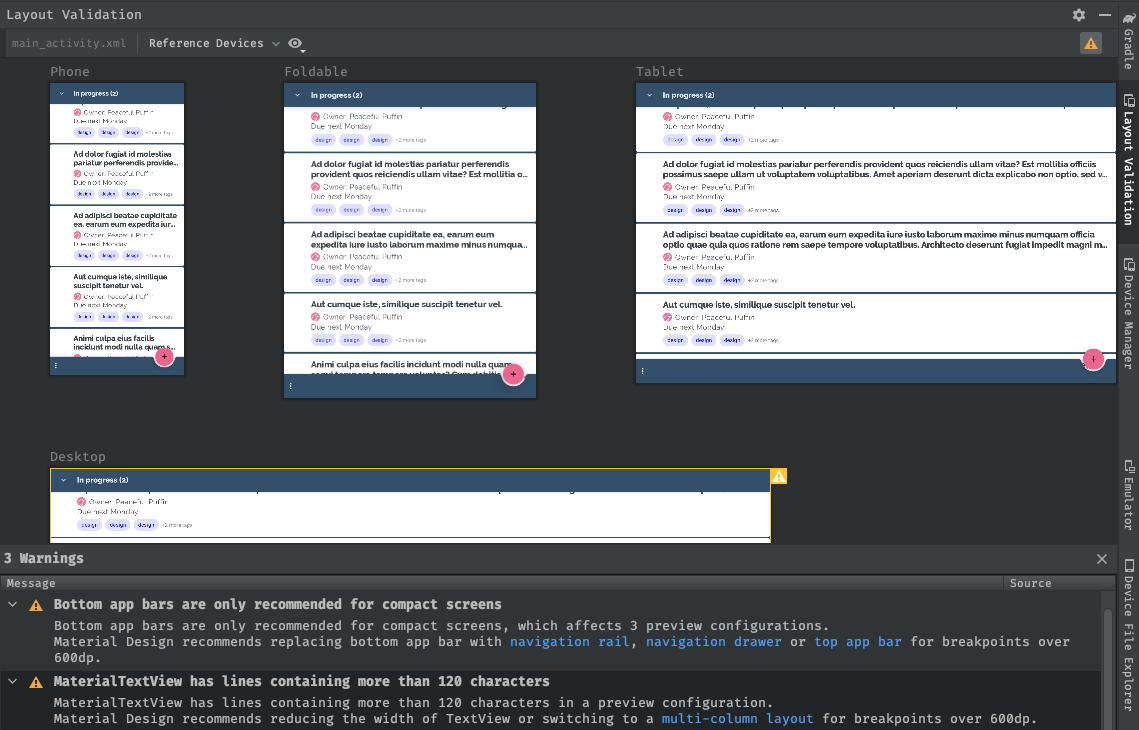

13: Android Studio: smarter with Gemini

In this latest release, we're empowering devs with AI-driven tools like Gemini in Android Studio, streamlining UI creation, making testing easier, and ensuring apps are future-proofed in our ever-evolving Android ecosystem. These innovations accelerate development cycles, improve app quality, and help you stay ahead in a dynamic mobile landscape. To take advantage, upgrade to the latest Studio release. You can read more about these innovations in What’s new in Android development tools.

And the latest on driving business growth

14: What’s new in Google Play

Get ready for exciting updates from Play designed to boost your discovery, engagement and revenue! Learn how we’re continuing to become a content-rich destination with enhanced personalization and fresh ways to showcase your apps and content. Plus, explore powerful new subscription features designed to streamline checkout and reduce churn. Read I/O 2025: What's new in Google Play to learn more.

15: Start migrating to Play Games Services v2 today

Play Games Services (PGS) connects over 2 billion gamer profiles on Play, powering cross-device gameplay, personalized gaming content and rewards for your players throughout the gaming journey. We are moving PGS v1 features to v2 with more advanced features and an easier integration path. Learn more about the migration timeline and new features.

16: And of course, Android 16

We unpacked some of the latest features coming to users in Android 16, which we’ve been previewing with you for the last few months. If you haven’t already, make sure to test your apps with the latest Beta of Android 16. Android 16 includes Live Updates, professional media and camera features, desktop windowing and connected displays, major accessibility enhancements and much more.

Check out all of the Android and Play content at Google I/O

This was just a preview of some of the cool updates for Android developers at Google I/O, but stay tuned to Google I/O over the next two days as we dive into a range of Android developer topics in more detail. You can check out the What’s New in Android and the full Android track of sessions, and whether you’re joining in person or around the world, we can’t wait to engage with you!

Explore this announcement and all Google I/O 2025 updates on io.google starting May 22.

Posted by Matthew McCullough – Vice President, Product Management, Android Developer
