Give developers the best tools to be efficient

Developers choose to build applications with managed database services on Google Cloud to benefit from velocity, scalability, security, and performance. To enable you to be most efficient and deliver your best possible work, we deliver tools and frameworks that work with your preferred development environments, no matter if you develop in the cloud or on premises. To make local testing, building and continuous integration easier for our cloud-native databases, we released emulators for Cloud Spanner, Firestore, and Cloud Bigtable so that you can test your code wherever you develop it - without the need to create or re-create cloud infrastructure with every test run.Another area where we are helping developers is with instrumentation of Cloud SQL for easier debugging and performance tuning. With Cloud SQL Insights it is easier than ever to pinpoint underperforming SQL statements. That said, without additional instrumentation, it can be cumbersome to identify the source code or microservice that issued that SQL - let alone tying a SQL statement to a client session and its context. So we released Sqlcommenter as an open source library that will automatically add this instrumentation as SQL comments in queries that are generated by popular ORMs like Hibernate, Django, Sqlalchemy, and others (repo blog). We didn’t stop there, but merged Sqlcommenter with OpenTelemetry (blog) to add SQL insights from instrumented queries back to OpenTelemetry traces.

Lastly, we want to broaden access to our differentiated offerings, like Spanner. The recently announced Spanner PostgreSQL interface allows organizations to access Spanner’s industry-leading consistency and availability at scale using tools and skills from the popular PostgreSQL ecosystem. This new way of working with Spanner provides familiarity for developers and portability for administrators. (blog) Learn more in the documentation or sign up for the preview today.

Provide connectivity that is simple and secure

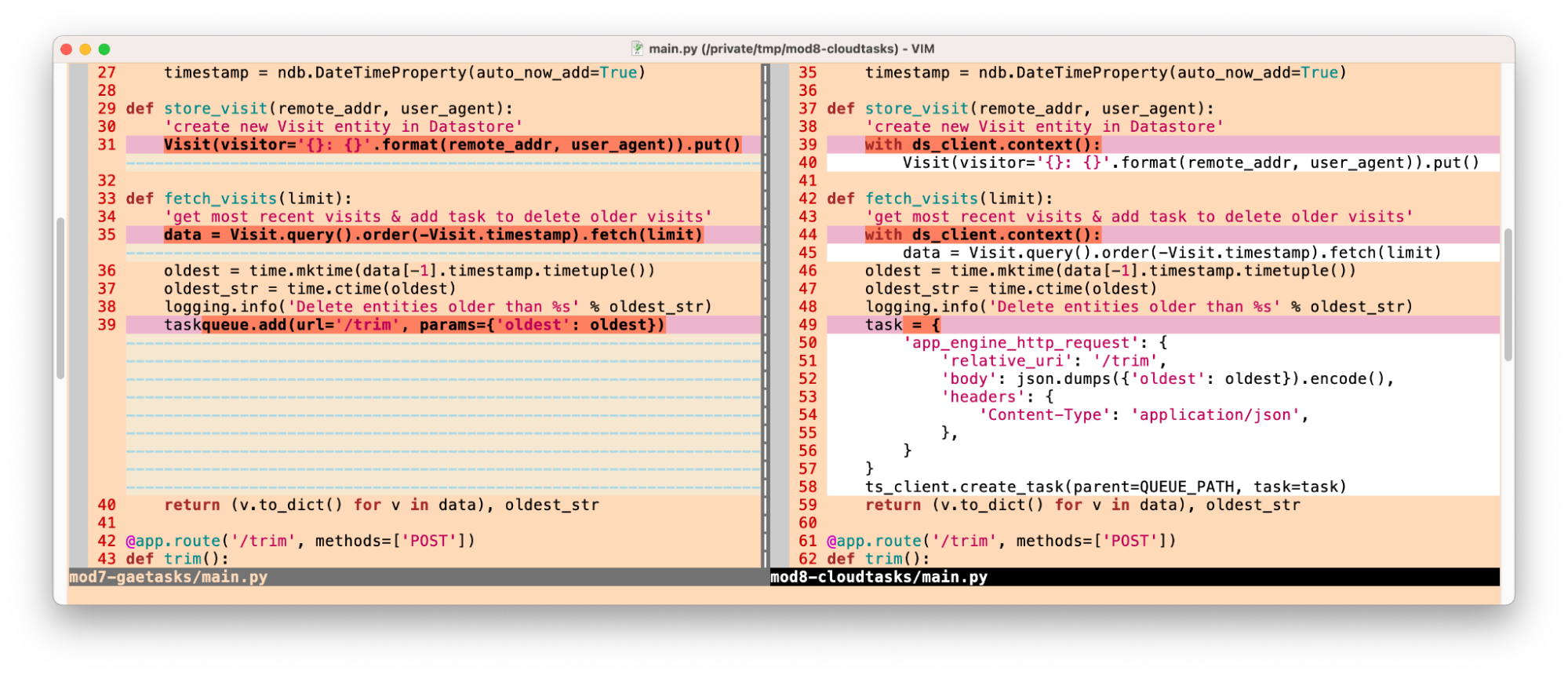

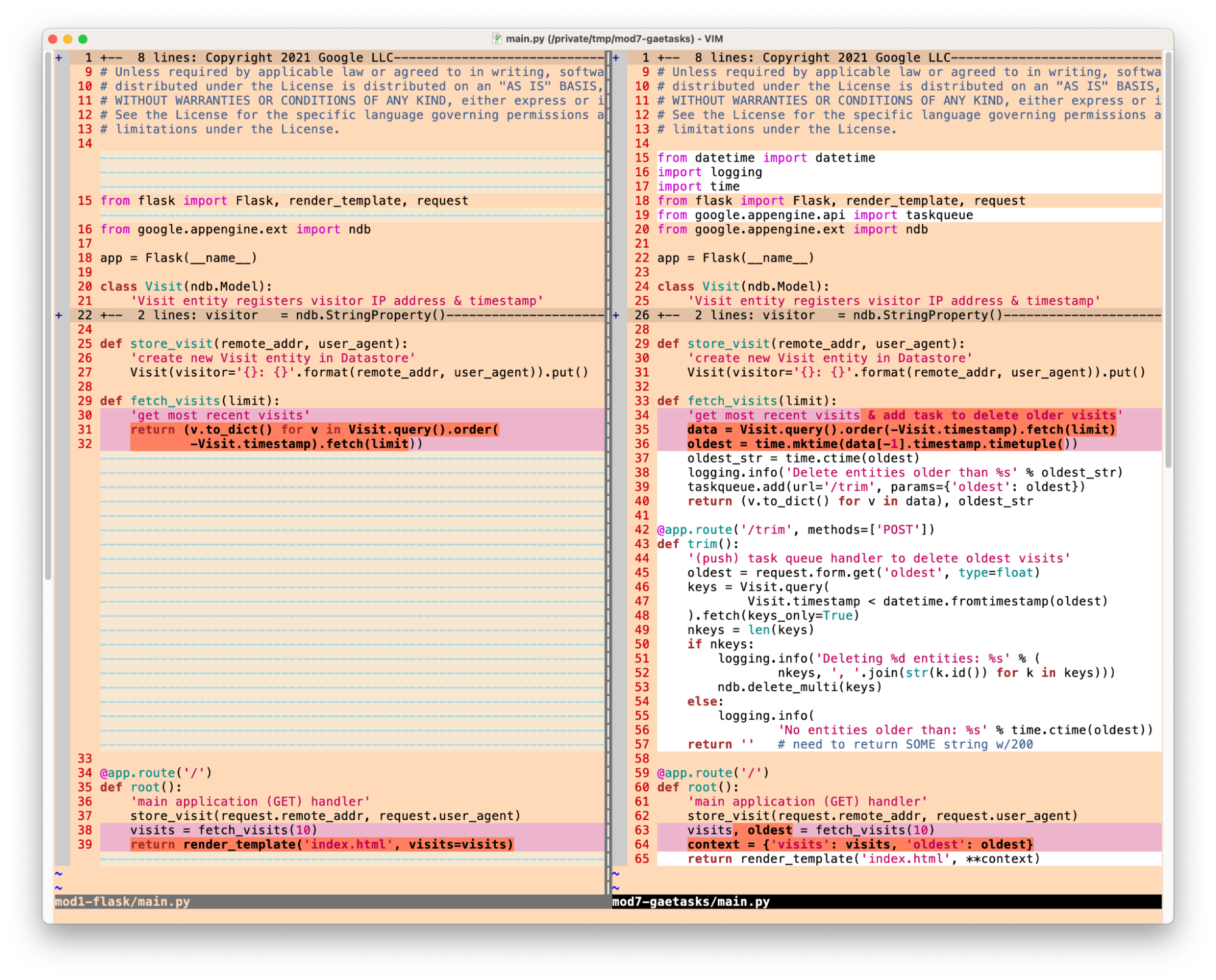

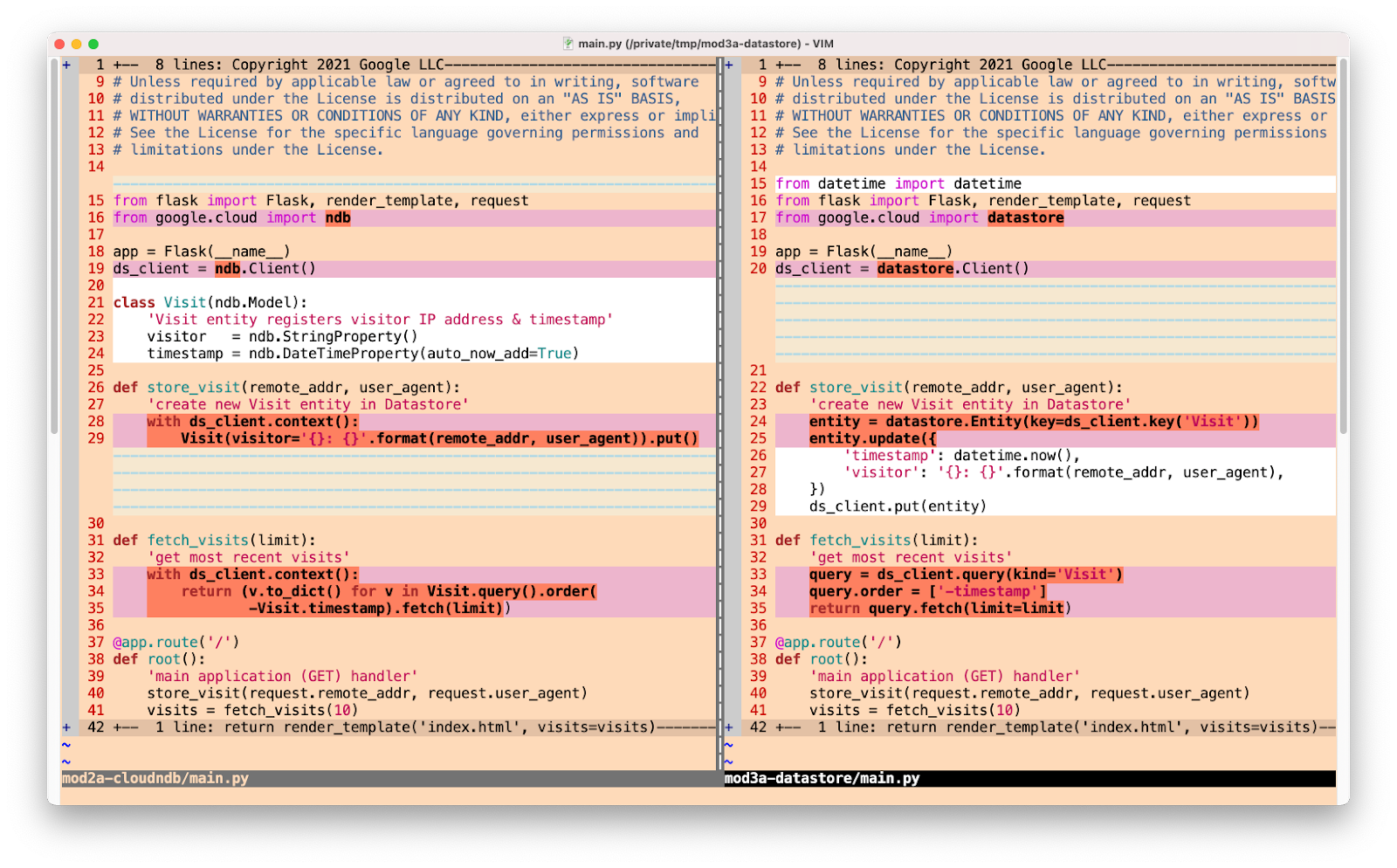

Connecting to APIs and databases from an application running in the cloud should be simple and secure. That’s why we recommend using IAM and Application Default Credentials when authenticating to other services. The Cloud SQL Proxy (repo) has been doing this and also setting up firewalls for you for a while. It works by running a local client either inside your VM or a GKE cluster. This year, we added libraries for Java (repo) and Python (repo) that can provide similar functionality without the overhead of running an extra client such as the proxy.Cloud Spanner also offers an open source adapter for its new PostgreSQL interface (repo). This local proxy allows tools, starting with psql, to connect to a Spanner database using the PostgreSQL wire protocol.

Manage cloud infrastructure with the tools of your choice

When it comes to provisioning, monitoring, and managing your cloud database services, flexibility and choice are important. We provide you with our cloud console, gcloud cli, and APIs as well as our own Deployment Manager. That said, you may prefer different ways to manage cloud infrastructure - whether through interactive tools or scripts or embedded into CI/CD pipelines that support GitOps or other controls, checks, and balances. Terraform is one of those open tools that is very popular - and we ensure that our cloud databases can be managed from it as documented in this blog about creating Spanner instances with Terraform.If you manage the majority of your resources with Kubernetes either directly or through package managers like Helm, then our Kubernetes Config Connector (KCC) might be for you. In a nutshell, KCC exposes Google Cloud services such as Cloud SQL, Spanner, and others as Custom Resources in Kubernetes. This allows you to create and reconcile cloud resources outside of Kubernetes just like K8s native objects.

Once you are managing cloud infrastructure with CI/CD, the next step is to extend that same mechanism to manage objects within your databases such as tables, indexes, and views. To that extent we have released a Liquibase extension for Cloud Spanner.

Help you to move data with confidence

Cloud journeys often involve moving data either in a lift and shift process or sometimes replatforming to a different database. Whatever your journey, we want to simplify the process and give you the confidence that your migration is successful.For enterprise users with Oracle databases, we have several open source projects. First, we have the Optimus Prime database assessment tool (repo) that queries your database and collects information about schemas and historic performance to be analyzed for migration complexity and consolidation potential. Our own professional services teams have been using this toolset to plan migrations to Bare Metal Solution for Oracle.

Some Oracle users are looking for opportunities to transform their workloads to fit with their bigger strategy of modernizing applications with Kubernetes. For this group we developed and open sourced the El Carro Kubernetes operator for Oracle. This not only automates database lifecycle tasks for systems running on Kubernetes, but also exposes declarative APIs for these operations.

If your application supports replatforming from Oracle to PostgreSQL, then we have a toolset for schema conversion along with dataflow pipelines that will read the output of a change data capture job and load it into a PostgreSQL database. What a great use-case for Datastream - our new serverless change data capture service.

Another case of heterogeneous database migration is to move MySQL or PostgreSQL databases to Cloud Spanner. HarbourBridge helps with the evaluation and data migration, and our latest contribution was adding support for DynamoDB as a source database. Part of every heterogeneous migration should be to validate that the source and target data are matching - we have released the Data Validation Toolkit for that use-case. DVT can connect to a number of source and target databases and compare the data on each side - giving you the confidence that your migration did not miss or change any records.