Posted by Wesley Chun

Background

In our ongoing Serverless Migration Station series aimed at helping developers modernize their serverless applications, one of the key objectives for Google App Engine developers is to upgrade to the latest language runtimes, such as from Python 2 to 3 or Java 8 to 17. Another objective is to help developers learn how to move away from App Engine legacy APIs (now called "bundled services") to Cloud standalone equivalent services. Once this has been accomplished, apps are much more portable, making them flexible enough to:

- Move to the next-generation App Engine service

- Migrate to other serverless platforms, Cloud Functions or Cloud Run (with or without Docker), or

- Shift to other compute platforms

In today's Module 12 video, we're going to start our journey by implementing App Engine's Memcache bundled service, setting us up for our next move to a more complete in-cloud caching service, Cloud Memorystore. Most apps typically rely on some database, and in many situations, they can benefit from a caching layer to reduce the number of queries and improve response latency. In the video, we add use of Memcache to a Python 2 app that has already migrated web frameworks from webapp2 to Flask, providing greater portability and execution options. More importantly, it paves the way for an eventual 3.x upgrade because the Python 3 App Engine runtime does not support webapp2. We'll cover both the 3.x and Cloud Memorystore ports next in Module 13.

| Got an older app needing an update? We can help with that. |

Adding use of Memcache

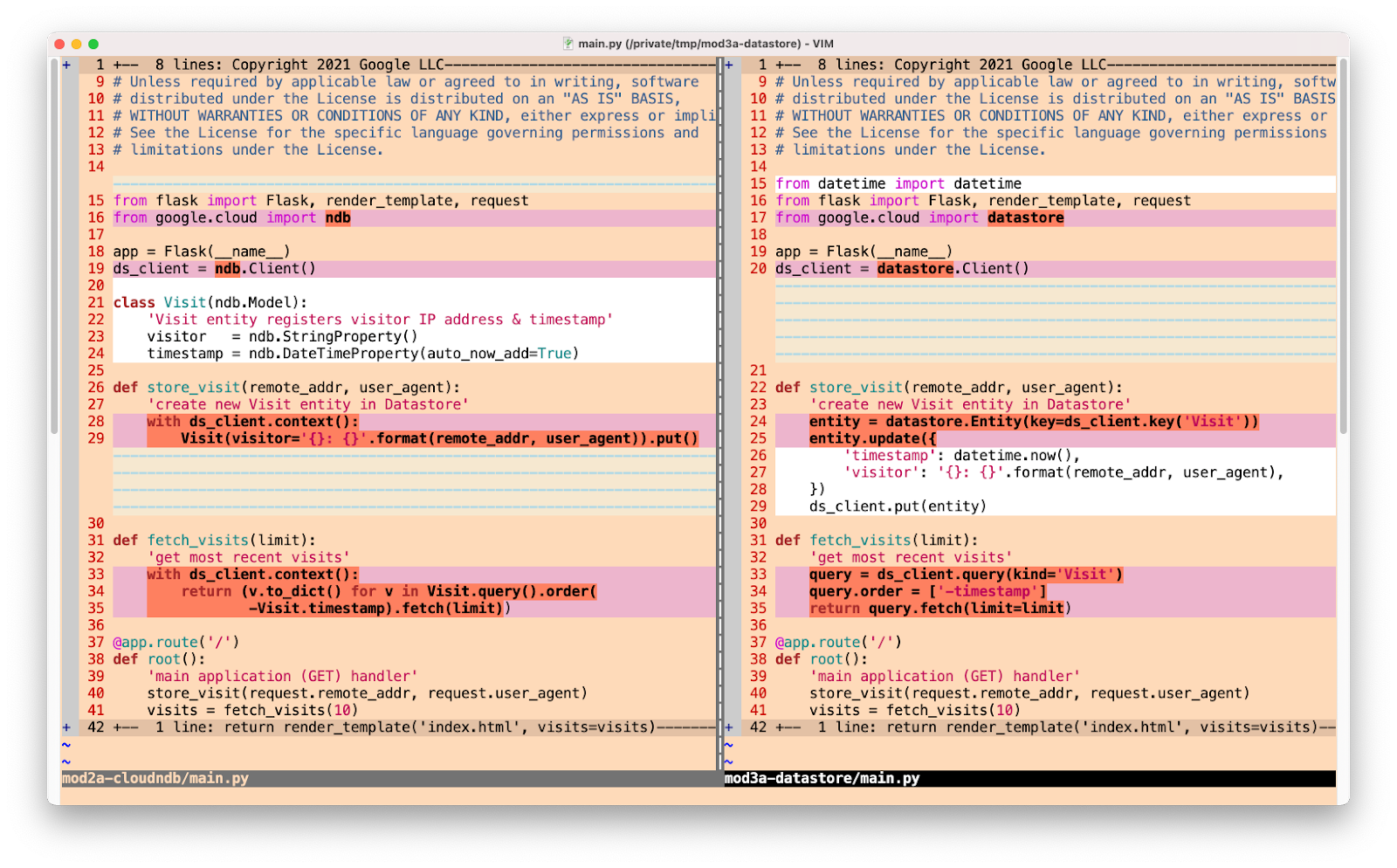

The sample application registers individual web page "visits," storing visitor information such as the IP address and user agent. In the original app, these values are stored immediately, and then the most recent visits are queried to display in the browser. If the same user continuously refreshes their browser, each refresh constitutes a new visit. To discourage this type of abuse, we cache the same user's visit for an hour, returning the same cached list of most recent visits unless a new visitor arrives or an hour has elapsed since their initial visit.

Below is pseudocode representing the core part of the app that saves new visits and queries for the most recent visits. Before, you can see how each visit is registered. After the update, the app attempts to fetch these visits from the cache. If cached results are available and "fresh" (within the hour), they're used immediately, but if cache is empty, or a new visitor arrives, the current visit is stored as before, and this latest collection of visits is cached for an hour. The bolded lines represent the new code that manages the cached data.

| Adding App Engine Memcache usage to sample app |

Wrap-up

Today's "migration" began with the Module 1 sample app. We added a Memcache-based caching layer and arrived at the finish line with the Module 12 sample app. To practice this on your own, follow the codelab doing it by-hand while following the video. The Module 12 app will then be ready to upgrade to Cloud Memorystore should you choose to do so.

In Fall 2021, the App Engine team extended support of many of the bundled services to next-generation runtimes, meaning you are no longer required to migrate to Cloud Memorystore when porting your app to Python 3. You can continue using Memcache in your Python 3 app so long as you retrofit the code to access bundled services from next-generation runtimes.

If you do want to move to Cloud Memorystore, stay tuned for the Module 13 video or try its codelab to get a sneak peek. All Serverless Migration Station content (codelabs, videos, source code [when available]) can be accessed at its open source repo. While our content initially focuses on Python users, we hope to one day cover other language runtimes, so stay tuned. For additional video content, check out our broader Serverless Expeditions series.