Shobana Radhakrishnan, Senior Director of Engineering - Google TV

Paul Lammertsma, Developer Relations Engineer

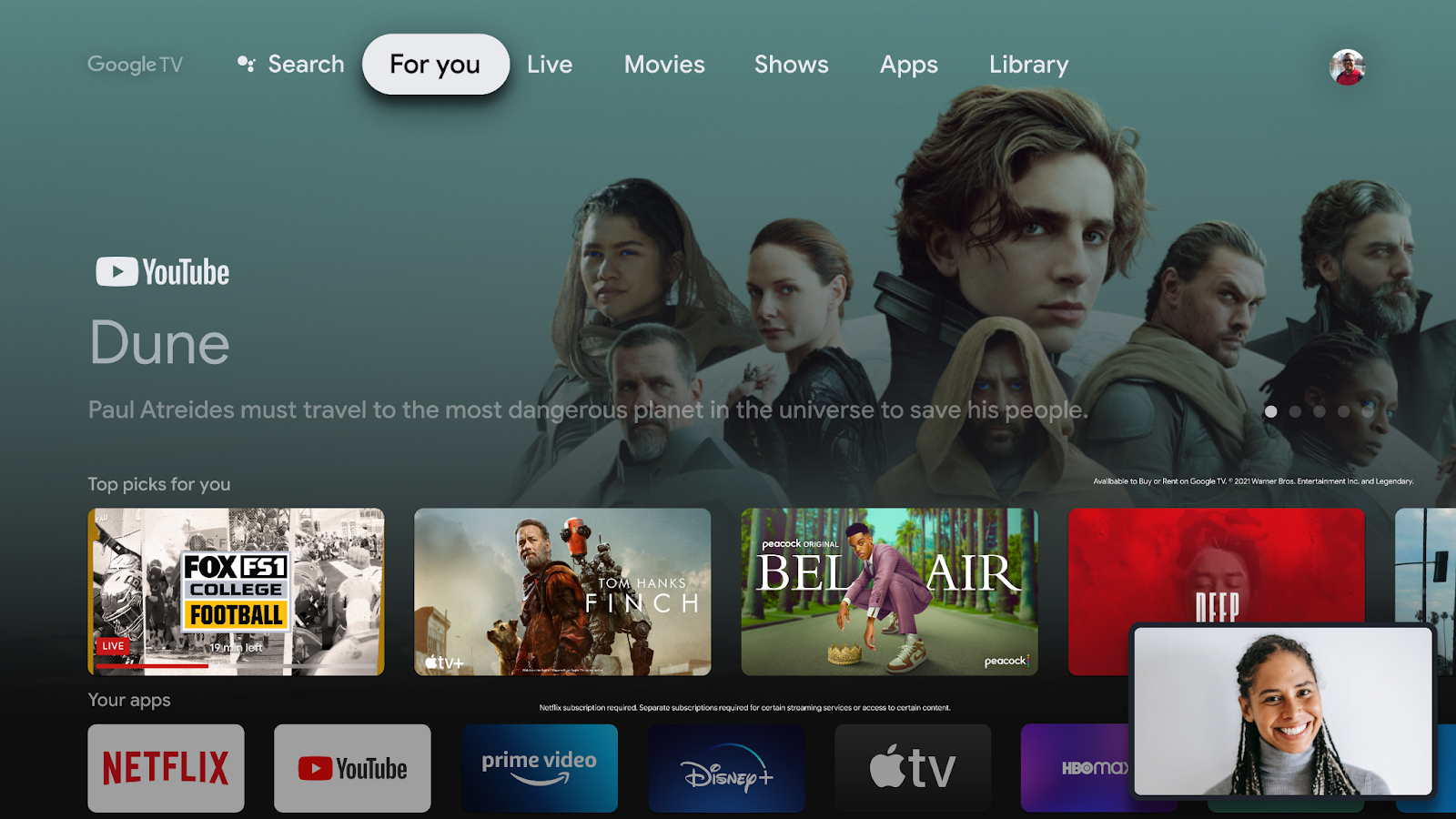

Today, there is more entertainment content available than ever before. In fact, our research shows a third of U.S. households now watch more than 25 hours of TV every week. As the role of TV continues to evolve, it’s our goal to build a tailored TV experience that gives users easy access to the entertainment they love.

We’re excited about the future of Android TV OS, now with over 110 million monthly active devices, including millions of Google TVs. Android TV and Google TV are available from over 300 partners worldwide, including 7 of the 10 largest smart TV OEMs and over 170 pay TV operators. And thanks to the hard work of our developer community, there are more than 10,000 apps available on TV, with more being added every day.

Since last year’s I/O, we’ve continued our commitment to enable you to build better and more engaging experiences on Android TV OS. In addition to platform updates, new features, like expanded integrations with the Live tab, offer opportunities for users to better engage with your content. And if you haven’t begun using the Watch Next API, take a moment to learn how to add it to your app to make your content more discoverable and accessible.

Today, we are introducing new features and tools on Android 13 that focus on overall performance & quality, improve accessibility, and enable multitasking.

- Performance & quality: To help build for the next generation of TVs, we’re introducing new APIs to help you better detect a user’s settings and give them the best experience for their device. AudioManager allows your app to anticipate audio routes and precisely understand which playback mode is available. Integrating your app correctly with MediaSession allows Android TV to react to HDMI state changes in order to save power and signal that content should be paused.

- Accessibility: To improve how users interact with their TV, we’ve added support for different keyboard layouts in the InputDevice API. Game developers can also reference keys by their physical location to support different layouts of physical keyboards, such as QWERTZ and AZERTY keyboards. A new system-wide accessibility preference also allows users to enable audio descriptions across apps.

- Multitasking: TVs are now used for more than just watching media content. In fact, we often see users taking calls or monitoring cameras in a smart home. To help with multitasking, an updated picture-in-picture API will be supported in Android 13, using the APIs from core Android. Picture-in-picture on the TV supports an expanded mode to show more videos from a group call, a docked mode to avoid overlaying content on other apps, and a keep-clear API to prevent overlays from concealing important content in full-screen apps.

Android 13 Beta for TV is available now, allowing you to test your apps and provide feedback on the latest release. Thank you for your continued support of Android TV OS. We can’t wait to see what amazing and innovative things you continue to build for the big screen.
