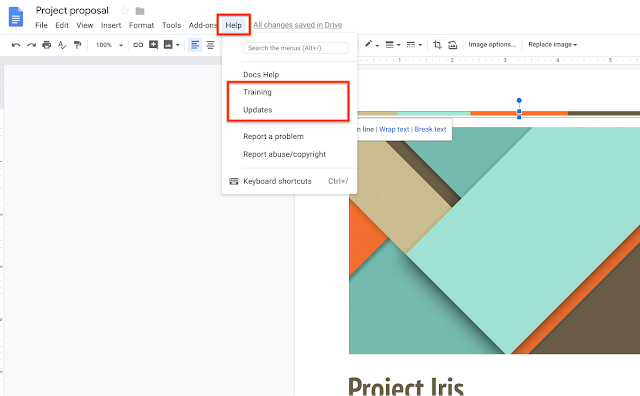

Posted by Yang Li, Research Scientist, Google AI Tapping is the most commonly used gesture on mobile interfaces, and is used to trigger all kinds of actions ranging from launching an app to entering text. While the style of clickable elements (e.g., buttons) in traditional desktop

graphical user interfaces is often conventionally defined, on mobile interfaces it can still be difficult for people to distinguish tappable versus non-tappable elements due to the diversity of styles. This confusion can lead to

false affordances (e.g., a feature that could be mistaken for a button) and a lack of discoverability that can lead to user frustration, uncertainty, and errors. To avoid this, interface designers can conduct a study or a visual affordance test to help clarify the tappability of items in their interfaces. However, such studies are time-consuming and their findings are often limited to a specific app or interface design.

In our

CHI'19 paper, "

Modeling Mobile Interface Tappability Using Crowdsourcing and Deep Learning", we introduced an approach for modeling the usability of mobile interfaces at scale. We crowdsourced a task to study UI elements across a range of mobile apps to measure the perceived tappability by a user. Our model predictions were consistent with the user group at the ~90% level, demonstrating that a machine learning model can be effectively used to estimate the perceived tappability of interface elements in their design without the need for expensive and time consuming user testing.

Predicting Tappability with Deep LearningDesigners often use visual properties such as the color or depth of an element to signify its availability for interaction on interfaces, e.g.,

the blue color and underline of a link. While these common signifiers are useful, it is not always clear when to apply them in each specific design setting. Furthermore, with design trends evolving, traditional signifiers are constantly being altered and challenged, potentially causing user uncertainty and mistakes.

To understand how users perceive this changing landscape, we analyzed the potential signifiers affecting tappability in real mobile apps—element

type (e.g., check boxes, text boxes, etc.),

location,

size,

color, and

words. We started by crowdsourcing volunteers to label the perceived clickability of ~20,000 unique interface elements from ~3,500 apps. With the exception of text boxes,

type signifiers yielded low uncertainty in user perceived tappability. The

location signifier refers to the position of a feature on the screen and is informed by the common layout design in mobile apps, as demonstrated in the figure below.

|

| Heatmaps displaying the accuracy of tappable and non-tappable elements by location, where warmer colors represent areas of higher accuracy. Users labeled non-tappable elements more accurately towards the upper center of the interface, and tappable elements towards the bottom center of the interface. |

The impact of element

size was relatively weak, but did indicate confusion in the case of large non-tappable elements. Users showed a tendency to bright

colors and short

word counts for tappable elements, though

word semantics also played a significant role.

We used these labels to train a simple

deep neural network that predicts the likelihood that a user will perceive an interface element as tappable versus non-tappable. For a given element of the interface, the model uses a range of features, including the spatial context of the element on the screen (

location), the semantics and functionality of the element (

words and

type), and the visual appearance (

size as well as raw pixels). The neural network model applies a

convolutional neural network (CNN) to extract features from raw pixels, and uses learned semantic embeddings to represent text content and element properties. The concatenation of all these features are then fed to a fully-connected network layer, the output of which produces a binary classification of an element's tappability.

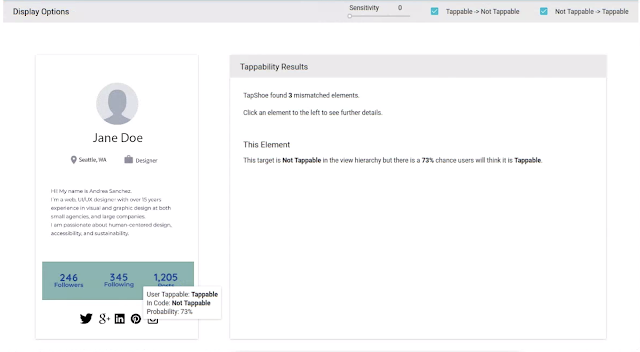

Evaluation of the ModelThe model allowed us to automatically diagnose mismatches between the tappability of each interface element as perceived by a user—predicted by our model—and the intended or actual tappable state of the element specified by the developer or designer. In the example below, our model predicts that there is a 73% chance that a user would think the labels such as "Followers" or "Following" are tappable, while these interface elements are in fact not programmed to be tappable.

To understand how our model behaves compared to human users, particularly when there is ambiguity in human perception, we generated a second, independent dataset by crowdsourcing an effort among 290 volunteers to label each of 2,000 unique interface elements with respect to their perceived tappability. Each element was labeled independently by five different users. We found that more than 40% of the elements in our sample were labeled inconsistently by volunteers. Our model matches this uncertainty in human perception quite well, as demonstrated in the figure below.

|

| The scatterplot of the tappability probability predicted by the model (the Y axis) versus the consistency in the human user labels (the X axis) for each element in the consistency dataset. |

When users agree an element's tappability, our model tends to give a more definite answer—a probability close to 1 for tappable and close to 0 for not tappable. When workers are less consistent on an element (towards the middle of the X axis), our model is also less certain about the decision. Overall, our model achieved reasonable accuracy of matching human perception in identifying tappable UI elements with a mean

precision of 90.2% and

recall of 87.0%.

Predicting tappability is merely one example of what we can do with machine learning to solve usability issues in user interfaces. There are many other challenges in interaction design and user experience research where deep learning models can offer a vehicle to distill large, diverse user experience datasets and advance scientific understandings about interaction behaviors.

AcknowledgementsThis research was a joint work of Amanda Swangson, summer intern at Google, and Yang Li, a Research Scientist in Deep Learning and Human Computer Interaction.