Posted by Patrick Fuentes, Developer Relations Engineer, Google ChromeOS

People’s appetite for apps on larger screens is growing fast. In Q1 2022 alone, there were 270 million active Android users across Chromebooks, tablets, and foldables. So if you want to grow reach, engagement, and loyalty, taking your app beyond mobile will unlock a world of opportunity.

If your app is available in Google Play, there’s a good chance users are already engaging with it on ChromeOS. And if you’re just starting to think about larger screens, tailoring your app to ChromeOS, which runs a full Android framework, is a great place to start. What’s more, optimizing for ChromeOS is very similar to optimizing for other larger-screen devices, so any work you do for one will carry over to the others.

At Android Dev Summit 2022, I shared a few ChromeOS-specific nuances to keep in mind when tailoring your app to larger screens. Let’s explore the top five things devs should consider, as well as workarounds to common challenges.

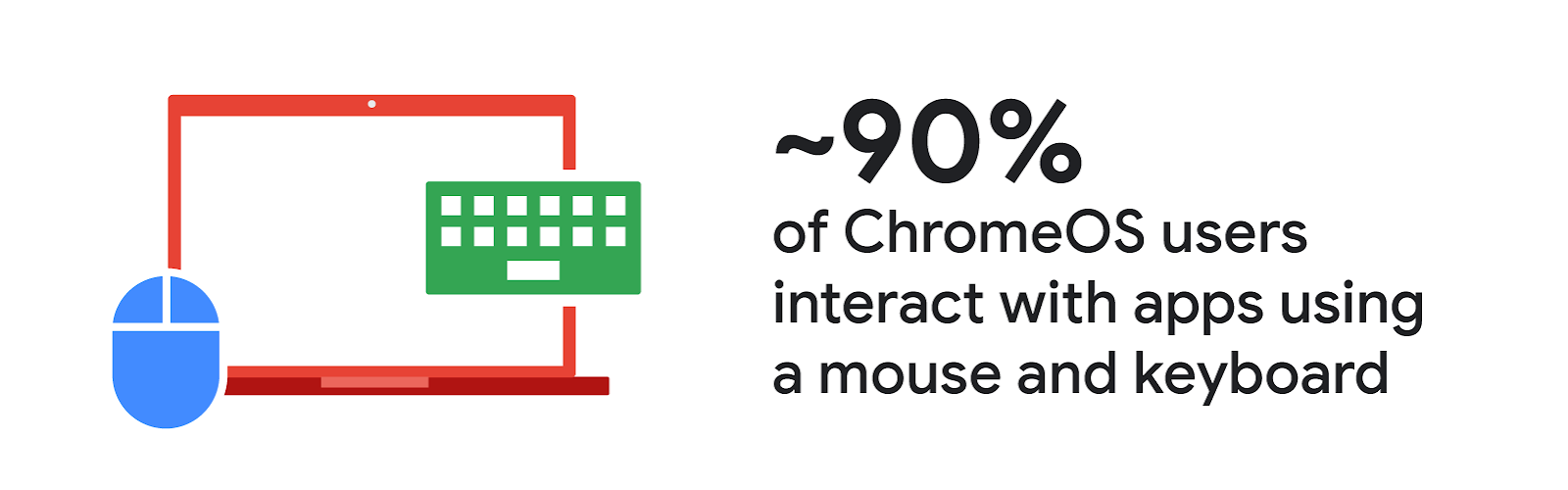

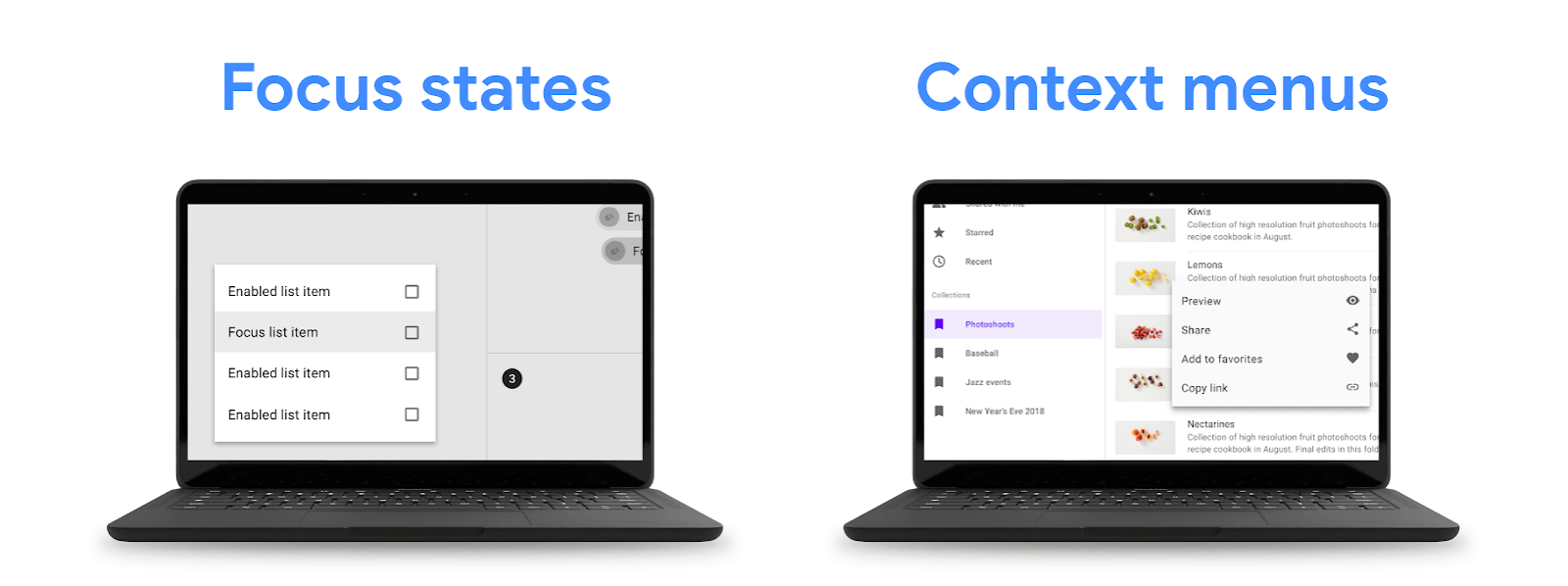

1) Finessing input compatibility

2) Creating a fit-for-larger-screen UI

People freely resize apps on ChromeOS, so it’s important to think about how your app looks and feels in a variety of aspect ratios — including landscape orientations. Although ChromeOS offers automatic windowing compatibility support for made-for-mobile experiences, apps that specifically optimize for larger screens tend to drive more engagement.

The extra screen real estate on Chromebooks, tablets, and foldables gives both you and your users more room to play, explore, and create. So why not make the most of it? You can implement a responsive UI for larger screens with toolkits such as Jetpack Compose and create adaptive experiences by sticking to design best practices.
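One common way to reason about a responsive layout is to bucket the current window width into a few size classes and pick a layout per bucket. Here’s a minimal sketch of that idea in plain Java. The class and method names are hypothetical, and the 600dp and 840dp breakpoints are an assumption borrowed from commonly published window size class guidance, so adjust them to your own design.

```java
/** Buckets the width of the app window (not the physical screen) into size classes. */
final class WindowWidthClass {
    enum Bucket { COMPACT, MEDIUM, EXPANDED }

    // Assumed breakpoints in density-independent pixels (dp); tune for your design.
    static final int MEDIUM_MIN_DP = 600;
    static final int EXPANDED_MIN_DP = 840;

    /** Converts a width in raw pixels to dp. density is pixels per dp (1.0 at 160 dpi). */
    static float pxToDp(int widthPx, float density) {
        if (density <= 0f) {
            throw new IllegalArgumentException("density must be positive");
        }
        return widthPx / density;
    }

    /** Maps a window width in dp to a size bucket. */
    static Bucket fromWidthDp(float widthDp) {
        if (widthDp < 0f) {
            throw new IllegalArgumentException("width must not be negative");
        }
        if (widthDp < MEDIUM_MIN_DP) {
            return Bucket.COMPACT;
        }
        if (widthDp < EXPANDED_MIN_DP) {
            return Bucket.MEDIUM;
        }
        return Bucket.EXPANDED;
    }

    /** Hypothetical layout decision: show two panes side by side only when there is room. */
    static int paneCount(Bucket bucket) {
        return bucket == Bucket.EXPANDED ? 2 : 1;
    }
}
```

Because people can resize windows freely on ChromeOS, a check like this would need to run again whenever the window size changes, not just once at launch.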

3) Implementing binary compatibility

If you’ve exclusively run your app on Android phones, you might only be familiar with ARM devices. But Chromebooks and many other desktops often use x86 architectures, which makes binary support critical. Although Gradle builds for all non-deprecated ABIs by default, you’ll still need to specifically account for x86 support if your app or one of your libraries includes C++ code.

Thanks to binary translation, many Android apps will run on x86 ChromeOS devices even if a compatible version isn’t available. But this can hinder app performance and hurt battery life, so it’s best to provide x86 support explicitly whenever you can.
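To make the trade-off concrete, here’s a simplified model in plain Java of how a device might end up running translated code. It assumes the device reports its ABIs in order of preference (as a list like Android’s Build.SUPPORTED_ABIS does) and that the first one your app ships native libraries for gets used. The class and method names are hypothetical, and this is a sketch for reasoning about your own packaging, not a description of the platform’s actual installer logic.

```java
import java.util.List;
import java.util.Set;

/** Simplified model of matching a device's ABI preferences against packaged native libraries. */
final class AbiSelector {

    /** Returns the first device-preferred ABI the app ships libraries for, or null if none match. */
    static String pickAbi(List<String> deviceAbis, Set<String> packagedAbis) {
        for (String abi : deviceAbis) {
            if (packagedAbis.contains(abi)) {
                return abi;
            }
        }
        return null;
    }

    static boolean isX86Family(String abi) {
        return abi.equals("x86") || abi.equals("x86_64");
    }

    /**
     * True when the device's most-preferred ABI is x86-based but the only match is a
     * non-x86 (for example ARM) library, meaning the native code would run through translation.
     */
    static boolean needsTranslation(List<String> deviceAbis, Set<String> packagedAbis) {
        if (deviceAbis.isEmpty()) {
            return false;
        }
        String chosen = pickAbi(deviceAbis, packagedAbis);
        return chosen != null && isX86Family(deviceAbis.get(0)) && !isX86Family(chosen);
    }
}
```

For an x86 Chromebook that lists x86_64 first and ARM ABIs as fallbacks, an app that packages only arm64-v8a libraries would be flagged by needsTranslation, while one that also packages x86_64 would not.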

4) Giving apps a thorough test run

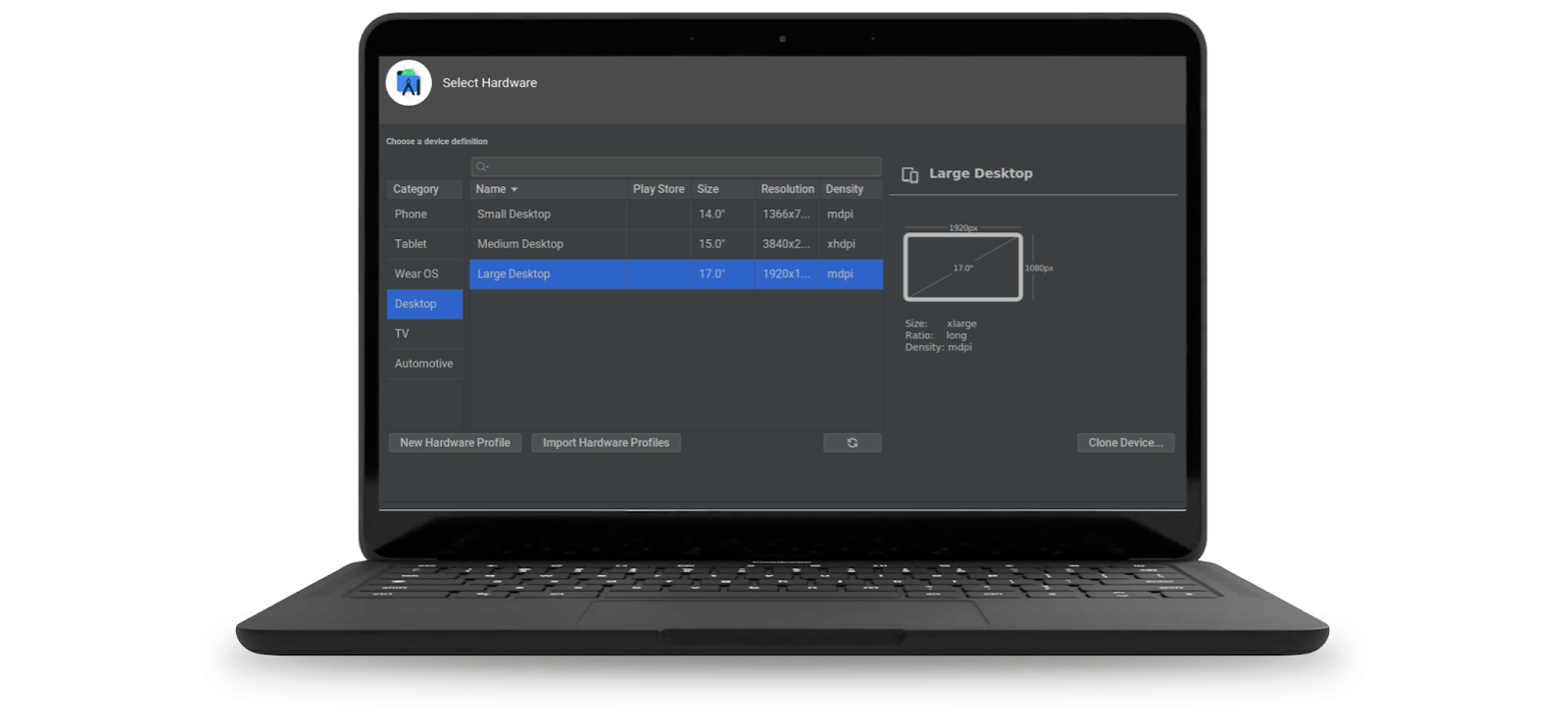

The surefire way of ensuring a great user experience? Run rigorous checks to make sure your apps and games work as expected on the devices you’re optimizing for. When you’re building for ChromeOS, testing your apps on Chromebooks or another larger-screen device is ideal. But you've still got options if a physical device isn’t available.

For instance, you can still test a keyboard or mouse on an Android handset by plugging them into the USB-C port. And with the new desktop emulator in Android Studio, you can take your app for a spin in a larger-screen setting and test desktop features such as window resizing.

5) Polishing apps for publishing

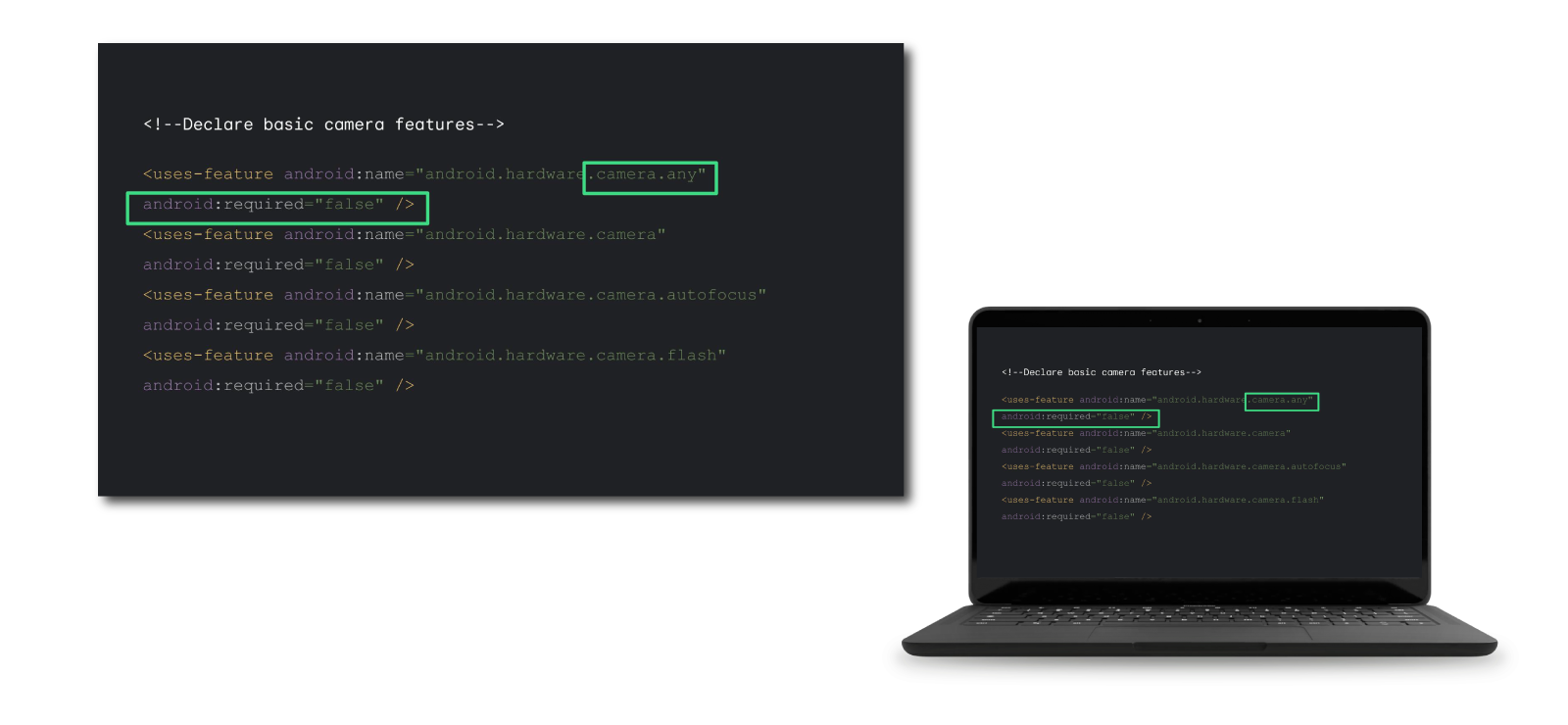

Sometimes, even apps that are tested on Chromebooks and listed in Google Play aren’t actually available to ChromeOS users. This usually happens because an entry in the app’s manifest declares that the app requires a feature the device doesn’t have, so the device is treated as unsupported.

Let’s say your manifest declares that your app requires “android.hardware.camera”. That entry refers to a rear-facing camera, so any device with only a user-facing camera would be considered unsupported. If any camera will work for your app, you can use “android.hardware.camera.any” instead. And if a hardware feature isn’t a must for your app, it’s best to mark it as optional by setting android:required="false" on its uses-feature entry in the manifest.
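The filtering described above can be pictured with a small model in plain Java. It treats the manifest as a map from feature name to its required flag and a device as a set of feature names it reports. The class and method names are hypothetical, and this is a deliberately simplified illustration of the idea, not Google Play’s actual filtering code.

```java
import java.util.Map;
import java.util.Set;

/** Simplified model of feature-based availability filtering. */
final class FeatureFilter {

    /**
     * Returns true when every feature declared as required is reported by the device.
     * declaredFeatures maps a feature name to its required flag (true = required).
     */
    static boolean isDeviceSupported(Map<String, Boolean> declaredFeatures,
                                     Set<String> deviceFeatures) {
        for (Map.Entry<String, Boolean> entry : declaredFeatures.entrySet()) {
            boolean required = entry.getValue();
            if (required && !deviceFeatures.contains(entry.getKey())) {
                return false; // A required feature is missing, so the device is filtered out.
            }
        }
        return true;
    }
}
```

In this model, a device that reports only “android.hardware.camera.front” and “android.hardware.camera.any” is filtered out by a required “android.hardware.camera” entry, but passes if the entry is “android.hardware.camera.any” or if the requirement is marked as optional.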

Connect with millions of larger-screen users

As people’s love for desktops, tablets, and foldables continues to grow, building for these form factors is becoming more and more important. Check out other talks from Android Dev Summit 2022 as well as resources on ChromeOS.dev and developer.android.com for more inspiration and how-tos as you optimize for larger screens. And don’t forget to sign up for the ChromeOS newsletter to keep up with the latest.
