Today, Android is launching a few updates across the platform! This includes the start of Android 16's rollout, with details for both developers and users, a Developer Preview for enhanced Android desktop experiences with connected displays, and updates for Android users across Google apps and more, plus the June Pixel Drop. We're also recapping all the Google I/O updates for Android developers focused on building excellent, adaptive Android apps.

Google I/O 2025 brought exciting advancements to Android, equipping you with the knowledge and tools you need to build outstanding, user-friendly applications that stand out.

If you missed any of the key #GoogleIO25 updates, just saw the release of Android 16, or are ready to dive into building excellent adaptive apps, our playlist is for you. Learn how to craft engaging experiences with Live Updates in Android 16, capture video effortlessly with CameraX, process it efficiently using Media3's editing tools, and engage users across diverse platforms like XR, Android for Cars, Android TV, and Desktop.

Check out the Google I/O playlist for all the session details.

Here are three key announcements directly influencing how you can craft deeply engaging experiences and truly connect with your users:

#1: Build adaptively to unlock 500 million devices

In today's diverse device ecosystem, users expect their favorite applications to function seamlessly across various form factors, including phones, tablets, Chromebooks, automobiles, and emerging XR glasses and headsets. Our recommended approach for developing applications that excel on each of these surfaces is to create a single, adaptive application. This strategy avoids the need to rebuild the application for every screen size, shape, or input method, ensuring a consistent and high-quality user experience across all devices.

The talk emphasizes that you don't need to rebuild apps for each form factor. Instead, small, iterative changes can unlock an app's potential.

Here are some resources we encourage you to use in your apps:

New feature support in Jetpack Compose Adaptive Libraries

- We’re continuing to make it as easy as possible to build adaptively with the Jetpack Compose Adaptive Libraries, with new features in 1.1 like pane expansion and predictive back. When you use canonical layout patterns such as List Detail or Supporting Pane layouts and integrate your app code, your application automatically adjusts and reflows when resized.

Navigation 3

- The alpha release of the Navigation 3 library now supports displaying multiple panes. This eliminates the need to alter your navigation destination setup for separate list and detail views. Instead, you can adjust the setup to concurrently render multiple destinations when sufficient screen space is available.

Updates to Window Manager Library

- androidx.window 1.5 introduces two new window size classes for expanded widths, facilitating better layout adaptation for large tablets and desktops. A width of 1600dp or more is now categorized as "extra large," while widths from 1200dp up to (but not including) 1600dp are classified as "large." These subdivisions offer more granularity for developers to optimize their applications for a wider range of window sizes.
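To make the breakpoints concrete, here is a minimal sketch in plain Kotlin that maps a window width in dp to a size bucket. It deliberately does not use the androidx.window types: `WidthBucket`, `widthBucketFor`, and `paneCountFor` are hypothetical names for illustration, and the pane counts are an arbitrary example of a layout decision. The 1200dp and 1600dp bounds come from the description above; the 600dp and 840dp bounds are the existing compact/medium/expanded breakpoints.

```kotlin
// Hypothetical names for illustration; these are not the androidx.window types.
enum class WidthBucket { COMPACT, MEDIUM, EXPANDED, LARGE, EXTRA_LARGE }

// Maps a window width in dp to a bucket using lower bounds.
// 600 and 840 are the existing breakpoints; 1200 and 1600 are the two new ones.
fun widthBucketFor(widthDp: Int): WidthBucket {
    require(widthDp >= 0) { "widthDp must be non-negative, was $widthDp" }
    return when {
        widthDp >= 1600 -> WidthBucket.EXTRA_LARGE
        widthDp >= 1200 -> WidthBucket.LARGE
        widthDp >= 840 -> WidthBucket.EXPANDED
        widthDp >= 600 -> WidthBucket.MEDIUM
        else -> WidthBucket.COMPACT
    }
}

// Example layout decision driven by the bucket (pane counts are illustrative only).
fun paneCountFor(widthDp: Int): Int = when (widthBucketFor(widthDp)) {
    WidthBucket.COMPACT, WidthBucket.MEDIUM -> 1
    WidthBucket.EXPANDED, WidthBucket.LARGE -> 2
    WidthBucket.EXTRA_LARGE -> 3
}
```

In a real app you would normally read the size class from the library rather than hand-rolling the thresholds; the sketch only shows how the extra buckets let a layout distinguish a large tablet window from a full desktop window.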

Support all orientations and be resizable

- Important changes are coming in Android 16 that affect orientation, aspect ratio, and resizability. Apps targeting SDK 36 will need to support all orientations and be resizable.

Extend to Android XR

- We are making it easier for you to build for XR with the Android XR SDK in developer preview 2, which features new Material XR components, a fully integrated emulator within Android Studio, and spatial video support for your Play Store listings.

Upgrade your Wear OS apps to Material 3 Design

- Wear OS 6 features Material 3 Expressive, a new UI design with personalized visuals and motion for user creativity, coming to Wear, Android, and Google apps later this year. You can upgrade your app and Tiles to Material 3 Expressive by using new Jetpack libraries: Wear Compose Material 3, which provides components for apps, and Wear ProtoLayout Material 3, which provides components and layouts for tiles.

You should build a single, adaptive mobile app that brings the best experiences to all Android surfaces. By building adaptive apps, you meet users where they are today and in the future, enhancing user engagement and app discoverability. This approach represents a strategic business decision that optimizes an app’s long-term success.

#2: Enhance your app’s performance optimization

Get ready to take your app's performance to the next level! Google I/O 2025 brought an inside look at cutting-edge tools and techniques to boost user satisfaction, enhance technical performance metrics, and drive those all-important key performance indicators. Imagine an end-to-end workflow that streamlines performance optimization.

Redesigned UiAutomator API

- To make benchmarking reliable and reproducible, there's the brand new UiAutomator API. Write robust test code and run it on your local devices or in Firebase Test Lab, ensuring consistent results every time.

Macrobenchmarks

- Once your tests are in place, it's time to measure and understand. Macrobenchmarks give you the hard data, while App Startup Insights provide actionable recommendations for improvement. Plus, you can get a quick snapshot of your app's health with the App Performance Score on developer.android.com (DAC). Combined, these tools give you a comprehensive view of your app's performance and where to focus your efforts.

R8, More than code shrinking and obfuscation

- You might know R8 as a code shrinking tool, but it's capable of so much more! The talk dives into R8's capabilities using the "Androidify" sample app. You'll see how to apply R8, troubleshoot any issues (like crashes!), and configure it for optimal performance. The talk also shows how library developers can include consumer keep rules so that their important code is left untouched when the library is used in an application.
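As a rough sketch of the configuration side, assuming a conventional Gradle Kotlin DSL setup with the Android Gradle Plugin: the fragment below enables R8 for an app's release build and shows how a library module can ship consumer keep rules. The file names `proguard-rules.pro` and `consumer-rules.pro` are the usual template defaults and are assumptions here, not something the talk prescribes. The two `android` blocks belong in two different build files.

```kotlin
// App module: build.gradle.kts (fragment)
android {
    buildTypes {
        release {
            // Turns on R8 code shrinking, optimization, and obfuscation.
            isMinifyEnabled = true
            // Removes resources that are unused after code shrinking.
            isShrinkResources = true
            proguardFiles(
                // Default rules shipped with the Android Gradle Plugin, with optimizations on.
                getDefaultProguardFile("proguard-android-optimize.txt"),
                // App-specific keep rules (assumed file name).
                "proguard-rules.pro"
            )
        }
    }
}

// Library module: build.gradle.kts (fragment, separate file)
android {
    defaultConfig {
        // Rules in this file are packaged with the library and applied
        // automatically when a consuming app runs R8 (assumed file name).
        consumerProguardFiles("consumer-rules.pro")
    }
}
```

Keeping the rules inside the library means app developers do not have to copy them by hand, which is the point of consumer keep rules.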

#3: Build Richer Image and Video Experiences

In today's digital landscape, users increasingly expect seamless content creation capabilities within their apps. To meet this demand, developers require robust tools for building excellent camera and media experiences.

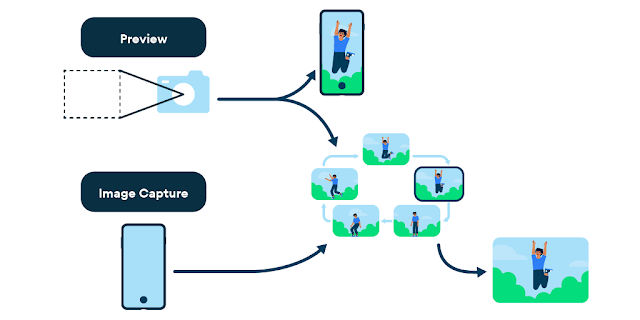

Media3Effects in CameraX Preview

- This Google I/O session covers practical strategies for capturing high-quality video using CameraX while applying Media3Effects to the preview.

Google Low Light Boost

- Google Low Light Boost in Google Play services enables real-time dynamic camera brightness adjustment in low light, even without device support for Low Light Boost AE Mode.

New Camera & Media Samples!

- For Google I/O 2025, the Camera & Media team created new samples and demos for building excellent media and camera experiences on Android. They emphasize future-proofing apps using Jetpack libraries like Media3 Transformer for advanced video editing and Compose for adaptive UIs, including XR. Get more information about incrementally adding premium features with CameraX, using Media3 for AI-powered functionalities such as video summarization and HDR thumbnails, and employing specialized APIs like Oboe for efficient audio playback. We have also updated the CameraX samples to fully use Compose instead of the View-based system.

Learn more about how CameraX & Media3 can accelerate your development of camera and media related features.

Learn how to build adaptive apps

Want to learn more about building excellent, adaptive apps? Watch this playlist for all the session details.

Posted by Mozart Louis – Developer Relations Engineer

Posted by Rebecca Franks – Developer Relations Engineer

Posted by Nick Butcher – Product Manager

Posted by Scott Nien – Software Engineer (scottnien@)

Posted by Donovan McMurray, Camera Developer Relations Engineer
