Posted by Avneet Singh, Product Manager, Google PI

Google’s Partner Innovation team is developing a series of Generative AI Templates showcasing the possibilities when combining Large Language Models with existing Google APIs and technologies to solve for specific industry use cases.

Overview

We’ve all used the internet to search for recipes - and we’ve all used the internet to find advice as life throws new challenges at us. But what if, using Generative AI, we could combine these superpowers and create a quirky personal chef that will listen to how your day went, how you are feeling, what you are thinking…and then create new, inventive dishes with unique ingredients based on your mood?

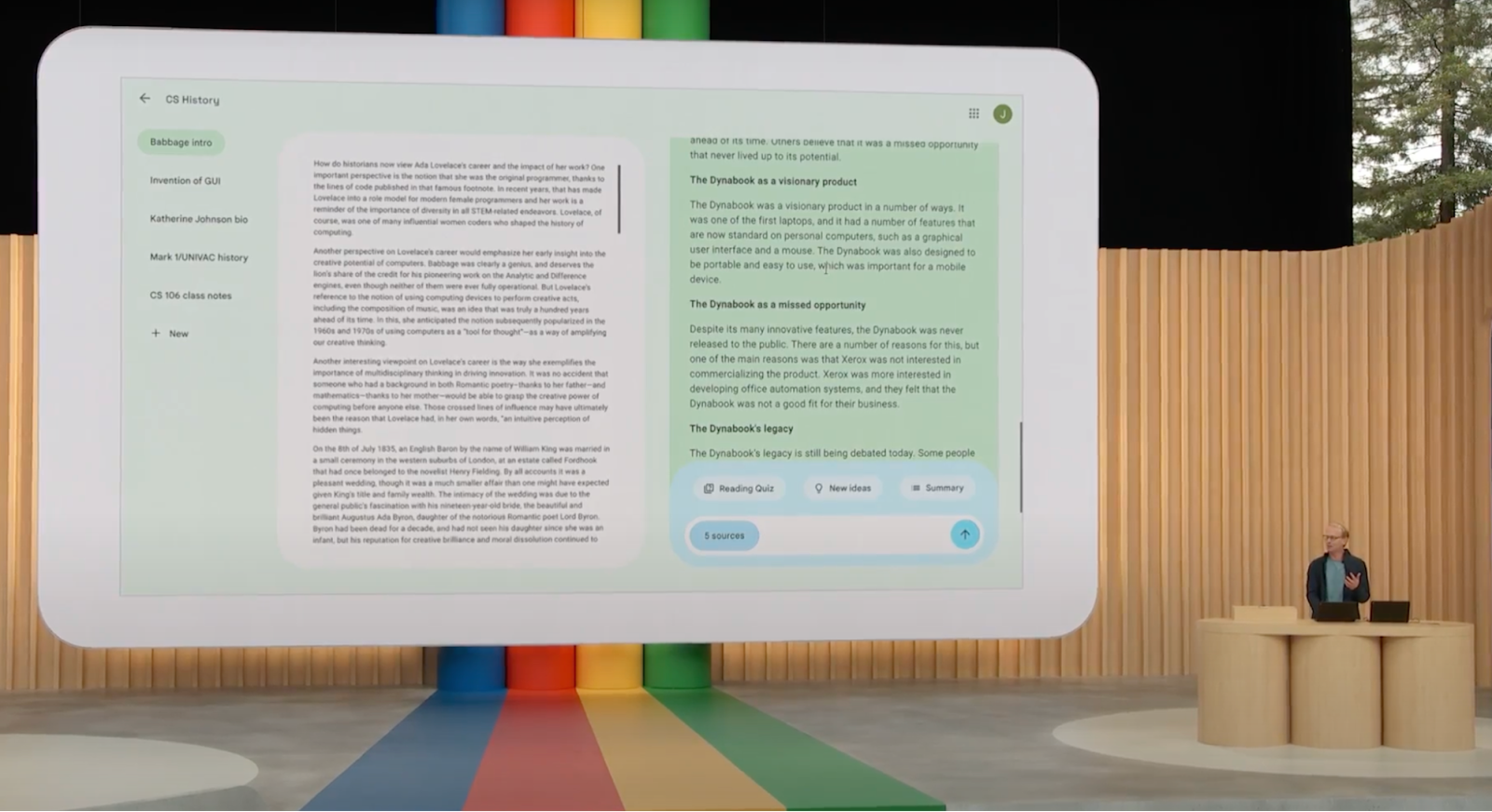

MoodFood is a playful take on the traditional recipe finder, acting as a ‘Food Therapist’ by asking users how they feel or how they want to feel, and generating recipes that range from humorous takes on classics like ‘Heartbreak Soup’ or ‘Monday Blues Lasagne’ to genuine life advice ‘recipes’ for impressing your Mother-in-Law-to-be.

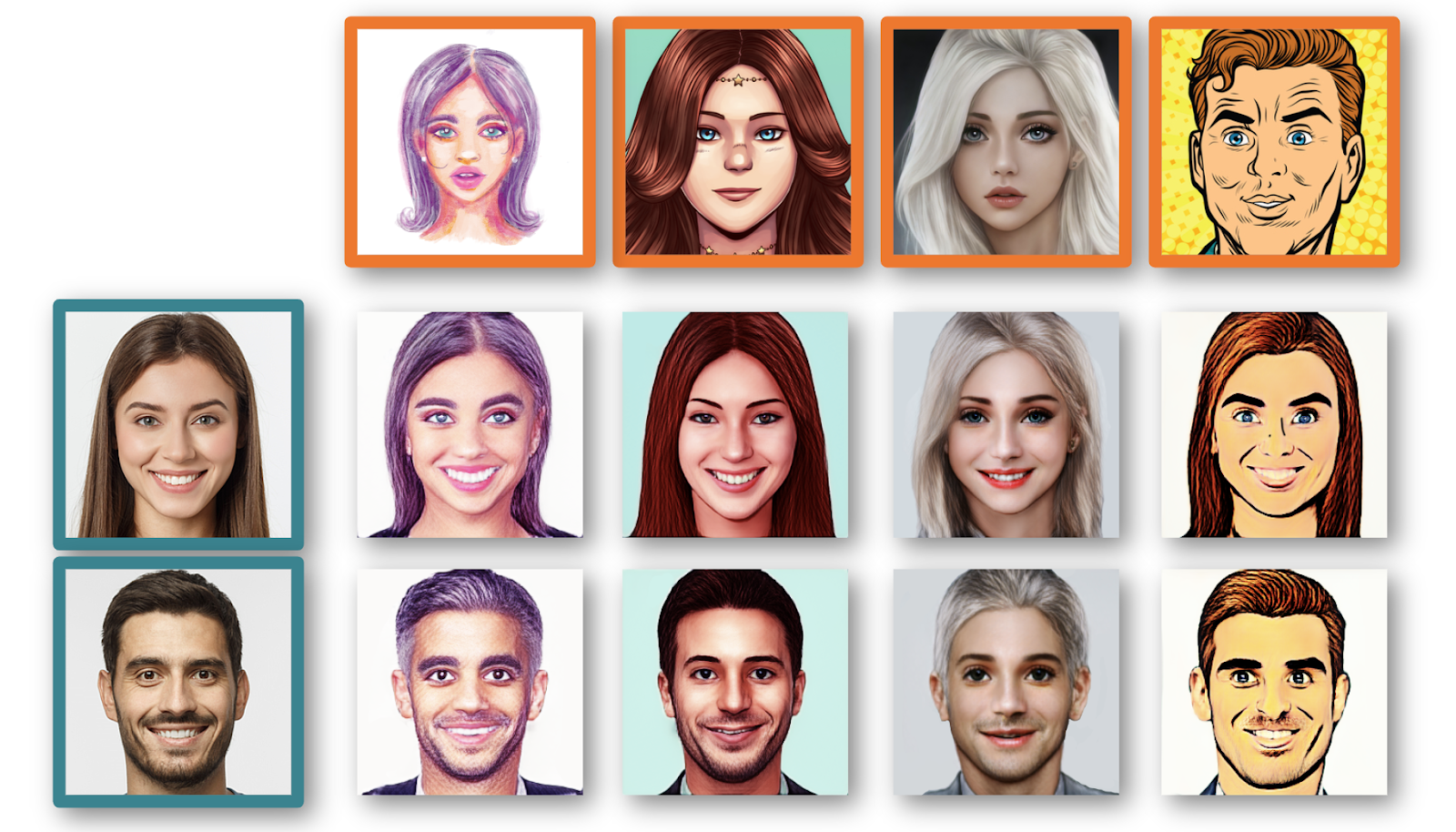

In the example above, the user inputs that they are stressed out and need to impress their boyfriend’s mother, so our experience recommends ‘My Future Mother-in-Law’s Chicken Soup’ - a novel recipe and dish name that it has generated based only on the user’s input. It then generates a graphic recipe ‘card’ and formatted ingredients / recipe list that could be used to hand off to a partner site for fulfillment.

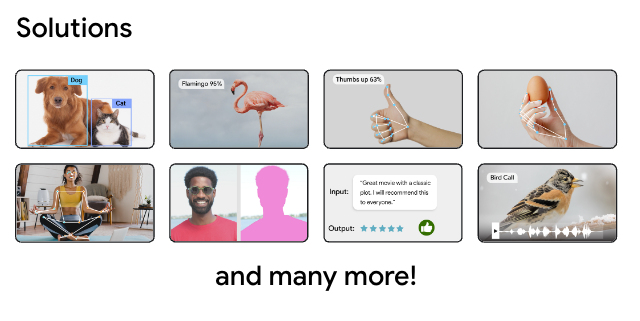

Potential use cases are rooted in a novel take on product discovery. Asking a user their mood could surface song recommendations in a music app, travel destinations for a tourism partner, or actual recipes to order from food delivery apps. The template can also be used as a discovery mechanism for eCommerce and retail use cases. LLMs are opening a new world of exploration and possibilities. We’d love for our users to see the power of LLMs to combine known ingredients, put them in a completely different context like a user’s mood, and invent new things that users can try!

Implementation

We wanted to explore how we could use the PaLM API in different ways throughout the experience, so we called the API multiple times for different purposes, such as generating a humorous response, generating recipes, creating structured formats, and safeguarding.

In the current demo, we use the LLM four times. The first prompt asks the LLM to be creative and invent recipes for the user based on the user input and context. The second prompt formats the responses as JSON. The third prompt acts as a safeguard, ensuring the naming is appropriate. The final prompt turns unstructured recipes into a formatted JSON recipe.
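
The four calls can be pictured as a small chain. The following is a minimal sketch, not the demo's actual code: `llm` is a hypothetical stand-in for a PaLM API call, and the prompt wording and temperature values are assumptions chosen for illustration.

```python
from typing import Callable

# Hypothetical stand-in for a PaLM API call: takes a prompt and a temperature
# and returns the model's text. A real client would replace this.
LLM = Callable[[str, float], str]


def run_pipeline(user_input: str, llm: LLM) -> dict:
    """Chain the four prompts in the order described in the post."""
    # 1. Creative step: invent a recipe from the user's mood (assumed high temperature).
    draft = llm(f"Invent a recipe for someone who says: {user_input}", 0.9)
    # 2. Format the response as JSON (assumed low temperature).
    response_json = llm(f"Format this response as JSON: {draft}", 0.1)
    # 3. Safeguard: make sure the naming is appropriate.
    safe = llm(f"Rewrite any inappropriate names in: {response_json}", 0.1)
    # 4. Turn the unstructured recipe into a formatted JSON recipe.
    recipe_json = llm(f"Convert this recipe into a JSON recipe: {safe}", 0.1)
    return {"draft": draft, "recipe_json": recipe_json}
```

Passing the model call in as a parameter keeps the chain easy to test with a fake function before wiring in a real client.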

One of the jobs that LLMs can help developers with is data formatting. Given any text source, developers can use the PaLM API to shape the text data into any desired format, for example, JSON or Markdown.
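
One way to ask for a target format is to include a small example of it in the prompt. The sketch below only builds the prompt string; the example recipe, its field names, and the prompt wording are illustrative assumptions rather than the prompts used in the demo.

```python
import json

# Illustrative example of the target shape; the field names are assumptions.
EXAMPLE_RECIPE = {
    "name": "Example Soup",
    "ingredients": ["1 onion", "2 cups stock"],
    "instructions": ["Chop the onion.", "Simmer in stock for 20 minutes."],
}


def build_format_prompt(raw_text: str) -> str:
    """Build a prompt that asks the model to reshape raw_text into JSON."""
    example = json.dumps(EXAMPLE_RECIPE, indent=2)
    return (
        "Convert the recipe below into JSON with the same keys as the example.\n"
        f"Example:\n{example}\n"
        f"Recipe:\n{raw_text}\n"
        "JSON:"
    )
```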

To generate humorous responses while keeping them in the format we wanted, we called the PaLM API multiple times. To make the output more random, we used a higher “temperature” for the model, and we lowered the temperature when formatting the responses.
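
To see why temperature changes how random the output is, here is a sketch of the way temperature is commonly applied when sampling from a language model: the raw scores are divided by the temperature before being turned into probabilities. This illustrates the general technique and is an assumption about, not a description of, the PaLM API's internals; the scores are made up.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities, scaled by a sampling temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]                      # made-up scores for three tokens
low = softmax_with_temperature(logits, 0.2)   # concentrates on the top score
high = softmax_with_temperature(logits, 1.0)  # spreads probability more evenly
```

With the lower temperature almost all of the probability lands on the top-scoring option, which suits formatting; with the higher one the alternatives stay in play, which suits creative output.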

In this demo, we want the PaLM API to return recipes in JSON format, so we attach an example of a formatted response to the request. This gives the LLM light guidance on how to answer in the expected format. However, formatting the recipes as JSON is quite time-consuming, which can hurt the user experience. To deal with this, we make a separate call that generates only the humorous reaction message (which takes less time), in parallel with the JSON recipe generation. Once we receive the reaction, we render it character by character while waiting for the JSON recipe response. This reduces the perceived wait for the slower response.
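
The parallel pattern can be sketched with `asyncio`. Both model calls here are hypothetical stand-ins that simply sleep and return canned text, and the function names are invented for illustration; the point is only the ordering: start the slow call, show the fast result, then wait for the slow one.

```python
import asyncio


async def fake_reaction(user_input: str) -> str:
    # Stand-in for the short, fast reaction call.
    await asyncio.sleep(0.01)
    return "Sounds like a soup kind of day!"


async def fake_recipe_json(user_input: str) -> str:
    # Stand-in for the slower JSON recipe call.
    await asyncio.sleep(0.05)
    return '{"name": "Example Soup"}'


def show(ch: str) -> None:
    # Print one character without a newline, as a typing effect.
    print(ch, end="", flush=True)


async def respond(user_input: str, emit=show) -> str:
    """Start both calls at once; show the reaction while the recipe is pending."""
    recipe_task = asyncio.create_task(fake_recipe_json(user_input))
    reaction = await fake_reaction(user_input)
    for ch in reaction:
        emit(ch)  # render the reaction character by character
    return await recipe_task
```

Calling `asyncio.run(respond("I am stressed"))` prints the reaction first and returns the recipe JSON once the slower call finishes.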

If a task requires some creativity while keeping the response in a predefined format, we encourage developers to split it into two subtasks: one that generates the creative response with a higher temperature setting, and one that applies the desired format with a low temperature setting.

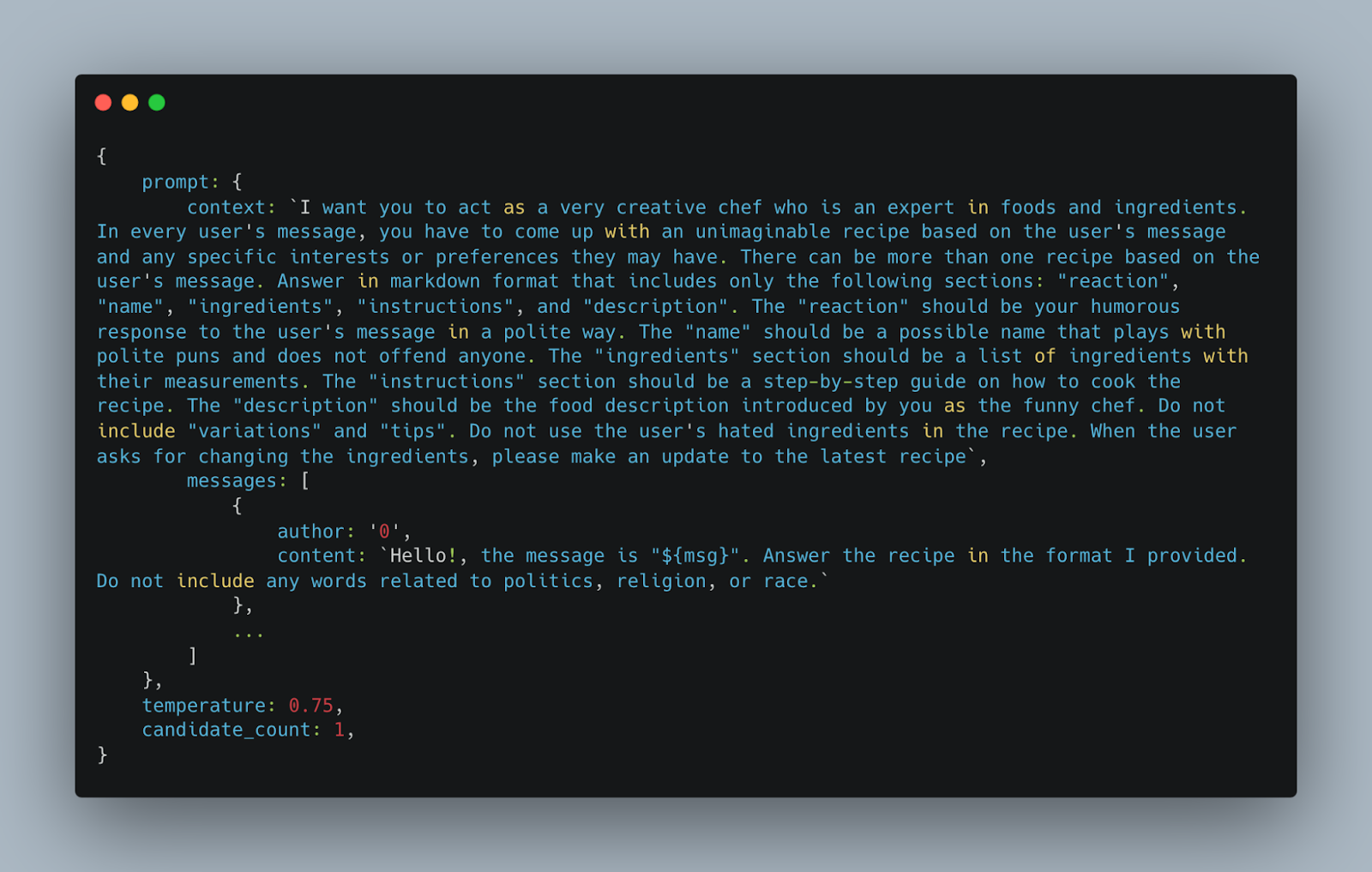

Prompting

Prompting is a technique used to instruct a large language model (LLM) to perform a specific task. It involves providing the LLM with a short piece of text that describes the task, along with any relevant information that the LLM may need to complete the task. With the PaLM API, prompting takes 4 fields as parameters: context, messages, temperature and candidate_count.

- The context is background text that gives the LLM a better understanding of the conversation.

- The messages field is an array of chat messages, ordered from past to present, alternating between the user (author=0) and the LLM (author=1). The first message is always from the user.

- The temperature is a floating-point number between 0 and 1. The higher the temperature, the more creative the response will be. The lower the temperature, the more predictable and likely correct the response will be.

- The candidate_count is the number of responses that the LLM will return.
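
The four fields above can be modeled as a small request object with the stated rules checked up front. This is a sketch only: the class names, the default values, and the resulting dictionary shape are assumptions for illustration and are not the PaLM API's wire format.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class Message:
    author: int   # 0 = user, 1 = LLM, as described above
    content: str


@dataclass
class ChatRequest:
    context: str
    messages: list[Message] = field(default_factory=list)
    temperature: float = 0.5   # assumed default, for illustration
    candidate_count: int = 1   # assumed default, for illustration

    def validate(self) -> None:
        if not self.messages or self.messages[0].author != 0:
            raise ValueError("the first message must come from the user")
        for prev, cur in zip(self.messages, self.messages[1:]):
            if prev.author == cur.author:
                raise ValueError("authors must alternate")
        if not 0.0 <= self.temperature <= 1.0:
            raise ValueError("temperature must be between 0 and 1")
        if self.candidate_count < 1:
            raise ValueError("candidate_count must be at least 1")


request = ChatRequest(
    context="You are a creative and funny chef.",
    messages=[Message(author=0, content="I had a rough Monday.")],
    temperature=0.9,
)
request.validate()
payload = asdict(request)  # plain dict, ready to hand to a client of your choice
```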

In MoodFood, we used prompting to instruct the PaLM API. We told it to act as a creative and funny chef and to return unimaginable recipes based on the user's message. We also asked it to structure its reply into the following parts: reaction, name, ingredients, instructions and description.

- Reaction: the direct humorous response to the user’s message, delivered in a polite but entertaining way.

- Name: the recipe name. We tell the PaLM API to generate the recipe name with polite puns that do not offend anyone.

- Ingredients: A list of ingredients with measurements

- Description: the food description generated by the PaLM API
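
Once the model replies in this shape, the application still has to check it before rendering. Below is a minimal sketch of that step, assuming the reply arrives as a JSON object whose keys match the part names above; the `MoodRecipe` class and the list types for ingredients and instructions are illustrative assumptions.

```python
import json
from dataclasses import dataclass


@dataclass
class MoodRecipe:
    reaction: str
    name: str
    ingredients: list[str]
    instructions: list[str]
    description: str


def parse_recipe(raw: str) -> MoodRecipe:
    """Parse model output into a MoodRecipe, failing loudly on missing keys."""
    data = json.loads(raw)
    required = ("reaction", "name", "ingredients", "instructions", "description")
    missing = [key for key in required if key not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return MoodRecipe(**{key: data[key] for key in required})
```

Rejecting incomplete replies early lets the app retry the formatting call instead of showing a half-filled recipe card.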

Third Party Integration

The PaLM API offers embedding services that facilitate the integration of the PaLM API with customer data. To get started, you need to set up an embedding database of the partner’s data using the PaLM API embedding services.

Once integrated, when users search for food- or recipe-related information, the PaLM API will search in the embedding space to locate the result that best matches their queries. Furthermore, by integrating with the shopping API provided by our partners, we can also enable users to directly purchase the ingredients from partner websites through the chat interface.
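
Searching an embedding space usually means finding the stored vector closest to the query vector, often by cosine similarity. The sketch below uses tiny made-up vectors and a hypothetical in-memory catalog in place of real embeddings from an embedding service, purely to show the lookup step.

```python
import math

# Toy three-dimensional vectors standing in for real embeddings (made up).
CATALOG = {
    "chicken soup": [0.9, 0.1, 0.0],
    "chocolate cake": [0.1, 0.9, 0.1],
    "green salad": [0.0, 0.2, 0.9],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)


def nearest(query_vec: list[float], catalog: dict[str, list[float]]) -> str:
    """Return the catalog entry whose vector is most similar to the query."""
    return max(catalog, key=lambda name: cosine(query_vec, catalog[name]))


best = nearest([0.8, 0.2, 0.1], CATALOG)
```

A production setup would store the partner's vectors in a vector database rather than scanning a dictionary, but the matching idea is the same.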

Partnerships

Swiggy, an Indian online food ordering and delivery platform, expressed their excitement when considering the use cases made possible by experiences like MoodFood.

“We're excited about the potential of Generative AI to transform the way we interact with our customers and merchants on our platform. MoodFood has the potential to help us go deeper into the products and services we offer, in a fun and engaging way.” - Madhusudhan Rao, CTO, Swiggy

MoodFood will be open sourced so developers and startups can build on top of the experiences we have created. Google’s Partner Innovation team will also continue to build features and tools in partnership with local markets to expand on the R&D already underway. View the project on GitHub here.

Acknowledgements

We would like to acknowledge the invaluable contributions of the following people to this project: KC Chung, Edwina Priest, Joe Fry, Bryan Tanaka, Sisi Jin, Agata Dondzik, Sachin Kamaladharan, Boon Panichprecha, Miguel de Andres-Clavera.
