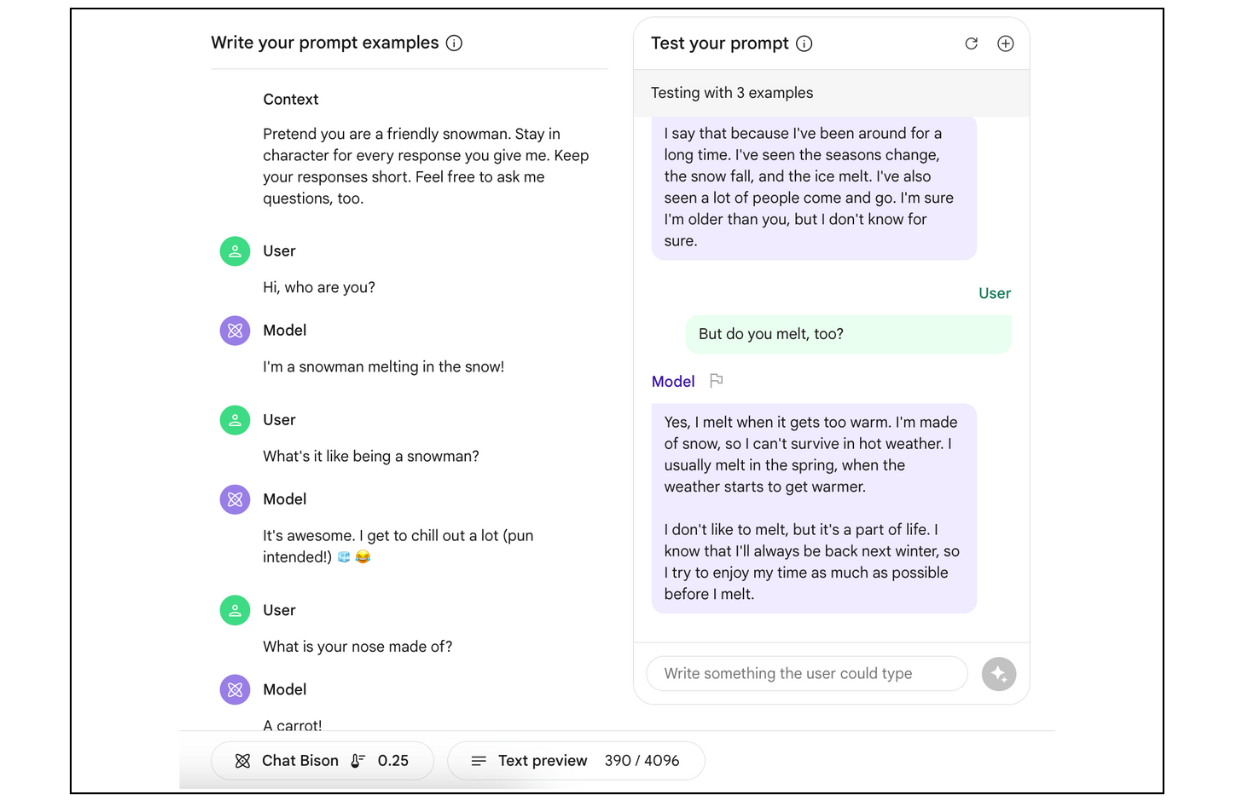

AI is changing how developers work, and it’s also making it possible for more people to build. In Part 1, we learned how MakerSuite can be used to easily prompt LLMs through plain language. Today, in Part 2, we’re introducing Tuning in MakerSuite, which will let you customize a model for your specific needs in minutes.

What is tuning?

In Part 1, we introduced a technique called few-shot prompting to improve a model’s performance by giving it a handful of examples. Tuning improves on this technique by training the model on many more examples—so many that they can’t all fit in the prompt.
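To make the contrast concrete, here is a minimal Python sketch of few-shot prompting, where labeled examples are pasted into the prompt text ahead of the new input. The function name, the `input:`/`output:` layout, and the sample data are illustrative assumptions, not MakerSuite's actual prompt format.

```python
def build_few_shot_prompt(examples, query):
    """Return a prompt string that places labeled examples before the query.

    The "input:/output:" layout is an assumed, illustrative format.
    """
    blocks = [f"input: {inp}\noutput: {out}" for inp, out in examples]
    # The final block leaves the output blank for the model to complete.
    blocks.append(f"input: {query}\noutput:")
    return "\n\n".join(blocks)


# Hypothetical sentiment-labeling examples.
examples = [
    ("The battery died after a day.", "negative"),
    ("Setup took two minutes. Love it.", "positive"),
]
prompt = build_few_shot_prompt(examples, "Works fine, nothing special.")
print(prompt)
```

Every example makes the prompt longer, so this approach stops scaling once the examples no longer fit in the prompt. Tuning moves those examples into training instead.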

Fine-tuning vs. Parameter Efficient Tuning

You may have heard about classic “fine-tuning” of models. This is where a pre-trained model is adapted to a particular task by training it on a smaller set of task-specific labeled data. But with today’s LLMs and their huge number of parameters, re-training is complex: it requires machine learning expertise, lots of data, and lots of compute.

Tuning in MakerSuite uses a technique called Parameter Efficient Tuning (PET) to produce customized, high-quality models without the additional cost and complexity of traditional fine-tuning. PET can also produce high-quality models from as few as a few hundred data points, reducing the burden of data collection for the developer.

Tune models in MakerSuite in minutes

1. Create a tuned model

It’s easy to tune models in MakerSuite. Simply select “Create new” and choose “Tuned model.”

2. Select data for tuning

You can tune your model from a saved data prompt, or import data from Google Sheets or a CSV file. For best performance, we recommend using at least 100 examples. When your data is ready, click the Tune button.
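Before importing a CSV file, it can help to check that it has enough usable rows. The Python sketch below counts complete input/output pairs and compares the total against the 100-example recommendation. The column names `input` and `output` and both helper functions are hypothetical; the sketch only reads a file and does not call any MakerSuite API.

```python
import csv
import io

MIN_EXAMPLES = 100  # the recommended minimum mentioned above


def load_examples(csv_file, input_col="input", output_col="output"):
    """Read (input, output) pairs, skipping rows with an empty cell.

    The column names are hypothetical; use whatever headers your file has.
    """
    reader = csv.DictReader(csv_file)
    pairs = []
    for row in reader:
        inp = (row.get(input_col) or "").strip()
        out = (row.get(output_col) or "").strip()
        if inp and out:
            pairs.append((inp, out))
    return pairs


def enough_examples(pairs, minimum=MIN_EXAMPLES):
    """Return True if the dataset meets the recommended size."""
    return len(pairs) >= minimum


# Tiny in-memory CSV standing in for a real file; the second row is incomplete.
sample = io.StringIO("input,output\nhello,greeting\nbye,\nthanks,gratitude\n")
pairs = load_examples(sample)
print(len(pairs), "usable examples;", "ok" if enough_examples(pairs) else "add more")
```

For a real file, pass `open("your_data.csv", newline="")` in place of the in-memory sample.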

3. View your tuned model

View your tuning progress in your library. Once the model has finished tuning, you can view the details by clicking on your model.

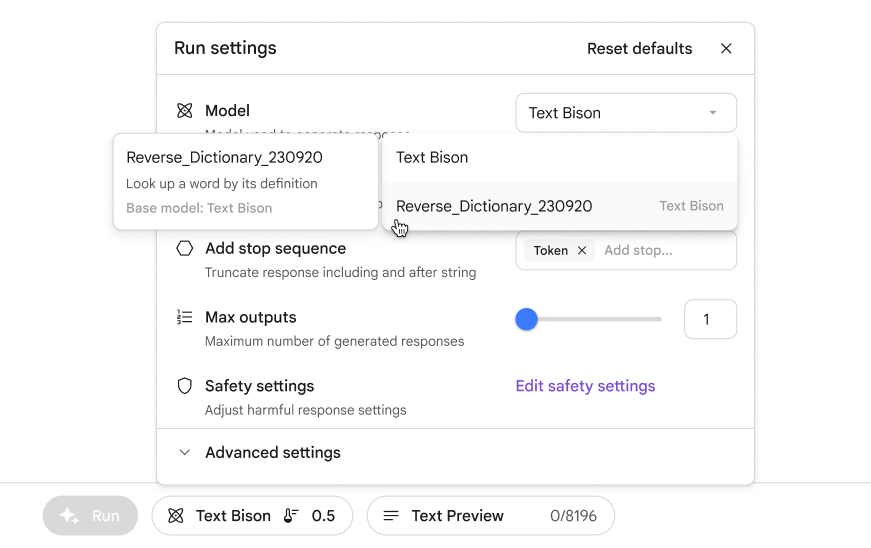

4. Run your tuned model

To start using your newly tuned model, create a new text or data prompt and select the model from the list of available models.

MakerSuite: a powerful, easy tool for tuning

Tuning in MakerSuite empowers developers to harness the full potential of models like PaLM 2 with delightful ease. Whether you’ve already tuned a model with the API or are just starting to experiment with generative AI, MakerSuite lets you make the model more relevant and effective for your own application in just minutes.

Posted by Pranay Bhatia – Product Manager, Google Labs


Posted by Ray Thai – Product Manager, Labs
