Posted by Alex Vanyo, Developer Relations Engineer

Watch faces are one of the most visible ways that people express themselves on their smartwatches, and they’re one of the best ways to display your brand to your users.

Watch Face Studio from Samsung is a great tool for creating watch faces without writing any code. For developers who want more fine-tuned control, we've recently launched the Jetpack Watch Face library, written from the ground up in Kotlin.

The stable release of the Jetpack Watch Face library includes all functionality from the Wearable Support Library and many new features that make it easier to support customization on the smartwatch and on the system companion app on mobile, including:

- Watch face styling which persists across both the watch and phone (with no need for your own database or companion app).

- Support for a WYSIWYG watch face configuration UI on the phone.

- Smaller, separate libraries (that only include what you need).

- Battery improvements through encouraging good battery usage patterns out of the box, such as automatically reducing the interactive frame rate when the battery is low.

- New screenshot APIs so users can see previews of their watch face changes in real time on both the watch and phone.

If you are still using the Wearable Support Library, we strongly encourage migrating to the new Jetpack libraries to take advantage of the new APIs and upcoming features and bug fixes.

Below is an example of configuring a watch face from the phone with no code written on or for the phone.

Editing a watch face using the Galaxy Wearable mobile companion app

If you use the Jetpack Watch Face library to save your watch face configuration options, the values are synced with the mobile companion app, and all of the cross-device communication is handled for you.

The mobile app automatically presents those options to the user in a simple, intuitive user interface where they can change them to whatever best suits their style. It also includes previews that update in real time.

Let’s dive into the API with an overview of the most important components for creating a custom watch face!

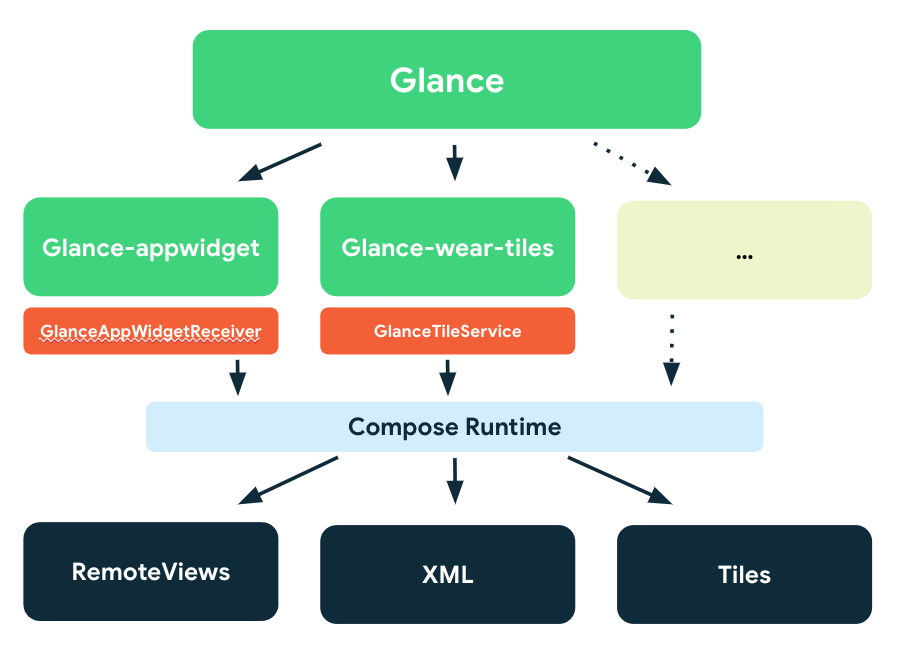

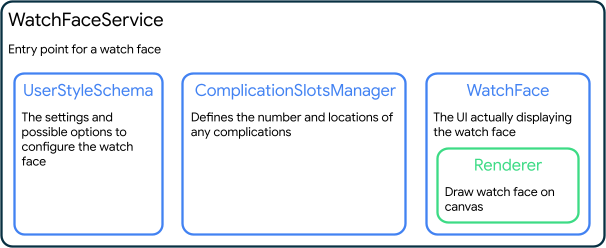

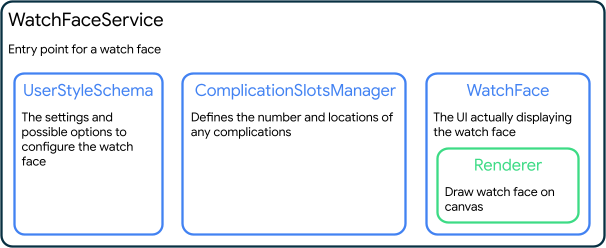

A subclass of WatchFaceService forms the entry point of any Jetpack watch face. Implementing a WatchFaceService requires creating three objects: a UserStyleSchema, a ComplicationSlotsManager, and a WatchFace:

Diagram showing the 3 main parts of a WatchFaceService

These three objects are created by overriding three abstract methods from WatchFaceService:

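```kotlin
import android.view.SurfaceHolder
import androidx.wear.watchface.ComplicationSlotsManager
import androidx.wear.watchface.WatchFace
import androidx.wear.watchface.WatchFaceService
import androidx.wear.watchface.WatchState
import androidx.wear.watchface.style.CurrentUserStyleRepository
import androidx.wear.watchface.style.UserStyleSchema

class CustomWatchFaceService : WatchFaceService() {

    /**
     * The specification of settings the watch face supports.
     * This is similar to a database schema.
     */
    override fun createUserStyleSchema(): UserStyleSchema =
        TODO("Define the user style settings")

    /**
     * The complication slot configuration for the watch face.
     */
    override fun createComplicationSlotsManager(
        currentUserStyleRepository: CurrentUserStyleRepository
    ): ComplicationSlotsManager =
        TODO("Define the complication slots")

    /**
     * The watch face itself, which includes the renderer for drawing.
     */
    override suspend fun createWatchFace(
        surfaceHolder: SurfaceHolder,
        watchState: WatchState,
        complicationSlotsManager: ComplicationSlotsManager,
        currentUserStyleRepository: CurrentUserStyleRepository
    ): WatchFace =
        TODO("Create the WatchFace and its renderer")
}
```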

Let’s take a more detailed look at each one of these in turn, and some of the other classes that the library creates on your behalf.

The UserStyleSchema defines the primary information source for a Jetpack watch face. The UserStyleSchema should contain a list of all customization settings available to the user, as well as information about what those options do and what the default option is. These settings can be boolean flags, lists, ranges, and more.

When you provide this schema, the library automatically keeps track of the user's changes to settings, whether they are made through the mobile companion app on a connected phone or in a custom editor activity on the smartwatch.

```kotlin
override fun createUserStyleSchema(): UserStyleSchema =
    UserStyleSchema(
        listOf(
            // Allows user to change the color styles of the watch face
            UserStyleSetting.ListUserStyleSetting(
                UserStyleSetting.Id(COLOR_STYLE_SETTING),
                // ...
            ),
            // Allows user to toggle on/off the hour pips (dashes around the outer edge of the watch face)
            UserStyleSetting.BooleanUserStyleSetting(
                UserStyleSetting.Id(DRAW_HOUR_PIPS_STYLE_SETTING),
                // ...
            ),
            // Allows user to change the length of the minute hand
            UserStyleSetting.DoubleRangeUserStyleSetting(
                UserStyleSetting.Id(WATCH_HAND_LENGTH_STYLE_SETTING),
                // ...
            )
        )
    )
```
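To make the idea of a schema with defaults concrete, here is a simplified, library-free model in plain Kotlin. It is only an illustration under our own assumptions: `StyleSetting`, `StyleSchema`, `defaultStyle`, and `isValid` are hypothetical names, not part of the Jetpack Watch Face API, and a style is modeled as a plain `Map<String, Any>` keyed by setting ID.

```kotlin
// Hypothetical, simplified model of a style schema; NOT the androidx.wear.watchface.style API.
sealed class StyleSetting {
    abstract val id: String

    // A choice among a fixed list of option IDs (like a color style).
    data class ListSetting(
        override val id: String,
        val options: List<String>,
        val default: String,
    ) : StyleSetting()

    // An on/off flag (like drawing the hour pips).
    data class BooleanSetting(
        override val id: String,
        val default: Boolean,
    ) : StyleSetting()

    // A value constrained to a closed range (like the minute hand length).
    data class DoubleRangeSetting(
        override val id: String,
        val min: Double,
        val max: Double,
        val default: Double,
    ) : StyleSetting()
}

// The schema is the list of every setting the watch face supports.
class StyleSchema(val settings: List<StyleSetting>) {
    init {
        require(settings.map { it.id }.toSet().size == settings.size) { "Setting IDs must be unique" }
    }

    // The style a new user starts with: every setting mapped to its default value.
    fun defaultStyle(): Map<String, Any> = settings.associate { setting ->
        setting.id to when (setting) {
            is StyleSetting.ListSetting -> setting.default
            is StyleSetting.BooleanSetting -> setting.default
            is StyleSetting.DoubleRangeSetting -> setting.default
        }
    }

    // Checks that a proposed value is allowed by the setting with the given ID.
    fun isValid(id: String, value: Any): Boolean =
        when (val setting = settings.firstOrNull { it.id == id }) {
            null -> false
            is StyleSetting.ListSetting -> value is String && value in setting.options
            is StyleSetting.BooleanSetting -> value is Boolean
            is StyleSetting.DoubleRangeSetting -> value is Double && value in setting.min..setting.max
        }
}
```

In the library itself, each setting also carries user-facing metadata such as a display name and description, which the companion app can use when presenting the options to the user.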

The current user style can be observed via the CurrentUserStyleRepository, which is created by the library based on the UserStyleSchema.

It provides a UserStyle, which is essentially a Map keyed by the settings defined in the schema:

```kotlin
Map<UserStyleSetting, UserStyleSetting.Option>
```

As the user’s preferences change, a MutableStateFlow of UserStyle will emit the latest selected options for all of the settings defined in the UserStyleSchema.

```kotlin
// collect is a suspending function, so call it from a coroutine
currentUserStyleRepository.userStyle.collect { newUserStyle ->
    // Update configuration based on user style
}
```
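Inside the collect block, one reasonable approach is to translate the style map into a small, strongly typed configuration object that the renderer reads on every frame. The sketch below is library-free and assumes, for simplicity, that the style is a `Map<String, Any>` keyed by setting ID rather than the library's `Map<UserStyleSetting, UserStyleSetting.Option>`; `WatchFaceConfig`, `toWatchFaceConfig`, and the ID strings are hypothetical.

```kotlin
// Hypothetical renderer configuration derived from a user style.
data class WatchFaceConfig(
    val colorStyleId: String,
    val drawHourPips: Boolean,
    // Assumed here to be a fraction of the watch face radius, between 0 and 1.
    val minuteHandLength: Double,
)

// Reads each (hypothetical) setting ID, falling back to the given defaults
// when a setting is missing or holds a value of an unexpected type.
fun Map<String, Any>.toWatchFaceConfig(defaults: WatchFaceConfig): WatchFaceConfig =
    WatchFaceConfig(
        colorStyleId = this["color_style"] as? String ?: defaults.colorStyleId,
        drawHourPips = this["draw_hour_pips"] as? Boolean ?: defaults.drawHourPips,
        // Clamps the hand length into [0, 1] so a bad value cannot draw off-screen.
        minuteHandLength = (this["minute_hand_length"] as? Double)
            ?.coerceIn(0.0, 1.0) ?: defaults.minuteHandLength,
    )
```

Keeping the conversion in one place means the drawing code never has to inspect the raw style map.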

Complications allow a watch face to display additional information from other apps on the watch, such as events, health data, or the day.

The ComplicationSlotsManager defines how many complications a watch face supports, and where they are positioned on the screen. To support changing the location or number of complications, the ComplicationSlotsManager also uses the CurrentUserStyleRepository.

```kotlin
override fun createComplicationSlotsManager(
    currentUserStyleRepository: CurrentUserStyleRepository
): ComplicationSlotsManager {
    val defaultCanvasComplicationFactory =
        CanvasComplicationFactory { watchState, listener ->
            // ...
        }

    val leftComplicationSlot = ComplicationSlot.createRoundRectComplicationSlotBuilder(
        id = 100,
        canvasComplicationFactory = defaultCanvasComplicationFactory,
        // ...
    )
        .setDefaultDataSourceType(ComplicationType.SHORT_TEXT)
        .build()

    val rightComplicationSlot = ComplicationSlot.createRoundRectComplicationSlotBuilder(
        id = 101,
        canvasComplicationFactory = defaultCanvasComplicationFactory,
        // ...
    )
        .setDefaultDataSourceType(ComplicationType.SHORT_TEXT)
        .build()

    return ComplicationSlotsManager(
        listOf(leftComplicationSlot, rightComplicationSlot),
        currentUserStyleRepository
    )
}
```
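The slot builder also takes the slot's bounds. In the official watch face sample these are expressed as fractions of the screen size (a RectF with coordinates between 0 and 1, wrapped in ComplicationSlotBounds), so one layout works on screens of different sizes. The plain-Kotlin sketch below shows only the underlying arithmetic of scaling fractional bounds to pixels; `FractionalRect`, `PixelRect`, and `toPixels` are hypothetical helpers, and the left and right fractions are illustrative values.

```kotlin
import kotlin.math.roundToInt

// Hypothetical bounds expressed as fractions (0.0 to 1.0) of the screen's width and height.
data class FractionalRect(val left: Float, val top: Float, val right: Float, val bottom: Float) {
    init {
        require(left in 0f..1f && right in 0f..1f && top in 0f..1f && bottom in 0f..1f) {
            "Fractional bounds must lie within [0, 1]"
        }
        require(left < right && top < bottom) { "Bounds must have a positive size" }
    }
}

// Hypothetical bounds in screen pixels.
data class PixelRect(val left: Int, val top: Int, val right: Int, val bottom: Int)

// Scales fractional bounds to a screen of the given size in pixels.
fun FractionalRect.toPixels(screenWidth: Int, screenHeight: Int): PixelRect = PixelRect(
    left = (left * screenWidth).roundToInt(),
    top = (top * screenHeight).roundToInt(),
    right = (right * screenWidth).roundToInt(),
    bottom = (bottom * screenHeight).roundToInt(),
)

// Illustrative layout: two slots side by side across the vertical middle of the screen.
val leftSlotBounds = FractionalRect(0.2f, 0.4f, 0.4f, 0.6f)
val rightSlotBounds = FractionalRect(0.6f, 0.4f, 0.8f, 0.6f)
```

On a 450 by 450 pixel screen, for example, the left slot occupies pixels 90 to 180 horizontally and 180 to 270 vertically.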

The WatchFace describes the type of watch face and how to draw it.

A WatchFace can be specified as digital or analog and can optionally have a tap listener for when the user taps on the watch face.

Most importantly, a WatchFace specifies a Renderer, which actually renders the watch face:

```kotlin
override suspend fun createWatchFace(
    surfaceHolder: SurfaceHolder,
    watchState: WatchState,
    complicationSlotsManager: ComplicationSlotsManager,
    currentUserStyleRepository: CurrentUserStyleRepository
): WatchFace = WatchFace(
    watchFaceType = WatchFaceType.ANALOG,
    renderer = TODO("Create the renderer, such as a Renderer.CanvasRenderer subclass")
)
```
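A tap listener is told where on the screen the user tapped, and the watch face then decides what, if anything, was hit. The sketch below shows a simple, library-free hit test that maps a tap position in pixels to one of several rectangular regions. `Region` and `regionAt` are hypothetical names, and the IDs 100 and 101 are reused from the complication example purely for illustration.

```kotlin
// Hypothetical tappable region in screen pixels, identified by an integer ID.
data class Region(val id: Int, val left: Int, val top: Int, val right: Int, val bottom: Int) {
    // Left/top edges are inclusive and right/bottom edges exclusive,
    // so regions that share an edge never both claim the same tap.
    fun contains(x: Int, y: Int): Boolean = x >= left && x < right && y >= top && y < bottom
}

// Returns the ID of the first region containing the tap, or null if the tap hit none of them.
fun regionAt(regions: List<Region>, x: Int, y: Int): Int? =
    regions.firstOrNull { it.contains(x, y) }?.id
```

Returning null for a miss lets the caller fall back to a default action, such as ignoring the tap.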

The renderer is the prettiest part of a watch face! Every watch face creates its own Renderer subclass that implements everything needed to actually draw the watch face to a canvas.

The renderer is in charge of combining the UserStyle (the map from CurrentUserStyleRepository), the complication information from ComplicationSlotsManager, the current time, and other state information to render the watch face.

```kotlin
class CustomCanvasRenderer(
    private val context: Context,
    surfaceHolder: SurfaceHolder,
    watchState: WatchState,
    private val complicationSlotsManager: ComplicationSlotsManager,
    currentUserStyleRepository: CurrentUserStyleRepository,
    canvasType: Int
) : Renderer.CanvasRenderer(
    surfaceHolder = surfaceHolder,
    currentUserStyleRepository = currentUserStyleRepository,
    watchState = watchState,
    canvasType = canvasType,
    // 16 ms between frames in interactive mode, or roughly 60 frames per second
    interactiveDrawModeUpdateDelayMillis = 16L
) {
    override fun render(canvas: Canvas, bounds: Rect, zonedDateTime: ZonedDateTime) {
        // Draw the watch face for the given time into the canvas!
    }

    override fun renderHighlightLayer(canvas: Canvas, bounds: Rect, zonedDateTime: ZonedDateTime) {
        // Draw the highlight layer, which editors use to highlight elements such as complication slots
    }
}
```
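For an analog face, much of the work in render comes down to turning zonedDateTime into hand angles. The sketch below does only that math in plain Kotlin with java.time, leaving the Canvas drawing out. `HandAngles`, `handAngles`, and `handEnd` are hypothetical helpers; angles are in degrees clockwise from 12 o'clock, and the hand length is assumed to be a fraction of the face's radius, in the spirit of the minute hand length setting above.

```kotlin
import java.time.ZonedDateTime
import kotlin.math.cos
import kotlin.math.sin

// Hand angles in degrees, measured clockwise from the 12 o'clock position.
data class HandAngles(val hour: Double, val minute: Double, val second: Double)

fun handAngles(time: ZonedDateTime): HandAngles {
    // Fractional seconds make the second hand sweep smoothly instead of ticking.
    val seconds = time.second + time.nano / 1_000_000_000.0
    val minutes = time.minute + seconds / 60.0
    val hours = (time.hour % 12) + minutes / 60.0
    return HandAngles(
        hour = hours * 30.0,    // 360 degrees / 12 hours
        minute = minutes * 6.0, // 360 degrees / 60 minutes
        second = seconds * 6.0, // 360 degrees / 60 seconds
    )
}

// Endpoint of a hand whose length is a fraction of the radius, in screen
// coordinates where y grows downward, as on an Android Canvas.
fun handEnd(
    centerX: Double,
    centerY: Double,
    radius: Double,
    lengthFraction: Double,
    angleDegrees: Double,
): Pair<Double, Double> {
    val radians = Math.toRadians(angleDegrees)
    val length = radius * lengthFraction
    return Pair(centerX + length * sin(radians), centerY - length * cos(radians))
}
```

At 15:30:00, for example, the minute hand points straight down (180 degrees) and the hour hand sits halfway between 3 and 4 (105 degrees). For a ticking rather than sweeping second hand, drop the nanosecond term.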

In addition to the system WYSIWYG editor on the phone, we strongly encourage supporting configuration on the smartwatch to allow the user to customize their watch face without requiring a companion device.

To support this, a watch face can provide a configuration Activity and allow the user to change settings using an EditorSession returned from EditorSession.createOnWatchEditorSession. As the user makes changes, calling EditorSession.renderWatchFaceToBitmap provides a live preview of the watch face in the editor Activity.
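The editing flow (the user changes settings, sees a live preview, then keeps or abandons the changes) can be modeled in a few lines of plain Kotlin. This is a conceptual sketch only: `DraftEditor`, `set`, `commit`, and `discard` are hypothetical and do not mirror EditorSession's actual API, and the `onPreview` callback stands in for re-rendering a preview, for example with EditorSession.renderWatchFaceToBitmap.

```kotlin
// Hypothetical model of an editing session over a style map keyed by setting ID.
class DraftEditor(
    initialStyle: Map<String, Any>,
    // Called with the draft style after every change so the UI can refresh its preview.
    private val onPreview: (Map<String, Any>) -> Unit,
) {
    // The style the watch face is currently using.
    var committedStyle: Map<String, Any> = initialStyle
        private set

    // The style being edited; changes appear only in previews until committed.
    var draftStyle: Map<String, Any> = initialStyle
        private set

    fun set(settingId: String, value: Any) {
        require(settingId in committedStyle) { "Unknown setting: $settingId" }
        draftStyle = draftStyle + (settingId to value)
        onPreview(draftStyle)
    }

    // Applies the draft, as when the user confirms and closes the editor.
    fun commit() {
        committedStyle = draftStyle
    }

    // Throws the draft away, as when the user backs out of the editor.
    fun discard() {
        draftStyle = committedStyle
        onPreview(draftStyle)
    }
}
```

Separating the draft from the committed style is what lets the preview update freely without the live watch face changing until the user confirms.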

To see how the whole puzzle fits together to tell the time, check out the watchface sample on GitHub. To learn more about developing for Wear OS, check out the developer website.
