Since we launched Coral back in March 2019, we’ve added a number of new product form factors to accommodate the many ways users are adding on-device ML to their products. We've also streamlined the ML workflow and added capabilities like model pipelining with multiple Edge TPUs for an easier and more robust developer experience. Along the way, we’ve helped enable amazing use cases, from smart water meters that prevent water loss with Olea Edge, to systems for improving harvest yield with Farmwave, to noise cancellation in Google’s own Series One meeting kits.

This week, we’ll begin shipping the Coral Accelerator Module, a multi-chip module that combines the Edge TPU and its power circuitry into a solderable package. The module exposes PCIe and USB 2.0 interfaces, which make it even easier to integrate Coral into custom designs. Several companies are already taking advantage of its compact size and capabilities in new products coming to market. Read more about how Gumstix, STD, Siana Systems and IEI are using our module.

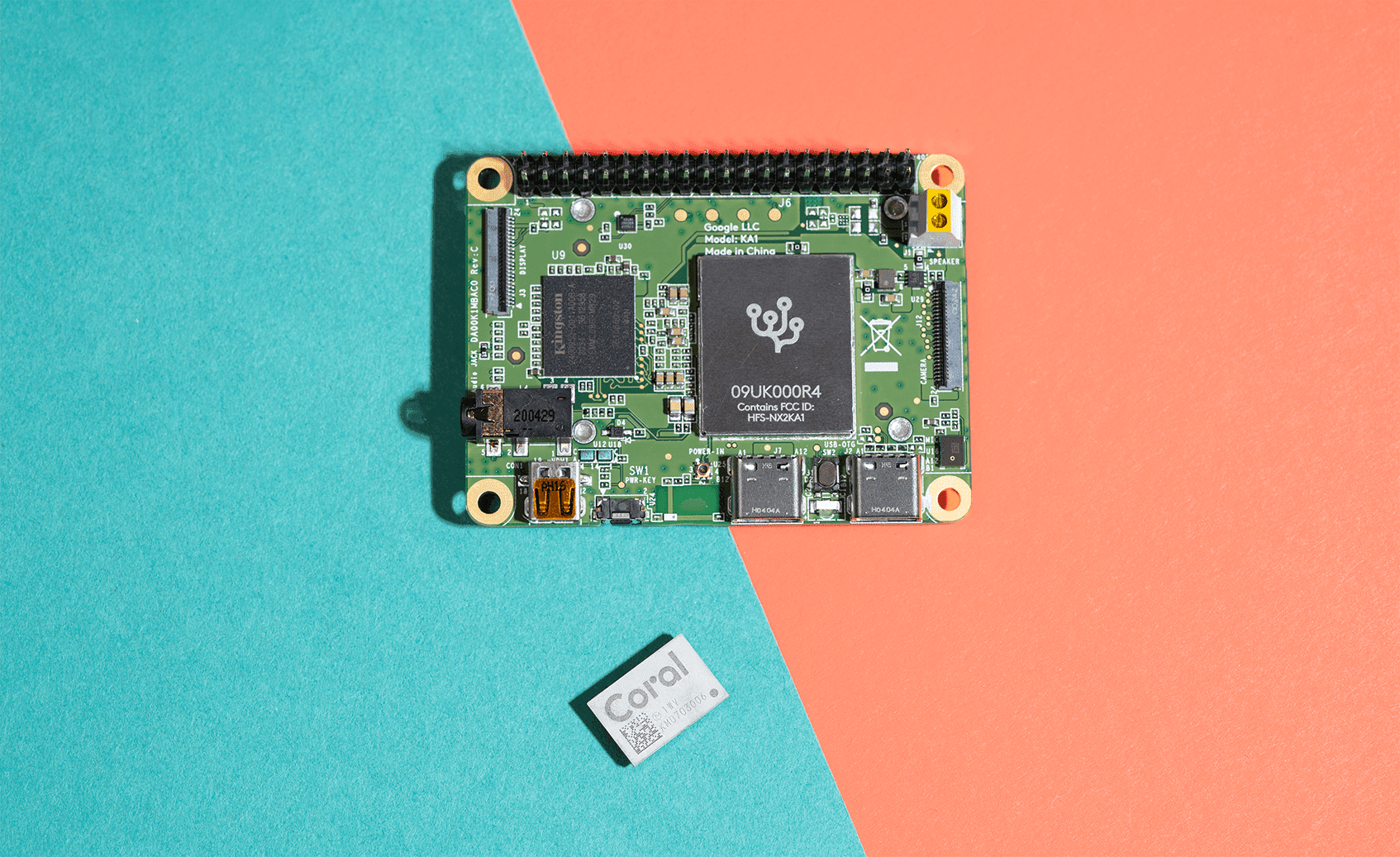

And in December, we’ll begin shipping the Dev Board Mini, a smaller, more power-efficient, and value-oriented board that brings forward a more traditional, flattened single-board computer design. The Dev Board Mini pairs a MediaTek 8167 SoC with the Coral Accelerator Module over USB 2.0 and is a great way to evaluate the module as the center of a project or deployment.

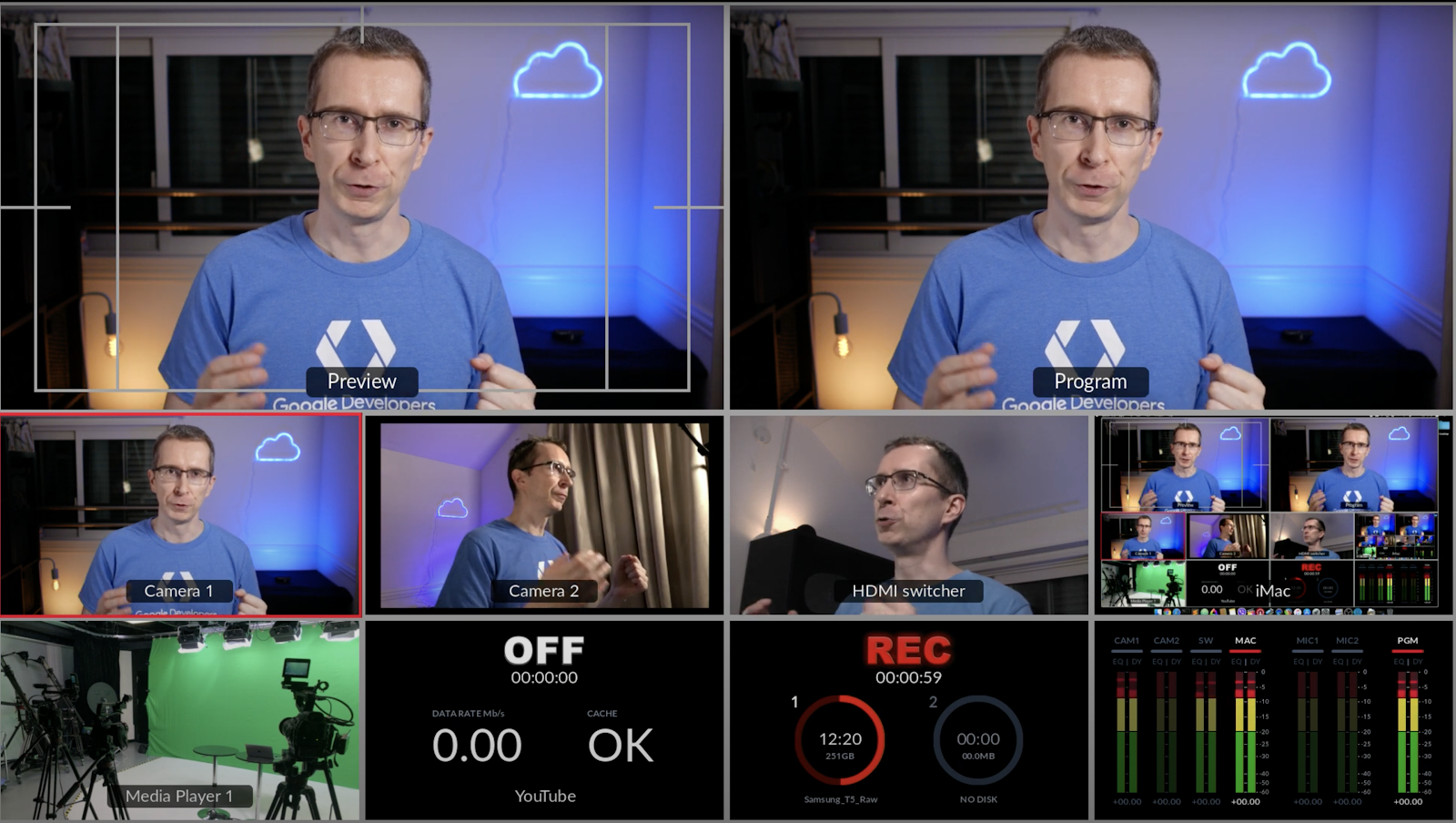

You can see the new Dev Board Mini and Accelerator Module in action in the latest episode of Level Up, where Markku Lepisto controls his studio lights with speech commands.

To get updates on when the board will be available for purchase and other Coral news, sign up for our newsletter.

Developing for the edge, now simplified

We recently announced a new version of the Coral ML APIs and tools. This release brings the C++ API into parity with Python and makes it more modular, reusable and performant. At the same time, it eliminates unnecessary abstractions and surfaces, replacing them with native TensorFlow Lite APIs. This release also graduates the Model Pipelining API out of beta and introduces a new model partitioner that automatically partitions models based on profiling, delivering up to 10x better performance.
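To make the idea of profiling-based partitioning concrete, here is a minimal sketch in plain Python. It is not the Coral partitioner or its algorithm; it only illustrates the underlying goal. It assumes you already have a measured latency for each layer (the numbers below are made up) and splits the layers into contiguous segments, one per Edge TPU, so that the slowest segment is as fast as possible. In a pipeline, that slowest segment bounds overall throughput. The names `partition_layers` and `bottleneck_ms` are hypothetical.

```python
from typing import List


def partition_layers(latencies_ms: List[float], num_segments: int) -> List[List[int]]:
    """Split contiguous layers into num_segments groups, minimizing the slowest group.

    Returns a list of segments, each a list of layer indices.
    """
    n = len(latencies_ms)
    if not 1 <= num_segments <= n:
        raise ValueError("num_segments must be between 1 and the number of layers")

    # prefix[i] is the total latency of layers 0..i-1.
    prefix = [0.0]
    for t in latencies_ms:
        prefix.append(prefix[-1] + t)

    inf = float("inf")
    # best[k][i]: smallest achievable bottleneck when the first i layers use k segments.
    best = [[inf] * (n + 1) for _ in range(num_segments + 1)]
    # cut[k][i]: index where the last of those k segments starts.
    cut = [[0] * (n + 1) for _ in range(num_segments + 1)]
    best[0][0] = 0.0

    for k in range(1, num_segments + 1):
        for i in range(k, n + 1):
            for j in range(k - 1, i):
                cost = max(best[k - 1][j], prefix[i] - prefix[j])
                if cost < best[k][i]:
                    best[k][i] = cost
                    cut[k][i] = j

    # Walk the recorded cut points backwards to recover the segments.
    segments: List[List[int]] = []
    i = n
    for k in range(num_segments, 0, -1):
        j = cut[k][i]
        segments.append(list(range(j, i)))
        i = j
    segments.reverse()
    return segments


def bottleneck_ms(latencies_ms: List[float], segments: List[List[int]]) -> float:
    """Latency of the slowest segment, which limits pipeline throughput."""
    return max(sum(latencies_ms[i] for i in seg) for seg in segments)


if __name__ == "__main__":
    profiled = [4, 1, 1, 2, 4]  # illustrative per-layer latencies, not real measurements
    plan = partition_layers(profiled, 2)
    print(plan, bottleneck_ms(profiled, plan))
```

A naive split by layer count would ignore how uneven layer costs can be; balancing on measured latency is what keeps every stage of the pipeline busy.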

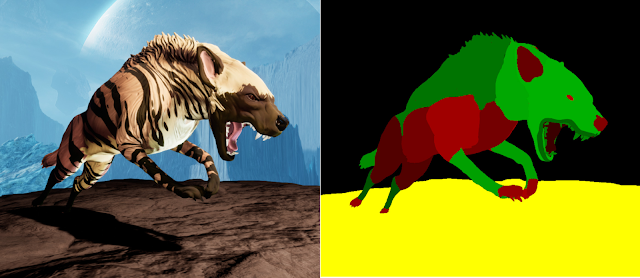

We’ve added a pre-trained version of MobileDet — a state-of-the-art object detection model for mobile systems — into our models portfolio. We’re migrating our model-development workflow to TensorFlow 2, and we’re including a handful of updated or new models based on the TF2 Keras framework. For details, check out the full announcement on the TensorFlow blog.

We’re also excited to see great developer tools coming from our ecosystem partners. For example, PerceptiLabs offers a visual API for building TensorFlow models and recently published a new demo that trains a machine learning model, optimized for the edge with Coral, to identify sign language.

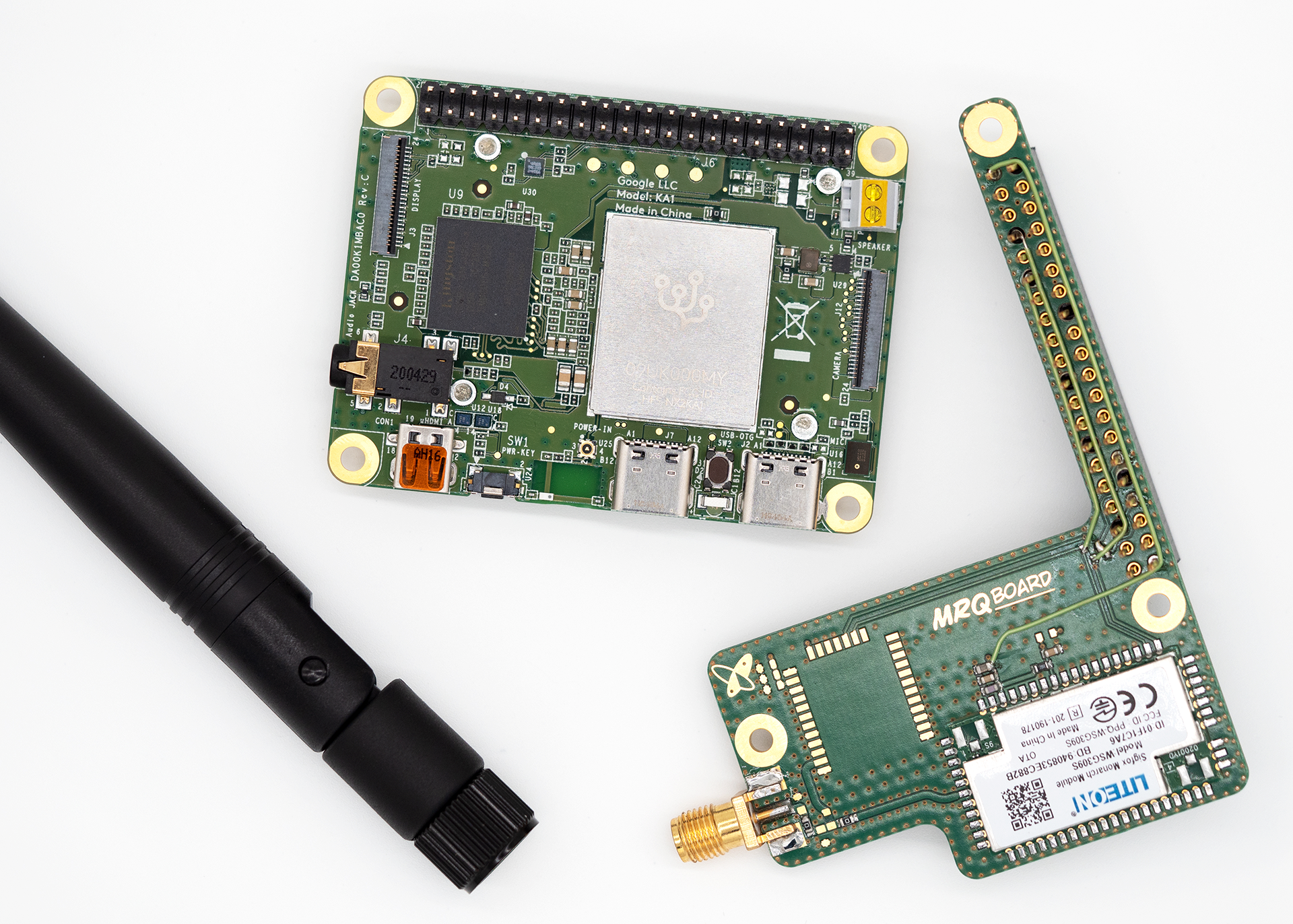

The MRQ design from SigFox enables prototyping at the edge for low-bandwidth IoT solutions with Coral

SigFox also released a radio transceiver board that stacks on either the Coral Dev Board or Dev Board Mini. This allows small data payloads to be transmitted across low-power, long-range radio networks for use cases like smart cities, fleet management, asset tracking, agriculture and energy. The PCB design will be offered as a free download on SigFox’s website. Google Cloud Solutions Architect Markku Lepisto will present the new design today, in the opening keynote at SigFox Connect.
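As a rough illustration of what a "small data payload" means in practice, the sketch below packs a single on-device detection result into a few bytes using Python's standard `struct` module. Everything here is an assumption for illustration: the 12-byte uplink budget, the field layout, and the function names `pack_detection` and `unpack_detection` are hypothetical and are not taken from the MRQ design or any SigFox API.

```python
import struct

# Assumed uplink budget for this sketch; check your network's actual limit.
MAX_UPLINK_BYTES = 12

# Hypothetical big-endian layout (10 bytes total):
#   uint32 timestamp (seconds), uint16 sequence number,
#   uint8 class id, uint8 confidence scaled to 0-255, uint16 object count
_FORMAT = ">IHBBH"


def pack_detection(timestamp_s: int, sequence: int, class_id: int,
                   confidence: float, count: int) -> bytes:
    """Encode one detection summary into a compact binary payload."""
    clamped = max(0.0, min(1.0, confidence))
    scaled = round(clamped * 255)
    payload = struct.pack(_FORMAT, timestamp_s, sequence, class_id, scaled, count)
    if len(payload) > MAX_UPLINK_BYTES:
        raise ValueError("payload exceeds the assumed uplink budget")
    return payload


def unpack_detection(payload: bytes):
    """Decode a payload produced by pack_detection."""
    timestamp_s, sequence, class_id, scaled, count = struct.unpack(_FORMAT, payload)
    return timestamp_s, sequence, class_id, scaled / 255, count


if __name__ == "__main__":
    message = pack_detection(1600000000, 7, 3, 0.5, 42)
    print(len(message), unpack_detection(message))
```

Running inference on the device and sending only a summary like this, rather than images, is what makes a low-bandwidth radio link workable.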

Customers with a Coral edge

The tool, from Farmwave, includes custom-developed ML models, a harvester-mounted box with cameras, an in-cab display, and on-device AI acceleration from Coral.

Just in time for harvest, we wanted to share a story about how Farmwave is using Coral to improve the efficiency of farm equipment and reduce food waste. Traditional yield loss analysis involves hand-counting grains of corn left on the ground mid-harvest. It’s a time- and labor-intensive task, and not feasible for farmers who measure the value of their half-million-dollar combines in minutes spent running them.

By leveraging Coral’s on-device AI capabilities, Farmwave was able to build a system that automates the count while the machine is running. This allows farmers to make real-time adjustments to harvesting machines in response to conditions in the field, which can make a big difference in yield.

Kura Sushi designed their intelligent QA system using a Raspberry Pi paired with the Coral USB Accelerator

Kura Revolving Sushi Bar in Japan has always been committed to the highest standards of health and safety for its customers. Known for its tech-forward approach, Kura has dabbled in sushi-making robots, an automated prize machine called Bikkura-pon, and a patented dome-shaped dish cover, aptly dubbed Mr. Fresh. Most recently, Kura has used Coral to develop an AI-powered system that not only improves efficiency for a better customer experience, but also enables better tracking to prevent foodborne illnesses.

Making AI more accessible

While this year has presented the world with many obstacles, we’ve been impressed by the new ideas and innovations coming forward through technology. By providing the necessary tools and technology for edge AI, we strive to empower society to create affordable, adaptable, and intelligent systems.

We are excited to share all that Coral has to offer as we evolve our platform. For a list of worldwide distributors, system integrators and partners, visit the Coral partnerships page.

Please visit Coral.ai to discover more about our edge ML platform and share your feedback at [email protected]. To receive future Coral updates directly in your inbox, sign up for our newsletter.