To celebrate the 45th anniversary of “SPACE INVADERS,” we collaborated with TAITO, the Japanese developer of the original arcade game, and UNIT9 to launch “SPACE INVADERS: World Defense,” an immersive game that takes advantage of the most advanced location-based AR technology. Players around the world can go outside to explore their local neighborhoods, defend the Earth from virtual Space Invaders that spawn from nearby structures, and score points by taking them down – all with augmented reality.

The game is powered by our latest ARCore technology: the Geospatial API, the Streetscape Geometry API, and Geospatial Creator. We’re excited to take you behind the scenes of how the game was developed and how we used our newest features and tools to design first-of-its-kind procedural, global AR gameplay.

Geospatial API: Turn the world into a playground

Geospatial API enables you to attach content remotely to any area mapped by Google Street View and create richer and more robust immersive experiences linked to real-world locations on a global scale. SPACE INVADERS: World Defense is available in over 100 countries in areas with high Visual Positioning Service (VPS) coverage in Street View, adapting the gameplay to busy urban environments as well as smaller towns and villages.

For players who live in areas without VPS coverage, we have recently updated the game to include our new mode called Indoor Mode, which allows you to defend the Earth from Space Invaders in any setting or location - indoors or outdoors.

Figure: The new Indoor Mode in Space Invaders brings the immersive gameplay to any indoor building setting

Creating the initial user flow

ARCore Geospatial API uses camera images from the user’s device to scan for feature points and compares those to images from Google Street View in order to precisely position the device in real-world space.

Figure: Geospatial API is based on VPS with tens of billions of images in Street View to enable developers to build world-anchored experiences remotely in over 100 countries

This requires the user to hold up their phone and pan around the area so that enough data is collected to position them accurately. To get users to scan the area, we ask them to keep the spaceship in the camera’s field of view as it moves.

Figure: To get started, follow the spaceship to scan your local surroundings

Using this user flow, we continually check whether the Geospatial API has gathered enough data for a high quality experience:

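    // Assumes earthManager is an AREarthManager reference; the method wrapper is added for completeness.
    bool IsGeospatialPoseAccurate()
    {
        if (earthManager.EarthTrackingState == TrackingState.Tracking)
        {
            var yawAcc = earthManager.CameraGeospatialPose.OrientationYawAccuracy;
            var horiAcc = earthManager.CameraGeospatialPose.HorizontalAccuracy;
            bool yawIsAccurate = yawAcc <= 5;          // degrees
            bool horizontalIsAccurate = horiAcc <= 10; // meters
            return yawIsAccurate && horizontalIsAccurate;
        }
        // Not tracking yet, so keep scanning.
        return false;
    }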


Transforming the environment into the playground

After scanning the nearby area, the game uses mesh data from the Streetscape Geometry API to procedurally adapt the gameplay to each location. Every real-world location has its own topography and city layout, so the surroundings shape each play session in their own way.

Figure: Gameplay is varied depending on your location, from towns in the Czech Republic (left) to cities in New York (right)

In the game, SPACE INVADERS can spawn from buildings, so we constructed test cases using building geometry obtained from different parts of the world. This helped ensure that the game performs well in diverse environments, from local villages to bustling cities.

Figure: A visualization of how the algorithm would place portals in the real world

Entering the Invader’s dimension

From our research studies, we learned that it can be tiring for users to keep holding their hands up for a prolonged period of time for an augmented reality experience. This knowledge influenced our gameplay development - we created the Invader’s dimension to give players a chance to relax their phone arm and improve user comfort.

Our favorite ‘wow’ moment that really shows you the power of the Geospatial API is the transition between real-world AR and virtually generated, 3D dimensions.

Figure: Gameplay transition from real-world AR to 3D dimension

This effect is achieved by blending the camera feed with the virtual environment shader that renders the buildings and terrain in the distinct wireframe style.
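As a rough illustration of the blending idea, here is a minimal Python sketch of a per-pixel crossfade. It is not the game's shader: the function names `smoothstep` and `blend_pixel`, the `progress` parameter, and the choice of a smoothstep easing curve are all assumptions made for this example.

```python
def smoothstep(t):
    """Ease a 0..1 transition value so the fade starts and ends gently."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)


def blend_pixel(camera_rgb, virtual_rgb, progress):
    """Linearly mix one camera pixel with one virtual-environment pixel.

    progress = 0.0 shows only the camera feed, 1.0 only the virtual scene.
    (Hypothetical helper; a real shader would do this on the GPU.)
    """
    w = smoothstep(progress)
    return tuple(c * (1.0 - w) + v * w for c, v in zip(camera_rgb, virtual_rgb))


# Halfway through the transition, both sources contribute equally.
halfway = blend_pixel((1.0, 0.5, 0.0), (0.0, 1.0, 0.0), 0.5)
```

Driving `progress` from 0 to 1 over a second or so would give a gradual handover from the camera image to the wireframe world.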

Figure: The Invader’s dimension appears around the player in the Unity Editor, seamlessly transitioning between the two modes

After the player enters the Invader’s dimension, the player’s spaceship flies through an algorithmically generated path through their local neighborhood. This is done by creating a depth image of the user’s environment from an overhead camera. In this image, the red channel represents buildings and the blue channel represents space that could potentially be used for the flight path. This image is then used to generate a grid with points that the path should follow, and an A* search algorithm is used to solve for a path that follows all the points.
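The grid search described above can be sketched in a few lines of Python. This is an illustrative sketch, not the game's Unity code. It assumes the overhead image has already been reduced to a small grid where `#` marks a building cell (the red channel) and `.` marks flyable space (the blue channel), that movement is 4-connected, and that the path visits the waypoints in order by chaining one A* search per pair. The names `astar`, `path_through`, `GRID`, and `WAYPOINTS` are hypothetical.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path from start to goal over free cells, or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance never overestimates for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry, a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc] == "#":
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None


def path_through(grid, waypoints):
    """Chain A* searches so the path visits every waypoint in order."""
    full = [waypoints[0]]
    for a, b in zip(waypoints, waypoints[1:]):
        leg = astar(grid, a, b)
        if leg is None:
            return None
        full.extend(leg[1:])  # skip the shared endpoint
    return full


# '#' = building, '.' = space the ship may fly through (assumed encoding).
GRID = [
    "....#",
    ".##.#",
    ".#...",
    ".#.#.",
    "...#.",
]
WAYPOINTS = [(0, 0), (2, 2), (4, 4)]  # (row, column) cells
route = path_through(GRID, WAYPOINTS)
```

Here `route` is a list of `(row, column)` cells that starts at the first waypoint, passes through the second, and ends at the third while avoiding every building cell.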

Finally, the generated A* path is post-processed to remove jitter, round off sharp turns, and avoid collisions.

To smooth out the spaceship’s pathway, we first remove jitter by sampling the path over a set interval of nodes. Then, we determine whether there are any sharp turns by analyzing the angles along the path. If a sharp turn is present, we introduce two additional points to round it out. Lastly, we check whether the smoothed path would collide with any obstacles and, if so, adjust it to fly over them.
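The three post-processing steps can be sketched as follows. This is a simplified Python illustration under stated assumptions, not the game's implementation. The sampling interval, the 60 degree threshold, the `cut` fraction, the cruise altitude, and the clearance are made-up values; replacing a sharp corner with two set-back points is one plausible reading of "two additional points"; and the collision step only checks the path points themselves, not the segments between them. All function names are hypothetical.

```python
import math


def subsample(path, step):
    """Keep every `step`-th node plus the final node to drop grid jitter."""
    kept = path[::step]
    if kept[-1] != path[-1]:
        kept.append(path[-1])
    return kept


def turn_angle(a, b, c):
    """Change of heading in degrees at b when travelling a -> b -> c."""
    h1 = math.atan2(b[1] - a[1], b[0] - a[0])
    h2 = math.atan2(c[1] - b[1], c[0] - b[0])
    diff = abs(h2 - h1)
    return math.degrees(min(diff, 2 * math.pi - diff))


def lerp(p, q, t):
    """Point a fraction t of the way from p to q."""
    return (p[0] + (q[0] - p[0]) * t, p[1] + (q[1] - p[1]) * t)


def round_sharp_turns(path, max_turn_deg=60.0, cut=0.25):
    """Replace each sharp corner with two points set back along its segments."""
    if len(path) < 3:
        return list(path)
    out = [path[0]]
    for a, b, c in zip(path, path[1:], path[2:]):
        if turn_angle(a, b, c) > max_turn_deg:
            out.append(lerp(b, a, cut))  # just before the corner
            out.append(lerp(b, c, cut))  # just after the corner
        else:
            out.append(b)
    out.append(path[-1])
    return out


def lift_over_obstacles(path, obstacle_height, cruise_alt=10.0, clearance=5.0):
    """Give each point an altitude, climbing where an obstacle is taller."""
    return [(x, y, max(cruise_alt, obstacle_height(x, y) + clearance))
            for x, y in path]


# A jagged L-shaped grid path with a 90 degree corner at (4, 0).
raw = [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0), (4, 1), (4, 2), (4, 3), (4, 4)]
sparse = subsample(raw, 2)
rounded = round_sharp_turns(sparse)


def tower(x, y):
    """Hypothetical 20-unit-tall building covering x in [3, 5], y in [1, 3]."""
    return 20.0 if 3 <= x <= 5 and 1 <= y <= 3 else 0.0


flight = lift_over_obstacles(rounded, tower)
```

In this example the single 90 degree corner becomes two 45 degree turns, and the one point that sits above the hypothetical building is raised to clear it.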

Figure: A visualization of the depth map and a generated sample path in the Invader’s dimension

Creating a global gaming experience

A key takeaway from building the game was that the complexity of the contextual generation required worldwide testing. With Unity, we brought multiple environments into test cases, which allowed us to rapidly iterate and validate changes to these algorithms. This gave us confidence to deploy the game globally.

Visualizing SPACE INVADERS using Geospatial Creator

We used Geospatial Creator, powered by ARCore and Photorealistic 3D Tiles from Google Maps Platform, to preview in Unity how virtual content, such as Space Invaders, would appear next to specific landmarks in Tokyo.

Figure: With Photorealistic 3D Tiles, we were able to visualize Invaders in specific locations, including the Tokyo Tower in Japan

Future updates and releases

Since the game’s launch, we have heard our players’ feedback and have been actively updating and improving the gameplay experience.

- We have added a new gameplay mode, Indoor Mode, which allows all players without VPS coverage or players who do not want to use AR mode to experience the game.

- To encourage users to play the game in AR, scores have been rebalanced to reward players who play outside more than players who play indoors.

Download the game on Android or iOS today and join the ranks of an elite Earth defender force to compete in your neighborhood for the highest score. For the latest game updates, follow us on Twitter (@GoogleARVR) to hear how we are improving the game. Plus, visit our ARCore and Geospatial Creator websites to learn how to get started building with Google’s AR technology.

Posted by Dereck Bridie, Developer Relations Engineer, ARCore and Bradford Lee, Product Marketing Manager, Augmented Reality

Posted by Dereck Bridie, Developer Relations Engineer, ARCore and Bradford Lee, Product Marketing Manager, Augmented Reality

Posted by Serban Constantinescu, Product Manager

Posted by Serban Constantinescu, Product Manager

Posted by Kübra Zengin, North America GDSC Regional Lead

Posted by Kübra Zengin, North America GDSC Regional Lead

Posted by Miguel Guevara, Product Manager, Privacy and Data Protection Office

Posted by Miguel Guevara, Product Manager, Privacy and Data Protection Office

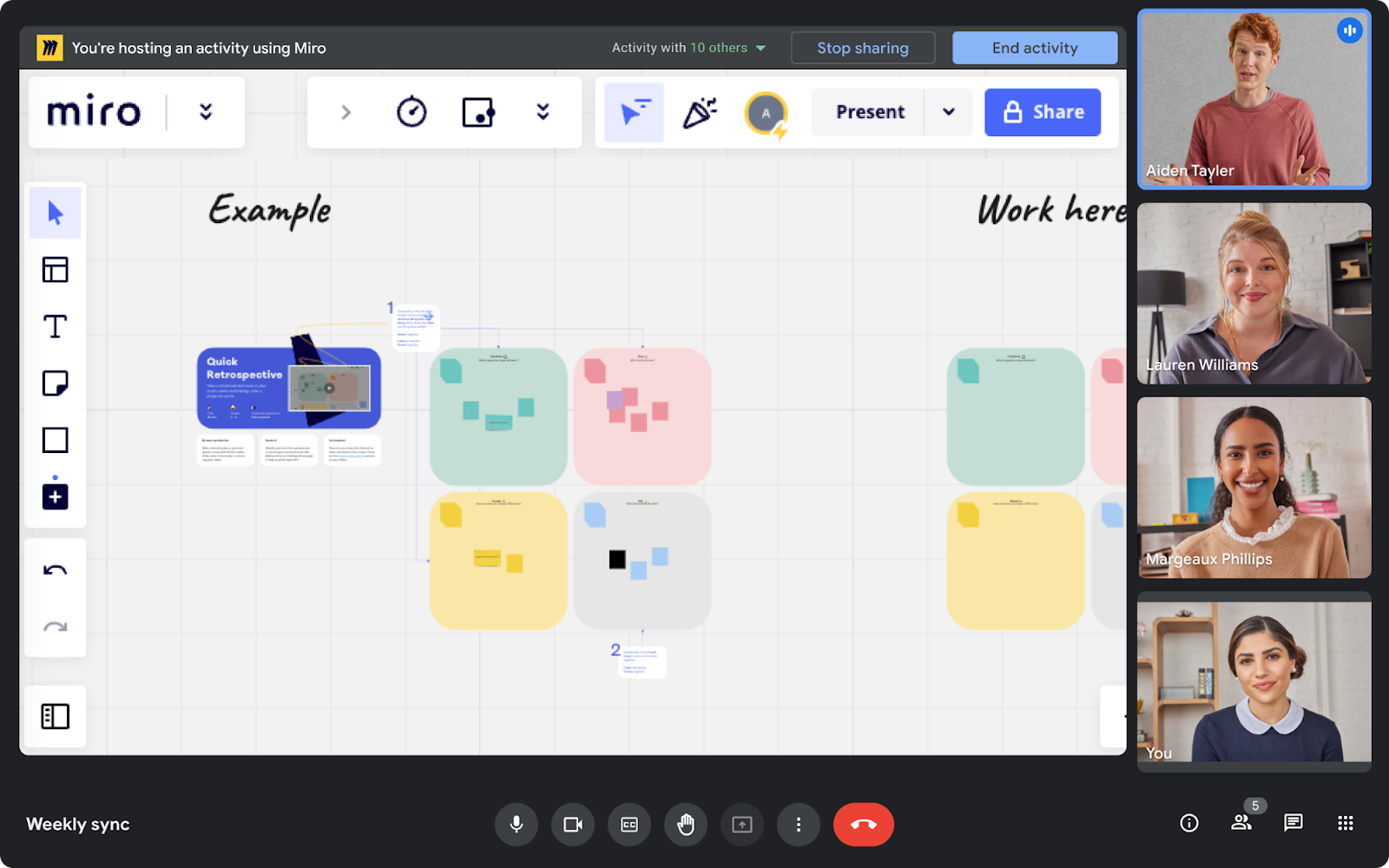

Posted by Simon Tokumine, Director of Product Management

Posted by Simon Tokumine, Director of Product Management

Posted by

Posted by

.png)

.png)

.png)

Posted by

Posted by

Posted by the Android team

Posted by the Android team

Posted by Rachel Francois, Global Program Manager, Google Developer Student Clubs

Posted by Rachel Francois, Global Program Manager, Google Developer Student Clubs