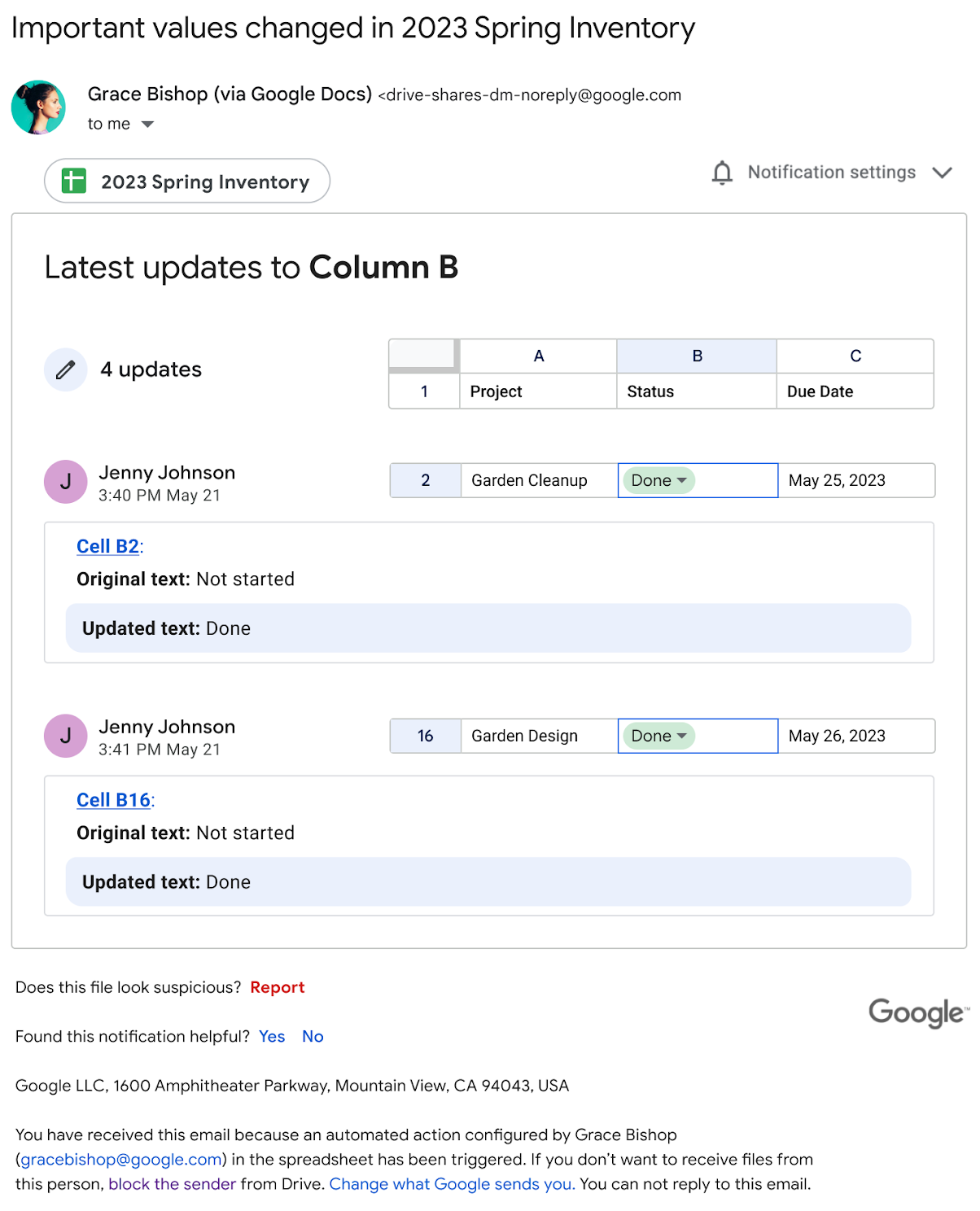

Stay up to date on important changes in your Google Sheets

This announcement was part of Google Cloud Next ‘24. Visit the Workspace Blog to learn more about the next wave of innovations in Workspace, including enhancements to Gemini for Google Workspace.

What’s changing

Who’s impacted

Why it matters

- Tasks have changed status or owner in a project tracker

- Items reach a certain stock level in an inventory tracker

- A number drops below a certain value in a forecast analysis

Getting started

- Admins: There is no admin control for this feature.

- End users:

  - If you own or have edit-access to a Sheet, you can set notifications when:

    - A range of cells changes value

    - A range of cells matches a particular condition

  - To set conditional notifications, open your spreadsheet, go to Tools > Conditional notifications > Add rule. You can also right-click directly on a spreadsheet and select Conditional notifications.

  - Note: The rules are assigned default names automatically. However, you can update the name of the rule by utilizing the text editor. Visit the Help Center to learn how to use conditional notifications.
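To make the two trigger types concrete, here is a minimal sketch in plain Python. It is not the Sheets implementation and does not call any Google API: the function names `changed_cells` and `cells_matching` are hypothetical, and a range is modeled simply as a dictionary from cell address to value.

```python
from typing import Callable, Dict, List

Cells = Dict[str, object]  # hypothetical model: cell address -> value


def changed_cells(before: Cells, after: Cells) -> List[str]:
    """Trigger type 1: addresses whose value differs between two snapshots."""
    addresses = sorted(set(before) | set(after))
    return [a for a in addresses if before.get(a) != after.get(a)]


def cells_matching(cells: Cells, condition: Callable[[object], bool]) -> List[str]:
    """Trigger type 2: addresses whose current value satisfies a condition."""
    return [a for a in sorted(cells) if condition(cells[a])]


# Inventory tracker example: stock levels before and after an edit.
before = {"B2": 40, "B3": 12, "B4": 7}
after = {"B2": 40, "B3": 4, "B4": 7}

print(changed_cells(before, after))            # cells that changed value
print(cells_matching(after, lambda v: v < 5))  # cells now below 5 units
```

In this example only B3 changes, and it is also the only cell below the assumed threshold of 5, so either kind of rule would fire for it.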

Rollout pace

- Rapid Release domains: Gradual rollout (up to 15 days for feature visibility) starting on June 4, 2024

- Scheduled Release domains: Gradual rollout (up to 15 days for feature visibility) starting on June 18, 2024

Availability

- Business Standard, Plus

- Enterprise Starter, Standard, Plus

- Education Plus

- Enterprise Essentials

Resources

Source: Google Workspace Updates

Chrome for Android Update

Hello, Everyone! We've just released Chrome 125 (125.0.6422.164/.165) for Android: it'll become available on Google Play over the next few days.

This release includes stability and performance improvements. You can see a full list of the changes in the Git log. If you find a new issue, please let us know by filing a bug.

Google Chrome

Source: Google Chrome Releases

Top 3 Updates for Building with AI on Android at Google I/O ‘24

At Google I/O, we unveiled a vision of Android reimagined with AI at its core. As Android developers, you're at the forefront of this exciting shift. By embracing generative AI (Gen AI), you'll craft a new breed of Android apps that offer your users unparalleled experiences and delightful features.

Gemini models are powering new generative AI apps both over the cloud and directly on-device. You can now build with Gen AI using our most capable models over the cloud with the Google AI client SDK or Vertex AI for Firebase in your Android apps. For on-device, Gemini Nano is our recommended model. We have also integrated Gen AI into developer tools: Gemini in Android Studio supercharges your developer productivity.

Let’s walk through the major announcements for AI on Android from this year's I/O sessions in more detail!

#1: Build AI apps leveraging cloud-based Gemini models

To kickstart your Gen AI journey, design the prompts for your use case with Google AI Studio. Once you are satisfied with your prompts, integrate the Gemini API directly into your app to access Google’s latest models such as Gemini 1.5 Pro and 1.5 Flash, both with one million token context windows (with two million available via waitlist for Gemini 1.5 Pro).

If you want to learn more about and experiment with the Gemini API, the Google AI SDK for Android is a great starting point. For integrating Gemini into your production app, consider using Vertex AI for Firebase (currently in Preview, with a full release planned for Fall 2024). This platform offers a streamlined way to build and deploy generative AI features.

We are also launching the first Gemini API Developer competition (terms and conditions apply). Now is the best time to build an app integrating the Gemini API and win incredible prizes! A custom DeLorean, anyone?

#2: Use Gemini Nano for on-device Gen AI

While cloud-based models are highly capable, on-device inference works offline, delivers low-latency responses, and ensures that data won’t leave the device.

At I/O, we announced that Gemini Nano will be getting multimodal capabilities, enabling devices to understand context beyond text, like sights, sounds, and spoken language. This will help power experiences like TalkBack, helping people who are blind or have low vision interact with their devices via touch and spoken feedback. Gemini Nano with Multimodality will be available later this year, starting with Google Pixel devices.

We also shared more about AICore, a system service managing on-device foundation models, enabling Gemini Nano to run on-device inference. AICore provides developers with a streamlined API for running Gen AI workloads with almost no impact on the binary size while centralizing runtime, delivery, and critical safety components for Gemini Nano. This frees developers from having to maintain their own models, and allows many applications to share access to Gemini Nano on the same device.

Gemini Nano is already transforming key Google apps, including Messages and Recorder to enable Smart Compose and recording summarization capabilities respectively. Outside of Google apps, we're actively collaborating with developers who have compelling on-device Gen AI use cases and signed up for our Early Access Program (EAP), including Patreon, Grammarly, and Adobe.

Adobe is one of these trailblazers: it is exploring Gemini Nano to enable on-device processing for part of its AI assistant in Acrobat, providing one-click summaries and allowing users to converse with documents. By strategically combining on-device and cloud-based Gen AI models, Adobe optimizes for performance, cost, and accessibility. Simpler tasks like summarization and suggesting initial questions are handled on-device, enabling offline access and cost savings. More complex tasks such as answering user queries are processed in the cloud, ensuring an efficient and seamless user experience.
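The hybrid split described above can be pictured as a small router. This is only an illustrative sketch, not Adobe's implementation and not a Gemini or AICore API: the task names, the `route` function, and the behavior when offline are all assumptions made for the example.

```python
from enum import Enum


class Backend(Enum):
    ON_DEVICE = "on-device"
    CLOUD = "cloud"


# Assumed task categories, loosely based on the examples in the text.
ON_DEVICE_TASKS = {"summarize", "suggest_questions"}


def route(task: str, online: bool) -> Backend:
    """Pick a backend: simple tasks stay on-device, the rest go to the cloud."""
    if task in ON_DEVICE_TASKS:
        return Backend.ON_DEVICE
    if not online:
        # Assumption: complex tasks have no offline fallback in this sketch.
        raise RuntimeError(f"task {task!r} needs a network connection")
    return Backend.CLOUD


print(route("summarize", online=False).value)    # simple task, works offline
print(route("answer_query", online=True).value)  # complex task, sent to cloud
```

Keeping the routing decision in one place makes the trade-off explicit: on-device tasks keep working without a connection, while anything outside the assumed simple set requires the cloud.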

This is just the beginning - later this year, we'll be investing heavily to enable even more developers, and we aim to launch with them.

To learn more about building with Gen AI, check out the I/O talks Android on-device GenAI under the hood and Add Generative AI to your Android app with the Gemini API, along with our new documentation.

#3: Use Gemini in Android Studio to help you be more productive

Besides powering features directly in your app, we’ve also integrated Gemini into developer tools. Gemini in Android Studio is your Android coding companion, bringing the power of Gemini to your developer workflow. Thanks to your feedback since its preview as Studio Bot at last year’s Google I/O, we’ve evolved our models, expanded to over 200 countries and territories, and now include this experience in stable builds of Android Studio.

At Google I/O, we previewed a number of features available to try in the Android Studio Koala preview release, like natural-language code suggestions and AI-assisted analysis for App Quality Insights. We also shared an early preview of multimodal input using Gemini 1.5 Pro, allowing you to upload images as part of your AI queries, which enables Gemini to help you build fully functional Compose UIs from a wireframe sketch.

You can read more about the updates here, and make sure to check out What’s new in Android development tools.

Source: Android Developers Blog

#WeArePlay | How Zülal is using AI to help people with low vision

Born in Istanbul, Türkiye with limited sight, Zülal has been a power-user of visual assistive technologies since the age of 4. When she lost her sight completely at 10 years old, she found herself reliant on technology to help her see and experience the world around her.

Today, Zülal is the founder of FYE, her solution to the issues she found with other visual assistive technologies. The app empowers people with low vision to be inspired by the world around them. Employing a team of 4, she heads up technological development and user experience for the app.

Zülal shared her story in our latest film for #WeArePlay, which celebrates people around the world building apps and games. She shared her journey from uploading pictures of her parents to a computer to get descriptions of them as a child, to developing her own visual assistive app. Find out what’s next for Zülal and how she is using AI to help people like herself.

Tell us more about the inspiration behind FYE.

Today, there are around 330 million people with severe to moderate visual impairment. Visual assistive technology is life-changing for these people, giving them back a sense of independence and a connection to the world around them. I’m a poet and composer, and in order to create I needed this tech so that I could see and describe the world around me. Before developing FYE, the visual assistive technology I was relying on was falling short. I wanted to take back control. I didn’t want to sit back, wait and see what technology could do for me - I wanted to harness its power. So I did.

Why was it important for you to build FYE?

I never wanted to be limited by having low vision. I’ve always thought, how can I make this better? How can I make my life better? I want to do everything, because I can. I really believe that there’s nothing I can’t do. There’s nothing WE can’t do. Having a founder like me lead the way in visual assistive technology illustrates just that. We’re taking back control of how we experience the world around us.

What’s different about FYE?

With our app, I believe our audience can really see the world again. It uses a combination of AI and human input to describe the world around them to our users. It incorporates an AI model trained on a dataset of over 15 million data points, so it really encompasses all the varying factors that make up the world of everyday visual experiences. The aim was to have descriptions as vivid as if I was describing my surroundings myself. It’s the small details that make a big difference.

What’s next for your app?

We already have personalized AI outputs so the user can create different AI assistants to suit different situations. You can use it to work across the internet as you’re browsing or shopping. I use it a lot for cooking - where the AI can adapt and learn to suit any situation. We are also collaborating with places where people with low vision might struggle, like the metro and the airport. We’ve built in AI outputs in collaboration with these spaces so that anyone using our app will be able to navigate those spaces with confidence. I’m currently working on evolving From Your Eyes as an organization, reimagining the app as one element of the organization under the new name FYE. Next, we’re exploring integrations with smart glasses and watches to bring our app to wearables.

Discover more #WeArePlay stories and share your favorites.

Source: Android Developers Blog

New investments to help build the U.S. cybersecurity workforce

We’re supporting more organizations to establish 15 new cybersecurity clinics across the U.S.

Source: The Official Google Blog

Designing for privacy in an AI world

Artificial intelligence can help take on tasks that range from the everyday to the extraordinary, whether it’s crunching numbers or curing diseases. But the only way to …

Source: AI

Adding markup support for organization-level return policies

Source: Google Search Central Blog

We’re on LinkedIn (finally)

Source: Google Search Central Blog

The Kubernetes ecosystem is a candy store

For the 10th anniversary of Kubernetes, I wanted to look at the ecosystem we created together.

I recently wrote about the pervasiveness and magnitude of the Kubernetes and CNCF ecosystem. This was the result of a deliberate flywheel. This is a diagram I used several years ago:

[Diagram: the Kubernetes ecosystem flywheel]

Because Kubernetes runs on public clouds, private clouds, on the edge, etc., it is attractive to developers and vendors to build solutions targeting its users. Most tools built for Kubernetes or integrated with Kubernetes can work across all those environments, whereas integrating directly with cloud providers entails individual work for each one. Thus, Kubernetes created a large addressable market with a comparatively low cost to build.

We also deliberately encouraged open source contribution, to Kubernetes and to other projects. Many tools in the ecosystem, not just those in CNCF, are open source. This includes many tools built by Kubernetes users and tools built by vendors that were too small to be products, as well as those intended to be the cores of products. Developers built and/or wrote about solutions to problems they experienced or saw, and shared them with the community. This made Kubernetes more usable and more visible, which likely attracted more users.

Today, the result is that if you need a tool, extension, or off-the-shelf component for pretty much anything, you can probably find one compatible with Kubernetes rather than having to build it yourself, and it’s more likely that you can find one that works out of the box with Kubernetes than for your cloud provider. And often there are several options to choose from. I’ll just mention a few. Also, I want to give a shout out to Kubetools, which has a great list of Kubernetes tools that helped me discover a few new ones.

For example, if you’re an application developer whose application runs on Kubernetes, you can build and deploy with Skaffold, test it on Kubernetes locally with Minikube, or connect to Kubernetes remotely with Telepresence, or sync to a preview environment with Gitpod or Okteto. When you need to debug multiple instances, you can use kubetail to view the logs in real time.

To deploy to production, you can use GitOps tools like FluxCD, ArgoCD, or Google Cloud’s Config Sync. You can perform database migrations with Schemahero. To aggregate logs from your production deployments, you can use fluentbit. To monitor them, you have your pick of observability tools, including Prometheus, which was inspired by Google’s Borgmon tool, much as Kubernetes was inspired by Borg, and which was the second project accepted into the CNCF.

If your application needs to receive traffic from the Internet, you can use one of the many Ingress controllers or Gateway implementations to configure HTTPS routing, and cert-manager to obtain and renew the certificates. For mutual TLS and advanced routing, you can use a service mesh like Istio, and take advantage of it for progressive delivery using tools like Flagger.

If you have a more specialized type of workload to run, you can run event-driven workloads using Knative, batch workloads using Kueue, ML workflows using Kubeflow, and Kafka using Strimzi.

If you’re responsible for operating Kubernetes workloads, there’s kubecost to monitor costs. To enforce policy constraints, there’s OPA Gatekeeper and Kyverno. For disaster recovery, you can use Velero. To debug permissions issues, there are RBAC tools. And, of course, there are AI-powered assistants.

You can manage infrastructure using Kubernetes, for example with Config Connector or Crossplane, so you don’t need to learn a different syntax and toolchain to do that.

There are tools with a retro experience like K9s and Ktop, fun tools like xlskubectl, and tools that are both retro and fun like Kubeinvaders.

If this makes you interested in migrating to Kubernetes, you can use a tool like move2kube or kompose.

This just scratches the surface of the great tools available for Kubernetes. I view the ecosystem as more of a candy store than as a hellscape. It can take time to discover, learn, and test these tools, but overall I believe they make the Kubernetes ecosystem more productive. To develop any one of these tools yourself would require a significant time investment.

I expect new tools to continue to emerge as the use cases for Kubernetes evolve and expand. I can’t wait to see what people come up with.

By Brian Grant, Distinguished Engineer, Google Cloud Developer Experience

Posted by Leticia Lago – Developer Marketing