Posted by Harsh Mehta, Software Engineer and Jason Baldridge, Research Scientist, Google Research

Significant advances continue to be made in both natural language processing and computer vision, but the research community is still far from having computer agents that can interpret instructions in a real-world visual context and take appropriate actions based on those instructions. Agents, including robots, can learn to navigate new environments, but they cannot yet understand instructions such as, “Go forward and turn left after the red fire hydrant by the train tracks. Then go three blocks and stop in front of the building with a row of flags over its entrance.” Doing so requires relating verbal descriptions like “train tracks,” “red fire hydrant,” and “row of flags” to their visual appearance, understanding what a “block” is and how to count three of them, relating objects based on spatial configurations such as “by” and “over,” relating directions such as “go forward” and “turn left” to actions, and much more.

Grounded language understanding problems of this form are excellent testbeds for research on computational intelligence: they are easy for people but hard for current agents, they synthesize language, perception, and action, and evaluation of successful completion is straightforward. Progress on such problems can greatly enhance the ability of agents to coordinate movement and action with people. However, finding or creating datasets large and diverse enough for developing robust models is difficult.

An ideal resource for quickly training and evaluating agents on grounded language understanding tasks is Street View imagery, an extensive and visually rich virtual representation of the world. Street View is integrated with Google Maps and is composed of billions of street-level panoramas. The Touchdown dataset, created by researchers at Cornell Tech, represents a compelling example of using Street View to drive research on grounded language understanding. However, due to restrictions on access to Street View panoramas, Touchdown can only provide panorama IDs rather than the panoramas themselves, sometimes making it difficult for the broader research community to work on Touchdown’s tasks: vision-and-language navigation (VLN), in which instructions are presented for navigation through streets, and spatial description resolution (SDR), which requires resolving spatial descriptions from a given viewpoint.

In “Retouchdown: Adding Touchdown to StreetLearn as a Shareable Resource for Language Grounding Tasks in Street View,” we address this problem by adding the Street View panoramas referenced in the Touchdown tasks to the existing StreetLearn dataset. Using this data, we generate a model that is fully compatible with the tasks defined in Touchdown. Additionally, we have provided open source TensorFlow implementations for the Touchdown tasks as part of the VALAN toolkit.

Grounded Language Understanding Tasks

Touchdown’s two grounded language understanding tasks can be used as benchmarks for navigation models. VLN involves following instructions from one street location to another, while SDR requires identifying a point in a Street View panorama given a description based on its surrounding visual context. The two tasks are shown being performed together in the animation below.

Example animation of a person following Touchdown instructions: “Orient yourself so that the umbrellas are to the right. Go straight and take a right at the first intersection. At the next intersection there should be an old-fashioned store to the left. There is also a dinosaur mural to the right. Touchdown is on the back of the dinosaur.”

Touchdown’s VLN task is similar to that defined in the popular Room-to-Room dataset, except that Street View has far greater visual diversity and more degrees of freedom for movement. Performance of the baseline models in Touchdown leaves considerable headroom for innovation and improvement on many facets of the task, including linguistic and visual representations, their integration, and learning to take actions conditioned on them.

That said, while enabling the broader research community to work with Touchdown’s tasks, certain safeguards are needed to make it compliant with the Google Maps/Google Earth Terms of Service and protect the needs of both Google and individuals. For example, panoramas may not be mass downloaded, nor can they be stored indefinitely (for example, individuals may ask to remove specific panoramas). Therefore, researchers must periodically delete and refresh panoramas in order to work with the data while remaining compliant with these terms.

StreetLearn: A Dataset of Approved Panoramas for Research Use

An alternative way to interact with Street View panoramas was forged by DeepMind with the StreetLearn data release last year. With StreetLearn, interested researchers can fill out a form requesting access to a set of 114k panoramas for regions of New York City and Pittsburgh. Recently, StreetLearn has been used to support the StreetNav task suite, which includes training and evaluating agents that follow Google Maps directions. This is a VLN task like Touchdown and Room-to-Room; however, it differs greatly in that it does not use natural language provided by people.

Additionally, even though StreetLearn’s panoramas cover the same area of Manhattan as Touchdown, they are not adequate for research covering the tasks defined in Touchdown, because those tasks require the exact panoramas that were used during the Touchdown annotation process. For example, in Touchdown tasks, the language instructions refer to transient objects such as cars, bicycles, and couches. A Street View panorama from a different time period may not contain these objects, so the instructions are not stable across time periods.

Touchdown instruction: “Two parked bicycles, and a discarded couch, all on the left. Walk just past this couch, and stop before you pass another parked bicycle. This bike will be white and red, with a white seat. Touchdown is sitting on top of the bike seat.” Other panoramas from the same location taken at other times would be highly unlikely to contain these exact items in the exact same positions. For a concrete example, see the current imagery available for this location in Street View, which contains very different transient objects.

Furthermore, SDR requires coverage of multiple points of view for those specific panoramas. For example, the following panorama is one step down the street from the previous one. They may look similar, but they are in fact quite different (note that the bikes seen on the left side in both panoramas are not the same), and the location of Touchdown is toward the middle of the above panorama (on the bike seat) and to the bottom left in the second panorama. As such, the pixel location of the SDR target differs between panoramas, but is consistent with respect to the real-world location referred to in the instruction. This is especially important for the end-to-end task of following both the VLN and SDR instructions together: if an agent stops, it should be able to complete the SDR task regardless of its exact location (provided the target is visible).

A panorama one step farther down the street from the previous scene.

Another problem is that the granularity of the panorama spacing is different. The figure below shows the overlap between the StreetLearn (blue) and Touchdown (red) panoramas in Manhattan. There are 710 panoramas (out of 29,641) that share the same ID in both datasets (in black). Touchdown covers half of Manhattan and the density of the panoramas is similar, but the exact locations of the nodes visited differ.
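The overlap count quoted above amounts to a set intersection over panorama IDs: 710 of 29,641 is roughly 2.4%. The following is a minimal sketch of that comparison, not the procedure used for the figure. The function name `panorama_overlap` and the toy IDs are hypothetical, and real panorama IDs would come from the respective dataset releases.

```python
def panorama_overlap(ids_a, ids_b):
    """Count panorama IDs shared by, and unique to, two collections."""
    a, b = set(ids_a), set(ids_b)
    shared = a & b
    return {
        "shared": len(shared),
        "only_a": len(a - b),
        "only_b": len(b - a),
        # Share of collection b that also appears in collection a.
        "shared_pct_of_b": 100.0 * len(shared) / len(b) if b else 0.0,
    }


# Toy IDs, invented for illustration only (not real panorama IDs).
streetlearn_ids = ["pano_001", "pano_002", "pano_003"]
touchdown_ids = ["pano_002", "pano_003", "pano_004", "pano_005"]

stats = panorama_overlap(streetlearn_ids, touchdown_ids)
print(stats)
```

Because the comparison is by ID only, two panoramas taken a few meters apart count as different nodes, which is consistent with the observation that the density is similar while the exact locations differ.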

Adding Touchdown Panoramas to StreetLearn and Verifying Model Baselines

Retouchdown reconciles Touchdown’s mode of dissemination with StreetLearn’s, which was originally designed to adhere to the rights of Google and individuals while also simplifying access for researchers and improving reproducibility. Retouchdown includes both data and code that allow the broader research community to work effectively with the Touchdown tasks, most importantly by ensuring access to the data and easing reproducibility. To this end, we have integrated the Touchdown panoramas into the StreetLearn dataset to create a new version of StreetLearn with 144k panoramas (an increase of 26%) that are all approved for research use.

We also reimplemented models for VLN and SDR and show that they are on par or better than the results obtained in the original Touchdown paper. These implementations are open-sourced as well, as part of the

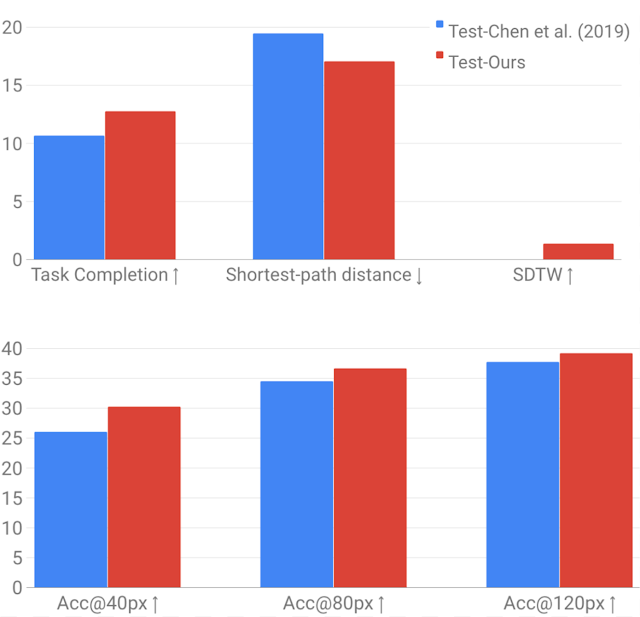

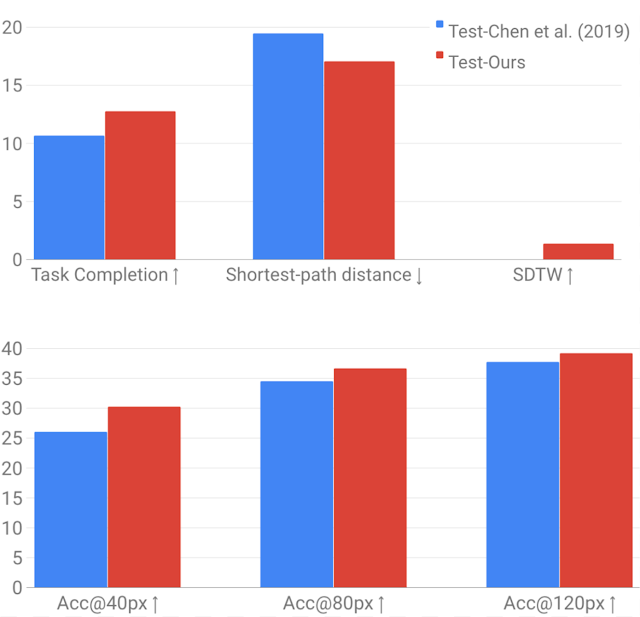

VALAN toolkit. The first graph below compares the results of

Chen et al. (2019) to our reimplementation for the VLN task. It includes the

SDTW metric, which measures both successful completion and fidelity to the true reference path. The second graph below makes the same comparison for the SDR task. For SDR, we show

accuracy@n

px measurements, which provides the percent of times the model’s prediction is within

n pixels of the goal location in the image. Our results are slightly better due to some

small differences in models and processing, but most importantly, the results show that the updated panoramas are fully capable of supporting future modeling for the Touchdown tasks.

Performance comparison between Chen et al. (2019) using the original panoramas (in blue) and our reimplementation using the panoramas available in StreetLearn (in red). Top: VLN results for task completion, shortest path distance and success weighted by Dynamic Time Warping (SDTW). Bottom: SDR results for the accuracy@npx metrics.
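To make the two headline metrics concrete, here is a rough sketch of how SDTW and accuracy@npx could be computed. It is not the VALAN implementation. It assumes the commonly used formulation in which SDTW is a success indicator multiplied by a normalized DTW score, exp(-DTW / (|R| · d_th)), and it uses Euclidean distance between 2D points as a stand-in for whatever distance the real evaluation uses (for street navigation this may be distance along the street graph). The function names and the `threshold` parameter are illustrative.

```python
import math


def dtw(reference, query, dist=math.dist):
    """Dynamic time warping cost between two sequences of points."""
    n, m = len(reference), len(query)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = dist(reference[i - 1], query[j - 1])
            # Extend the cheapest of the three admissible alignments.
            cost[i][j] = step + min(
                cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1]
            )
    return cost[n][m]


def sdtw(reference, query, threshold, dist=math.dist):
    """Success weighted by normalized DTW (assumed formulation)."""
    # Success: the agent stops within `threshold` of the reference goal.
    if dist(reference[-1], query[-1]) > threshold:
        return 0.0
    return math.exp(-dtw(reference, query, dist) / (len(reference) * threshold))


def accuracy_at_n_px(predictions, targets, n):
    """Percentage of predictions within n pixels of their target."""
    hits = sum(math.dist(p, t) <= n for p, t in zip(predictions, targets))
    return 100.0 * hits / len(targets)


reference_path = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
detour_path = [(0.0, 0.0), (1.0, 1.0), (2.0, 0.0)]
print(sdtw(reference_path, reference_path, threshold=1.0))
print(sdtw(reference_path, detour_path, threshold=1.0))
print(accuracy_at_n_px([(0, 0), (10, 10)], [(3, 4), (100, 100)], n=6))
```

Under these assumptions, an agent that retraces the reference path exactly scores 1.0, a path that reaches the goal after a detour scores strictly between 0 and 1, and a path that stops outside the success threshold scores 0. That is how SDTW reflects both completion and path fidelity.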

Obtaining the Data

Researchers interested in working with the panoramas should fill out the StreetLearn interest form. Subject to approval, they will be provided with a download link. Their information is held so that the StreetLearn team can inform them of updates to the data. This allows both Google and participating researchers to effectively and easily respect takedown requests. The instructions and panorama connectivity data can be obtained from the Touchdown GitHub repository.

It is our hope that this release of these additional panoramas will enable the research community to make further progress on these challenging grounded language understanding tasks.

Acknowledgements

The core team includes Yoav Artzi, Eugene Ie, and Piotr Mirowski. We would like to thank Howard Chen for his help with reproducing the Touchdown results, Larry Lansing, Valts Blukis and Vihan Jain for their help with the code and open-sourcing, and the Language team in Google Research, especially Radu Soricut, for the insightful comments that contributed to this work. Many thanks also to the Google Maps and Google Street View teams for their support in accessing and releasing the data, and to the Data Compute team for reviewing the panoramas.