Posted by Charles Maxson, Developer Advocate

Google Workspace offers a broad set of tools and capabilities that empower creators and developers of all experience levels to build a wide range of custom productivity solutions. For professional developers looking to integrate their own app experiences into Workspace, the platform enables deep integrations through frameworks like Google Workspace Add-ons and Chat apps, as well as access to the full suite of Google Workspace apps via numerous REST APIs. For citizen developers on the business side, and for developers looking to build solutions quickly and easily, tools like Apps Script and AppSheet make it simple to customize, extend, and automate workflows directly within Google Workspace.

At Next ’21 we have 7 sessions you won’t want to miss that cover the breadth of the platform. From no-code and low-code solutions to content for developers looking to publish in the Google Workspace Marketplace and reach the more than 3 billion users in Workspace, Next ’21 has something for everyone.

1. See what’s new in Google Workspace

Matthew Izatt, Product Manager, Google Cloud

Erika Trautman, Director, Product Management, Google Cloud

Join us for an interactive demo and see the latest Google Workspace innovations in action. As the needs of our users shifted over the past year, we’ve delivered entirely new experiences to help people connect, create, and collaborate across Gmail, Drive, Meet, Docs, and the rest of the apps. You’ll see how Google Workspace meets the needs of different types of users with thoughtfully designed experiences that are easy to use and easy to love. Then, we’ll go under the hood to show you the range of ways to build powerful integrations and apps for Google Workspace, using tools that span from no-code to professional grade.

2. Developer Platform State of the Union: Google Workspace

Charles Maxson, Developer Advocate, Google Cloud

Steven Bazyl, Developer Relations Engineer, Google Cloud

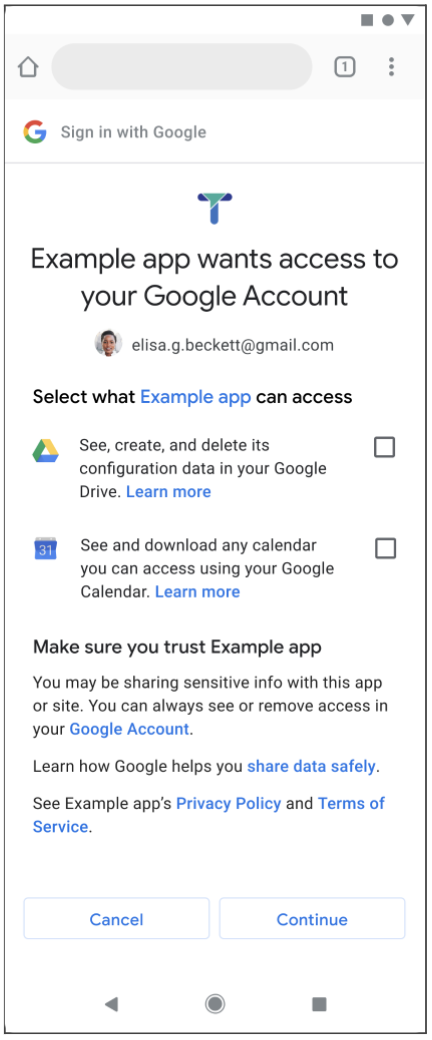

Google Workspace offers a comprehensive developer platform to support every developer who’s on a journey to customize and enhance Google Workspace. In this session, take a deeper dive into the new tools, technologies, and advances across the Google Workspace developer platform that can help you create even better integrations, extensions, and workflows. We’ll focus on updates for Google Apps Script, Google Workspace Add-ons, Chat apps, APIs, AppSheet, and Google Workspace Marketplace.

3. How Miro, Docusign, Adobe and Atlassian are helping organizations centralize their work

Matt Izatt, Group Product Manager, Google Cloud

David Grabner, Product Lead, Apps & Integrations, Miro

Integrations make Google Workspace the hub for your work and give users more value by bringing all their tools into one space. Our ecosystem allows users to connect industry-leading software and custom-built applications with Google Workspace, centralizing important information from the tools they use every day. And integrations are not limited to Gmail, Docs, or your favorite Google apps; they’re also available in Chat. With Chat apps, users can seamlessly blend conversations with automation and timely information to accelerate teamwork, directly from within a core communication tool.

In this session, we’ll briefly review the Google Workspace platform and how Miro and Atlassian are helping organizations centralize their work and keep important information a mouse click or a tap away.

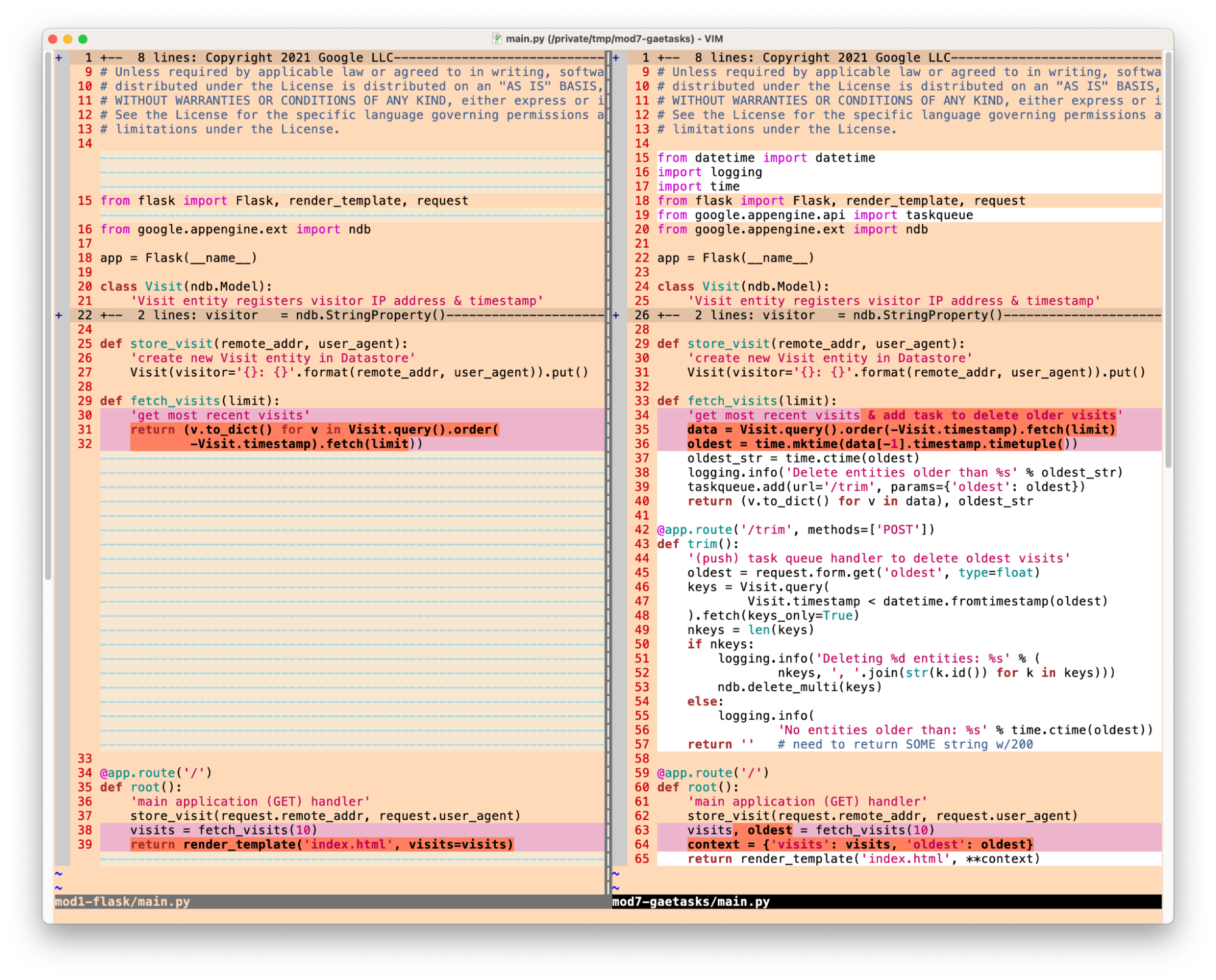

4. Learn how customers are empowering their workforce to customize Google Workspace

Charles Maxson, Developer Advocate, Google Cloud

Aspi Havewala, Global Head of Digital Workplace, Verizon

Organizations small and large are seeing their needs grow increasingly diverse as they pursue digital transformation projects. Many of our customers are empowering their workforces by allowing them to build advanced workflows and customizations using Google Apps Script. It’s a powerful low-code development platform included with Google Workspace that makes it fast and easy to build custom business solutions for your favorite Google Workspace applications, from macro automations to custom functions and menus. In this session, we’ll do a quick overview of the Apps Script platform and hear from customers who are using it to empower their organizations.

5. Transform your business operations with no-code apps

Arthur Rallu, Product Manager, Google Cloud

Paula Bell, Business Process Analyst, Kentucky Power Company, American Electric Power

Building business apps has become something anyone can do. Don’t believe us? Join this session to learn how Paula Bell, who describes herself as having “zero coding experience,” built a series of mission-critical apps on AppSheet that revolutionized how Kentucky Power, a branch of American Electric Power, runs its field operations.

6. How AppSheet helps you work smarter with Google Workspace

Mike Procopio, Senior Staff Software Engineer, Google Cloud

Millions of Google Workspace users are looking for new ways to reclaim time and work smarter within Google Workspace. AppSheet, Google Workspace’s first-party extensibility platform, will be announcing several new features that will allow people to automate and customize their work within their Google Workspace environment – all without having to write a line of code.

Join this session to learn how you can use these new features to work smarter in Google Workspace.

7. How to govern an innovative workforce and reduce shadow IT

Kamila Klimek, Product Manager, Google Cloud

Jacinto Pelayo, Chief Executive Officer, Evenbytes

For organizations focused on growth, finding new ways that employees can use technology to work smarter and innovate is key to their success. But enabling employees to create their own solutions comes at a cost that IT is keenly aware of. The threats of external hacks, data leaks, and shadow IT make it difficult for IT to find a solution that gives them the control and visibility they need, while still empowering their workforce. AppSheet was built with these challenges in mind.

Join our session to learn how you can use AppSheet to effectively govern your workforce and reduce security threats, all while giving employees the tools to make robust, enterprise-grade applications.

To learn more about these sessions and to register, visit the Next ’21 website, and check out my playlist of Next ’21 content.