Posted by Erica Hanson, Global Program Manager, Google Developer Student Clubs

With every new challenge ahead comes a new opportunity for finding a solution. Today’s challenges, and those we will continue to face, remind us all of how vital it remains to protect our planet and the people living on it. Enter the Solution Challenge, Google’s annual contest inviting the global Google Developer Student Clubs (GDSC) community to develop solutions to real-world problems using Google technologies.

This year’s Solution Challenge asks participants to address one or more of the United Nations’ 17 Sustainable Development Goals, intended to promote employment for all, economic growth, and climate action.

The top 50 semi-finalists and the top 10 finalists were announced earlier this month. Now, it all comes down to Demo Day on July 28th, where the finalists will present their solutions to Google and developers all around the world, live on YouTube.

At Demo Day, our judges will review the projects, ask questions, and choose the top 3 grand prize winners! You can RSVP here to be a part of Demo Day, vote for the People’s Choice Award, and watch all the action as it unfolds live.

Ahead of the event, let’s get to know the top 10 finalists and their incredible solutions below.

The Top 10 Projects

. . .

BloodCall - Greece, Harokopio University of Athens

UN Sustainable Goals Addressed: #3: Good Health & Wellbeing

BloodCall aims to make blood donation an easier task for everyone involved by leveraging Android, Firebase, and the Google Maps SDK. It was built by Athanasios Bimpas, Georgios Kitsakis and Stefanos Togias.

“Our main inspiration was based on two specific findings, we noticed that especially in Greece the willingness to donate blood is significantly high but information is not readily available. We also have noticed lots of individuals trying to reach as many people as possible through sharing their (or a loved one's) need of blood on social media, so we concluded that there exists a major need for blood especially in periods of heightened activity like summertime.”

Blossom - Canada, University of Waterloo

UN Sustainable Goals Addressed: #3: Good Health & Wellbeing, #4: Quality Education, #5: Gender Equality and Women’s Empowerment, #10: Reduced Inequalities

Blossom provides an integrated solution for young girls to get access to accurate and reliable menstrual education and resources. It uses Android, Firebase, Flutter, and Google Cloud Platform. It was built by Aditi Sandhu, Het Patel, Mehak Dhaliwal, and Jinal Rajawat.

“As all group members of this project are of South Asian descent, we know firsthand how difficult it is to talk about the female reproductive system within our families. We wanted to develop an application that would target youth so they can begin this conversation at an earlier age. Blossom allows users to learn from the safety of their own devices. Simply by knowing more about their bodies, individuals are more confident with them, thereby solving Goal 5: to achieve gender equality and empower all women and girls.”

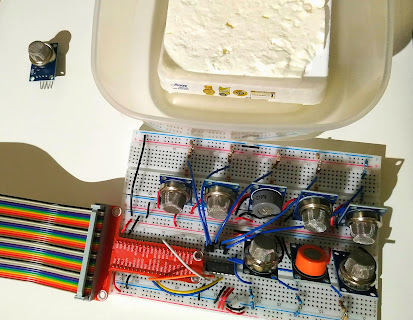

Gateway - Vietnam, Hoa Sen University

UN Sustainable Goals Addressed: #3: Good Health & Wellbeing, #11: Sustainable Cities, #17: Partnerships

Gateway is an open-source IoT solution for COVID-19 digital check-in: an application on a mobile device communicates with an embedded system over Bluetooth. It uses Angular, Firebase, Flutter, Google Cloud Platform, TensorFlow, and Progressive Web Apps. It was built by Cao Nguyen Vo Dang, Duy Truong Hoang, Khuong Nguyen Dang, and Nguyễn Mạnh Hùng.

“Problems are still happening in our community where the support of technology is still lacking when it comes to covid. Vaccination in our country is still continuing. We still have to manually (paper) when it comes to registering for vaccination results. And "back to school/office" are now the biggest challenges for business, community. Contact tracing solutions are fully overloaded with crowded areas. We're focused on improving the crowded situation by creating an open-source automatic checking gateway, allowing users to interact with the system more intuitively.”

GetWage - India, G.H. Raisoni College of Engineering, Nagpur

UN Sustainable Goals Addressed: #1: No Poverty, #4: Quality Education, #8: Decent Work & Economic Growth

GetWage provides a tool to help those impacted by unemployment and unfilled positions in the local economy find and post daily wage work with ease. It uses Firebase, Flutter, Google Cloud Platform, and TensorFlow. It was built by Aaliya Ali, Aniket Singh, Neenad Sahasrabuddhe, and Shivam.

“When COVID struck the world, daily wage laborers were hit the hardest. Data from Lucknow shows how the average working days pre-Covid for most workers were around 21 days a month, which fell to nine days a month post the lockdown. In the city of Pune, average working days in a month came down from 12 to two days. All of this inspired us to do something in order to help the needy by connecting them with those looking to hire laborers and educating them.”

Isak - South Korea, Soonchunhyang University

UN Sustainable Goals Addressed: #3: Good Health & Wellbeing, #12: Responsible Consumption & Production

Isak is an application that combines the activity of jogging and trash collection to make picking up trash more impactful. It uses Firebase, Flutter, Google Cloud Platform, and TensorFlow. It was built by Choo Chang Woo, Jang Hyeon Wook, Jeong Hyeong Lee, and JeongWoo Han.

“COVID-19 has increased people's time to stay at home, and disposable garbage generated by the increase in packaging and delivery orders has been increasing exponentially and people are home more as a result. Our team decided to solve both garbage reduction and exercise. We thought that if we picked up trash while jogging, we could take care of our health and environment at the same time, and if we added additional functions, we could arouse interest from users and encourage them to participate.”

SaveONE life - Kenya, Taita Taveta University

UN Sustainable Goals Addressed: #1: No Poverty, #2: Zero Hunger, #4: Quality Education, #10: Reduced Inequalities

SaveONE life helps donors locate and donate goods to home orphanages in Kenya that are in need of basic items, food, clothing, and other educational resources. It's built with Android, Assistant / Actions on Google, Firebase, Google Cloud Platform, and Google Maps. It was built by David Kinyanjui, Nasubo Imelda, and Wycliff Njenga.

“We visited one of the orphanage homes near our campus and we talked to the Orphanage Manager and his feedback he told us that their challenge is food. Some of the kids are suffering from malnutrition because they are not getting enough food, water, clothing and educational materials including school fees for the kids. The major inspiration for us is helping donors around our campus better know where the home orphanage is, when, and how orphans can get donations.”

SIGNify - Canada, University of Toronto, Mississauga

UN Sustainable Goals Addressed: #10: Reduced Inequalities, #4: Quality Education

SIGNify provides an interface where deaf and non-deaf people can easily understand sign language through a graphical context. It leverages Android, Firebase, Flutter, Google Cloud Platform, and TensorFlow. It was built by Kavya Mehta, Milind Vishnoi, Mitesh Ghimire, and Wentao Zhou.

“Approximately 70 million deaf people around the world use sign language for communication. These are all people that have great ideas, thoughts, and opinions that need to be heard. However, their talent and skills will be of no use if people are not able to understand what they have to say; this has led to 1 in 4 deaf people leaving a job due to discrimination. If we fail to learn sign language, we are depriving ourselves of the knowledge resources that deaf people have to provide. By learning sign language and hiring deaf people in the workspace, we are promoting equal rights and increasing employment opportunities for disabled people.”

Starvelp - Turkey, İzmir University of Economics

UN Sustainable Goals Addressed: #2: Zero Hunger

Starvelp aims to tackle the problems of food waste and hunger by enabling more ways to share local resources with those in need. It leverages Firebase, Flutter, and Google Cloud Platform. It was built by Akash Srivastava and Selin Doğa Orhan.

"We found that the prevalence of undernourishment is impacting a huge population. Prevalence of food insecurity and not being able to feed themselves and their families are related to poor financial conditions. We were inspired to build this, because in many countries, there are a large number of slum areas and many people who are in the farming sector are not able to get sufficient food. It is really shocking for us to see news about how people are getting impacted each year and have different diseases due to improper nutrition. In fact, they have to skip many meals which ultimately leads to undernourishment, and this is a big problem."

Xtrinsic - Germany, Faculty of Engineering Albert-Ludwigs-Universität Freiburg

UN Sustainable Goals Addressed: #3: Good Health & Wellbeing

Xtrinsic is an application for mental health research and therapy that adapts your environment to your personal habits and needs. Using a wearable device and TensorFlow, the team aims to detect and help users get through their struggles throughout the day and at night with behavioral suggestions. It’s built using Android, Assistant / Actions on Google, Firebase, Flutter, Google Cloud Platform, TensorFlow, WearOS, DialogFlow, and Google Health Services. It was built by Alexander Monneret, Chikordili Fabian Okeke, Emma Rein, and Vandysh Kateryna.

“Our inspiration comes from our own experience with mental health issues. Two of our team members were directly impacted by the recently waged wars in Syria and Ukraine. And all of us have experienced mental health conditions during the pandemic. We learned through our hardships how to overcome these tough situations and stay strong and positive. We believe that with our know-how and Google technologies we can make a difference and help make the world a better place.”

Zero-zone - South Korea, Sookmyung Women's University

UN Sustainable Goals Addressed: #4: Quality Education, #10: Reduced Inequalities

Zero-zone supports active communication for, and with, the hearing impaired and helps individuals with hearing impairments practice lip reading. The tool leverages Android, Assistant / Actions on Google, Flutter, Google Cloud Platform, and TensorFlow. It was built by DoEun Kim, Hwi Min, Hyemin Song, and Hyomin Kim.

“About 39% of Korean hearing impaired people find it difficult to learn lip-reading, even if they have enrolled in special schools. The project aims to refine lip-reading so that hearing impaired can learn lip-reading anytime, anywhere and communicate actively. Our tool provides equal educational opportunities for deaf users who want to practice oral speech. In addition, the active communication of the hearing impaired, will give them confidence and develop a power to overcome inequality due to difficulties in communication.”

Feeling inspired and ready to learn more about Google Developer Student Clubs? Find a club near you here, and be sure to RSVP here and tune in for the livestream of the upcoming Solution Challenge Demo Day on July 28th.