Posted by Francis Ma, Firebase Product Manager

Originally posted to the Firebase blog

Our goal with Firebase is to help developers build better apps and grow them into successful businesses. Six months ago at Google I/O, we took our well-loved backend-as-a-service (BaaS) and expanded it to 15 features to make it Google’s unified app development platform, available across iOS, Android, and the web.

We launched many new features at Google I/O, but our work didn’t stop there. Since then, we’ve learned a lot from you (750,000+ projects created on Firebase to date!) about how you’re using our platform and how we can improve it. Thanks to your feedback, today we’re launching a number of enhancements to Crash Reporting, Analytics, support for game developers and more. For more information on our announcements, tune in to the livestream video from Firebase Dev Summit in Berlin. They’re also listed here:

Improve App Quality to Deliver Better User Experiences

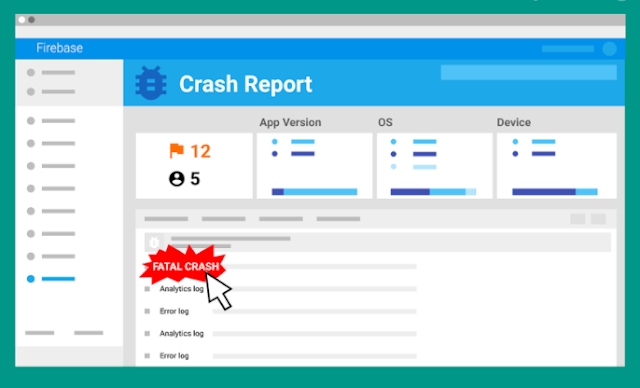

Firebase Crash Reporting comes out of Beta and adds a new feature that helps you diagnose and reproduce app crashes.

Often the hardest part about fixing an issue is reproducing it, so we’ve added rich context to each crash to make the process simple. Firebase Crash Reporting now shows Firebase Analytics event data in the logs for each crash. This gives you clarity into the state of your app leading up to an error. Things like which screens of your app were visited are automatically logged with no instrumentation code required. Crash logs will also display any custom events and parameters you explicitly log using Firebase Analytics. Firebase Crash Reporting works for both iOS and Android apps.

Glide, a popular live video messaging app, relies on Firebase Crash Reporting to ensure user quality and release agility. “No matter how much effort you put into testing, it will never be as thorough as millions of active users in different locations, experiencing a variety of network conditions and real life situations. Firebase allows us to rapidly gain trust in our new version during phased release, as well as accelerate the process of identifying core issues and providing quick solutions.” - Roi Ginat, Founder, Glide.

Firebase Test Lab for Android supports more devices and introduces a free tier.

We want to help you deliver high-quality experiences, so testing your app before it goes into the wild is incredibly important. Firebase Test Lab allows you to easily test your app on many physical and virtual devices in the cloud, without writing a single line of test code. Beginning today, developers on the Spark service tier (which is free!) can run five tests per day on physical devices and ten tests per day on virtual devices—with no credit card setup required. We’ve also heard that you want more device options, so we’ve added 11 new popular Android device models to Test Lab, available today.

Illustration of Firebase Crash Reporting

Make Faster Data Driven Decisions with Firebase Analytics

Firebase Analytics now offers live reporting, a new integration with Google “Data Studio”, and real-time exporting to BigQuery.

We know that your data is most actionable when you can see and process it as quickly as possible. Therefore, we’re announcing a number of features to help you maximize the potential of your analytics events:

- Real-time uploading of conversion events

- Real-time exporting to BigQuery

- DebugView for validation of your analytics instrumentation

- StreamView, which will offer a live, dynamic view of your analytics data as we receive it

To further enhance your targeting options, we’ve improved the connection between Firebase Analytics and other Firebase features, such as Dynamic Links and Remote Config. For example, you can now use Dynamic Links on your Facebook business page, and we can identify Facebook as a source in Firebase Analytics reporting. As well, you can now target Remote Config changes by User Properties, in addition to Audiences.

Build Better Games using Firebase

Firebase now has a Unity plugin!

Game developers are building great apps, and we want Firebase to work for you, too. We’ve built an entirely new plugin for Unity that supports Analytics, the Realtime Database, Authentication, Dynamic Links, Remote Config, Notifications and more. We've also expanded our C++ SDK with Realtime Database support.

Integrate Firebase Even Easier with Open-Sourced UI Library

FirebaseUI is a library that provides common UI elements when building apps, and it’s a quick way to integrate with Firebase. FirebaseUI 1.0 includes a drop-in UI flow for Firebase Authentication, with common identity providers such as Google, Facebook, and Twitter. FirebaseUI 1.0 also added features such as client-side joins and intersections for the Realtime Database, plus integrations with Glide and SDWebImage that make downloading and displaying images from Firebase Storage a cinch. Follow our progress or contribute to our Android, iOS, and Web components on Github.

Learn More via Udacity and Join the Firebase Community

We want to provide the best tool for developers, but it’s also important that we give resources and training to help you get more out of the platform. As such, we’ve created a new Udacity course: Firebase in a Weekend! It’s an instructor-led video course to help all developers get up and running with Firebase on iOS and Android, in two days.

Finally, to help wrap your head around all our announcements, we’ve created a new demo app. This is an easy way to see how Analytics, Crash Reporting, Test Lab, Notifications, and Remote Config work in a live environment, without having to write a line of code.

Helping developers build better apps and successful businesses is at the core of Firebase. We work hard on it every day. We love hearing your feedback and ideas for new features and improvements—and we hope you can see from the length of this post that we take them to heart! Follow us on Twitter, join our Slack channel, participate in our Google Group, and let us know what you think. We’re excited to see what you’ll build next!