Slack has become the hub of communications for many teams. It integrates with many services, like Google Drive, and there are ways to integrate it with your own infrastructure. This blog post describes three ways to build Slack integrations on Google Cloud Platform using samples from our Slack samples repository on GitHub. Clone it with:

git clone https://github.com/GoogleCloudPlatform/slack-samples.git

Or make your own fork to use as the base for your own integrations. Since all the platforms we use in this tutorial support incoming HTTPS connections, any of these samples could be extended into a Slack App and distributed to other teams.

Using Slack for notifications from a Compute Engine instance

If you're using Google Compute Engine as a virtual private server, it can be useful to get an alert when someone logs in to a machine. This could serve as an audit log, but it's also useful to know when someone is using a shared machine so you don't step on each other's changes.

To get started, we assume you have a Linux Compute Engine instance. To follow along, you can create one by following this guide.

Create a Slack incoming webhook and save the webhook URL. It will look something like https://hooks.slack.com/services/YOUR/SLACK/INCOMING-WEBHOOK. Give the hook a descriptive name, like "SSH Bot", and a recognizable icon, like a lock emoji.

Next, SSH into the machine and clone the repository. We'll be using the notify sample for this integration.

git clone https://github.com/GoogleCloudPlatform/slack-samples.git

cd slack-samples/notify

Create a file named slack-hook containing the webhook URL, then test the webhook:

nano slack-hook

# paste in URL, write out, and exit

PAM_USER=$USER PAM_RHOST=testhost ./login-notify.sh

The script sends a POST request to your Slack webhook, so you should receive a Slack message about this test login.
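Under the hood, a webhook notification is just an HTTPS POST with a small JSON body. The Go sketch below makes the same kind of request that login-notify.sh makes. It is not a port of that script: it assumes the basic incoming-webhook payload with a single text field, and the helper names buildPayload and postToSlack are hypothetical. It reads the URL from the slack-hook file created above.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"os"
	"strings"
	"time"
)

// webhookPayload is the minimal JSON body an incoming webhook accepts.
type webhookPayload struct {
	Text string `json:"text"`
}

// buildPayload (hypothetical helper) encodes text as {"text": "..."}.
func buildPayload(text string) ([]byte, error) {
	return json.Marshal(webhookPayload{Text: text})
}

// postToSlack (hypothetical helper) POSTs text to the webhook URL and
// treats any non-200 reply as an error. The timeout keeps a slow network
// from stalling the caller, such as a login.
func postToSlack(webhookURL, text string) error {
	body, err := buildPayload(text)
	if err != nil {
		return err
	}
	client := &http.Client{Timeout: 10 * time.Second}
	resp, err := client.Post(webhookURL, "application/json", bytes.NewReader(body))
	if err != nil {
		return err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return fmt.Errorf("webhook returned %s", resp.Status)
	}
	return nil
}

func main() {
	// The slack-hook file holds the webhook URL.
	raw, err := os.ReadFile("slack-hook")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	url := strings.TrimSpace(string(raw))
	if err := postToSlack(url, "Test notification from Compute Engine"); err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
}
```

Encoding the payload with encoding/json rather than string concatenation means quotes or backslashes in a user name or host name can't break the JSON.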

We'll be adding a PAM hook that runs whenever someone SSHes into the machine. Verify that SSH is using PAM by checking that the /etc/ssh/sshd_config file contains the line "UsePAM yes".

sudo nano /etc/ssh/sshd_config
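If you'd rather check the setting programmatically than by eye, here is a minimal Go sketch. It makes simplifying assumptions. It reads only the first uncommented UsePAM line, because sshd keeps the first value it reads for this keyword. It ignores Include and Match blocks. The function name usesPAM is hypothetical.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strings"
)

// usesPAM (hypothetical helper) reports whether the first uncommented
// UsePAM directive in an sshd_config is set to "yes". Simplified: it
// ignores Include and Match blocks.
func usesPAM(config string) bool {
	scanner := bufio.NewScanner(strings.NewReader(config))
	for scanner.Scan() {
		line := strings.TrimSpace(scanner.Text())
		if line == "" || strings.HasPrefix(line, "#") {
			continue
		}
		fields := strings.Fields(line)
		// sshd_config keywords are case-insensitive.
		if len(fields) >= 2 && strings.EqualFold(fields[0], "UsePAM") {
			return fields[1] == "yes"
		}
	}
	return false
}

func main() {
	data, err := os.ReadFile("/etc/ssh/sshd_config")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Println("UsePAM yes:", usesPAM(string(data)))
}
```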

We can now set up the PAM hook. The install.sh script creates a /etc/slack directory and copies the login-notify.sh script and slack-hook configuration there. It also configures /etc/pam.d/sshd to run the script whenever someone SSHes into the machine by adding the line:

session optional pam_exec.so seteuid /etc/slack/login-notify.sh

The script writes to /etc, so run it with root privileges:

sudo ./install.sh

Keep this SSH session open in case something goes wrong, and verify that you can log in from another SSH terminal. You should receive another notification on Slack, this time with the real remote host IP address.
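pam_exec passes details about the session to the script through environment variables such as PAM_USER, PAM_RHOST, and PAM_TYPE. This is why the manual test earlier sets PAM_USER and PAM_RHOST by hand. Because the hook is registered as a session hook, it can run both when a session opens and when it closes. The Go sketch below shows one way to turn those variables into a message while skipping close events. That filtering is an assumption of this sketch, not necessarily what login-notify.sh does, and loginMessage is a hypothetical name.

```go
package main

import (
	"fmt"
	"os"
)

// loginMessage (hypothetical helper) builds the notification text from
// the variables pam_exec exports. It reports ok=false for session-close
// events, so a login produces one message instead of two (an assumption
// of this sketch).
func loginMessage(getenv func(string) string, hostname string) (msg string, ok bool) {
	if getenv("PAM_TYPE") == "close_session" {
		return "", false
	}
	user, rhost := getenv("PAM_USER"), getenv("PAM_RHOST")
	return fmt.Sprintf("%s logged in to %s from %s", user, hostname, rhost), true
}

func main() {
	host, err := os.Hostname()
	if err != nil {
		host = "unknown-host"
	}
	if msg, ok := loginMessage(os.Getenv, host); ok {
		// A real hook would POST msg to the webhook instead of printing it.
		fmt.Println(msg)
	}
}
```

Passing getenv as a parameter keeps the function testable without changing the process environment.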

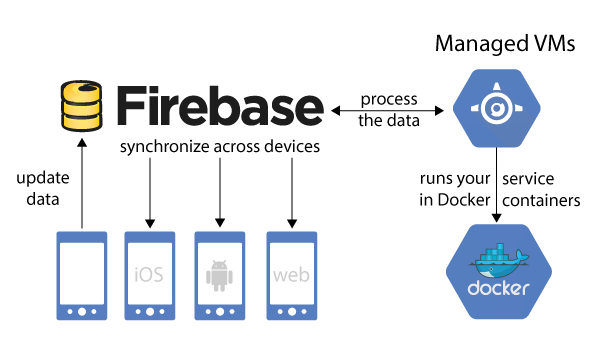

Building a bot and running it in Google Container Engine

If you want to run a Slack bot, one of the easiest ways to do it is to use Beep Boop, which runs your bot on Cloud Platform for you so you can focus on making the bot the best it can be.

A Slack bot connects to the Slack Real Time Messaging API using WebSockets. It runs as a long-running process, listening for and sending messages.
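Botkit manages the WebSocket connection for you, but it helps to see what travels over it: JSON events come in and JSON messages go out. The Go sketch below covers only the per-frame logic, because a WebSocket client needs a third-party package. It assumes the RTM message event fields (type, channel, user, text) and the outgoing message format, which carries an id that must be unique for the connection. handleFrame is a hypothetical name, and the Botkit sample is not structured this way.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// rtmEvent holds the fields of an incoming RTM event that this sketch reads.
type rtmEvent struct {
	Type    string `json:"type"`
	Channel string `json:"channel"`
	User    string `json:"user"`
	Text    string `json:"text"`
}

// rtmMessage is an outgoing RTM message; ID must be unique per connection.
type rtmMessage struct {
	ID      int    `json:"id"`
	Type    string `json:"type"`
	Channel string `json:"channel"`
	Text    string `json:"text"`
}

// handleFrame (hypothetical helper) returns the reply frame for one
// incoming frame, or nil if the bot should stay quiet. It skips the
// bot's own messages so that "Hello!" replies don't trigger a loop.
func handleFrame(frame []byte, botUserID string, nextID int) ([]byte, error) {
	var ev rtmEvent
	if err := json.Unmarshal(frame, &ev); err != nil {
		return nil, err
	}
	if ev.Type != "message" || ev.User == botUserID {
		return nil, nil
	}
	if !strings.HasPrefix(strings.ToLower(ev.Text), "hello") {
		return nil, nil
	}
	return json.Marshal(rtmMessage{ID: nextID, Type: "message", Channel: ev.Channel, Text: "Hello!"})
}

func main() {
	in := []byte(`{"type":"message","channel":"C1","user":"U1","text":"Hello bot"}`)
	out, err := handleFrame(in, "UBOT", 1)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
}
```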

Google Container Engine offers a good balance between control and convenience for running a bot. It uses Kubernetes to keep your bot running and to manage your secret tokens. It's also one of the easiest ways to run a server that uses WebSockets on Cloud Platform. We'll walk you through running a Node.js Botkit Slack bot on Container Engine, using Google Container Registry to store our Docker image.

First, set up your development environment for Google Container Engine. Then clone the repository and change to the bot sample directory.

git clone https://github.com/GoogleCloudPlatform/slack-samples.git

cd slack-samples/bot

Next, create a cluster, if you don't already have one:

gcloud container clusters create my-cluster

Create a Slack bot user and get an authentication token. We'll load this token into our bot using the Kubernetes Secrets API. Replace MY-SLACK-TOKEN below with the token for your bot user. The generate-secret.sh script creates the secret configuration for you by doing a simple text substitution in a template.

./generate-secret.sh MY-SLACK-TOKEN

kubectl create -f slack-token-secret.yaml
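generate-secret.sh does this substitution in shell. The Go sketch below shows the same idea using text/template. The template contents, the secret name slack-token, and the key name are hypothetical stand-ins, so compare them with the sample's own template before relying on them. One real detail it does show: values under a Kubernetes Secret's data field must be base64-encoded. Base64 is an encoding, not encryption.

```go
package main

import (
	"encoding/base64"
	"fmt"
	"os"
	"strings"
	"text/template"
)

// secretTemplate is a hypothetical stand-in for the repository's template.
const secretTemplate = `apiVersion: v1
kind: Secret
metadata:
  name: slack-token
type: Opaque
data:
  slack-token: {{.Token}}
`

// encodeToken base64-encodes the token, as the Secret data field requires.
func encodeToken(token string) string {
	return base64.StdEncoding.EncodeToString([]byte(token))
}

// renderSecret (hypothetical helper) substitutes the encoded token into
// the template and returns the resulting YAML.
func renderSecret(token string) (string, error) {
	tmpl, err := template.New("secret").Parse(secretTemplate)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	if err := tmpl.Execute(&sb, struct{ Token string }{encodeToken(token)}); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	if len(os.Args) != 2 {
		fmt.Fprintln(os.Stderr, "usage: gensecret SLACK-TOKEN")
		os.Exit(2)
	}
	out, err := renderSecret(os.Args[1])
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	// 0600: the file contains a credential, so keep it private.
	if err := os.WriteFile("slack-token-secret.yaml", []byte(out), 0600); err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
}
```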

Next, build the Docker image. Replace my-cloud-project-id below with your Cloud Platform project ID. This tags the image so that the gcloud command-line tool can upload it to your private Container Registry.

export PROJECT_ID=my-cloud-project-id

docker build -t gcr.io/${PROJECT_ID}/slack-bot .

Once the build completes, upload it.

gcloud docker push gcr.io/${PROJECT_ID}/slack-bot

Then create a replication controller configuration, populated with your project ID, so that Kubernetes knows where to load the Docker image from. Like generate-secret.sh, the generate-rc.sh script creates this configuration for you by doing a simple text substitution in a template.

./generate-rc.sh $PROJECT_ID

Now, tell Kubernetes to create the replication controller to start running the bot.

kubectl create -f slack-bot-rc.yaml

You can check the status of your bot with:

kubectl get pods

Now your bot should be online and respond to "Hello."

Shut down and clean up

To shut down your bot, tell Kubernetes to delete the replication controller.

kubectl delete -f slack-bot-rc.yaml

If you've created a container cluster, you'll continue to be charged for the Compute Engine resources it uses, even when they're idle. To delete the cluster, run:

gcloud container clusters delete my-cluster

This deletes the Compute Engine instances that are running the cluster.

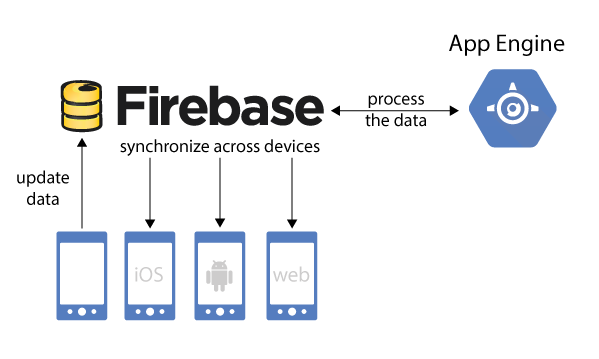

Building a Slash command on Google App Engine

App Engine is a great platform for building Slack slash commands. Slash commands require that the server support SSL with a valid certificate. App Engine supports HTTPS without any configuration for apps using the provided *.appspot.com domain, and it supports SSL for custom domains. App Engine also scales automatically: you get more instances as usage grows and fewer (as few as zero, or a configurable minimum) as demand drops. A free tier makes it easy to get started.

We'll be using Go on App Engine, but you can use any language the runtime supports, including Python, Java¹, and PHP.

Clone the repository. We'll be using the slash command sample for this integration.

git clone https://github.com/GoogleCloudPlatform/slack-samples.git

cd slack-samples/command/1-custom-integration

If you can reach your development machine from the internet, you should be able to test locally. Create a Slash Command, point it at http://your-machine:8080/quotes/random, and run:

goapp serve --host=0.0.0.0
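To show what a handler behind /quotes/random has to do, here is a Go sketch. It does not reproduce the sample's code. Slack sends a slash command as an application/x-www-form-urlencoded POST that includes a token field, and it accepts a JSON reply with response_type and text fields. The quotes are placeholders and YOUR-VERIFICATION-TOKEN is a placeholder. The program runs as a standalone net/http server on port 8080 rather than following App Engine's app structure. Newer Slack apps verify a signing-secret signature instead of the token.

```go
package main

import (
	"crypto/subtle"
	"encoding/json"
	"log"
	"math/rand"
	"net/http"
)

// quotes is placeholder data, not the sample's quote list.
var quotes = []string{
	"Placeholder quote one.",
	"Placeholder quote two.",
}

// slashResponse is the JSON Slack accepts in reply to a slash command.
type slashResponse struct {
	ResponseType string `json:"response_type"`
	Text         string `json:"text"`
}

// respond (hypothetical helper) returns the HTTP status and body for one
// command. pick chooses a quote index; the server passes rand.Intn.
func respond(gotToken, wantToken string, pick func(int) int) (int, []byte) {
	if subtle.ConstantTimeCompare([]byte(gotToken), []byte(wantToken)) != 1 {
		return http.StatusUnauthorized, []byte("invalid token")
	}
	body, err := json.Marshal(slashResponse{
		ResponseType: "in_channel", // show the reply to everyone in the channel
		Text:         quotes[pick(len(quotes))],
	})
	if err != nil {
		return http.StatusInternalServerError, []byte(err.Error())
	}
	return http.StatusOK, body
}

// quoteHandler adapts respond to net/http.
func quoteHandler(wantToken string) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		if r.Method != http.MethodPost {
			http.Error(w, "method not allowed", http.StatusMethodNotAllowed)
			return
		}
		status, body := respond(r.PostFormValue("token"), wantToken, rand.Intn)
		if status != http.StatusOK {
			http.Error(w, string(body), status)
			return
		}
		w.Header().Set("Content-Type", "application/json")
		w.Write(body)
	}
}

func main() {
	// YOUR-VERIFICATION-TOKEN is a placeholder for the token from the
	// slash command's configuration page.
	http.Handle("/quotes/random", quoteHandler("YOUR-VERIFICATION-TOKEN"))
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

The sketch keeps the decision logic in respond, separate from the HTTP plumbing, so it can be checked without starting a server.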

Now that we've seen it working, we can deploy it. Replace YOUR-PROJECT-ID with your Cloud Platform project ID in the following command and run it:

goapp deploy -application YOUR-PROJECT-ID ./

Update your Slash Command configuration and try it out!

If you want to publish your command for use by more than one team, you'll need to create a Slack App. This gives you an OAuth client ID and client secret. Plug these values into the config.go file of the App sample and deploy it the same way to get an "Add to Slack" button.
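An "Add to Slack" button is a link to Slack's OAuth authorize page that carries your client ID. The Go sketch below builds such a link. It assumes Slack's current OAuth v2 endpoint and the commands scope, and authorizeURL is a hypothetical name. The sample's config.go may build its link differently. After the user approves, Slack redirects back with a code, and your server exchanges that code together with the client secret for an access token.

```go
package main

import (
	"fmt"
	"net/url"
)

// authorizeURL (hypothetical helper) builds the link behind an
// "Add to Slack" button. state should be an unguessable per-user value
// that the redirect handler checks, to guard against request forgery.
func authorizeURL(clientID, redirectURI, state string) string {
	q := url.Values{}
	q.Set("client_id", clientID)
	q.Set("scope", "commands") // permission to add slash commands
	q.Set("redirect_uri", redirectURI)
	q.Set("state", state)
	// Encode escapes the values and sorts the parameters by key.
	return "https://slack.com/oauth/v2/authorize?" + q.Encode()
}

func main() {
	// Placeholder values; use the client ID from your Slack App settings.
	fmt.Println(authorizeURL("YOUR-CLIENT-ID", "https://YOUR-PROJECT-ID.appspot.com/oauth", "random-state"))
}
```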

- Posted by Tim Swast, Developer Programs Engineer

¹ Java is a registered trademark of Oracle and/or its affiliates.