Today we're releasing Android 15 and making the source code available at the Android Open Source Project (AOSP). Android 15 will be available on supported Pixel devices in the coming weeks, as well as on select devices from Samsung, Honor, iQOO, Lenovo, Motorola, Nothing, OnePlus, Oppo, realme, Sharp, Sony, Tecno, vivo, and Xiaomi in the coming months.

We're proud to continue our work in open source through the AOSP. Open source allows anyone to build upon and contribute to Android, resulting in devices that are more diverse and innovative. You can leverage your app development skills in Android Studio with Jetpack Compose to create applications that thrive across the entire ecosystem. You can even examine the source code for a deeper understanding of how Android works.

Android 15 continues our mission of building a private and secure platform that helps improve your productivity while giving you new capabilities to produce beautiful apps, superior media and camera experiences, and an intuitive user experience, particularly on tablets and foldables.

Starting today, we're kicking off a new educational series called Spotlight Weeks, where we dive into technical topics across Android, beginning with a week of content on Android 15. Check out what we'll be covering throughout the week, as well as today's deep dive into edge-to-edge.

Improving your developer experience

While most of our work to improve your productivity centers around tools like Android Studio, Jetpack Compose, and the Android Jetpack libraries, each new Android platform release includes quality-of-life updates to improve the development experience. For example, Android 15 gives you new insights and telemetry to allow you to further tune your app experience, so you can make changes that improve the way your app runs on any platform release.

- The ApplicationStartInfo API helps provide insight into your app startup including the startup reason, time spent in launch phases, start temperature, and more.

- The Profiling class within Android Jetpack streamlines use of the new ProfilingManager API in Android 15, which lets your app request heap profiles, heap dumps, stack samples, or system traces, enabling a new way to collect telemetry about how your app runs on user devices.

- The StorageStats.getAppBytesByDataType([type]) API gives you new insights into how your app is using storage, including APK file splits, ahead-of-time (AOT) and speedup-related code, dex metadata, libraries, and guided profiles.

- The PdfRenderer APIs now include capabilities to incorporate advanced features such as rendering password-protected files, annotations, form editing, searching, and selection with copy. Linearized PDF optimizations are supported to speed local PDF viewing and reduce resource use. The Jetpack PDF library uses these APIs to simplify adding PDF viewing capabilities to your app, with planned support for older Android releases.

- Newly added OpenJDK APIs include support for additional Math/StrictMath methods, many util updates including sequenced collection/map/set, ByteBuffer support in Deflater, and security key updates. These APIs are updated on over a billion devices running Android 12 through Android 15 via Google Play system updates, so you can broadly take advantage of the latest programming features.

- Newly added SQLite APIs include support for read-only deferred transactions, new ways to retrieve the count of changed rows or the last inserted row ID without issuing an additional query, and direct support for raw SQLite statements.

- Android 15 adds new Canvas drawing capabilities, including Matrix44 to help manipulate the Canvas in 3D and clipShader/clipOutShader to enable complex shapes by intersecting either the current shader or a difference of the current shader.
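
As one illustration of the OpenJDK additions listed above, here is a minimal sketch of the ByteBuffer support in Deflater. It assumes the overloads match the ones OpenJDK 11 and later define (`setInput(ByteBuffer)`, `deflate(ByteBuffer)`, and their Inflater counterparts). The class name, method names, and the output-buffer sizing are illustrative choices, not anything prescribed by the platform.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.zip.DataFormatException;
import java.util.zip.Deflater;
import java.util.zip.Inflater;

// Hypothetical helper class; only the java.util.zip calls are real APIs.
final class DeflaterByteBufferSketch {

    // Compresses the remaining bytes of `input` without copying them into a byte[] first.
    static ByteBuffer compress(ByteBuffer input) {
        int length = input.remaining();
        // Assumed-sufficient output size for this sketch; we fail loudly if it is not.
        ByteBuffer output = ByteBuffer.allocate(length + length / 16 + 64);
        Deflater deflater = new Deflater();
        try {
            deflater.setInput(input);   // ByteBuffer overload
            deflater.finish();          // no more input will follow
            while (!deflater.finished()) {
                int written = deflater.deflate(output);   // ByteBuffer overload
                if (written == 0 && !output.hasRemaining()) {
                    throw new IllegalStateException("output buffer too small");
                }
            }
        } finally {
            deflater.end();             // release native zlib resources
        }
        output.flip();                  // make the compressed bytes readable
        return output;
    }

    // Inflates `compressed` into a buffer of the known original length.
    static ByteBuffer decompress(ByteBuffer compressed, int originalLength)
            throws DataFormatException {
        ByteBuffer output = ByteBuffer.allocate(originalLength);
        Inflater inflater = new Inflater();
        try {
            inflater.setInput(compressed);
            while (!inflater.finished() && output.hasRemaining()) {
                int read = inflater.inflate(output);
                if (read == 0 && (inflater.needsInput() || inflater.needsDictionary())) {
                    break;              // truncated or dictionary-based stream
                }
            }
        } finally {
            inflater.end();
        }
        output.flip();
        return output;
    }

    // Compresses and restores a string, returning the restored text.
    static String roundTrip(String text) {
        byte[] raw = text.getBytes(StandardCharsets.UTF_8);
        ByteBuffer compressed = compress(ByteBuffer.wrap(raw));
        try {
            ByteBuffer restored = decompress(compressed, raw.length);
            return StandardCharsets.UTF_8.decode(restored).toString();
        } catch (DataFormatException e) {
            throw new IllegalStateException("corrupt deflate stream", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("Android 15 Android 15 Android 15"));
    }
}
```

Working on buffers directly can be convenient when the data already lives in a ByteBuffer, for example one filled by a channel read, because it avoids an intermediate array copy.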

Improving typography and internationalization

Android helps you make beautiful apps that work well across the global diversity of the Android ecosystem.

- You can now create a FontFamily instance from variable fonts in Android 15 without having to specify wght and ital axes using the buildVariableFamily API; the text renderer will automatically adjust the values of the wght and ital axes to match the displaying text with compatible fonts.

- The font file in Android 15 for Chinese, Japanese, and Korean (CJK) languages, NotoSansCJK, is now a variable font, opening up new possibilities for creative typography.

- Android 15 bundles the old Japanese Hiragana (also known as Hentaigana) font by default, helping add a distinctive flair to design while preserving more accurate transmission and understanding of ancient Japanese documents.

- JUSTIFICATION_MODE_INTER_CHARACTER in Android 15 improves justification for languages that don't use white space to separate words, such as Chinese and Japanese.
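
To make the idea behind inter-character justification concrete, here is a simplified sketch of the arithmetic: the unused width of a line is spread evenly across the gaps between characters rather than across word spaces. This is an illustration only and makes no claim about how Android's text renderer implements it. The class name, the `justify` method, and the glyph widths are all hypothetical.

```java
import java.util.Arrays;

// Hypothetical illustration; not Android's text layout code.
final class InterCharacterJustifySketch {

    // Returns the x offset of each glyph so that the glyphs span lineWidth.
    // `advances` holds each glyph's natural width in the same units as lineWidth.
    static double[] justify(double[] advances, double lineWidth) {
        int count = advances.length;
        double naturalWidth = 0;
        for (double advance : advances) {
            naturalWidth += advance;
        }
        // Slack is shared by the (count - 1) gaps between glyphs.
        // Lines that are already full, or hold a single glyph, are left as-is.
        double slack = lineWidth - naturalWidth;
        double gap = (count > 1 && slack > 0) ? slack / (count - 1) : 0;

        double[] offsets = new double[count];
        double pen = 0;
        for (int i = 0; i < count; i++) {
            offsets[i] = pen;
            pen += advances[i] + gap;
        }
        return offsets;
    }

    public static void main(String[] args) {
        // Four glyphs of width 10 on a line of width 55: 15 units of slack, 3 gaps.
        double[] offsets = justify(new double[] {10, 10, 10, 10}, 55);
        System.out.println(Arrays.toString(offsets)); // prints [0.0, 15.0, 30.0, 45.0]
    }
}
```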

Camera and media improvements

Each Android release helps you bring superior media and camera experiences to your users.

- For screens that contain both HDR and SDR content, Android 15 allows you to control the HDR headroom with setDesiredHdrHeadroom to prevent SDR content from appearing too washed out.

- Android 15 supports intelligently adjusting audio loudness and dynamic range compression levels for apps with AAC audio content that contains loudness metadata, so that audio levels can adapt to user devices and surroundings. To enable this, instantiate a LoudnessCodecController with the audio session ID from the associated AudioTrack.

- Low Light Boost in Android 15 adjusts the exposure of the Preview stream in low-light conditions, enabling enhanced image previews, scanning QR codes in low light, and more.

- Advanced flash strength adjustments in Android 15 enable precise control of flash intensity in both SINGLE and TORCH modes while capturing images.

- Android 15 extends Universal MIDI Packets support to virtual MIDI apps, enabling composition apps to control synthesizer apps as a virtual MIDI 2.0 device just like they would with a USB MIDI 2.0 device.

Improving the user experience

We continue to refine the Android user experience with every release, while working to improve performance and battery life. Here is just some of what Android 15 brings to make the experience more intuitive, performant, and accessible.

- On Android 15, users can save their favorite split-screen app combinations for quick access and pin the taskbar on screen to quickly switch between apps, improving large-screen multitasking. Making sure your app is adaptive is more important than ever.

- Android 15 defaults to displaying apps edge-to-edge when they target SDK 35. The system bars will also be transparent or translucent, and content will draw behind them by default. To ensure your app is ready, check out "Handle overlaps using insets" (Views) or Window insets in Compose. Many of the Material 3 composables also help handle insets for you.

- Android 15 enables TalkBack to support Braille displays that are using the HID standard over both USB and secure Bluetooth to help Android support a wider range of Braille displays.

- On supported Android 15 devices, NfcAdapter allows apps to request observe mode as well as register filters, enabling one-tap transactions in many cases across multiple NFC-capable apps.

- Your app can declare a property to allow its Application or Activity to be presented on the small cover screens of supported flippable devices.

- Android 15 greatly enhances AutomaticZenRules to allow apps to further customize Attention Management (Do Not Disturb) rules by adding types, icons, trigger descriptions, and the ability to trigger ZenDeviceEffects.

- Android 15 now includes OS-level support for app archiving and unarchiving. Archiving removes the APK and any cached files but persists user data, and the archived app is still returned as displayable through the LauncherApps APIs. The original installer can restore it upon a request to unarchive.

- Foreground services change on Android 15 as part of our work to improve battery life and multitasking performance, including data sync timeouts, a new media processing foreground service type, and restrictions on launching foreground services from BOOT_COMPLETED and while an app holds the SYSTEM_ALERT_WINDOW permission.

- Beginning with Android 15, 16 KB page size support will be available on select devices as a developer option. When Android uses this larger page size, our initial testing shows an overall performance boost of 5-10% while using ~9% additional memory.
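
One reason a larger page size can raise memory use is that memory is handed out in whole pages, so each mapping is rounded up to the next page boundary. The sketch below shows that rounding for 4 KB and 16 KB pages, plus a basic alignment check. The class name, method names, and mapping sizes are made up for illustration, and the example does not attempt to reproduce the ~9% figure quoted above.

```java
// Hypothetical illustration of page rounding; sizes are not measurements.
final class PageSizeSketch {
    static final long PAGE_4K = 4L * 1024;
    static final long PAGE_16K = 16L * 1024;

    // Rounds `bytes` up to the next multiple of `pageSize` (which must be a power of two).
    static long roundUpToPage(long bytes, long pageSize) {
        if (bytes < 0) {
            throw new IllegalArgumentException("bytes must be non-negative");
        }
        if (pageSize <= 0 || (pageSize & (pageSize - 1)) != 0) {
            throw new IllegalArgumentException("pageSize must be a power of two");
        }
        return (bytes + pageSize - 1) & ~(pageSize - 1);
    }

    // True when `value` (an address, offset, or alignment) is a multiple of `pageSize`.
    static boolean isAligned(long value, long pageSize) {
        return (value & (pageSize - 1)) == 0;
    }

    public static void main(String[] args) {
        long[] mappingSizes = {5_000, 20_000, 70_000};
        for (long size : mappingSizes) {
            System.out.printf("%d bytes -> %d (4 KB pages), %d (16 KB pages)%n",
                    size, roundUpToPage(size, PAGE_4K), roundUpToPage(size, PAGE_16K));
        }
        // An offset aligned for 4 KB pages is not necessarily aligned for 16 KB pages.
        System.out.println(isAligned(0x1000, PAGE_16K)); // prints false
        System.out.println(isAligned(0x4000, PAGE_16K)); // prints true
    }
}
```

The alignment check hints at why native code can need attention: an offset that is valid on 4 KB pages, such as 0x1000, is not a multiple of 16 KB.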

Privacy and security enhancements

Privacy and security are at the core of everything we do, and we work to make meaningful improvements to protect your apps and our users with each platform release.

- Private space in Android 15 lets users create a separate space on their device where they can keep sensitive apps away from prying eyes, under an additional layer of authentication. Some types of apps such as medical apps, launcher apps, and app stores may need to take additional steps to function as expected in a user's private space.

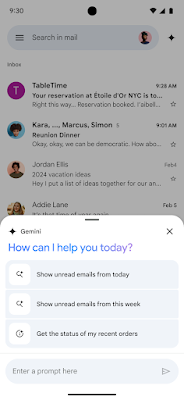

- Android 15 supports sign-in using passkeys with a single tap, and can autofill saved credentials into relevant input fields.

- Android 15 adds support for apps to detect that they are being recorded so that you can inform the user that they're being recorded if your app is performing a sensitive operation.

- Android 15 adds the allowCrossUidActivitySwitchFromBelow attribute that blocks apps that don't match the top UID on the stack from launching activities to help prevent task hijacking attacks.

- PendingIntent creators block background activity launches by default in Android 15 to help prevent apps from accidentally creating a PendingIntent that could be abused by malicious actors.

Get your apps, libraries, tools, and game engines ready!

If you develop an SDK, library, tool, or game engine, it's particularly important to prepare any necessary updates immediately to prevent your downstream app and game developers from being blocked by compatibility issues and allow them to target the latest SDK features. Please let your developers know if updates are needed to fully support Android 15.

Testing your app involves installing your production app using Google Play or other means onto a device or emulator running Android 15. Work through all your app's flows and look for functional or UI issues. Review the behavior changes to focus your testing. Here are several changes to consider that apply even if you don't yet target Android 15:

- Package stopped state changes - Android 15 updates the behavior of the package FLAG_STOPPED state to keep apps stopped until the user launches or indirectly interacts with the app.

- Support for 16 KB page sizes - Beginning with Android 15, 16 KB page size support will be available on select devices as a developer option. Android Studio also offers an emulator system image with 16 KB support through the SDK manager. If your app or library uses the NDK, either directly or indirectly through a library, you can use the developer option in the QPR beta or the Android 15 emulator system image to test and fix applications to prepare for Android devices with 16 KB page sizes in the near future.

- Private space support - Test that your app/library works when installed in a private space; we have guidance for medical apps, launcher apps, and app stores.

- Removed legacy emoji font file - Some Android 15 devices such as Pixel will no longer have the bitmap NotoColorEmojiLegacy.ttf file, which had been included for compatibility since Android 13, and will only have the default vector file.

Please thoroughly exercise libraries and SDKs that your app is using during your compatibility testing. You may need to update to current SDK versions or reach out to the developer for help if you encounter any issues.

Once you’ve published the Android 15-compatible version of your app, you can start the process to update your app's targetSdkVersion.

App compatibility

We’re working to make updates faster and smoother with each platform release by prioritizing app compatibility. In Android 15 we’ve made most app-facing changes opt-in until your app targets SDK version 35. This gives you more time to make any necessary app changes.

To make it easier for you to test the opt-in changes that can affect your app, based on your feedback we’ve made many of them toggleable again this year. With the toggles, you can force-enable or disable the changes individually from Developer options or adb. Check out how to do this here.

To help you migrate your app to target Android 15, the Android SDK Upgrade Assistant within the latest Android Studio Koala Feature Drop release now covers Android 15 API changes and walks you through the steps to upgrade your targetSdkVersion.

Get started with Android 15

If you have a supported Pixel device, you will receive the public Android 15 over-the-air update when it becomes available. If you don't want to wait, you can get the most recent quarterly platform release (QPR) beta by joining the Android 15 QPR beta program at any time.

If you're already in the QPR beta program on a Pixel device that supports the next Android release, you'll likely have been offered the opportunity to install the first Android 15 QPR beta update. If you want to opt out of the beta program without wiping your device, don't install the beta and instead wait for an update to the release version when it is made available on your Pixel device. Once you've applied the stable release update, you can opt out without a data wipe as long as you don't apply the next beta update.

Stay tuned for the next five days of our Spotlight Week on Android 15, where we'll be covering topics like edge-to-edge, passkeys, updates to foreground services, picture-in-picture, and more. Follow along on our blog, X, LinkedIn or YouTube channels. Thank you again to everyone who participated in our Android developer preview and beta program. We're looking forward to seeing how your apps take advantage of the updates in Android 15.

For complete information, visit the Android 15 developer site.

Java and OpenJDK are trademarks or registered trademarks of Oracle and/or its affiliates.

Posted by Matthew McCullough – VP of Product Management, Android Developer

