Posted by the Android team

Concepts is a digital illustration app created by TopHatch that helps creative thinkers bring their visions to life. The app uses an infinitely large canvas, so users can sketch, plan, and edit all of their big ideas without limitation, while its vector-based ink provides the precision needed to refine and reorganize those ideas as they go.

For Concepts, having more on-screen real estate means more comfort, more creative space, and a better user experience overall. That’s why the app was specifically designed with large screens in mind. Concepts’ designers and engineers are always exploring new ways to expand the app’s large screen capabilities on Android. Thanks to Android’s suite of developer tools and resources, that’s easier than ever.

Evaluating an expanding market of devices

Large screen users are the fastest-growing segment on Android, with more than 270 million people using tablets, foldables, and ChromeOS devices. It’s no surprise, then, that Concepts, an app that benefits users by giving them more screen space, was attracted to the format. The Concepts team was also excited about innovation in foldables, because pairing the large screen experience with greater portability gives users more opportunities to use the app in the ways that suit them best.

The team at Concepts spends a lot of time evaluating new large screen technologies and experiences, trying to find what hardware or software features might benefit the app the most. The team imagines and storyboards several scenarios, shares the best ones with a close-knit beta group, and quickly builds prototypes to determine whether these updates improve the UX for its larger user base.

For instance, Concepts’ designers recently tested the Samsung Galaxy Fold and found that users benefited from having more screen space when the device was folded. With help from the Jetpack WindowManager library, Concepts’ developers implemented a feature to automatically collapse the UI when the Galaxy’s large screen was folded, allowing for more on-screen space than if the UI were expanded.
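As a rough illustration of the kind of rule described above, here is a minimal Kotlin sketch that picks a toolbar mode from a fold posture. It is not Concepts’ code: the names `Posture`, `ToolbarMode`, and `toolbarModeFor` are hypothetical, and the rule itself (collapse whenever the large screen is not fully flat) is an assumption. The sketch avoids the Android SDK so it can run anywhere; in a real app the posture would likely be derived from Jetpack WindowManager, as the comments note.

```kotlin
// Hypothetical stand-in for the fold posture an app could derive from
// Jetpack WindowManager. It is not a type from the library itself.
//
// In an Activity, the real signal would likely come from collecting
//   WindowInfoTracker.getOrCreate(activity).windowLayoutInfo(activity)
// and looking for a FoldingFeature in WindowLayoutInfo.displayFeatures.
// FoldingFeature.state reports FLAT or HALF_OPENED; a fully closed device
// typically reports no FoldingFeature at all, which this sketch maps to CLOSED.
enum class Posture { CLOSED, HALF_OPENED, FLAT }

enum class ToolbarMode { EXPANDED, COLLAPSED }

// Assumed rule for this sketch: collapse the toolbar whenever the large
// screen is not fully flat, so the canvas keeps as much room as possible.
fun toolbarModeFor(posture: Posture): ToolbarMode =
    when (posture) {
        Posture.FLAT -> ToolbarMode.EXPANDED
        Posture.HALF_OPENED, Posture.CLOSED -> ToolbarMode.COLLAPSED
    }

fun main() {
    // Print the mode chosen for each posture.
    for (posture in Posture.values()) {
        println("$posture -> ${toolbarModeFor(posture)}")
    }
}
```

Keeping the decision in a small pure function like this makes it easy to unit test separately from the Activity that listens for layout changes.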


Concepts’ first release for Android was optimized for ChromeOS, so supporting resizable windows was important to the user experience from the very beginning. Initially, the team needed physical devices to test various screen sizes. Now, the Concepts team can use Android’s resizable emulator, which makes testing different screen sizes much easier.

Android’s APIs and toolkit carry the workload

The developers’ goal with Concepts is to make the illustration experience feel as natural as putting pen to paper. For the Concepts team, this meant achieving as close to zero lag as possible between the stylus tip and the lines drawn on the Concepts canvas.

When Concepts’ engineers first created the app, they put a lot of effort into creating low-latency drawing themselves. Now, Android’s graphical APIs eliminate the complexity of creating efficient inking.

“The hardware to support low-latency inking with higher refresh rate screens and more accurate stylus data keeps getting better,” said David Brittain, co-founder and CEO of TopHatch, parent company of Concepts. “Android’s mature set of APIs make it easy.”

Concepts’ engineers also found that the core Android View APIs take care of most of the workload for supporting tablets and foldables. The app makes heavy use of custom Views and ViewGroups. Its color wheel, for example, is a custom View that draws to a Canvas and uses Animators for its reveal animation. View, Canvas, and Animator are all classes from the Android SDK.
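To give a feel for what a reveal animation computes on each frame, here is a small Kotlin sketch of the math only. It is an illustration under assumptions, not how Concepts implements its color wheel: `decelerate`, `revealRadius`, and the 200-pixel radius are hypothetical. In an Android app, the fraction would typically come from an Animator such as `ValueAnimator`, and the resulting radius would be used when the custom View draws to its Canvas in `onDraw`.

```kotlin
// Quadratic ease-out: fast start, gentle stop. This is the same curve that
// Android's DecelerateInterpolator uses with its default factor.
fun decelerate(fraction: Float): Float {
    val t = fraction.coerceIn(0f, 1f) // guard against out-of-range input
    return 1f - (1f - t) * (1f - t)
}

// Radius at which the wheel would be drawn for a given animation fraction
// (0 = hidden, 1 = fully revealed).
fun revealRadius(fraction: Float, fullRadiusPx: Float): Float =
    fullRadiusPx * decelerate(fraction)

fun main() {
    val fullRadiusPx = 200f // hypothetical wheel size in pixels
    // Sample the animation at five evenly spaced points.
    for (step in 0..4) {
        val fraction = step / 4f
        println("fraction=$fraction radius=${revealRadius(fraction, fullRadiusPx)}")
    }
}
```

In a custom View, an update listener on the animator would store the latest radius and call `invalidate()`, so the next `onDraw` pass paints the wheel at its new size.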

“Android’s tools and platform are making it easier to address the variety of screen sizes and input methods, with well-structured APIs for developing and increasing the number of choices for testing. Plus, Kotlin allows us to create concise, readable code,” said David.

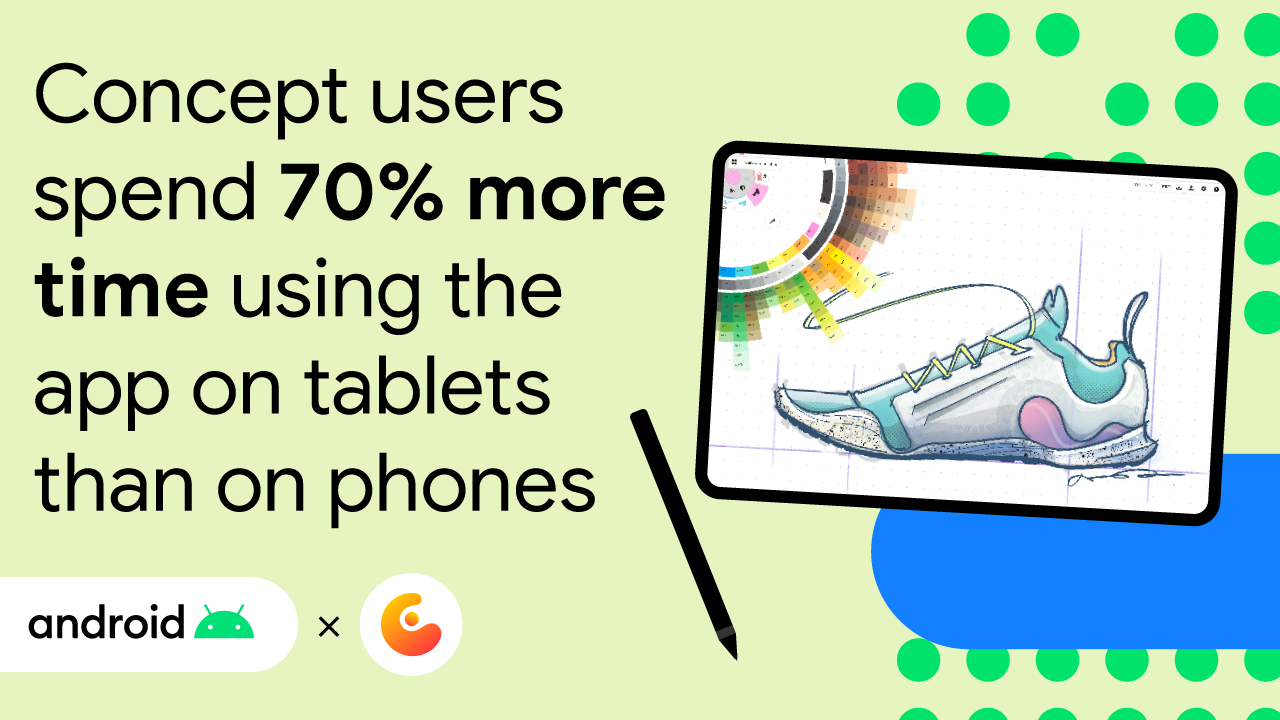

Concepts’ users prefer large screens

Tablets and foldables represent the bulk of Concepts’ investments and user base, and the company doesn’t see that changing any time soon. Currently, tablets deliver 50% higher revenue per user than smartphones. Tablets also account for eight of the 10 devices most frequently used by Concepts’ users, with the other two being ChromeOS devices.

Additionally, Concepts’ monthly users spend 70% more time engaging with the app on tablets than on traditional smartphones. The application’s rating is also 0.3 stars higher on tablets.

“We’re looking forward to future improvements in platform usability and customization while increasing experimentation with portable form factors. Continued efforts in this area will ensure high user adoption well into the future,” said David.

Start developing for large screens today

Learn how you can reach a growing audience of users by increasing development for large screens and foldables today.
