Posted by Dave Burke, VP of Engineering

A few weeks ago we introduced Android Q Beta, a first look at the next version of Android. Along with new privacy features for users, Android Q adds new capabilities for developers - like enhancements for foldables, new APIs for connectivity, new media codecs and camera capabilities, NNAPI extensions, Vulkan 1.1 graphics, and more.

Android's program of early, open previews is driven by our core philosophy of openness and collaboration with our community. Your feedback since Beta 1 proves yet again the value of that openness - it's been loud, clear, and incredibly valuable. You've sent us thousands of bug reports, giving us insights and directional feedback, and changing our plans in ways that make the platform better for users and developers. We're taking your feedback to heart, so please stay tuned. We're fortunate to have such a passionate community helping to guide Android Q toward the final product later this year.

Today we're releasing Android Q Beta 2 and an updated SDK for developers. It includes the latest bug fixes, optimizations, and API updates for Android Q, along with the April 2019 security patches. You'll also notice isolated storage becoming more prominent as we look for your wider testing and feedback to help us refine that feature.

We're still in early Beta with Android Q so expect rough edges! Before you install, check out the Known Issues. In particular, expect the usual transitional issues with apps that we typically see during early Betas as developers get their app updates ready. For example, you might see issues with apps that access photos, videos, media, or other files stored on your device, such as when browsing or sharing in social media apps.

You can get Beta 2 today by enrolling any Pixel device here. If you're already enrolled, watch for the Beta 2 update coming soon. Stay tuned for more at Google I/O in May.

What's new in Beta 2?

Privacy features for testing and feedback

As we shared at Beta 1, we're making significant privacy investments in Android Q in addition to the work we've done in previous releases. Our goals are improving transparency, giving users more control, and further securing personal data across platform and apps. We know that to reach those goals, we need to partner with you, our app developers. We realize that supporting these features is an investment for you too, so we'll do everything we can to minimize the impact on your apps.

For features like Scoped Storage, we're sharing our plans as early as possible to give you more time to test and give us your input. To generate broader feedback, we've also enabled Scoped Storage for new app installs in Beta 2, so you can more easily see what is affected.

With Scoped Storage, apps can use their private sandbox without permission, but they need new permissions to access shared collections for photos, videos and audio. Apps using files in shared collections -- for example, photo and video galleries and pickers, media browsing, and document storage -- may behave differently under Scoped Storage.

We recommend getting started with Scoped Storage soon -- the developer guide has details on how to handle key use-cases. For testing, make sure to enable Scoped Storage for your app using the adb command. If you discover that your app has a use-case that's not supported by Scoped Storage, please let us know by taking this short survey. We appreciate the great feedback you've given us already; it's a big help as we move forward with the development of this feature.

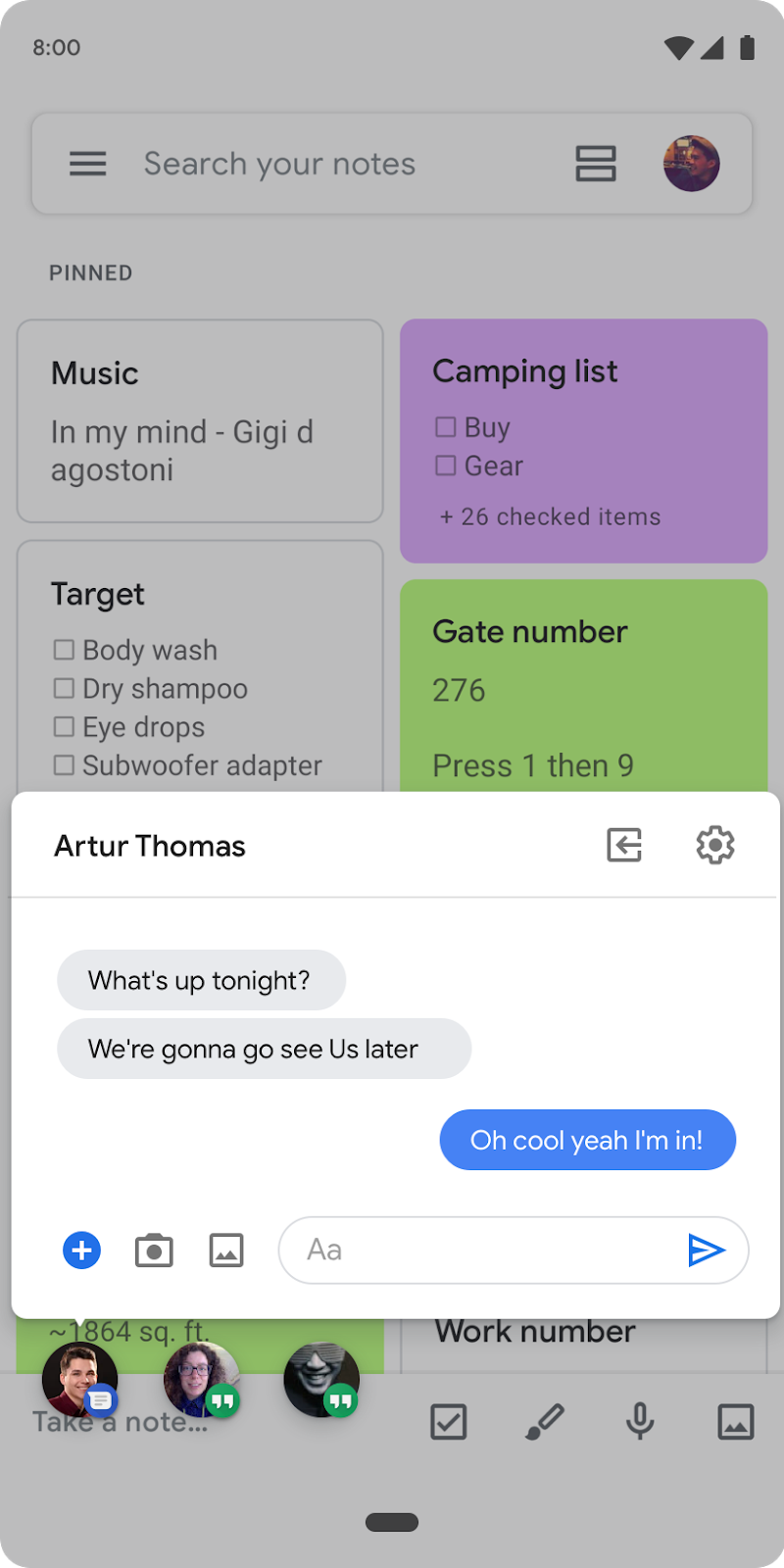

Bubbles: a new way to multitask

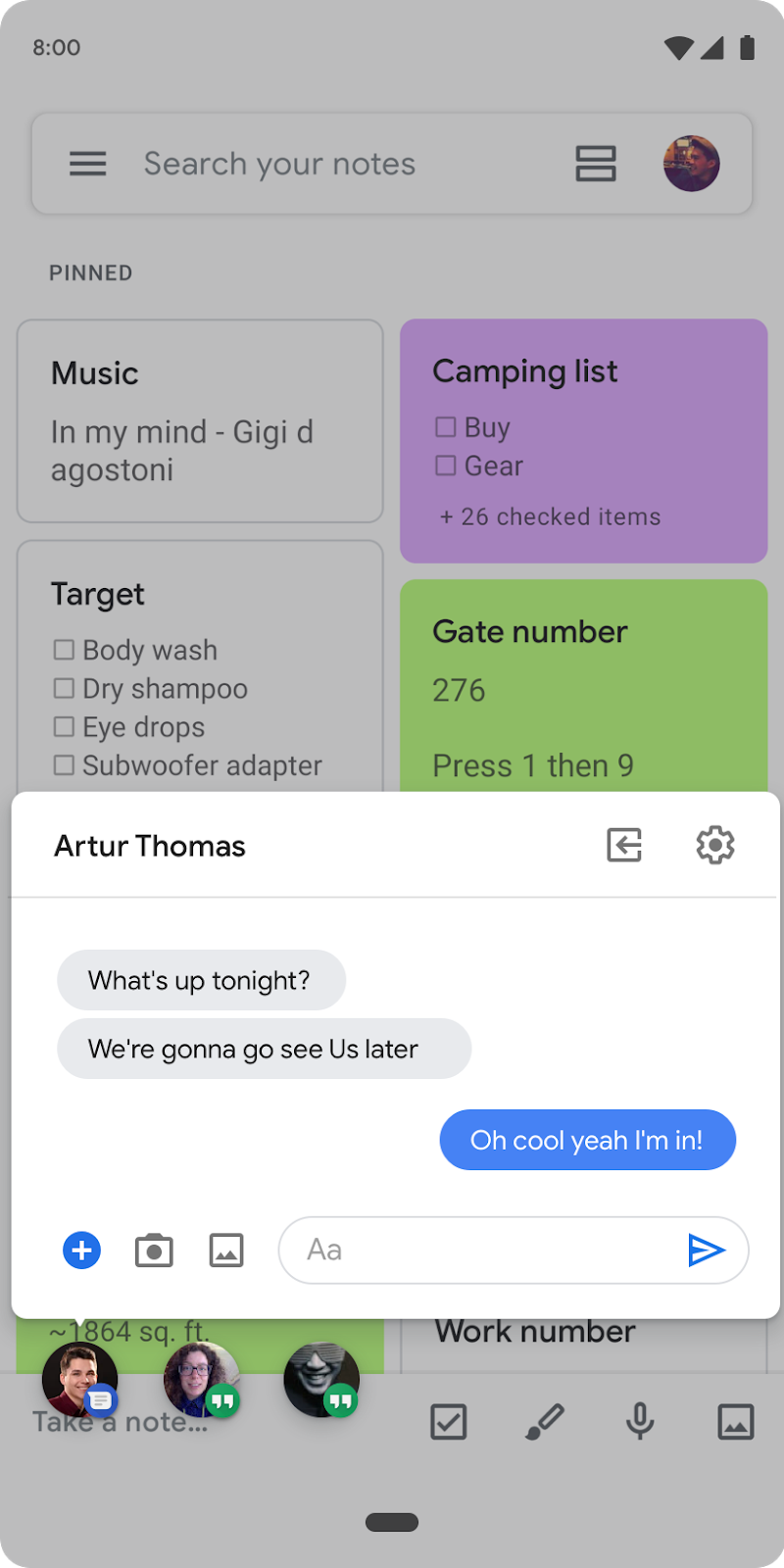

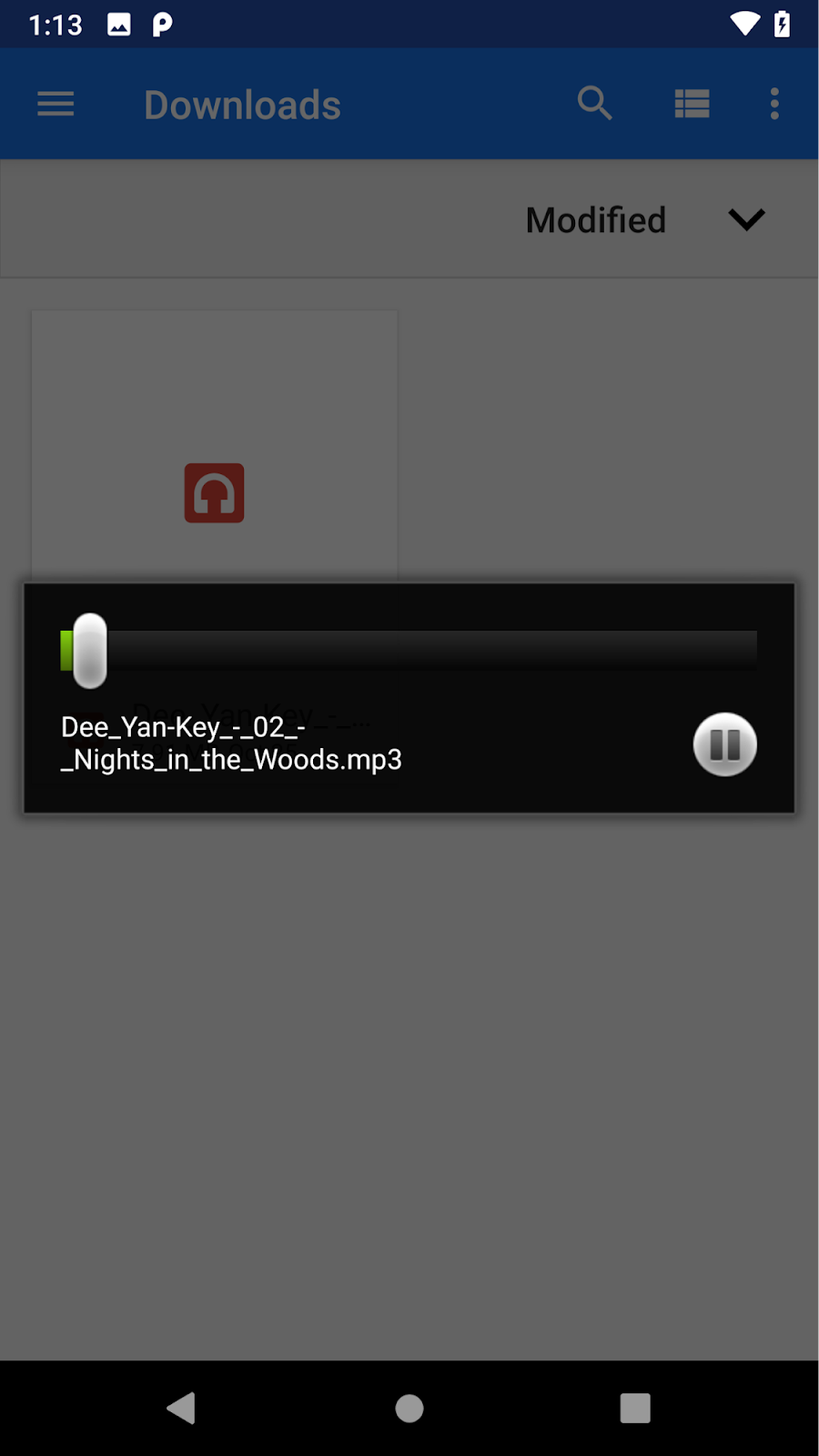

In Android Q we're adding platform support for bubbles, a new way for users to multitask and re-engage with your apps. Various apps have already built similar interactions from the ground up, and we're excited to bring the best from those into the platform, while helping to make interactions consistent, safeguard user privacy, reduce development time, and drive innovation.

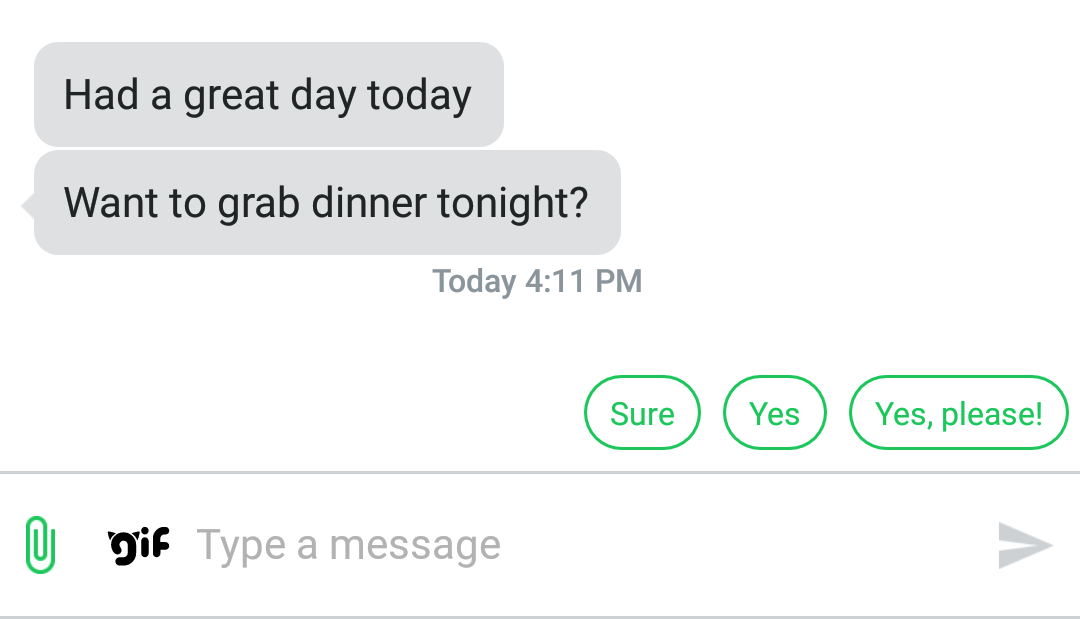

Bubbles will let users multitask as they move between activities.

Bubbles help users prioritize information and take action deep within another app, while maintaining their current context. They also let users carry an app's functionality around with them as they move between activities on their device.

Bubbles are great for messaging because they let users keep important conversations within easy reach. They also provide a convenient view over ongoing tasks and updates, like phone calls or arrival times. They can provide quick access to portable UI like notes or translations, and can be visual reminders of tasks too.

We've built bubbles on top of Android's notification system to provide a familiar and easy-to-use API for developers. To send a bubble through a notification, add a BubbleMetadata object by calling setBubbleMetadata. Within the metadata you can provide the Activity to display as content within the bubble, along with an icon (disabled in Beta 2) and an associated person.
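As a rough sketch of that flow, the Kotlin below attaches bubble metadata to a notification. It assumes the builder methods as they appear in the API level 29 SDK (`setIntent`, `setIcon`, `setDesiredHeight`), and signatures may shift between betas. The function name, channel ID, target Intent, icon resource, 600dp height, notification title, and person name are all hypothetical placeholders.

```kotlin
import android.app.Notification
import android.app.PendingIntent
import android.app.Person
import android.content.Context
import android.content.Intent
import android.graphics.drawable.Icon

// Sketch only: builds a notification that carries bubble metadata.
// channelId, bubbleTarget, and iconRes are hypothetical values supplied by the caller.
fun buildBubbleNotification(
    context: Context,
    channelId: String,
    bubbleTarget: Intent,
    iconRes: Int
): Notification {
    // The Activity shown inside the expanded bubble is supplied as a PendingIntent.
    val contentIntent = PendingIntent.getActivity(
        context, 0, bubbleTarget, PendingIntent.FLAG_UPDATE_CURRENT
    )

    val bubbleData = Notification.BubbleMetadata.Builder()
        .setIntent(contentIntent)
        .setIcon(Icon.createWithResource(context, iconRes))
        .setDesiredHeight(600) // assumed height, in dp
        .build()

    // The person associated with the bubble, for example the other party in a chat.
    val sender = Person.Builder().setName("Alex").build()

    return Notification.Builder(context, channelId)
        .setContentTitle("New message")
        .setSmallIcon(iconRes)
        .addPerson(sender)
        .setBubbleMetadata(bubbleData)
        .build()
}
```

The returned notification would then be posted through `NotificationManager.notify` like any other notification.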

We're just getting started with bubbles, but please give them a try and let us know what you think. You can find a sample implementation here.

Foldables emulator

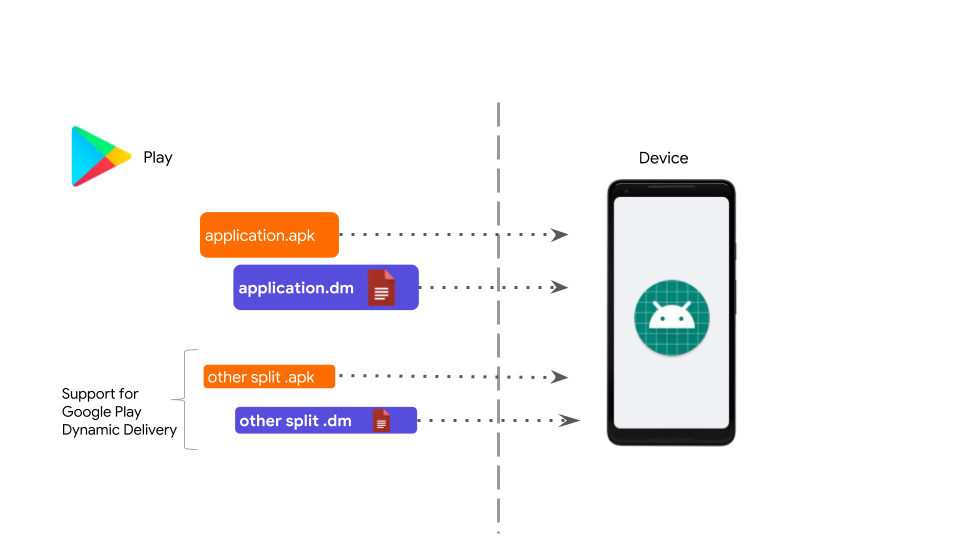

As the ecosystem moves quickly toward foldable devices, new use-cases are opening up for your apps to take advantage of these new screens. With Beta 2, you can build for foldable devices through Android Q's enhanced platform support, combined with a new foldable device emulator. The emulator is available as an Android Virtual Device (AVD) in Android Studio 3.5, which you can get from the canary release channel.

7.3" Foldable AVD switches between the folded and unfolded states

On the platform side, we've made a number of improvements in onResume and onPause to support multi-resume and notify your app when it has focus. We've also changed how the resizeableActivity manifest attribute works, to help you manage how your app is displayed on foldable and large screens. You can read more in the foldables developer guide.
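One way an app might react to these focus changes is sketched below. This post doesn't name a specific callback, so using `onTopResumedActivityChanged` from the API level 29 SDK here is an assumption. The activity name and the two resource helpers are hypothetical.

```kotlin
import android.app.Activity

// Sketch only: tracks whether this activity is the top resumed one under multi-resume.
class CameraPreviewActivity : Activity() {

    // True while this activity is the top resumed activity.
    private var isTopResumed = false

    override fun onTopResumedActivityChanged(isTopResumedActivity: Boolean) {
        super.onTopResumedActivityChanged(isTopResumedActivity)
        isTopResumed = isTopResumedActivity
        if (isTopResumedActivity) {
            acquireExclusiveResource()
        } else {
            releaseExclusiveResource()
        }
    }

    // Hypothetical helper: claim something only one visible app should hold, such as a camera.
    private fun acquireExclusiveResource() {
    }

    // Hypothetical helper: give that resource up when another activity takes focus.
    private fun releaseExclusiveResource() {
    }
}
```

With multi-resume, several activities can be in the resumed state at once, so a separate signal like this is useful for work that only the focused activity should do.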

To set up a runtime environment for your app, you can now configure a foldable emulator as a virtual device (AVD) in Android Studio. The foldable AVD is a reference device that lets you test with standard hardware configurations, behaviors, and states, as will be used by our device manufacturer partners. To ensure compatibility, the AVD meets CTS/GTS requirements and models CDD compliance. It supports runtime configuration change, multi-resume and the new resizeableActivity behaviors.

With Beta 2, use the canary release of Android Studio 3.5 to create a foldable virtual device in either of two hardware configurations: 7.3" (4.6" folded) or 8" (6.6" folded). In each configuration, the emulator gives you on-screen controls to trigger fold/unfold, change orientation, and run quick actions.

Android Studio - AVD Manager: Foldable Device Setup

Try your app on the foldable emulator today by downloading the canary release of Android Studio 3.5 and setting up a foldable AVD that uses the Android Q Beta 2 system image.

Improved sharesheet

Following on the initial Sharing Shortcuts APIs in Beta 1, you can now offer a preview of the content being shared by providing an EXTRA_TITLE extra in the Intent for the title, or by setting the Intent's ClipData for a thumbnail image. See the updated sample application for the implementation details.
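A minimal sketch of such a share intent follows, using the standard `Intent` and `ClipData` calls. The function name, the parameters, the `"thumbnail"` label, and the `text/plain` type are hypothetical choices for illustration, and the thumbnail is assumed to be a content URI that your app can grant read access to.

```kotlin
import android.content.ClipData
import android.content.Context
import android.content.Intent
import android.net.Uri

// Sketch only: builds a share intent that carries preview data for the sharesheet.
// url, previewTitle, and thumbnailUri are hypothetical values supplied by the caller.
fun buildShareIntent(
    context: Context,
    url: String,
    previewTitle: String,
    thumbnailUri: Uri
): Intent {
    val send = Intent(Intent.ACTION_SEND).apply {
        type = "text/plain"
        putExtra(Intent.EXTRA_TEXT, url)
        // Title shown in the content preview.
        putExtra(Intent.EXTRA_TITLE, previewTitle)
        // Thumbnail for the preview, passed as a content URI through ClipData.
        clipData = ClipData.newUri(context.contentResolver, "thumbnail", thumbnailUri)
        // Lets the sharesheet read the thumbnail URI.
        addFlags(Intent.FLAG_GRANT_READ_URI_PERMISSION)
    }
    // Wrap in a chooser so the system sharesheet is shown.
    return Intent.createChooser(send, null)
}
```

Calling `startActivity` with the returned intent opens the sharesheet.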

Directional, zoomable microphones

Android Q Beta 2 gives apps more control over audio capture through a new MicrophoneDirection API. You can use the API to specify a preferred direction of the microphone when taking an audio recording. For example, when the user is taking a "selfie" video, you can request the front-facing microphone for audio recording (if it exists) by calling setMicrophoneDirection(MIC_DIRECTION_FRONT).

Additionally, this API introduces a standardized way of controlling zoomable microphones, allowing your app to have control over the recording field dimension using setMicrophoneFieldDimension(float).

Compatibility through public APIs

In Android Q we're continuing our long-term effort to move apps toward only using public APIs. We introduced most of the new restrictions in Beta 1, and we're making a few minor updates to those lists in Beta 2 to minimize impact on apps. Our goal is to provide public alternative APIs for valid use-cases before restricting access, so if an interface that you currently use in Android 9 Pie is now restricted, you should request a new public API for that interface.

Get started with Android Q Beta

Today's update includes Beta 2 system images for all Pixel devices and the Android Emulator, as well as an updated SDK and tools for developers. These give you everything you need to get started testing your apps on the new platform and building with the latest APIs.

First, make your app compatible and give your users a seamless transition to Android Q, including your users currently participating in the Android Beta program. To get started, just install your current app from Google Play onto a device or emulator running Beta 2 and work through the user flows. The app should run and look great, and handle the Android Q behavior changes for all apps properly. If you find issues, we recommend fixing them in the current app, without changing your targeting level. See the migration guide for steps and a recommended timeline.

With important privacy features that are likely to affect your apps, we recommend getting started with testing right away. In particular, you'll want to test against scoped storage, new location permissions, restrictions on background Activity starts, and restrictions on device identifiers. See the privacy checklist as a starting point.

Next, update your app's targetSdkVersion to 'Q' as soon as possible. This lets you test your app with all of the privacy and security features in Android Q, as well as any other behavior changes for apps targeting Q.

Explore the new features and APIs

When you're ready, dive into Android Q and learn about the new features and APIs you can use in your apps. Here's a video highlighting many of the changes for developers in Beta 1 and Beta 2. Take a look at the API diff report for an overview of what's changed in Beta 2, and see the Android Q Beta API reference for details. Visit the Android Q Beta developer site for more resources, including release notes and how to report issues.

To build with Android Q, download the Android Q Beta SDK and tools into Android Studio 3.3 or higher, and follow these instructions to configure your environment. If you want the latest fixes for Android Q related changes, we recommend you use Android Studio 3.5 or higher.

How do I get Beta 2?

It's easy - you can enroll here to get Android Q Beta updates over-the-air, on any Pixel device (and this year we're supporting all three generations of Pixel -- Pixel 3, Pixel 2, and even the original Pixel!). If you're already enrolled, you'll receive the update to Beta 2 soon; no action is needed on your part. Downloadable system images are also available. If you don't have a Pixel device, you can use the Android Emulator -- just download the latest emulator system images via the SDK Manager in Android Studio.

As always, your input is critical, so please let us know what you think. You can use our hotlists for filing platform issues (including privacy and behavior changes), app compatibility issues, and third-party SDK issues. You've shared great feedback with us so far and we're working to integrate as much of it as possible in the next Beta release.