Posted by Sachiyo Sugimoto, Android Partner Engineering

A strength of Android is its diverse ecosystem of more than 24K distinct devices, used by billions of people around the world. Since the early releases of Android, we’ve invested in our Android Compatibility Program as a way to ensure that devices continue to provide a stable, consistent environment for apps.

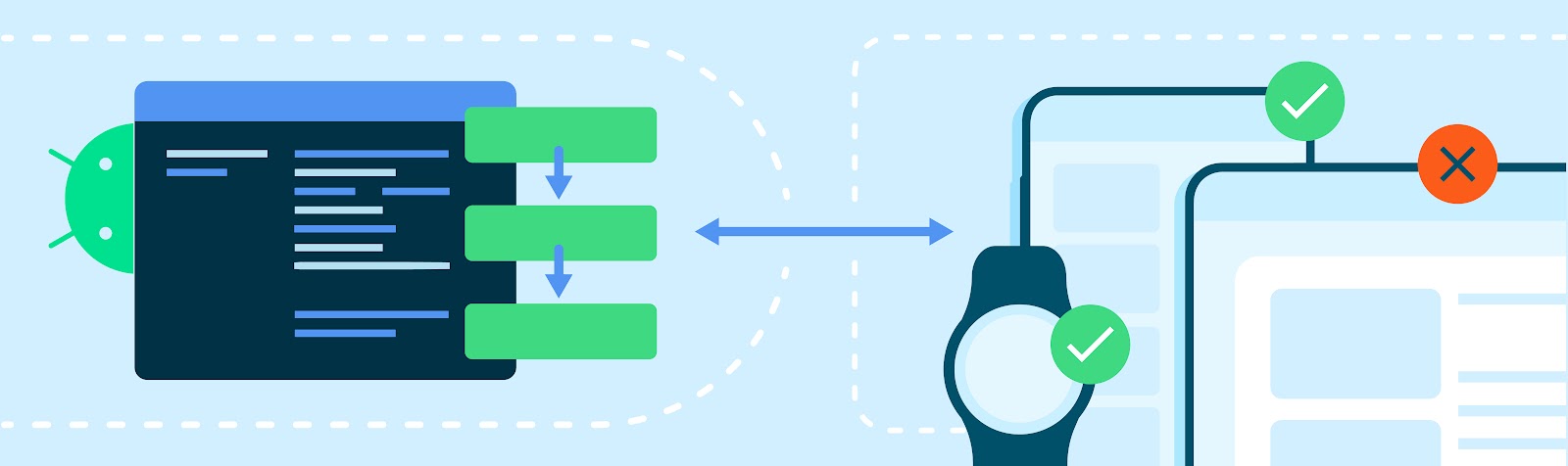

The Compatibility Test Suite (CTS) is a key part of the program. It is a collection of more than two million test cases that check Android device implementations, so that developer applications run on a variety of devices and users get a consistent application experience.

Device makers run CTS on their devices throughout the development process and use it to identify and fix bugs early. Over the years we have steadily expanded the suite by adding new test cases, and it is still growing: as Android evolves, there are new areas to cover, and there are gaps where we are working to create additional tests.

While most CTS tests are written by Android engineers, we know that app developers have a unique perspective on actual device compatibility issues. So to enhance CTS with better input from app developers, we are adding a new test suite called CTS-D that is built and run by developers like you.

What is CTS-D?

CTS-D is a new CTS module that is powered by app developers with a focus on pain points that they are seeing in the field. Developers can build and contribute test cases to CTS-D to help catch those issues, and they can run the CTS-D suite to verify compatibility. Longer term, our plan is to work closely with the Android developer community to expand the CTS-D suite.

We know that many of you have already created your own tests to verify compatibility on various devices. We want to work with you to bring those tests into AOSP, and you can see the first tests contributed by the community in the initial CTS-D commit here.

With CTS-D, we are making those kinds of tests widely available, so that device manufacturers and app developers can identify and share issues more effectively.

How is CTS-D used?

CTS-D is open-sourced and available on AOSP, so any app developer can use it as a verification tool. Using CTS-D minimizes the communication overhead among app developers, device manufacturers, and Google, which helps resolve issues more efficiently.

If a certain device does not pass a CTS-D test, please report the problem using this issue tracker template. After we verify the issue on the reported device, we will work with our partners to resolve it. We're also strongly advising device manufacturers to use CTS-D to discover and mitigate issues.

Get Started with CTS-D!

If you have an idea for CTS-D, please file a test proposal using this issue tracker template before contributing your test code to AOSP. The Android team will review your proposal and verify your test’s eligibility. We’re currently most interested in adding more test cases in the area of Power Management.

Just like with CTS, new CTS-D test cases must meet eligibility requirements and can only enforce the following:

- All public API behaviors that are described in Android developer documentation.

- All MUST requirements that are included in the Android Compatibility Definition Document (CDD).

- Behaviors that are not already covered by existing CTS test cases in AOSP.
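
Before filing a proposal, it can help to run your idea through the requirements above as a quick self-check. The Python sketch below is only one possible reading of the list: it assumes a test is eligible when it enforces a documented public API behavior or a CDD MUST requirement, and is not already covered by an existing CTS test. The `Proposal` record, its field names, and `is_eligible` are hypothetical names made up for this illustration. They are not part of CTS-D or any Android tooling, and the Android team's review remains the actual decision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Proposal:
    """Hypothetical record describing a proposed CTS-D test case."""
    name: str
    # True if the test checks a public API behavior described in
    # Android developer documentation.
    checks_documented_public_api: bool
    # True if the test checks a MUST requirement from the CDD.
    checks_cdd_must_requirement: bool
    # True if an existing CTS test case in AOSP already checks this.
    covered_by_existing_cts: bool


def is_eligible(proposal: Proposal) -> bool:
    """Assumed reading of the rules: the test must enforce an allowed kind
    of behavior and must not duplicate existing CTS coverage."""
    enforces_allowed_behavior = (
        proposal.checks_documented_public_api
        or proposal.checks_cdd_must_requirement
    )
    return enforces_allowed_behavior and not proposal.covered_by_existing_cts


if __name__ == "__main__":
    # Hypothetical Power Management idea, used only as sample input.
    idea = Proposal(
        name="alarm_delivery_in_idle_mode",
        checks_documented_public_api=True,
        checks_cdd_must_requirement=False,
        covered_by_existing_cts=False,
    )
    print(idea.name, "eligible:", is_eligible(idea))
```

A proposal that checks undocumented behavior, or that repeats an existing CTS test, would come back as not eligible under this reading.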