Posted by Eric Lai, Product Manager, Augmented Reality

Augmented reality (AR) can help you explore the world around you in new, seemingly magical ways. Whether you want to venture through the Earth’s unique habitats, explore historic cultures or even just find the shortest path to your destination, there’s no shortage of ways that AR can help you interact with the world.

That’s why we’re constantly improving ARCore — so developers can build amazing AR experiences that help us reimagine what’s possible.

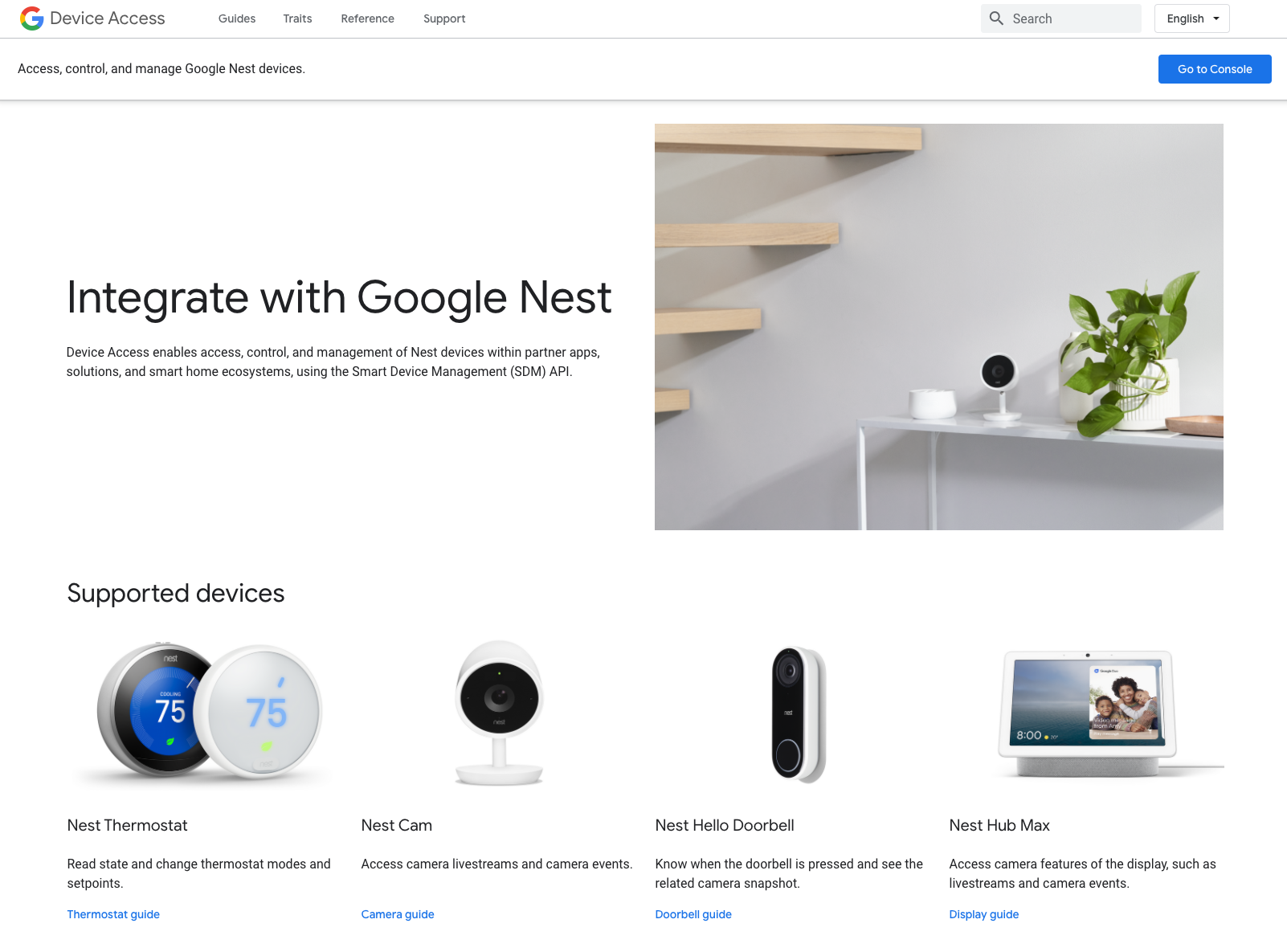

In 2018, we introduced the Cloud Anchors API in ARCore, which lets people across devices view and share the same AR content in real-world spaces. Since then, we’ve been working on new ways for developers to use Cloud Anchors to make AR content persistent and easier to discover.

Create long-lasting AR experiences

Last year, we previewed persistent Cloud Anchors, which let people return to shared AR experiences again and again. With ARCore 1.20, this feature is now widely available to Android, iOS, and Unity mobile developers.

Developers all over the world are already using this technology to help people learn, share and engage with the world around them in new ways.

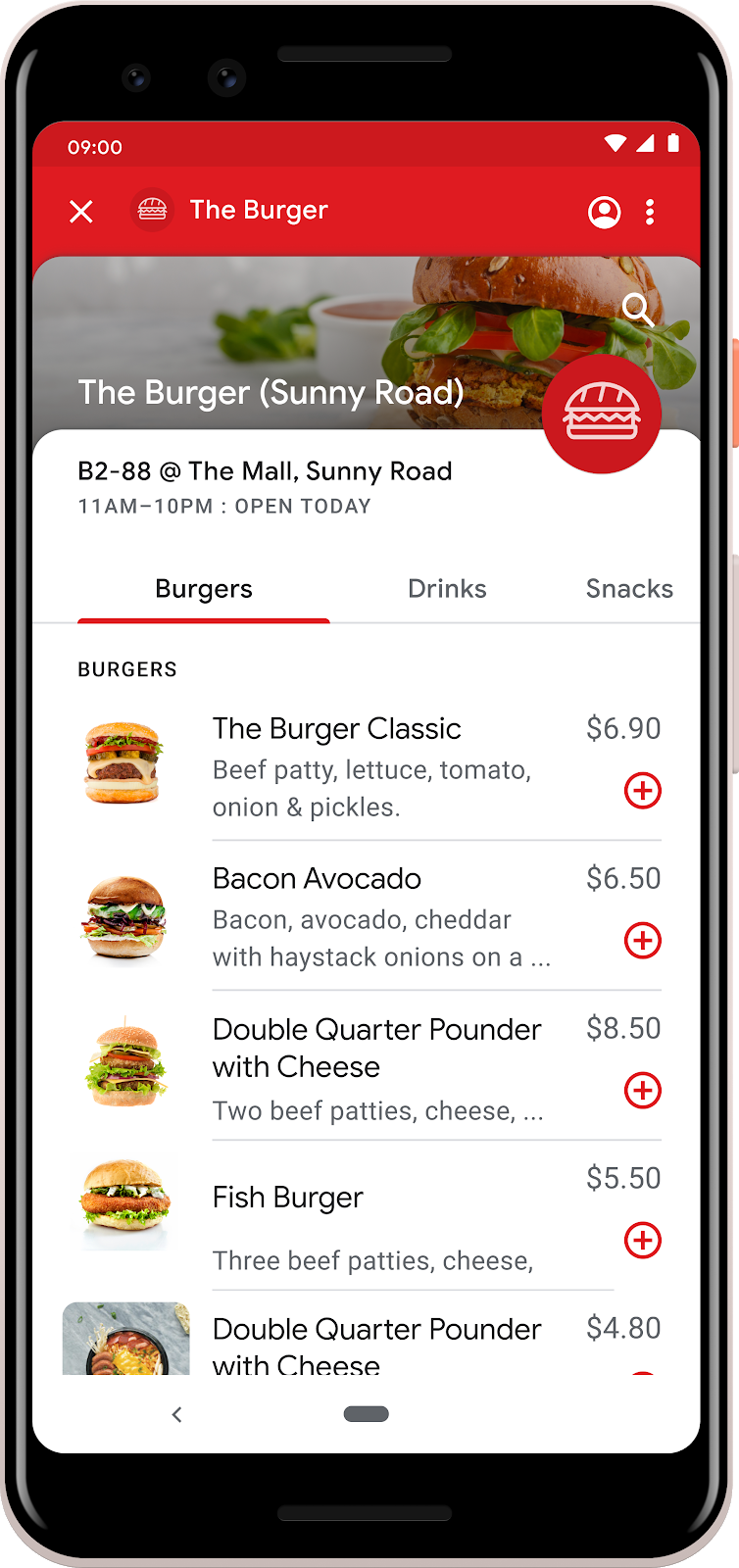

MARK, which we highlighted last year, is a social platform that lets people leave AR messages in real-world locations for friends, family and their community to discover. MARK is now available globally and will be launching the MARK Hope Campaign in the US to help people raise funds for their favorite charities and have their donations matched for a limited time.

MARK by People Sharing Streetart Together Limited

REWILD Our Planet is an AR nature series produced by Melbourne-based studio PHORIA. The experience is based on the Netflix original documentary series Our Planet. REWILD uses Ultra High Definition video alongside AR content to let you venture into Earth’s unique habitats and interact with endangered wildlife. It originally launched in museums, but can now be enjoyed on your smartphone in your living room. As episodes of the show are released, persistent Cloud Anchors allow you to return to the same spot in your own home to see how nature is changing.

REWILD Our Planet by PHORIA

Changdeok ARirang is an AR tour guide app that combines the power of SK Telecom’s 5G with persistent Cloud Anchors. Visitors at Changdeokgung Palace in South Korea are guided by the legendary Haechi to relevant locations where they can experience high-fidelity historical and cultural AR content. Changdeok ARirang at Home was also launched so that the same experience can be accessed from the comfort of your couch.

Changdeok ARirang by SK Telecom

In Sweden, SJ Labs, the innovation arm of Swedish Railways, and its tech innovation partner Bontouch use persistent Cloud Anchors to help passengers find their way at Central Station in Stockholm, so they can reach their departing trains more easily and quickly.

SJ Labs by SJ – Swedish Railways

Coming soon, Lowe’s Persistent View will let you design your home in AR with the help of an expert. You’ll be able to add furniture and appliances to different areas of your home to see how they’d look, and return to the experience as many times as needed before making a purchase.

Lowe’s Persistent View powered by Streem

If you’re interested in building AR experiences that last over time, you can learn more about persistent Cloud Anchors in our docs.

Call for collaborators: test a new way to find AR content

As developers use Cloud Anchors to attach more AR experiences to the world, we also want to make it easier for people to discover them. That’s why we’re working on earth Cloud Anchors, a new feature that uses AR and global localization—the underlying technology that powers Live View features on Google Maps—to easily guide users to AR content. If you’re interested in early access to test this feature, you can apply here.

Some earth Cloud Anchors concepts