Posted by Billy Rutledge (Director) and Vikram Tank (Product Mgr), Coral Team

AI can be beneficial for everyone, especially when we all explore, learn, and build together. To that end, Google's been developing tools like TensorFlow and AutoML to ensure that everyone has access to build with AI. Today, we're expanding the ways that people can build out their ideas and products by introducing Coral into public beta.

Coral is a platform for building intelligent devices with local AI.

Coral offers a complete local AI toolkit that makes it easy to grow your ideas from prototype to production. It includes hardware components, software tools, and content that help you create, train and run neural networks (NNs) locally, on your device. Because we focus on accelerating NN's locally, our products offer speedy neural network performance and increased privacy — all in power-efficient packages. To help you bring your ideas to market, Coral components are designed for fast prototyping and easy scaling to production lines.

Our first hardware components feature the new Edge TPU, a small ASIC designed by Google that provides high-performance ML inferencing for low-power devices. For example, it can execute state-of-the-art mobile vision models such as MobileNet V2 at 100+ fps, in a power efficient manner.

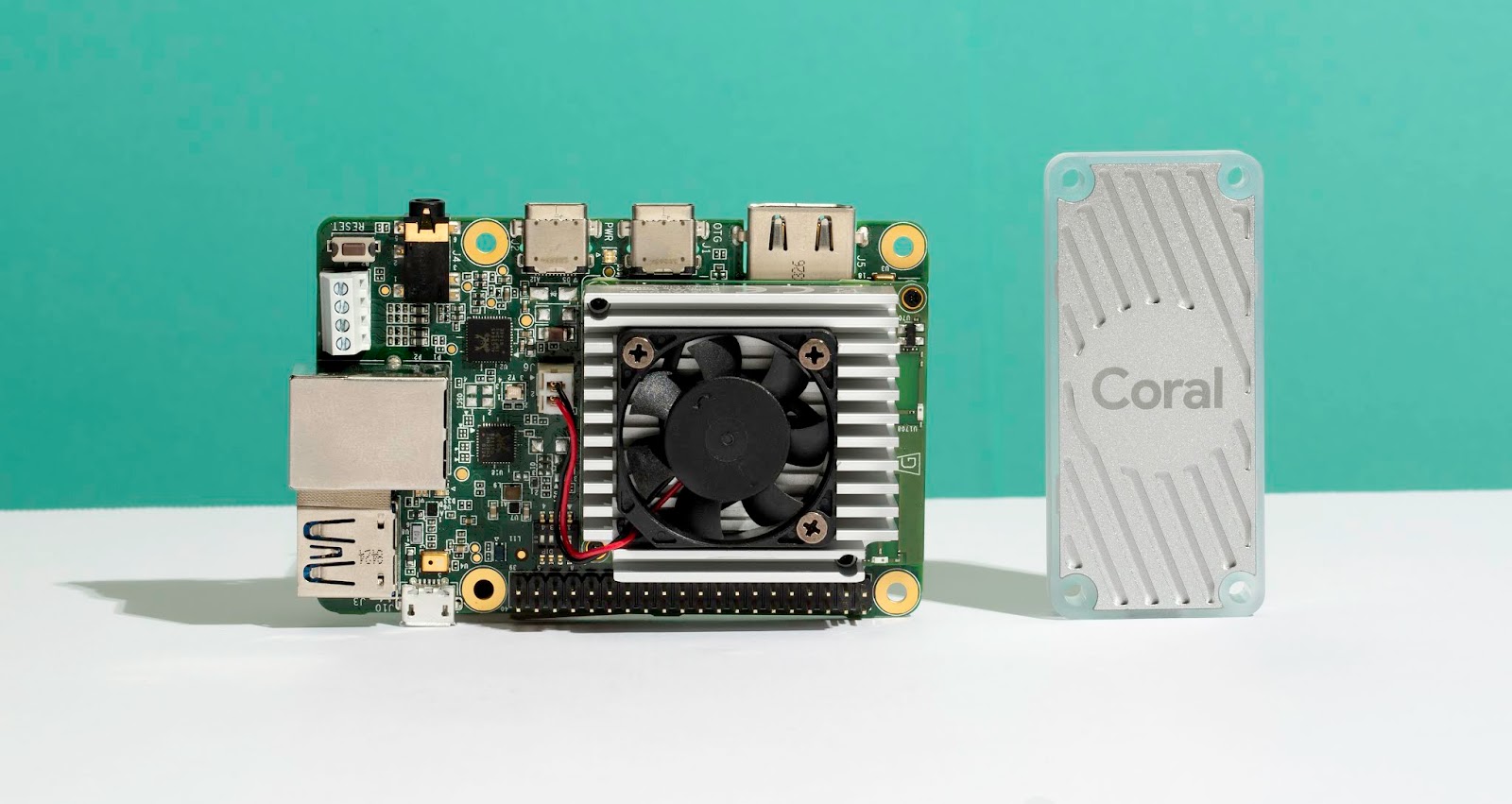

Coral Camera Module, Dev Board and USB Accelerator

For new product development, the Coral Dev Board is a fully integrated system designed as a system on module (SoM) attached to a carrier board. The SoM brings the powerful NXP iMX8M SoC together with our Edge TPU coprocessor (as well as Wi-Fi, Bluetooth, RAM, and eMMC memory). To make prototyping computer vision applications easier, we also offer a Camera that connects to the Dev Board over a MIPI interface.

To add the Edge TPU to an existing design, the Coral USB Accelerator allows for easy integration into any Linux system (including Raspberry Pi boards) over USB 2.0 and 3.0. PCIe versions are coming soon, and will snap into M.2 or mini-PCIe expansion slots.

When you're ready to scale to production we offer the SOM from the Dev Board and PCIe versions of the Accelerator for volume purchase. To further support your integrations, we'll be releasing the baseboard schematics for those who want to build custom carrier boards.

Our software tools are based around TensorFlow and TensorFlow Lite. TF Lite models must be quantized and then compiled with our toolchain to run directly on the Edge TPU. To help get you started, we're sharing over a dozen pre-trained, pre-compiled models that work with Coral boards out of the box, as well as software tools to let you re-train them.

For those building connected devices with Coral, our products can be used with Google Cloud IoT. Google Cloud IoT combines cloud services with an on-device software stack to allow for managed edge computing with machine learning capabilities.

Coral products are available today, along with product documentation, datasheets and sample code at g.co/coral. We hope you try our products during this public beta, and look forward to sharing more with you at our official launch.