Posted by Billy Rutledge, Director of AIY Projects

Our teams are continually inspired by how Makers use Google technology to do crazy, cool new things: things we would never have imagined doing ourselves, and things that solve real-world problems. After talking to Maker community members, we learned that many were interested in using artificial intelligence in their projects but didn't know where to begin. To address this gap, we're launching AIY Projects: do-it-yourself artificial intelligence for Makers.

With AIY Projects, Makers can use artificial intelligence to make human-to-machine interaction more like human-to-human interaction. We'll be releasing a series of reference kits, starting with voice recognition. The speech recognition capability in our first project could be used to:

- Replace physical buttons and digital displays (those are so '90s) on household appliances and consumer electronics (imagine a coffee machine with no buttons or screen: just talk to it)

- Replace smartphone apps for controlling connected devices (those are so 2000s) (imagine a connected light bulb or thermostat: just talk to them)

- Add voice recognition to assistive robotics (e.g. for accessibility): just talk to the robot as a simplified programming interface, with requests like "tell me what's in this room" or "tell me when you see the mail carrier come to the door"

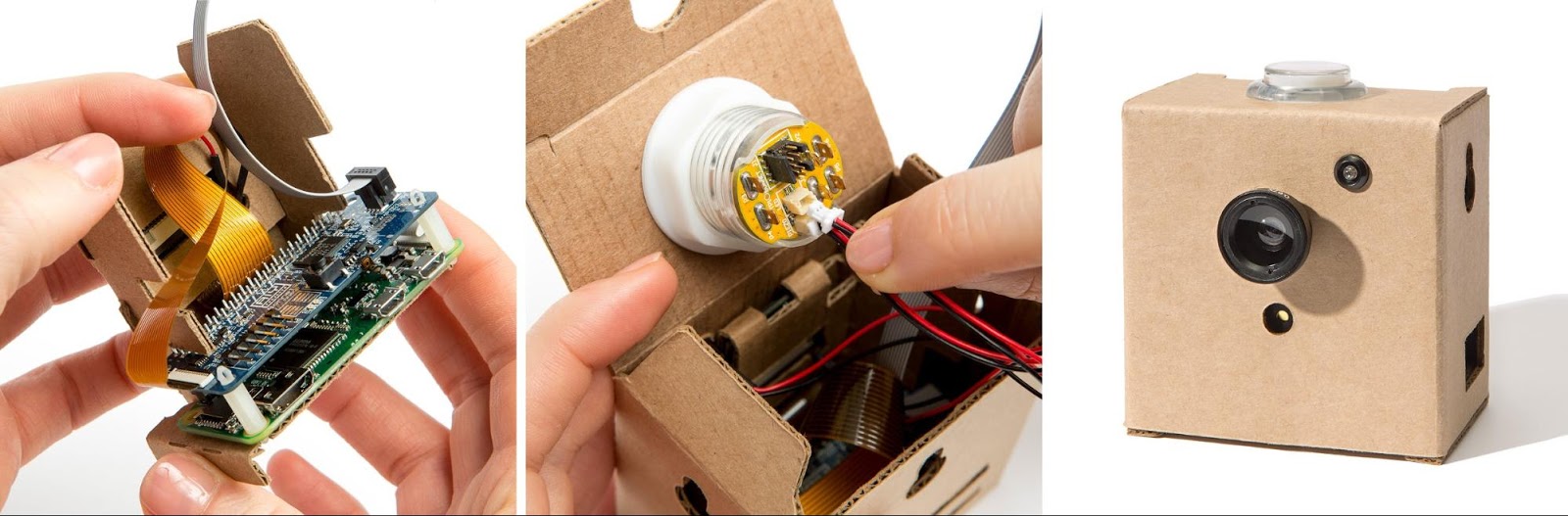

Fully assembled Voice Kit.

The first open source reference project is the Voice Kit: instructions to build a Voice User Interface (VUI) that can use cloud services (like the new Google Assistant SDK or Cloud Speech API) or run completely on-device. This project extends the functionality of the most popular single-board computer used for digital making: the Raspberry Pi.

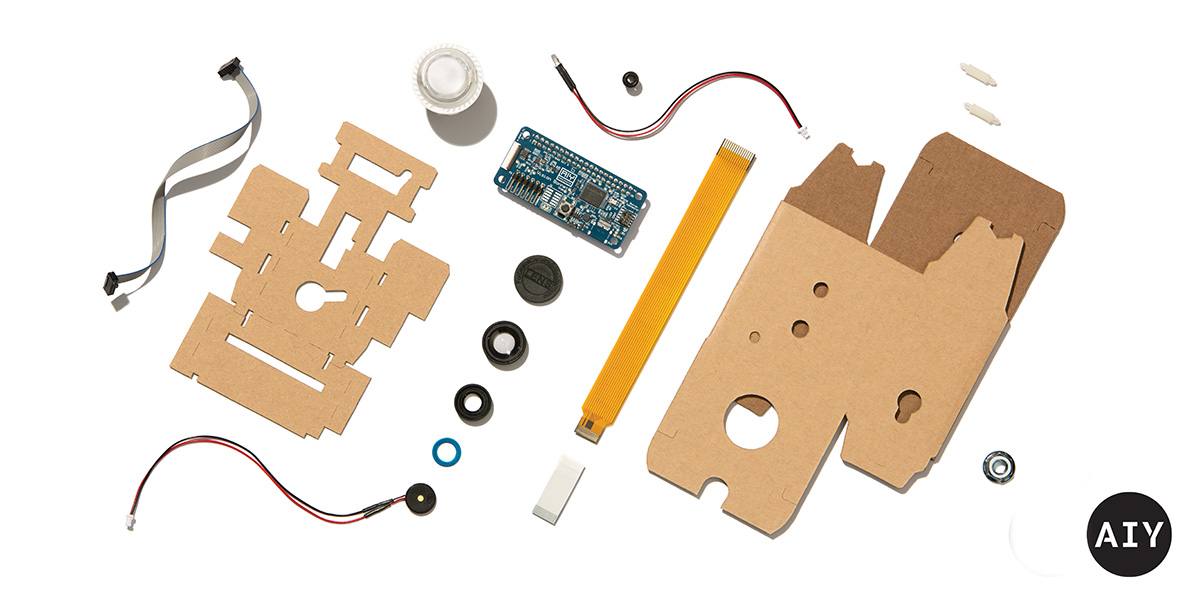

Everything that comes in the Voice Kit.

The included Voice Hardware Accessory on Top (HAT) contains hardware for audio capture and playback: easy-to-use connectors for the dual-mic daughter board and speaker, GPIO pins for connecting low-voltage components like micro-servos and sensors, and an optional barrel connector for a dedicated power supply. It was designed and tested with the Raspberry Pi 3 Model B.

Alternatively, developers can run Android Things on the Voice Kit with full functionality, making it easy to prototype Internet of Things devices and scale to full commercial products with several turnkey hardware solutions available (including Intel Edison, NXP Pico, and Raspberry Pi 3).

Download the latest Android Things developer preview to get started.

Close-up of the Voice HAT accessory board.

Making with the Google Assistant SDK

The Google Assistant SDK developer preview was released last week. It's enabled by default on the Voice Kit and brings the Google Assistant to your project, including voice control, natural language understanding, Google's smarts, and more.

In combination with the rest of the Voice Kit, we think the Google Assistant SDK will give you many creative opportunities to build fun and engaging projects. Makers have already started experimenting with the SDK, including building a mocktail maker.

The Voice Kit ships to all MagPi Magazine subscribers on May 4, 2017, and we've published a parts list, assembly instructions, source code, and suggested extensions on our website: aiyprojects.withgoogle.com. The complete kit is also for sale at over 500 Barnes & Noble stores nationwide, as well as at UK retailers WH Smith, Tesco, Sainsbury's, and Asda.

This is just the first AIY Project. There are more in the works, but we need to know how you'd like to incorporate AI into your own projects. Visit hackster.io to share your experiences and discuss future projects. Use #AIYprojects on social media to help us find your inventions. And if you happen to be at the San Mateo Maker Faire on May 19-21, 2017, stop by the Google pavilion to give us feedback.