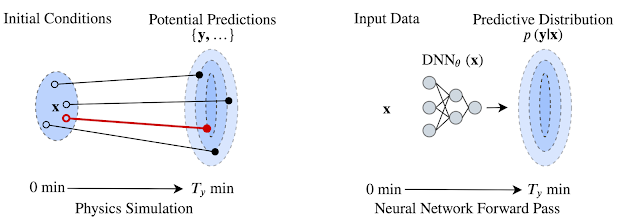

Predicting weather from minutes to weeks ahead with high accuracy is a fundamental scientific challenge that can have a wide-ranging impact on many aspects of society. Current forecasts employed by many meteorological agencies are based on physical models of the atmosphere that, despite improving substantially over the preceding decades, are inherently constrained by their computational requirements and are sensitive to approximations of the physical laws that govern them. An alternative approach to weather prediction that is able to overcome some of these constraints uses deep neural networks (DNNs): instead of encoding explicit physical laws, DNNs discover patterns in the data and learn complex transformations from inputs to the desired outputs using parallel computation on powerful specialized hardware such as GPUs and TPUs.

Building on our previous research into precipitation nowcasting, we present “MetNet: A Neural Weather Model for Precipitation Forecasting,” a DNN capable of predicting future precipitation at 1 km resolution at 2-minute intervals, up to 8 hours into the future. MetNet outperforms the current state-of-the-art physics-based model in use by NOAA for prediction times up to 7 to 8 hours ahead and makes a prediction over the entire US in a matter of seconds, as opposed to an hour. The inputs to the network are sourced automatically from radar stations and satellite networks without the need for human annotation. The model output is a probability distribution that we use to infer the most likely precipitation rates, with associated uncertainties, for each geographical region. The figure below provides an example of the network’s predictions over the continental United States.

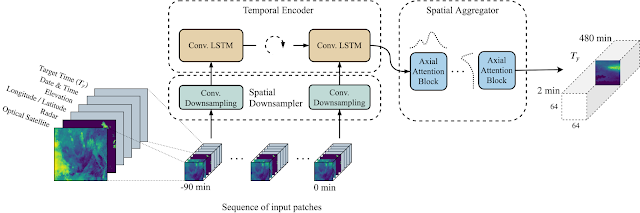

MetNet does not rely on explicit physical laws describing the dynamics of the atmosphere, but instead learns by backpropagation to forecast the weather directly from observed data. The network uses precipitation estimates derived from the ground-based radar stations that make up the Multi-Radar/Multi-Sensor System (MRMS), together with measurements from NOAA’s Geostationary Operational Environmental Satellite (GOES) system, which provides a top-down view of clouds in the atmosphere. Both data sources cover the continental US and provide image-like inputs that can be efficiently processed by the network.

The model is executed for every 64 km × 64 km square covering the entire US at 1 km resolution. However, the physical area covered by the input data for each of these output regions is much larger, since it must account for the possible motion of clouds and precipitation fields over the time period of the prediction. For example, assuming that clouds move at up to 60 km/h, making informed predictions that capture the temporal dynamics of the atmosphere up to 8 hours ahead requires 60 × 8 = 480 km of spatial context in all directions. Adding this margin on both sides of the 64 km patch gives 480 + 64 + 480 = 1024 km, so information from a 1024 km × 1024 km area is required for predictions made on the central 64 km × 64 km patch.
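The context arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, not MetNet's code: it assumes inputs on a 1 km grid (one array cell per km) and zero padding beyond the edge of the data, and the names `required_context_km` and `extract_context` are hypothetical.

```python
import numpy as np

def required_context_km(max_speed_kmh: float, lead_time_h: float, output_km: int) -> float:
    """Side length of the square input needed so that anything able to reach
    the output patch within the lead time is visible to the model."""
    margin = max_speed_kmh * lead_time_h  # farthest a cloud can travel
    return output_km + 2 * margin         # margin on both sides of the patch

def extract_context(field: np.ndarray, row: int, col: int,
                    output_size: int = 64, context_size: int = 1024) -> np.ndarray:
    """Crop a context_size x context_size window centred on the output patch
    whose top-left corner is (row, col); cells outside `field` are zero.
    Assumes a 1 km grid, so sizes in cells equal sizes in km (hypothetical)."""
    margin = (context_size - output_size) // 2
    padded = np.pad(field, margin, mode="constant")
    # Padded index (i, j) maps to original (i - margin, j - margin), so a window
    # starting at (row, col) in padded coordinates begins `margin` cells
    # above and to the left of the output patch.
    return padded[row:row + context_size, col:col + context_size]

print(required_context_km(60, 8, 64))  # 1024
```

Zero padding is only one way to handle patches near the coast or border; a real pipeline might instead mask missing data explicitly.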

Size of the input patch containing satellite and radar images (large, 1024 km × 1024 km square) and of the output predicted radar image (small, 64 km × 64 km square).

The output of this architecture is a discrete probability distribution estimating the probability of a given rate of precipitation for each square kilometer in the continental United States.
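To illustrate how such a discrete distribution can be turned into a most likely rate and an uncertainty estimate, here is a minimal NumPy sketch. The binning (512 bins of 0.2 mm/h) is an assumption made for illustration, and `softmax` and `summarize` are hypothetical helpers; the per-pixel logits would come from the network.

```python
import numpy as np

# Hypothetical binning (an assumption for illustration): 512 bins of 0.2 mm/h,
# covering rates from 0 to 102.4 mm/h.
BIN_WIDTH = 0.2
NUM_BINS = 512
bin_edges = np.arange(NUM_BINS + 1) * BIN_WIDTH
bin_centers = (bin_edges[:-1] + bin_edges[1:]) / 2

def softmax(logits: np.ndarray) -> np.ndarray:
    """Normalise per-pixel scores over the last (bin) axis into probabilities."""
    z = logits - logits.max(axis=-1, keepdims=True)  # subtract max for stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def summarize(probs: np.ndarray, threshold: float = 1.0):
    """Reduce a (..., NUM_BINS) distribution to per-pixel summaries."""
    most_likely = bin_centers[probs.argmax(axis=-1)]  # centre of the modal bin
    expected = (probs * bin_centers).sum(axis=-1)     # mean rate
    first_bin = int(round(threshold / BIN_WIDTH))     # threshold assumed to be a bin edge
    p_exceed = probs[..., first_bin:].sum(axis=-1)    # P(rate >= threshold)
    return most_likely, expected, p_exceed

# Usage with random logits standing in for one 64 x 64 km output patch.
logits = np.random.default_rng(0).normal(size=(64, 64, NUM_BINS))
mode, mean, p_rain = summarize(softmax(logits))
```

Keeping the full distribution, rather than a single predicted value, is what allows a per-pixel uncertainty such as the probability of exceeding a rain threshold to be read off directly.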

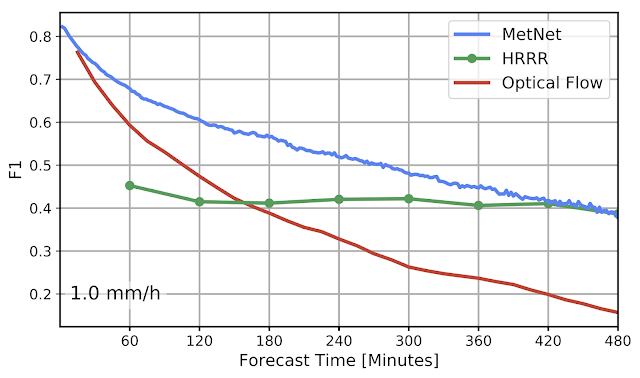

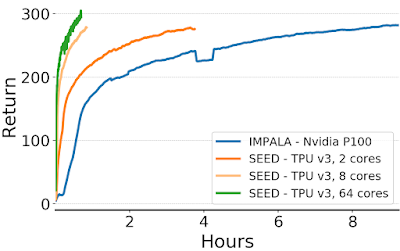

We evaluate MetNet on a precipitation rate forecasting benchmark and compare the results with two baselines: the NOAA High Resolution Rapid Refresh (HRRR) system, which is the physical weather forecasting model currently operational in the US, and a baseline model that estimates the motion of the precipitation field (i.e., optical flow), a method known to perform well for prediction times shorter than 2 hours.
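The idea behind a motion-extrapolation baseline can be sketched as follows. This is not the baseline used in the evaluation: true optical flow estimates a dense, per-pixel motion field, whereas this sketch swaps in a much simpler single global translation, found by exhaustive search over integer shifts between two consecutive radar frames and then applied repeatedly to the latest frame. All function names are hypothetical.

```python
import numpy as np

def shift(field: np.ndarray, dy: int, dx: int) -> np.ndarray:
    """Translate a 2-D field by (dy, dx) cells, filling vacated cells with zeros.
    Assumes |dy| < height and |dx| < width."""
    out = np.zeros_like(field)
    h, w = field.shape
    dst_y = slice(max(0, dy), h + min(0, dy))
    src_y = slice(max(0, -dy), h - max(0, dy))
    dst_x = slice(max(0, dx), w + min(0, dx))
    src_x = slice(max(0, -dx), w - max(0, dx))
    out[dst_y, dst_x] = field[src_y, src_x]
    return out

def estimate_motion(prev: np.ndarray, curr: np.ndarray, max_shift: int = 5):
    """Find the integer shift (dy, dx) that best maps `prev` onto `curr` (min MSE)."""
    best, best_err = (0, 0), np.inf
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            err = np.mean((shift(prev, dy, dx) - curr) ** 2)
            if err < best_err:
                best, best_err = (dy, dx), err
    return best

def extrapolate(prev: np.ndarray, curr: np.ndarray, steps: int, max_shift: int = 5):
    """Move the latest frame by the estimated per-step displacement, `steps` times."""
    dy, dx = estimate_motion(prev, curr, max_shift)
    return [shift(curr, k * dy, k * dx) for k in range(1, steps + 1)]
```

Because such a method only moves existing precipitation and never creates or dissipates it, its skill naturally drops as the lead time grows, which is consistent with it performing well only at short horizons.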

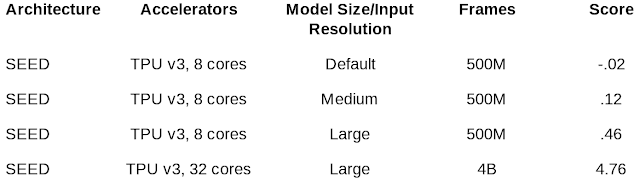

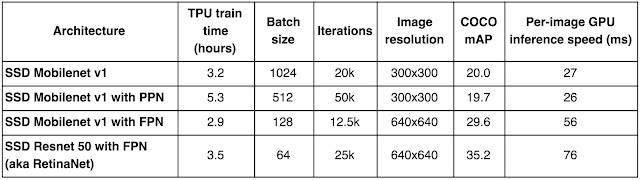

A significant advantage of our neural weather model is that it is optimized for dense and parallel computation and well suited for running on specialty hardware (e.g., TPUs). This allows predictions to be made in parallel in a matter of seconds, whether it is for a specific location like New York City or for the entire US, whereas physical models such as HRRR have a runtime of about an hour on a supercomputer.
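Because each 64 km output patch is predicted independently from its own context window, a forecast for the whole country reduces to many identical model calls that can be grouped into batches. The sketch below shows that tiling and batching pattern under stated assumptions: `model` is a hypothetical callable mapping a batch of shape `(N, context, context)` to `(N, out, out)`, inputs are on a 1 km grid, and the batch size is arbitrary.

```python
import numpy as np

def predict_grid(field: np.ndarray, model, out: int = 64, context: int = 1024,
                 batch_size: int = 16) -> np.ndarray:
    """Run a (hypothetical) patch model over every out x out tile of `field`."""
    h, w = field.shape
    margin = (context - out) // 2
    # Round the grid up to whole tiles, then add the context margin on every side.
    H = -(-h // out) * out
    W = -(-w // out) * out
    padded = np.pad(field, ((margin, margin + H - h), (margin, margin + W - w)))
    origins = [(r, c) for r in range(0, H, out) for c in range(0, W, out)]
    result = np.zeros((H, W), dtype=float)
    for start in range(0, len(origins), batch_size):
        chunk = origins[start:start + batch_size]
        # In padded coordinates, the context window of the tile at (r, c) starts at (r, c).
        batch = np.stack([padded[r:r + context, c:c + context] for r, c in chunk])
        preds = model(batch)  # one call per batch: the part accelerators parallelize
        for (r, c), p in zip(chunk, preds):
            result[r:r + out, c:c + out] = p
    return result[:h, :w]  # drop the tiles' overhang beyond the original grid
```

A single location, such as a city, is just the special case of one tile, which is why the cost of a regional forecast and of a nationwide one differ only in the number of batches.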

We quantify the difference in performance between MetNet, HRRR, and the optical flow baseline model in the accompanying plot. Here, we show the performance achieved by the three models, evaluated using the F1-score at a precipitation rate threshold of 1.0 mm/h, which corresponds to light rain. The MetNet neural weather model outperforms the NOAA HRRR system at lead times shorter than 8 hours and is consistently better than the flow-based model.
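For readers unfamiliar with the metric, the F1-score at a rate threshold treats each pixel as a binary "rain / no rain" event and takes the harmonic mean of precision and recall. The sketch below is a minimal version under assumptions not stated in the text: the threshold is inclusive, scores are pooled over all pixels, and the forecast has already been converted to a rate (for a probabilistic model, one would first decide how to threshold the predicted distribution). `f1_at_threshold` is a hypothetical name.

```python
import numpy as np

def f1_at_threshold(pred_rate, obs_rate, threshold: float = 1.0) -> float:
    """F1-score of the binary event 'rate >= threshold' (mm/h), pooled over all pixels."""
    pred = np.asarray(pred_rate) >= threshold
    obs = np.asarray(obs_rate) >= threshold
    tp = np.sum(pred & obs)    # rain predicted and observed
    fp = np.sum(pred & ~obs)   # rain predicted, not observed
    fn = np.sum(~pred & obs)   # rain observed, not predicted
    denom = 2 * tp + fp + fn
    # F1 = 2PR / (P + R) = 2TP / (2TP + FP + FN); defined here as 0 when there are no events.
    return float(2 * tp / denom) if denom > 0 else 0.0

# One hit, one false alarm, one miss: precision = recall = 0.5.
print(f1_at_threshold([0.0, 2.0, 2.0, 0.5], [0.0, 2.0, 0.0, 3.0]))  # 0.5
```

Computing this score separately for each lead time produces a curve of skill versus forecast horizon, which is how the three models can be compared.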

Future Directions

We are actively researching how to improve global weather forecasting, especially in regions where the impacts of rapid climate change are most profound. While we demonstrate the present MetNet model for the continental US, it could be extended to cover any region for which adequate radar and optical satellite data are available. The work presented here is a small stepping stone in this effort that we hope leads to even greater improvements through future collaboration with the meteorological community.

Acknowledgements

This project was done in collaboration with Lasse Espeholt, Jonathan Heek, Mostafa Dehghani, Avital Oliver, Tim Salimans, Shreya Agrawal and Jason Hickey. We would also like to thank Manoj Kumar, Wendy Shang, Dick Weissenborn, Cenk Gazen, John Burge, Stephen Hoyer, Lak Lakshmanan, Rob Carver, Carla Bromberg and Aaron Bell for useful discussions and Tom Small for help with the visualizations.