Machine learning (ML) has seen tremendous successes recently, which were made possible by ML algorithms like deep neural networks that were discovered through years of expert research. The difficulty involved in this research fueled AutoML, a field that aims to automate the design of ML algorithms. So far, AutoML has focused on constructing solutions by combining sophisticated hand-designed components. A typical example is neural architecture search, a heavily researched subfield in which neural networks are built automatically out of complex layers (e.g., convolutions, batch-norm, and dropout).

An alternative to using these hand-designed components in AutoML is to search for entire algorithms from scratch. This is challenging because it requires exploring vast and sparse search spaces, yet it has great potential benefits: it is not biased toward what we already know, and it potentially allows for the discovery of new and better ML architectures. By analogy, building a house from scratch leaves more room for flexibility or improvement than constructing one using only prefabricated rooms. However, discovering such housing designs may be more difficult because there are many more ways to combine bricks and mortar than there are to combine pre-made designs of entire rooms. As such, early research into learning algorithms from scratch focused on a single aspect of the algorithm, such as the learning rule, to reduce the search space and the compute required, and it has not been revisited much since the early 90s. Until now.

Extending our research into evolutionary AutoML, our recent paper, to be published at ICML 2020, demonstrates that it is possible to successfully evolve ML algorithms from scratch. The approach we propose, called AutoML-Zero, starts from empty programs and, using only basic mathematical operations as building blocks, applies evolutionary methods to automatically find the code for complete ML algorithms. Given small image classification problems, our method rediscovered fundamental ML techniques, such as 2-layer neural networks with backpropagation, linear regression and the like, which have been invented by researchers throughout the years. This result demonstrates the plausibility of automatically discovering more novel ML algorithms to address harder problems in the future.

Evolving Learning Algorithms from Scratch

We use a variant of classic evolutionary methods to search the space of algorithms. These methods have proved useful in discovering computer programs since the 80s. Their simplicity and scalability make them especially suitable for the discovery of learning algorithms.

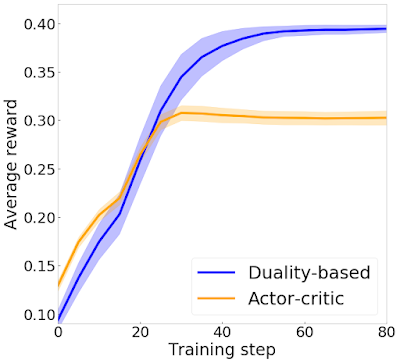

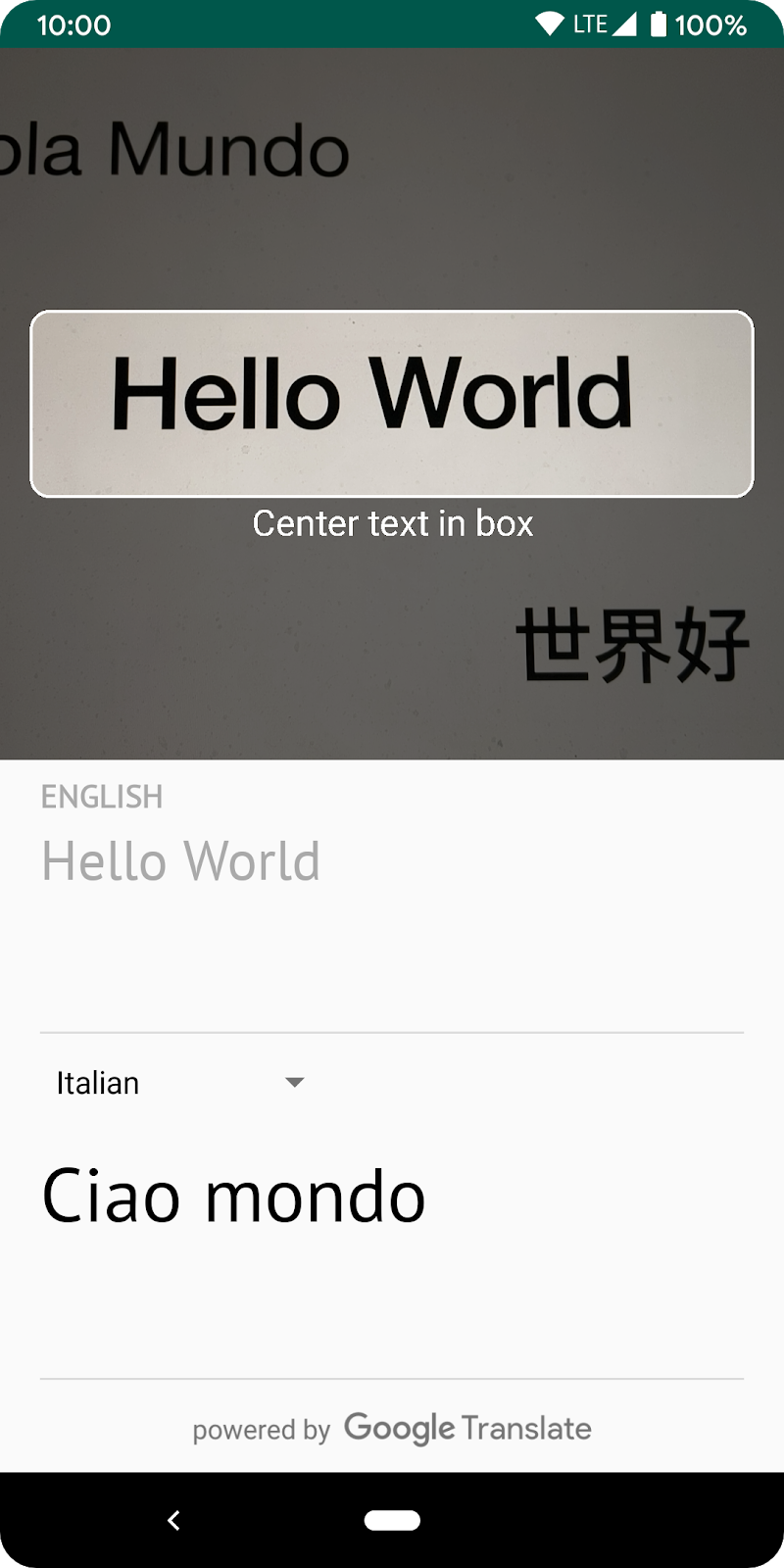

In our case, a population is initialized with empty programs. It then evolves in repeating cycles to produce better and better learning algorithms. At each cycle, two (or more) random models compete and the most accurate model gets to be a parent. The parent clones itself to produce a child, which gets mutated. That is, the child’s code is modified in a random way, which could mean, for example, arbitrarily inserting, removing or modifying a line in the code. The mutated algorithm is then evaluated on image classification tasks.
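The cycle described above can be sketched in a few lines of Python. This is a minimal toy illustration, not the AutoML-Zero implementation: the instruction set `OPS`, the scalar register, the regression-style `fitness` (standing in for accuracy on image classification tasks), and the choice to discard the oldest individual so the population size stays fixed are all assumptions made for the example.

```python
import random
from collections import deque

# Hypothetical toy instruction set: each instruction updates one scalar register.
OPS = {
    "add_x": lambda acc, x: acc + x,
    "add_1": lambda acc, x: acc + 1.0,
    "mul_x": lambda acc, x: acc * x,
    "neg": lambda acc, x: -acc,
}

def run(program, x):
    """Execute a program (a list of instruction names) on input x."""
    acc = 0.0
    for op in program:
        acc = OPS[op](acc, x)
    return acc

def fitness(program):
    """Toy stand-in for accuracy: negative squared error against x*x + x."""
    xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
    return -sum((run(program, x) - (x * x + x)) ** 2 for x in xs)

def mutate(program, rng, max_len=8):
    """Clone the parent, then randomly insert, remove, or modify one instruction."""
    child = list(program)
    choice = rng.choice(["insert", "remove", "modify"])
    if choice == "insert" and len(child) < max_len:
        child.insert(rng.randrange(len(child) + 1), rng.choice(list(OPS)))
    elif choice == "remove" and child:
        del child[rng.randrange(len(child))]
    elif choice == "modify" and child:
        child[rng.randrange(len(child))] = rng.choice(list(OPS))
    # If the chosen mutation does not apply, the child is an unchanged clone.
    return child

def evolve(cycles=2000, population_size=50, tournament_size=2, seed=0):
    rng = random.Random(seed)
    # The population starts with empty programs.
    population = deque(([], fitness([])) for _ in range(population_size))
    best = population[0]
    for _ in range(cycles):
        # Random individuals compete; the fittest becomes the parent.
        contestants = rng.sample(list(population), tournament_size)
        parent = max(contestants, key=lambda ind: ind[1])
        child = mutate(parent[0], rng)
        individual = (child, fitness(child))
        population.append(individual)
        population.popleft()  # assumption: the oldest individual is discarded
        if individual[1] > best[1]:
            best = individual
    return best

if __name__ == "__main__":
    program, score = evolve()
    print(program, score)
```

In this toy setting a perfect program exists (`["add_x", "add_1", "mul_x"]` computes `x*x + x`), but whether a given run finds it depends on the seed and the number of cycles.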

Our AutoML-Zero setup, in contrast to much previous AutoML work, makes the search space very sparse: an accurate algorithm might be as rare as 1 in 10^12 candidates. This is due to the granularity of the building blocks provided to the algorithm, which include only basic operations such as variable assignment, addition, and matrix multiplication. In such an environment, a random search will not find a solution in a reasonable amount of time, yet evolution can be tens of thousands of times faster, according to our measurements. We distributed the search on multiple machines that occasionally exchange algorithms (analogous to migration in real life). We also constructed small proxy classification tasks on which to evaluate each child algorithm, and executed this evaluation with highly optimized code.
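To get a feel for what this sparsity means for random search, the short calculation below estimates the chance of drawing at least one accurate algorithm. It is a back-of-the-envelope sketch: it takes the hit rate of roughly one in a trillion (10^12) from the text, assumes candidates are sampled independently and uniformly, and uses sample counts that are purely illustrative.

```python
import math

def prob_at_least_one_hit(hit_rate, num_samples):
    """Probability that independent random sampling finds at least one hit.

    Computes 1 - (1 - p)^N using log1p/expm1, which stays accurate
    when p is tiny and N is huge.
    """
    return -math.expm1(num_samples * math.log1p(-hit_rate))

HIT_RATE = 1e-12  # "1 in 10^12" from the text

if __name__ == "__main__":
    # Illustrative sample counts, not figures from the experiments.
    for num_samples in (1e6, 1e9, 1e12):
        p = prob_at_least_one_hit(HIT_RATE, num_samples)
        print(f"{num_samples:.0e} random candidates -> P(success) = {p:.6f}")
```

Even after as many random candidates as the inverse of the hit rate, the success probability is only about 1 - 1/e, which is why a search method that reuses partial progress matters so much here.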

Despite the sparsity, the evolutionary search discovers more complex and effective techniques as time passes. Initially, the simplest algorithms appear, which represent linear models with hard-coded weights. In time, stochastic gradient descent (SGD) is invented to learn the weights, in spite of the gradient itself not having been provided as a building block. Though flawed at first, SGD gets fixed relatively quickly, starting a series of improvements to the prediction and learning algorithm. Within our toy scenario, the process discovers several concepts known to have been useful to the research community. In the end, our approach manages to construct a model that outperforms hand-designs of comparable complexity.

Figure: Progress of an evolution experiment. As time passes, from left to right, we see the algorithms becoming more complex and more accurate.

The figure above includes the best evolved algorithm produced by our method. This final algorithm includes techniques such as noise injection as data augmentation, a bilinear model, gradient normalization, and weight averaging, and its improvement over the baseline also transfers to datasets that were not used during search. Our paper describes how the different lines in the evolved code implement each of these techniques, and verifies their value through ablation studies.

Through more experiments, we show that it is possible to guide the evolutionary search by controlling "the habitat" — i.e., the tasks on which the evolutionary process evaluates the fitness of the algorithms. For example, when we reduce the amount of data, the noisy ReLU emerges, which helps with regularization. Or when we reduce the number of training steps, we witness the emergence of learning rate decay, which enables faster convergence. Targeted discoveries such as these are important — while it may be interesting if an automatic tool-inventing machine comes up with a hammer or a needle, it is much more interesting if it comes up with a hammer when you show it some nails and a needle when you show it some thread. By analogy, in our work the noisy ReLU ("hammer") is discovered when in the presence of little data ("nails") and the learning rate decay when in the presence of few training steps.
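For readers unfamiliar with the two techniques named above, here is a minimal sketch of each. These are common textbook forms, not necessarily the exact expressions the search produced: the Gaussian noise, the exponential decay schedule, and all parameter names are assumptions made for illustration.

```python
import random

def noisy_relu(x, noise_std, rng):
    """ReLU applied to a noise-perturbed input: max(0, x + noise).

    The injected noise acts as a regularizer, which can help when
    training data is scarce.
    """
    return max(0.0, x + rng.gauss(0.0, noise_std))

def decayed_learning_rate(initial_lr, decay, step):
    """Exponential learning rate decay: lr_t = lr_0 * decay^t."""
    return initial_lr * decay ** step

if __name__ == "__main__":
    rng = random.Random(0)
    print([noisy_relu(0.5, 0.1, rng) for _ in range(3)])
    print([decayed_learning_rate(0.1, 0.9, t) for t in range(5)])
```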

Conclusion

We consider this to be preliminary work. We have yet to evolve fundamentally new algorithms, but it is encouraging that the evolved algorithm can surpass simple neural networks that exist within the search space. Right now, the search process requires significant compute.* As the coming years scale up available hardware and as the search methods become more efficient, it is likely that the search space will become more inclusive and the results will improve. We are excited at the prospects of discovering novel machine learning algorithms as we further our understanding of AutoML-Zero.

Acknowledgements

We want to thank our co-authors, David R. So and Quoc V. Le, and the many who helped us through discussions during the project and paper writing, including Samy Bengio, Vincent Vanhoucke, Doug Eck, Charles Sutton, Yanping Huang, Jacques Pienaar, Jeff Dean, and particularly Gabriel Bender, Hanxiao Liu, Rishabh Singh, Chiyuan Zhang, and Hieu Pham. We also want to especially thank Tom Small for contributing the animations in this post.

* The electricity consumption for the experiments (run in 2019) was matched with the purchase of renewable energy.