LLMs are increasingly popular for reasoning tasks, such as multi-turn QA, task completion, code generation, or mathematics. Yet much like people, they do not always solve problems correctly on the first try, especially on tasks for which they were not trained. Therefore, for such systems to be most useful, they should be able to 1) identify where their reasoning went wrong and 2) backtrack to find another solution.

This has led to a surge in methods related to self-correction, where an LLM is used to identify problems in its own output, and then produce improved results based on the feedback. Self-correction is generally thought of as a single process, but we decided to break it down into two components, mistake finding and output correction.

In “LLMs cannot find reasoning errors, but can correct them!”, we test state-of-the-art LLMs on mistake finding and output correction separately. We present BIG-Bench Mistake, an evaluation benchmark dataset for mistake identification, which we use to address the following questions:

- Can LLMs find logical mistakes in Chain-of-Thought (CoT) style reasoning?

- Can mistake-finding be used as a proxy for correctness?

- Knowing where the mistake is, can LLMs then be prompted to backtrack and arrive at the correct answer?

- Can mistake finding as a skill generalize to tasks the LLMs have never seen?

About our dataset

Mistake finding is an underexplored problem in natural language processing, with a particular lack of evaluation tasks in this domain. To best assess the ability of LLMs to find mistakes, evaluation tasks should exhibit mistakes that are non-ambiguous. To our knowledge, most current mistake-finding datasets do not go beyond the realm of mathematics for this reason.

To assess the ability of LLMs to reason about mistakes outside of the math domain, we produce a new dataset for use by the research community, called BIG-Bench Mistake. This dataset consists of Chain-of-Thought traces generated using PaLM 2 on five tasks in BIG-Bench. Each trace is annotated with the location of the first logical mistake.

To maximize the number of mistakes in our dataset, we sample 255 traces where the answer is incorrect (so we know there is definitely a mistake), and 45 traces where the answer is correct (so there may or may not be a mistake). We then ask human labelers to go through each trace and identify the first mistake step. Each trace has been annotated by at least three labelers, whose answers had inter-rater reliability levels of >0.98 (using Krippendorff’s α). Human labeling was done for all tasks except the Dyck Languages task, which involves predicting the sequence of closing parentheses for a given input sequence; we labeled this task algorithmically.

The logical errors made in this dataset are simple and unambiguous, providing a good benchmark for testing whether LLMs can find their own mistakes before they are applied to harder, more ambiguous tasks.

Core questions about mistake identification

1. Can LLMs find logical mistakes in Chain-of-Thought style reasoning?

First, we want to find out if LLMs can identify mistakes independently of their ability to correct them. We test GPT series models with multiple prompting methods to see how well they locate mistakes (prompts here), under the assumption that these models are generally representative of modern LLM performance.

Generally, we found these state-of-the-art models perform poorly, with the best model achieving 52.9% accuracy overall. Hence, there is a need to improve LLMs’ ability in this area of reasoning.

In our experiments, we try three different prompting methods: direct (trace), direct (step) and CoT (step). In direct (trace), we provide the LLM with the trace and ask for the location step of the mistake or no mistake. In direct (step), we prompt the LLM to ask itself this question for each step it takes. In CoT (step), we prompt the LLM to give its reasoning for whether each step is a mistake or not a mistake.
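
The three methods can be sketched roughly as follows. This is a minimal illustration and not the prompts used in our experiments: the prompt wording, the `ask` callable that stands in for an LLM call, and the helper names `format_trace`, `direct_trace` and `per_step` are all assumptions made for the example.

```python
from typing import Callable, List, Optional

# `ask` is a hypothetical stand-in for an LLM call: prompt text in, reply text out.
Ask = Callable[[str], str]


def format_trace(steps: List[str]) -> str:
    """Render a CoT trace as numbered steps (1-based)."""
    return "\n".join(f"Step {i}: {s}" for i, s in enumerate(steps, start=1))


def direct_trace(ask: Ask, steps: List[str]) -> Optional[int]:
    """Direct (trace): one call over the whole trace.

    Returns the 1-based mistake step, or None for 'no mistake'.
    """
    prompt = (
        format_trace(steps)
        + "\nWhich step contains the first mistake? "
        + "Answer with a step number or 'no mistake'."
    )
    reply = ask(prompt).strip().lower()
    return int(reply) if reply.isdigit() else None


def per_step(ask: Ask, steps: List[str], with_reasoning: bool) -> Optional[int]:
    """Direct (step) when with_reasoning is False, CoT (step) when True."""
    for i in range(1, len(steps) + 1):
        # Show the trace up to and including step i, then ask about step i.
        prompt = format_trace(steps[:i]) + f"\nIs step {i} a mistake?"
        if with_reasoning:
            prompt += " Explain your reasoning, then finish with 'yes' or 'no'."
        else:
            prompt += " Answer 'yes' or 'no'."
        reply = ask(prompt).strip().lower().rstrip(".")
        if reply.endswith("yes"):
            return i  # first step flagged as a mistake
    return None
```

The only difference between the two per-step variants in this sketch is whether the model is asked to reason before giving its verdict.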

Figure: A diagram showing the three prompting methods direct (trace), direct (step) and CoT (step).

Our finding is in line with and builds upon prior results, but goes further in showing that LLMs struggle with even simple and unambiguous mistakes (for comparison, our human raters without prior expertise solve the problem with a high degree of agreement). We hypothesize that this is a big reason why LLMs are unable to self-correct reasoning errors. See the paper for the full results.

2. Can mistake-finding be used as a proxy for correctness of the answer?

When we are confronted with a problem where we are unsure of the answer, we can work through our solution step by step. If no error is found, we can assume that we did the right thing.

While we hypothesized that this would work similarly for LLMs, we discovered that this is a poor strategy. On our dataset of 85% incorrect traces and 15% correct traces, using this method is not much better than the naïve strategy of always labeling traces as incorrect, which gives a weighted average F1 of 78.
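
The baseline figure can be reproduced with a short calculation. The sketch below assumes the 255 incorrect and 45 correct traces from the sampling described earlier, and that the weighted average weights each class's F1 by its number of traces; the function names are ours, chosen for illustration.

```python
def f1(tp: int, fp: int, fn: int) -> float:
    """F1 score from true positives, false positives and false negatives."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def weighted_f1_always_incorrect(n_incorrect: int, n_correct: int) -> float:
    """Weighted-average F1 when every trace is labeled 'incorrect'."""
    total = n_incorrect + n_correct
    # Class "incorrect": every incorrect trace is a true positive,
    # every correct trace is a false positive.
    f1_incorrect = f1(tp=n_incorrect, fp=n_correct, fn=0)
    # Class "correct": never predicted, so every correct trace is a false negative.
    f1_correct = f1(tp=0, fp=0, fn=n_correct)
    # Weight each class by its share of the traces.
    return (n_incorrect * f1_incorrect + n_correct * f1_correct) / total


score = weighted_f1_always_incorrect(n_incorrect=255, n_correct=45)
print(round(100 * score, 1))  # prints 78.1
```

The "incorrect" class reaches an F1 of about 0.92 (precision 0.85, recall 1), the "correct" class scores 0, and weighting by class size gives roughly 78.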

Figure: A diagram showing how well mistake-finding with LLMs can be used as a proxy for correctness of the answer on each dataset.

3. Can LLMs backtrack knowing where the error is?

Since we’ve shown that LLMs exhibit poor performance in finding reasoning errors in CoT traces, we want to know whether LLMs can correct errors at all, even when they know where the error is.

Note that knowing the mistake location is different from knowing the right answer: CoT traces can contain logical mistakes even if the final answer is correct, or vice versa. In most real-world situations, we won’t know what the right answer is, but we might be able to identify logical errors in intermediate steps.

We propose the following backtracking method:

- Generate CoT traces as usual, at temperature = 0. (Temperature is a parameter that controls the randomness of generated responses, with higher values producing more diverse and creative outputs, usually at the expense of quality.)

- Identify the location of the first logical mistake (for example, with a classifier; here we just use the labels from our dataset).

- Re-generate the mistake step at temperature = 1 and produce a set of eight outputs. Since the original output is known to lead to incorrect results, the goal is to find an alternative generation at this step that is significantly different from the original.

- From these eight outputs, select one that is different from the original mistake step. (We just use exact matching here, but in the future this can be something more sophisticated.)

- Using the new step, generate the rest of the trace as normal at temperature = 0.
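
The steps above can be sketched as follows. This is a simplified illustration under stated assumptions rather than our actual implementation: `next_step` is a hypothetical generator interface that returns one reasoning step at a time (or `None` when the trace is finished), and the choices to take the first differing sample and to keep the original trace when no sample differs are ours.

```python
from typing import Callable, List, Optional

# Hypothetical generator interface: (question, steps so far, temperature)
# -> next reasoning step, or None once the trace is complete.
NextStep = Callable[[str, List[str], float], Optional[str]]


def complete_trace(next_step: NextStep, question: str, prefix: List[str],
                   max_steps: int = 50) -> List[str]:
    """Extend `prefix` at temperature 0 until the generator signals the end."""
    steps = list(prefix)
    while len(steps) < max_steps:
        step = next_step(question, steps, 0.0)
        if step is None:
            break
        steps.append(step)
    return steps


def backtrack(next_step: NextStep, question: str, trace: List[str],
              mistake_index: int, num_samples: int = 8) -> List[str]:
    """Re-sample the step at `mistake_index` (0-based) and regenerate what follows."""
    prefix = trace[:mistake_index]
    original = trace[mistake_index]
    # Sample alternatives for the mistake step at temperature 1.
    candidates = [next_step(question, prefix, 1.0) for _ in range(num_samples)]
    # Keep the first sample that differs from the original step (exact match).
    replacement = next(
        (c for c in candidates if c is not None and c != original), None
    )
    if replacement is None:
        # Assumption: if no sample differs, fall back to the original trace.
        return trace
    # Continue from the new step at temperature 0.
    return complete_trace(next_step, question, prefix + [replacement])
```

Only the steps from the mistake onward are regenerated; everything before `mistake_index` is reused unchanged.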

It’s a very simple method that does not require any additional prompt crafting and avoids having to re-generate the entire trace. We test it using the mistake location data from BIG-Bench Mistake, and we find that it can correct CoT errors.

Recent work showed that self-correction methods, like Reflexion and RCI, cause deterioration in accuracy scores because more correct answers become incorrect than vice versa. Our method, on the other hand, produces more gains (by correcting wrong answers) than losses (by changing right answers to wrong answers).

We also compare our method with a random baseline, where we randomly assume a step to be a mistake. Our results show that this random baseline does produce some gains, but not as much as backtracking with the correct mistake location, and with more losses.
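
One simple way to tally gains and losses, and to pick the step for the random baseline, is sketched below. The function names and the use of raw counts are assumptions for illustration; the paper defines the exact metrics we report.

```python
import random
from typing import List, Tuple


def gains_and_losses(before: List[bool], after: List[bool]) -> Tuple[int, int]:
    """Count answers that flipped wrong->right (gains) and right->wrong (losses).

    `before[i]` and `after[i]` say whether trace i had the correct final
    answer before and after backtracking.
    """
    if len(before) != len(after):
        raise ValueError("before and after must cover the same traces")
    gains = sum(1 for b, a in zip(before, after) if not b and a)
    losses = sum(1 for b, a in zip(before, after) if b and not a)
    return gains, losses


def random_mistake_index(trace: List[str], rng: random.Random) -> int:
    """Random baseline: treat a uniformly chosen step (0-based) as the mistake."""
    return rng.randrange(len(trace))
```

A method helps overall when its gains outnumber its losses on the same set of traces.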

Figure: A diagram showing the gains and losses in accuracy for our method as well as a random baseline on each dataset.

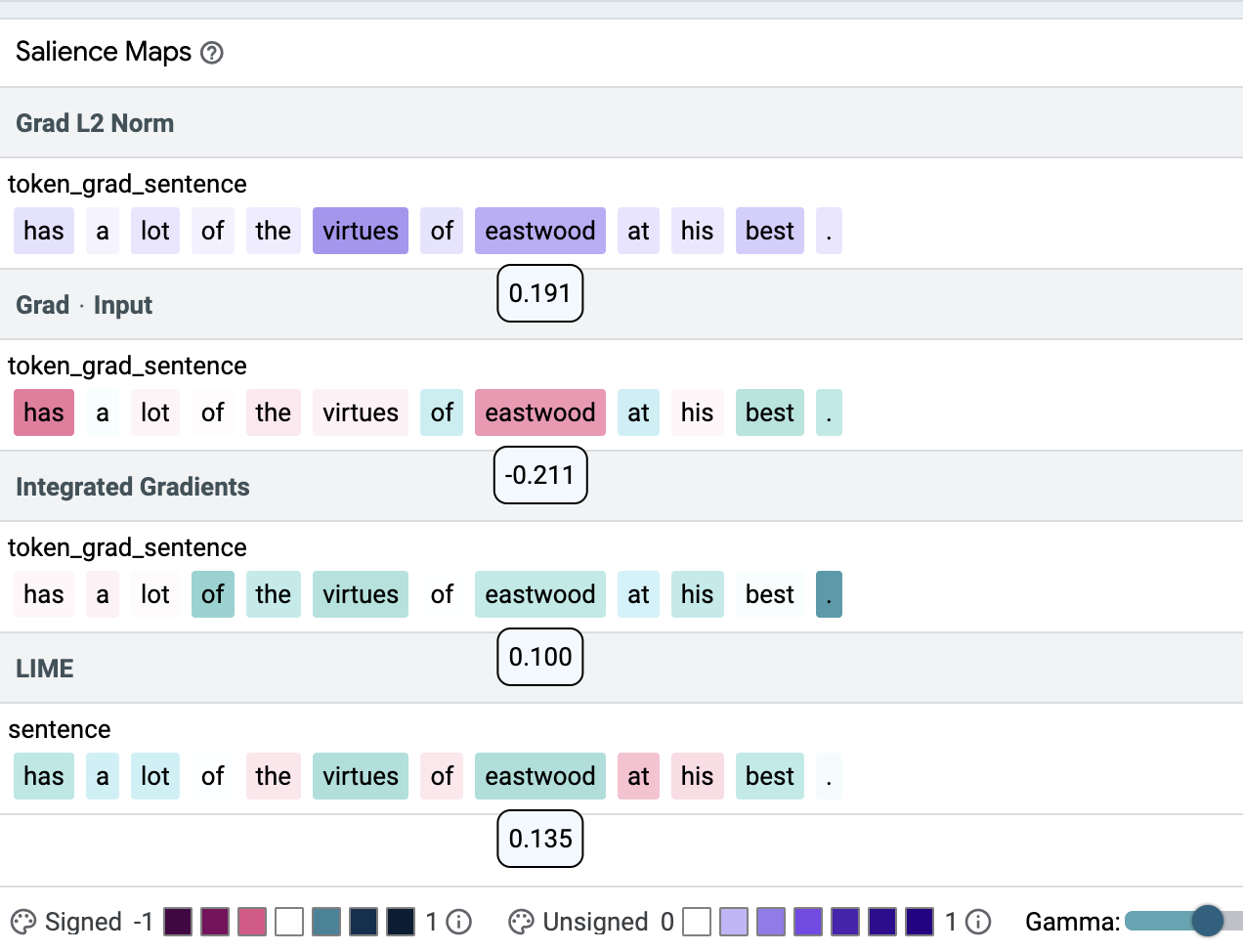

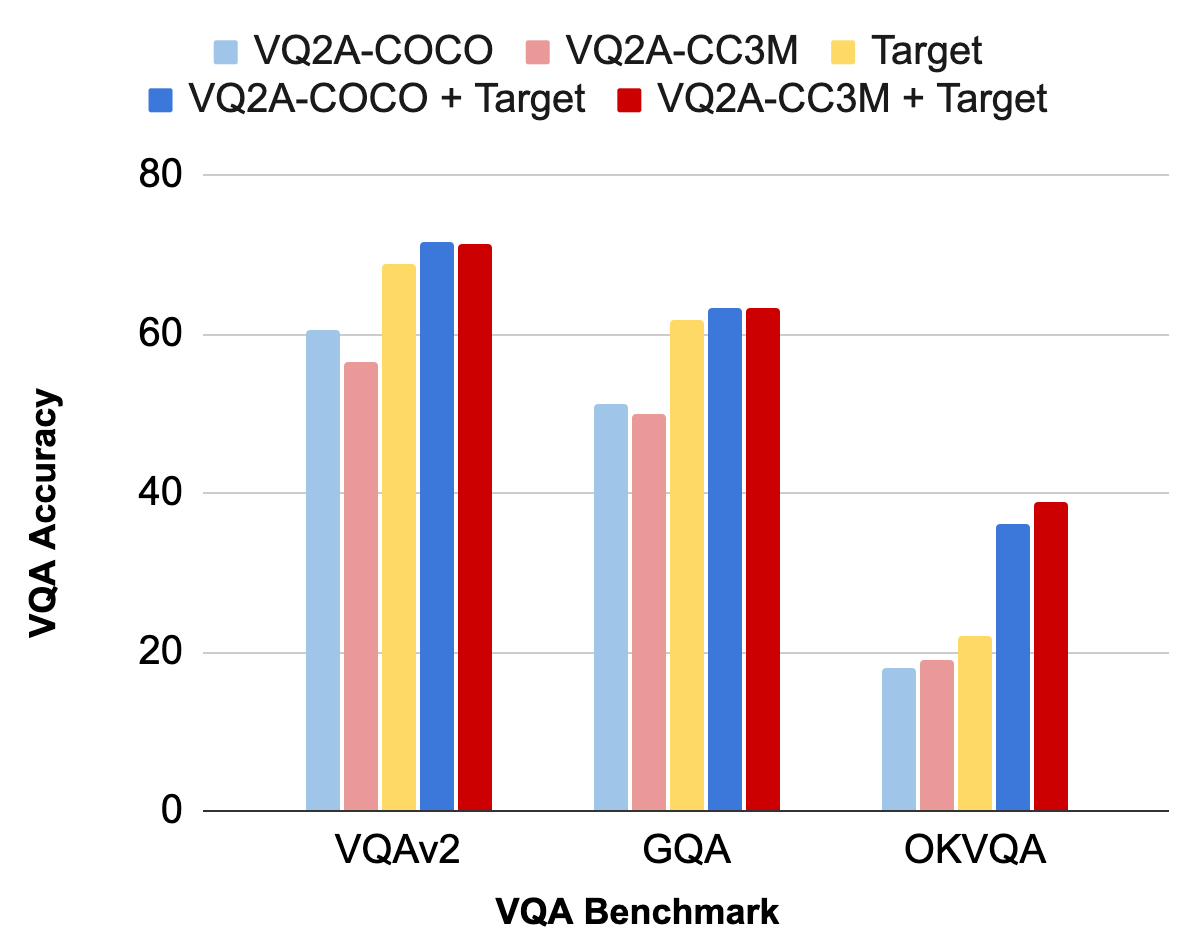

4. Can mistake finding generalize to tasks the LLMs have never seen?

To answer this question, we fine-tune a small model on four of the BIG-Bench tasks and test it on the fifth, held-out task. We do this for every task, producing five fine-tuned models in total. Then we compare the results with just zero-shot prompting PaLM 2-L-Unicorn, a much larger model.
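
The held-out setup amounts to a leave-one-task-out split, which can be sketched as below. The helper name is ours, and the task list is partly a placeholder: only Dyck languages and logical deduction are named in this post, so the remaining three identifiers are hypothetical.

```python
from typing import List, Tuple


def held_out_splits(tasks: List[str]) -> List[Tuple[List[str], str]]:
    """Pair each task (used for testing) with all other tasks (used for fine-tuning)."""
    return [
        ([t for t in tasks if t != held_out], held_out)
        for held_out in tasks
    ]


# "task_c", "task_d" and "task_e" are placeholders, not real task names.
tasks = ["dyck_languages", "logical_deduction", "task_c", "task_d", "task_e"]

for train_tasks, test_task in held_out_splits(tasks):
    print(f"fine-tune on {train_tasks}, test on {test_task}")
```

With five tasks this yields five splits, one fine-tuned model per split, and each model is evaluated only on the task it never saw.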

Figure: Bar chart showing the accuracy improvement of the fine-tuned small model compared to zero-shot prompting with PaLM 2-L-Unicorn.

Our results show that the much smaller fine-tuned reward model generally performs better than zero-shot prompting a large model, even though the reward model has never seen data from the task in the test set. The only exception is logical deduction, where it performs on par with zero-shot prompting.

This is a very promising result as we can potentially just use a small fine-tuned reward model to perform backtracking and improve accuracy on any task, even if we don’t have the data for it. This smaller reward model is completely independent of the generator LLM, and can be updated and further fine-tuned for individual use cases.

Figure: An illustration showing how our backtracking method works.

Conclusion

In this work, we created an evaluation benchmark dataset that the wider academic community can use to evaluate future LLMs. We further showed that LLMs currently struggle to find logical errors. However, when the mistake location is known, backtracking is an effective strategy that provides gains on tasks. Finally, a smaller reward model can be trained on general mistake-finding tasks and used to improve out-of-domain mistake finding, showing that mistake finding can generalize.

Acknowledgements

Thank you to Peter Chen, Tony Mak, Hassan Mansoor and Victor Cărbune for contributing ideas and helping with the experiments and data collection. We would also like to thank Sian Gooding and Vicky Zayats for their comments and suggestions on the paper.