Posted by Gabriel Rubinsky, Senior Product Manager

Today, we’re excited to announce that the Device Access Console is now available.

The Device Access program lets individuals and qualified partners securely access and control Nest products with their apps and solutions.

At the heart of the Device Access program is the Smart Device Management API. Since we announced the program, Alarm.com, Control4, DISH, OhmConnect, NRG Energy, and Vivint Smart Home have successfully completed the Early Access Program (EAP) with Nest thermostat, camera, or doorbell traits. In the coming months, we expect additional devices to be supported and more smart home partners to launch their new integrations as well.

Enhanced privacy and security

The Device Access program is built on a foundation of privacy and security. Partners must submit qualified use cases and complete a security assessment before they can use the Smart Device Management API commercially. This process gives our users confidence that commercial partners offering integrated Nest solutions have data protections and safeguards in place that meet our privacy and security standards.

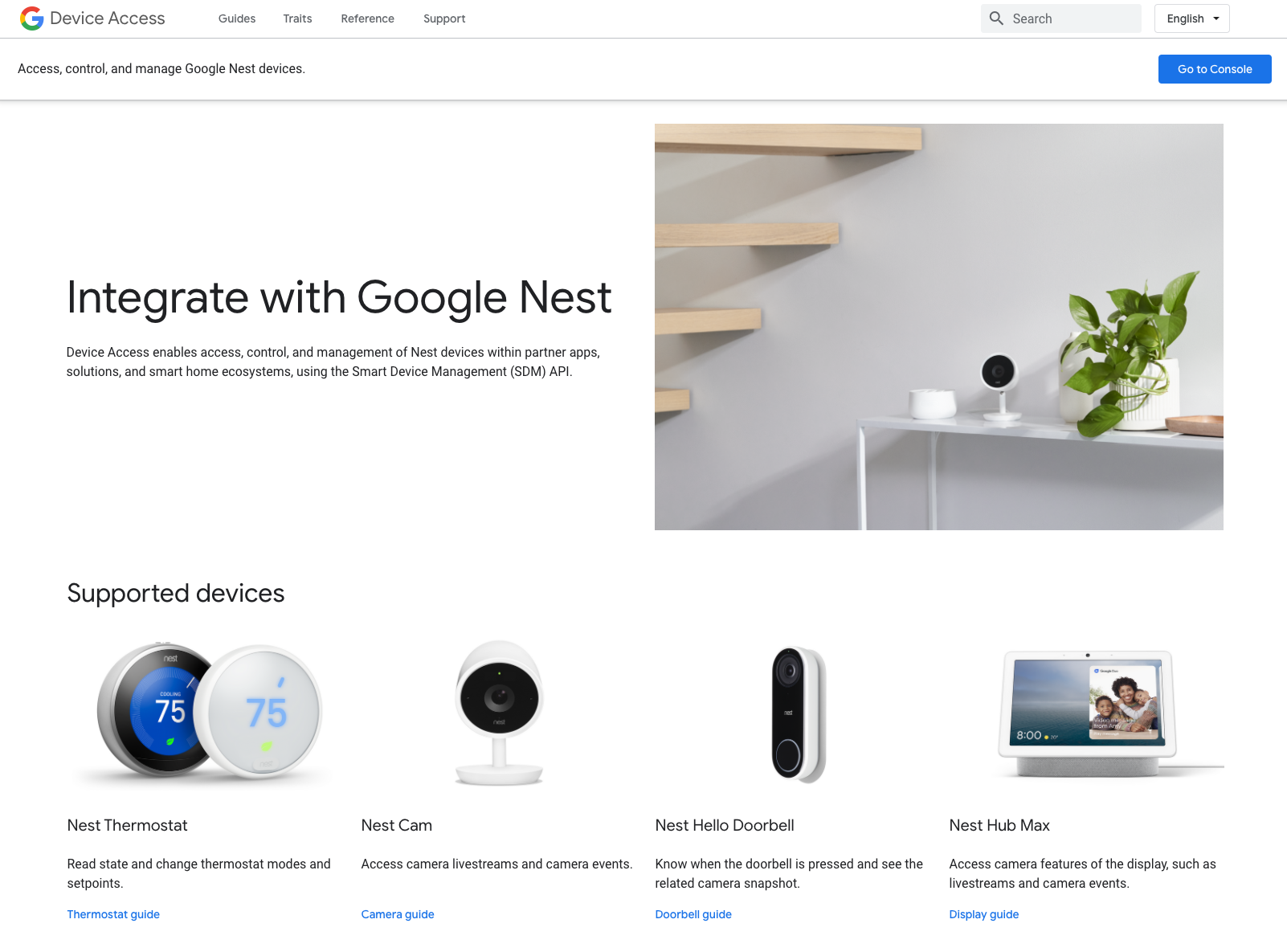

Nest device access and control

The Device Access program currently allows qualified partners to integrate directly with Nest devices: they can control thermostats, access and view camera feeds, and receive doorbell notifications with images. All qualified partner solutions and services require end-user consent before they can access, control, and manage Nest devices as part of their service offerings, whether through a partner client app or a service platform. Ultimately, this gives users more choice in how they control their home and the data they generate.

If you’re a developer or a Nest user interested in the Device Access program or access to the sandbox development environment,* you can find more information on our Device Access site.

- Device Access for Commercial Developers

The Device Access program allows trusted partners to offer access, management, and control of Nest devices within the partner’s app, solution, and ecosystem. Developers can test all API traits in the sandbox environment before moving forward with commercial integration. Learn more

- Device Access for Individuals

Individual smart home developers and enthusiasts can register to access the sandbox development environment and directly control their own Nest devices through private integrations and automations. Learn more
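To give a feel for what a private integration might look like, here is a minimal Python sketch that builds (but does not send) a request asking a thermostat to change its heat setpoint. It assumes the REST shape of the Smart Device Management API as we understand it: an `executeCommand` call on a device under your project, authorized with an OAuth 2.0 bearer token. The function name `build_set_heat_request` and the `project_id`, `device_id`, and `access_token` values are placeholders for illustration, so check the endpoint and command name against the API reference before relying on them.

```python
import json
import urllib.request

# Assumed base URL of the Smart Device Management API.
SDM_BASE = "https://smartdevicemanagement.googleapis.com/v1"


def build_set_heat_request(project_id, device_id, access_token, heat_celsius):
    """Build (without sending) a request that sets a thermostat's heat setpoint.

    All arguments are placeholders supplied by the caller; nothing here
    contacts the network.
    """
    url = f"{SDM_BASE}/enterprises/{project_id}/devices/{device_id}:executeCommand"
    body = {
        # Assumed command name for the thermostat setpoint trait.
        "command": "sdm.devices.commands.ThermostatTemperatureSetpoint.SetHeat",
        "params": {"heatCelsius": heat_celsius},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {access_token}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder identifiers; replace with values from your own project.
    req = build_set_heat_request("my-project", "my-device", "my-token", 21.5)
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

To actually send the command, you would pass the request to `urllib.request.urlopen` with a valid access token obtained after the device owner has granted consent.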

We’re doing the work to make Nest devices more secure and protect user privacy long into the future. This means expanding privacy and data security programs, and delivering flexibility for our customers to use thousands of products from partners to create a connected, helpful home.

* Registration requires accepting the Google API and Nest Device Access Sandbox Terms of Service and paying a one-time, non-refundable nominal fee per account.