Posted by Nari Yoon, Bitnoori Keum, Hee Jung, DevRel Community Manager / Soonson Kwon, DevRel Program Manager

Let’s explore the highlights and accomplishments of the vast Google Machine Learning communities over the first quarter of 2023. We are enthusiastic about, and grateful for, all the activities by the global network of ML communities. Here are the highlights!

ML Campaigns

ML Community Sprint

ML Community Sprint is a collaborative campaign that brings ML GDEs and Googlers together to produce relevant content for the broader ML community. Throughout February and March, the MediaPipe/TF Recommendation Sprint was carried out and 5 projects were completed.

ML Olympiad 2023

ML Olympiad is a series of associated Kaggle Community Competitions hosted by ML GDEs, TFUGs, and third-party ML communities, and supported by Google Developers. The second edition, ML Olympiad 2023, has wrapped up successfully with 17 competitions and 300+ participants addressing important issues of our time, such as diversity and the environment. Competition highlights include Breast Cancer Diagnosis, Water Quality Prediction, Detect ChatGpt answers, and Ensure healthy lives. Thank you all for participating in ML Olympiad 2023!

Also, “ML Paper Reading Clubs” (GalsenAI and TFUG Dhaka), “ML Math Clubs” (TFUG Hajipur and TFUG Dhaka) and “ML Study Jams” (TFUG Bauchi) were hosted by ML communities around the world.

Community Highlights

Keras

Various ways of serving Stable Diffusion by ML GDE Chansung Park (Korea) and ML GDE Sayak Paul (India) shares how to deploy Stable Diffusion with TF Serving, Hugging Face Endpoint, and FastAPI. Their other project, Fine-tuning Stable Diffusion using Keras, shows how to fine-tune the image encoder of Stable Diffusion on a custom dataset consisting of image-caption pairs.

Serving TensorFlow models with TFServing by ML GDE Dimitre Oliveira (Brazil) is a tutorial explaining how to create a simple MobileNet using the Keras API and how to serve it with TF Serving.

Fine-tuning the multilingual T5 model from Huggingface with Keras by ML GDE Radostin Cholakov (Bulgaria) shows a minimalistic approach for training text generation architectures from Hugging Face with TensorFlow and Keras as the backend.

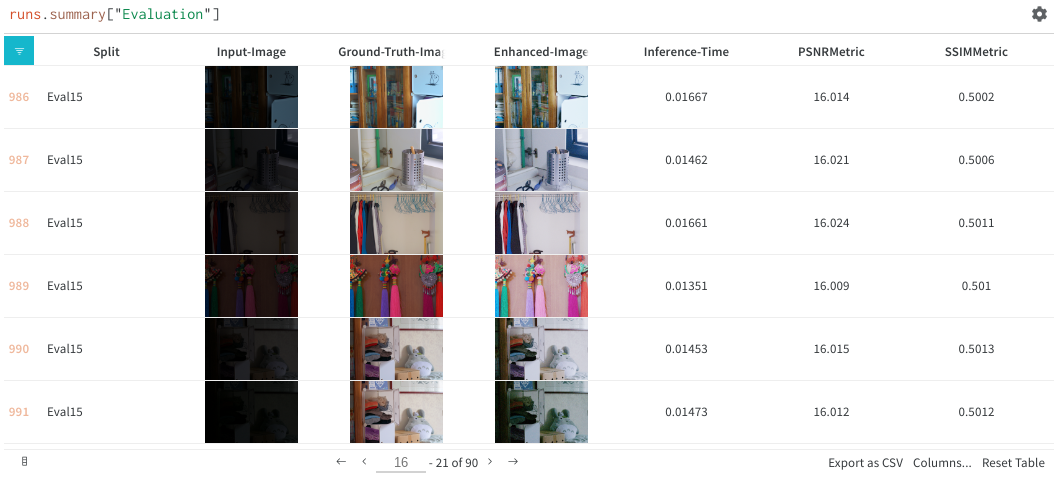

Lighting up Images in the Deep Learning Era by ML GDE Soumik Rakshit (India), ML GDE Saurav Maheshkar (UK), ML GDE Aritra Roy Gosthipaty (India), and Samarendra Dash explores deep learning techniques for low-light image enhancement. The article also talks about a library, Restorers, providing TensorFlow and Keras implementations of SoTA image and video restoration models for tasks such as low-light enhancement, denoising, deblurring, super-resolution, etc.

How to Use Cosine Decay Learning Rate Scheduler in Keras? by ML GDE Ayush Thakur (India) explains how to correctly use the cosine-decay learning rate scheduler with the Keras API.
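For readers unfamiliar with the schedule, here is a minimal sketch of what cosine decay computes, written in plain Python so it runs without TensorFlow. It is not taken from the article; it follows the formula commonly documented for Keras's `CosineDecay` schedule (without warmup), and the function name `cosine_decay` and the example values are assumptions chosen for illustration.

```python
import math


def cosine_decay(step, initial_lr, decay_steps, alpha=0.0):
    """Illustrative cosine-decay schedule (hypothetical helper, not a Keras API).

    The learning rate follows half a cosine wave from `initial_lr` at step 0
    down to `initial_lr * alpha` at `decay_steps`, and stays there afterwards.
    """
    # Clamp the step so the rate stops changing once decay_steps is reached.
    step = min(max(step, 0), decay_steps)
    # Cosine factor goes smoothly from 1.0 (step 0) to 0.0 (step == decay_steps).
    cosine = 0.5 * (1.0 + math.cos(math.pi * step / decay_steps))
    # alpha sets the floor as a fraction of the initial learning rate.
    return initial_lr * ((1.0 - alpha) * cosine + alpha)


if __name__ == "__main__":
    # Print the rate at a few points of a 1000-step schedule.
    for s in (0, 250, 500, 750, 1000):
        print(s, cosine_decay(s, initial_lr=0.1, decay_steps=1000))
```

In Keras itself, a schedule object of this kind is typically passed to the optimizer as its learning rate rather than called by hand.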

Implementation of DreamBooth using KerasCV and TensorFlow (Keras.io tutorial) by ML GDE Sayak Paul (India) and ML GDE Chansung Park (Korea) demonstrates the DreamBooth technique for fine-tuning Stable Diffusion in KerasCV and TensorFlow. Training code, inference notebooks, a Keras.io tutorial, and more are in the repository. Sayak also shared his story, [ML Story] DreamBoothing Your Way into Greatness, on the GDE blog.

Focal Modulation: A replacement for Self-Attention by ML GDE Aritra Roy Gosthipaty (India) shares a Keras implementation of the paper. Usha Rengaraju (India) shared a Keras implementation of the NeurIPS 2021 paper, Augmented Shortcuts for Vision Transformers.

Images classification with TensorFlow & Keras (video) by TFUG Abidjan explained how to define an ML model that can classify images according to the category using a CNN.

Hands-on Workshop on KerasNLP by GDG NYC, GDG Hoboken, and Stevens Institute of Technology shared how to use pre-trained Transformers (including BERT) to classify text, fine-tune it on custom data, and build a Transformer from scratch.

On-device ML

Stable diffusion example in an android application — Part 1 & Part 2 by ML GDE George Soloupis (Greece) demonstrates how to deploy a Stable Diffusion pipeline inside an Android app.

AI for Art and Design by ML GDE Margaret Maynard-Reid (United States) delivered a brief overview of how AI can be used to assist and inspire artists & designers in their creative space. She also shared a few use cases of on-device ML for creating artistic Android apps.

ML Engineering (MLOps)

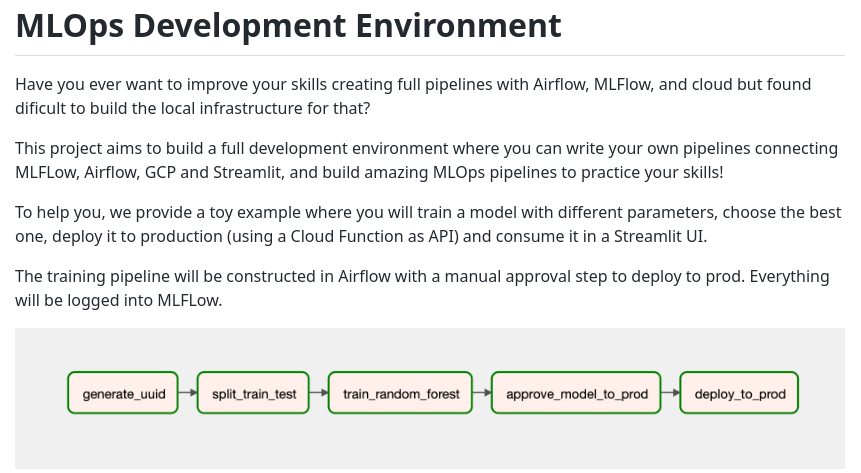

End-to-End Pipeline for Segmentation with TFX, Google Cloud, and Hugging Face by ML GDE Sayak Paul (India) and ML GDE Chansung Park (Korea) discussed the crucial details of building an end-to-end ML pipeline for semantic segmentation tasks with TFX and various Google Cloud services such as Dataflow, Vertex Pipelines, Vertex Training, and Vertex Endpoint. The pipeline uses HFPusher, a custom TFX component that is integrated with Hugging Face Hub.

Extend your TFX pipeline with TFX-Addons by ML GDE Hannes Hapke (United States) explains how you can use the TFX-Addons components or examples.

Textual Inversion Pipeline for Stable Diffusion by ML GDE Chansung Park (Korea) demonstrates how to manage multiple Stable Diffusion models fine-tuned on new concepts with Textual Inversion, along with their prototype applications.

Running a Stable Diffusion Cluster on GCP with tensorflow-serving (Part 1 | Part 2) by ML GDE Thushan Ganegedara (Australia) explains how to set up a GKE cluster, how to use Terraform to set up and manage infrastructure on GCP, and how to deploy a model on GKE using TF Serving.

Scalability of ML Applications by TFUG Bangalore focused on the challenges and solutions related to building and deploying ML applications at scale. Googler Joinal Ahmed gave a talk entitled Scaling Large Language Model training and deployments.

Discovering and Building Applications with Stable Diffusion by TFUG São Paulo was for people who are interested in Stable Diffusion. They shared how Stable Diffusion works and showed a complete version created using Google Colab and Vertex AI in production.

Responsible AI

In Fairness & Ethics In AI: From Journalism, Medicine and Translation, ML GDE Samuel Marks (United States) discussed responsible AI.

In The new age of AI: A Convo with Google Brain, ML GDE Vikram Tiwari (United States) discussed responsible AI, open-source vs. closed-source, and the future of LLMs.

Responsible IA Toolkit (video) by ML GDE Lesly Zerna (Bolivia) and Google DSC UNI was a meetup to discuss ethical and sustainable approaches to AI development. Lesly talked about the ethical side of building AI products and covered “Responsible AI from Google”, the PAIR guidebook, and other experiences in building AI.

Women in AI/ML at Google NYC by GDG NYC discussed hot topics, including LLMs and generative AI. Googler Priya Chakraborty gave a talk entitled Privacy Protections for ML Models.

ML Research

Efficient Task-Oriented Dialogue Systems with Response Selection as an Auxiliary Task by ML GDE Radostin Cholakov (Bulgaria) showcases how, in a task-oriented setting, the T5-small language model can perform on par with existing systems relying on T5-base or even bigger models.

Learning JAX in 2023: Part 1 / Part 2 / Livestream video by ML GDE Aritra Roy Gosthipaty (India) and ML GDE Ritwik Raha (India) covered the power tools of JAX, namely grad, jit, vmap, and pmap, and also discussed the nitty-gritty of randomness in JAX.

In Deep Learning Mentoring MILA Quebec, ML GDE David Cardozo (Canada) mentored M.Sc. and Ph.D. students interested in JAX and MLOps. JAX Streams: Parallelism with Flax | EP4 by David and ML GDE Cristian Garcia (Colombia) explored Flax’s new APIs to support parallelism.

March Machine Learning Meetup was hosted by TFUG Kolkata. Two sessions were delivered: 1) You don't know TensorFlow by ML GDE Sayak Paul (India) presented some under-appreciated and under-used features of TensorFlow. 2) A Guide to ML Workflows with JAX by ML GDE Aritra Roy Gosthipaty (India), ML GDE Soumik Rakshit (India), and ML GDE Ritwik Raha (India) showed how to use JAX functional transformations in ML workflows.

A paper review of PaLM-E: An Embodied Multimodal Language Model by ML GDE Grigory Sapunov (UK) explained the details of the model. He also shared his slide deck about NLP in 2022.

An annotated paper of On the importance of noise scheduling in Diffusion Models by ML GDE Aakash Nain (India) outlined the effects of noise schedule on the performance of diffusion models and strategies to get a better schedule for optimal performance.

TensorFlow

Three projects were awarded as TF Community Spotlight winners: 1) Semantic Segmentation model within ML pipeline by ML GDE Chansung Park (Korea), ML GDE Sayak Paul (India), and ML GDE Merve Noyan (France), 2) GatedTabTransformer in TensorFlow + TPU / in Flax by Usha Rengaraju, and 3) Real-time Object Detection in the browser with YOLOv7 and TF.JS by ML GDE Hugo Zanini (Brazil).

Building ranking models powered by multi-task learning with Merlin and TensorFlow by ML GDE Gabriel Moreira (Brazil) describes how to build TensorFlow models with Merlin for recommender systems using multi-task learning.

Building ML Powered Web Applications using TensorFlow Hub & Gradio (slide) by ML GDE Bhavesh Bhatt (India) demonstrated how to use TF Hub & Gradio to create a fully functional ML-powered web application. The presentation was held as part of an event called AI Evolution with TensorFlow, covering the fundamentals of ML & TF, hosted by TFUG Nashik.

create-tf-app (repository) by ML GDE Radostin Cholakov (Bulgaria) shows how to set up and maintain an ML project in TensorFlow with a single script.

Cloud

Creating scalable ML solutions to support big techs evolution (slide) by ML GDE Mikaeri Ohana (Brazil) shared how Google can help big tech companies generate impact through ML with scalable solutions.

Search of Brazilian Laws using Dialogflow CX and Matching Engine by ML GDE Rubens Zimbres (Brazil) shows how to build a chatbot with Dialogflow CX and query a database of Brazilian laws by calling an endpoint in Cloud Run.

Stable Diffusion Finetuning by ML GDE Pedro Gengo (Brazil) and ML GDE Piero Esposito (Brazil) is a fine-tuned Stable Diffusion 1.5 model that produces more aesthetic images. They used Vertex AI with multiple GPUs to fine-tune it. It reached the top 3 on Hugging Face, and more than 150K people downloaded and tested it.

Posted by Brian Hall, Vice President, Product and Industry Marketing, Google Cloud & Max Saltonstall, Developer Relations Engineer


Posted by Alexandra Dumas, Head of VC & Startup Partnerships, West Coast, Google


Posted by Ashley Francisco, Head of Startup Developer Ecosystem, North America, & Darren Mowry, Managing Director, Corporate Sales


Introducing new generative AI capabilities in Google Cloud and Google Workspace, plus PaLM API and MakerSuite for developers.
