Or how to improve startup time by up to 40%

Posted by Kateryna Semenova, DevRel Engineer; Rahul Ravikumar, Software Engineer; Chris Craik, Software Engineer

Why is startup time important?

A lot of apps find correlation between app performance and user engagement. People expect apps to be responsive and fast to load. Startup time is one of the major metrics for app performance and quality.

Some of our partners have already invested a lot of time and resources for app startup optimizations. For example, check out the Facebook story.

In this blog post we’ll discuss Baseline Profiles and how they improve app and library performance, including startup time by up to 40%. While this blogpost focuses on startup, baseline profiles also significantly improve jank as well.

History

Android 9 (API level 28) introduced ART optimizing profiles in Play Cloud to improve app startup time. On average, we’ve seen that apps' cold starts are at least 15% faster across a variety of devices when Cloud Profiles are available.

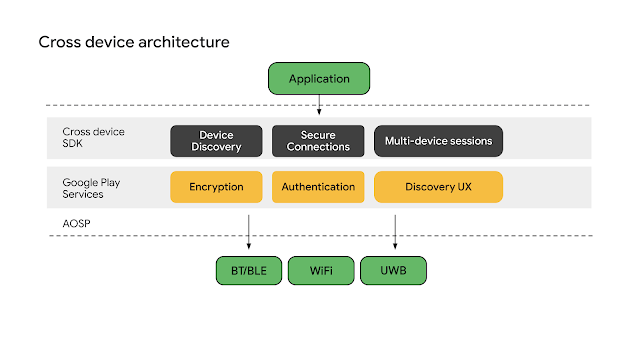

How do Profiles work?

When the app is first launched after install or update, its code runs in an interpreted mode until it is JITted. In an APK, Java and Kotlin code is compiled as dex bytecode, but not fully compiled to machine code (since Android 6), due to the cost of storing and loading fully compiled apps. Classes and methods that are frequently used in the app, as well as those used for app startup, are recorded into a profile file. Once the device enters idle mode, ART compiles the apps based on these profiles. This speeds up subsequent app launches.

Starting with Android 9 (API level 28), Google Play also provides Cloud Profiles. When an app runs on a device, the profiles generated by ART are uploaded by the Play Store app and aggregated in the cloud. Once there are enough profiles uploaded for an application, the Play app uses the aggregated profile for subsequent installs.

Problem

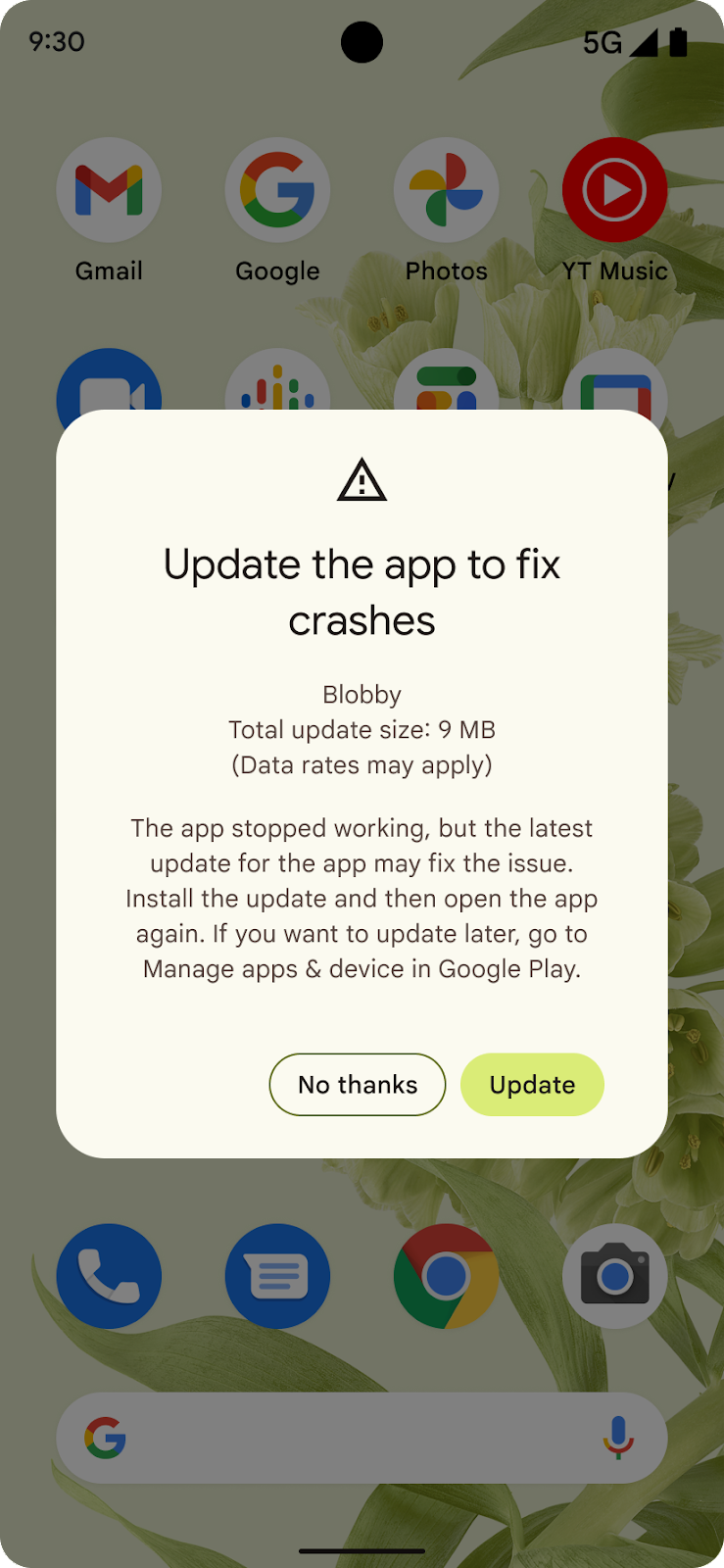

While Cloud Profiles are great when they are available, they aren't always ready to be used when an app is installed. Collecting and aggregating the profiles usually takes several days, which is a problem when many apps update on a weekly basis. Many users will install an update before the Cloud Profile is available. The Google Android team started looking for other ways to improve the latency of profiles.

Solution

Baseline Profiles are a new mechanism to provide profiles which can be used on Android 7 (API level 24) and higher. A baseline profile is an ART profile generated by the Android Gradle plugin using a human readable profile format that can be provided by apps and libraries. An example might look like this:

HSPLandroidx/compose/runtime/ComposerImpl;->updateValue(Ljava/lang/Object;)V

HSPLandroidx/compose/runtime/ComposerImpl;->updatedNodeCount(I)I

HLandroidx/compose/runtime/ComposerImpl;->validateNodeExpected()V

PLandroidx/compose/runtime/CompositionImpl;->applyChanges()V

HLandroidx/compose/runtime/ComposerKt;->findLocation(Ljava/util/List;I)I

Example for Compose library.

The binary profile is stored in a specific location in the APK assets directory

(assets/dexopt/baseline.prof).

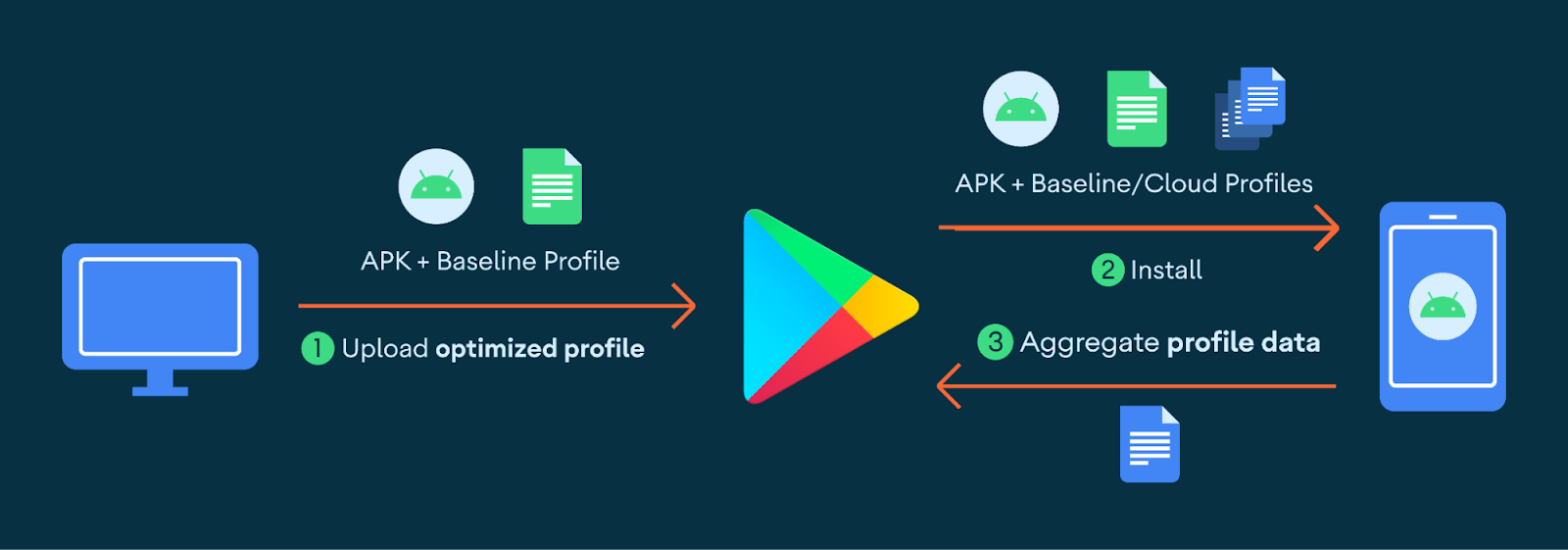

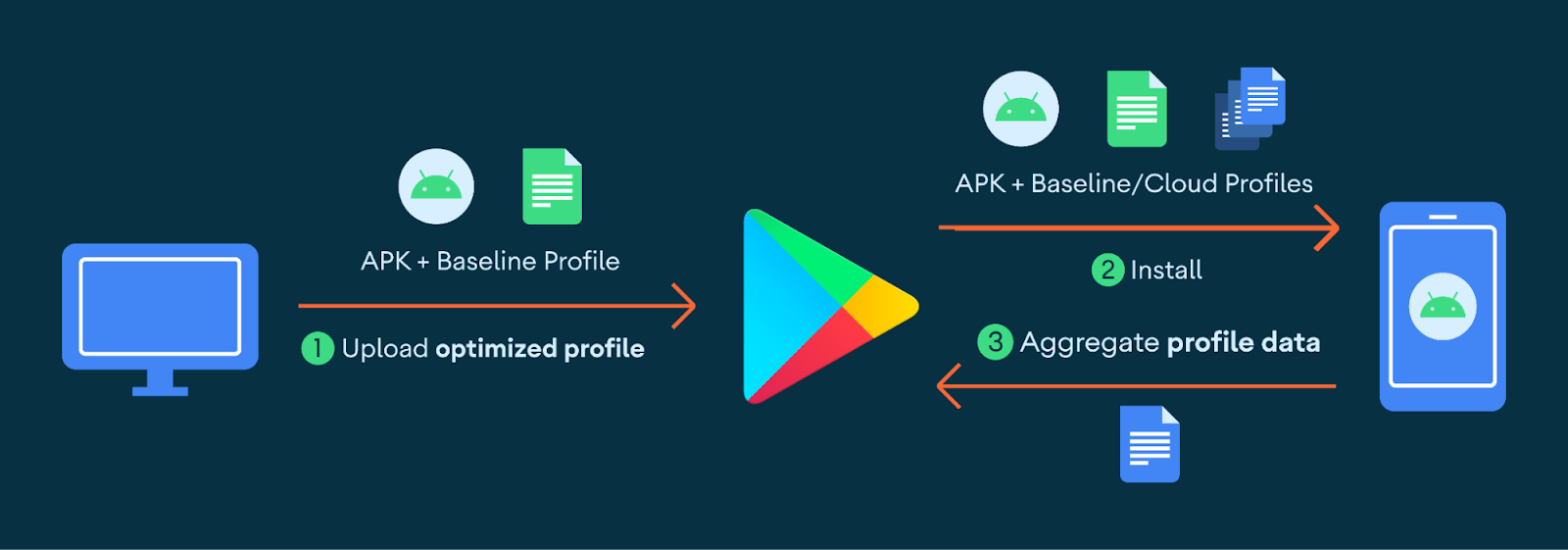

Baseline Profiles are created during build time, shipped as part of the APK to Play, and then sent from Play to users when an app is downloaded. They fill the gap in the ART Cloud Profile pipeline, when Cloud Profiles are not yet available, and automatically merge with Cloud Profiles when they are.

This diagram displays the baseline profile workflow from creation through end-user delivery.

One of the biggest benefits of Baseline Profiles is that they can be developed and evaluated locally so developers can see realistic end-user performance improvements. They are also supported on a lower version of Android(7 and higher) than Cloud Profiles, which are only available starting in Android 9.

Impact

App devs

In early 2021, Google Maps switched from a two-week to a one-week release cycle. More frequent updates meant more frequently discarding local pre-compilation, and more users experiencing slow launches without Play Cloud Profiles. By using Baseline Profiles, Google Maps improved their average startup time by 30% and saw a corresponding increase in searches by 2.4%, an immense gain for such an established app.

Library devs

Code in a library is just like that of an app - it's not fully compiled by default, which can be a problem if it does significant work on the critical path of startup.

Jetpack Compose is a UI library that is not a part of the Android system image and thus not fully compiled when installed, unlike much of the Android View toolkit code. This was causing performance problems, especially for the first few cold launches of the app.

To solve this problem, Compose uses profile installer. It ships baseline profile rules which reduce startup time and jank in Compose apps.

Google PlayStore’s search results page has been re-written with Compose. After incorporating the Baseline Profile rules from Compose, time to render the initial search results page with images improved by ~40%.

The Android team has also added Baseline Profiles to relevant AndroidX libraries. This benefits all Android apps using these libraries. Constraint Layout has found shipping profile rules reduces animation frame times by more than one millisecond.

How to use Baseline Profiles

Create a custom Baseline Profile

All apps and library developers can benefit from including Baseline Profiles. Ideally, developers create profiles for their most critical user journeys to ensure that those journeys have consistently fast performance regardless of whether cloud profiles are available. Check out the detailed guide on how to set up Baseline Profiles for both app and library developers.

Update dependencies

If you are not ready to generate Baseline Profiles for your app right now, you can still benefit from them by updating your dependencies. If you build with Android Gradle Plugin 7.1.0-alpha05 or newer, you'll get Baseline Profiles included in your APK that are already provided by libraries (such as Jetpack). Google Play compiles your app with these profiles at install time. You can supplement these profiles as part of building your application.

Measure Improvements

Don’t forget to measure improvements. Follow the steps on how to measure startup with the generated profile locally.

Provide feedback

Please share your feedback and let us know your experience!

Posted by

Posted by  Posted by Prabhat Sharma – Director, Trust and Safety, Play, Android, and Chrome

Posted by Prabhat Sharma – Director, Trust and Safety, Play, Android, and Chrome

Posted by the Android team

Posted by the Android team

Posted by Kurt Williams, Product Manager, Google Play

Posted by Kurt Williams, Product Manager, Google Play